This post is co-written with Aleksej Klebanskij, Maksim Ponasov and Maksim Kotun from Flo Health.

Flo is the largest app in the Health and Fitness category worldwide, with 70 million monthly active users. With more than 120 medical experts, Flo supports women and people who menstruate during their entire reproductive lives and provides curated cycle and ovulation tracking, personalized health insights, expert tips, and a fully closed community to share questions and concerns. Flo prioritizes safety and keeps a sharp focus on being the most trusted digital source for women’s health information.

In this post, we explain best practices Flo implemented to scale to more than 70 million monthly active users while achieving 60% cost efficiency with Amazon DynamoDB.

Background

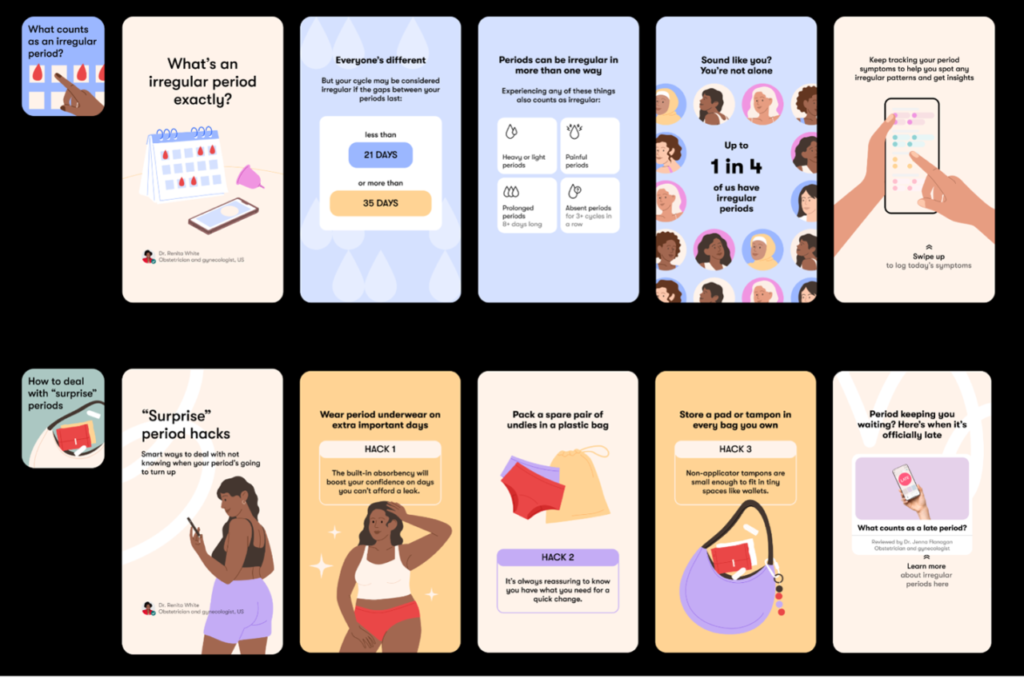

One of the prominent features of the Flo App is Stories, a personalized content stream designed to engage users with timely health insights and tips tailored to their unique needs. This feature dynamically adapts to each user’s health journey, displaying relevant content in real time based on individual preferences and interactions within the app. By leveraging DynamoDB, Flo ensures that Stories remain responsive and up-to-date, seamlessly delivering information to millions of users and enriching their experience with a curated, interactive approach to health education.

From the beginning of developing the Stories feature, Flo Health selected Amazon DynamoDB as the foundation for data storage, primarily because it aligned with the team’s goals for scalability, speed, and flexibility. When Stories launched, the main screen attracted about 20 million monthly active users, a figure that has since grown beyond 70 million, underscoring the need for a database capable of scaling to support substantial read traffic. Stories quickly became a core component of the user journey, adapting each user’s experience based on their interactions. This dynamic, read-heavy workload required rapid, real-time updates, making low-latency reads essential for seamless engagement. Because most interactions involved a single record, the transactional model was significantly simpler, enabling efficient data retrieval without complex operations. As the default solution for non-relational use cases at Flo, DynamoDB, with its ability to handle high-traffic workloads and its simple, scalable key-value model, was the ideal fit for Flo’s data and traffic demands.

The following image is an example of how Stories appear within the Flo App:

After implementing DynamoDB for the Stories feature, we closely monitored our usage and associated costs, understanding that these were largely driven by our provisioned throughput capacity and consumed storage, along with the use of DynamoDB’s backup feature. By December 2022, our monthly growth rate reached 17.13%, leading us to explore opportunities for more efficient resource utilization. Increased application traffic naturally led to more write operations, resulting in more generated data, higher storage requirements, and greater backup needs. As Stories adoption grew, we proactively sought optimization strategies to manage this growth and keep costs efficient as our user base continued to expand. We explored options to optimize our DynamoDB costs using the AWS Well-Architected Framework.

Well-Architected Framework

The AWS Well-Architected Framework is a set of best practices and guidelines developed by AWS to help you build secure, high-performing, resilient, and efficient infrastructure for your applications. It provides a consistent approach for evaluating architectures and implementing designs that align with AWS best practices. The framework is designed to support a wide range of applications, from small projects to enterprise-level solutions.

Key pillars of the AWS Well-Architected Framework:

- Operational Excellence:
  - Focuses on operational processes and procedures.
  - Emphasizes the ability to monitor, automate, and continuously improve operational procedures to deliver business value.
- Security:
  - Prioritizes the implementation of strong security measures.
  - Addresses data protection, identity and access management, detection and response, and the overall security posture of the environment.
- Reliability:
  - Ensures the system can recover from failures and meet customer demands.
  - Includes strategies for fault tolerance, disaster recovery, and the effective use of resources to maintain consistent and predictable performance.
- Performance Efficiency:
  - Focuses on using resources efficiently to meet system requirements.
  - Addresses aspects such as selecting the right types and sizes of resources, optimizing workloads, and adapting to changing requirements.
- Cost Optimization:
  - Aims to avoid unnecessary costs and optimize resource usage.
  - Provides guidance on optimizing spending, selecting the right pricing models, and monitoring resource consumption.
- Sustainability:
  - Focuses on minimizing the environmental impact of running your workloads in the cloud.
  - Provides guidance on how to optimize your applications in a sustainable way.

Flo Health undertook a comprehensive Well-Architected Review of its DynamoDB usage, with a specific focus on the Cost Optimization pillar. The review covered the following areas:

- Assessment of DynamoDB usage:
  - The first step was an assessment of Flo Health’s DynamoDB implementation, including a review of data models, table designs, and overall usage patterns to understand how DynamoDB was being used within the application.
- Capacity mode analysis:
  - Flo assessed read and write capacity units to understand the specific requirements of different tables. The goal was to ensure that provisioned resources matched the actual workload, avoiding over-provisioning and unnecessary costs.
- Utilization of reserved capacity:
  - We evaluated reserved capacity for DynamoDB, which offers potential cost savings through discounted pricing in exchange for committing to capacity over an extended period.
- Implementation of auto scaling:
  - Auto scaling configurations were reviewed and, where necessary, adjusted so that DynamoDB capacity could scale automatically in response to changes in demand. This dynamic scaling helps maintain optimal performance while minimizing costs during periods of lower activity.
- Review of indexing strategies:
  - DynamoDB indexes were scrutinized to ensure they were needed and well optimized. Unnecessary or inefficient indexes were identified and adjusted to reduce storage costs and improve query performance.
- Data archiving and TTL management:
  - Data archiving and Time-to-Live (TTL) configurations were reviewed to identify opportunities to archive or automatically expire data that was no longer needed. This helped reduce storage costs and optimize DynamoDB capacity.
- Continuous monitoring and improvement plan:
  - We established ongoing monitoring and created a plan for continuous improvement, including regular reviews of DynamoDB usage and cost reports, and configuration adjustments as the application evolved.

The Well-Architected Review was pivotal in facilitating decision-making. For example, using Time-to-Live (TTL) and reserved capacity reduced our costs by 20%.

Flo’s data optimizations

The following are the data optimizations that Flo implemented:

Datatype size optimizations

To optimize throughput costs and enhance performance, we prioritized reducing the size of items stored in DynamoDB, because DynamoDB charges for writes in 1 KB increments and for reads in 4 KB increments.
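To make the rounding concrete, here is a minimal Python sketch of the per-request capacity math for standard (non-transactional) reads and writes in provisioned mode, as documented for DynamoDB: one write capacity unit per 1 KB of item size and one read capacity unit per 4 KB for a strongly consistent read, with eventually consistent reads costing half. Item size counts attribute names as well as values. The function names are illustrative, not part of any AWS SDK.

```python
import math

KB = 1024  # DynamoDB capacity math uses 1 KB = 1,024 bytes


def write_capacity_units(item_size_bytes: int) -> int:
    """WCUs for one standard write: 1 WCU per 1 KB of item size, rounded up."""
    return max(1, math.ceil(item_size_bytes / KB))


def read_capacity_units(item_size_bytes: int, strongly_consistent: bool = True) -> float:
    """RCUs for one standard read: 1 RCU per 4 KB (rounded up); eventually consistent reads cost half."""
    units = max(1, math.ceil(item_size_bytes / (4 * KB)))
    return units if strongly_consistent else units / 2


print(write_capacity_units(3584), read_capacity_units(3584))  # prints: 4 1
print(write_capacity_units(2150), write_capacity_units(900))  # prints: 3 1
```

Because writes round up at a quarter of the granularity of reads, shaving bytes off an item pays off most on the write path: bringing an item from about 2.1 KB to under 1 KB cuts each write from 3 WCUs to 1.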

Using a numeric data type instead of a string timestamp

Instead of storing string timestamps in the ISO_ZONED_DATE_TIME format, which extends the ISO-8601 format (for example, “2022-11-05T04:10:05.244931+08:00[Asia/Manila]”, which is 45 bytes), we switched to Unix epoch timestamps in milliseconds stored as numbers (for example, 1667592605244, which takes only about 8 bytes). This change significantly reduced the size of this attribute across millions of items, leading to substantial storage savings.
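The conversion and the size comparison can be sketched in Python as follows. This is an illustration, not Flo’s code: it assumes only the instant matters (the bracketed region ID is dropped, so a zone needed for display would have to be kept elsewhere), and `approx_number_size` applies DynamoDB’s documented approximation for Number attributes of roughly one byte per two significant digits plus one byte.

```python
import math
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def iso_zoned_to_epoch_millis(value: str) -> int:
    """Convert an ISO_ZONED_DATE_TIME string to Unix epoch milliseconds."""
    # Drop the bracketed region ID such as "[Asia/Manila]"; the numeric
    # offset (+08:00) already identifies the instant unambiguously.
    iso_part = value.split("[", 1)[0]
    dt = datetime.fromisoformat(iso_part)
    # Integer timedelta division avoids float rounding; sub-millisecond digits are floored.
    return (dt - EPOCH) // timedelta(milliseconds=1)


def approx_number_size(n: int) -> int:
    """Approximate DynamoDB Number size: 1 byte per two significant digits, plus 1 byte."""
    significant = str(abs(n)).rstrip("0") or "0"  # trailing zeros are trimmed
    return math.ceil(len(significant) / 2) + 1


zoned = "2022-11-05T04:10:05.244931+08:00[Asia/Manila]"
millis = iso_zoned_to_epoch_millis(zoned)
print(millis, len(zoned.encode("utf-8")), approx_number_size(millis))  # prints: 1667592605244 45 8
```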

String Story ID to numeric

The Story ID was the most frequently stored piece of data in the table, appearing in both attribute names and values. The average string Story ID was 28 characters long, equating to 28 bytes.

With over 15,000 unique Story IDs, Flo cataloged them and now stores each ID’s catalog index instead of the full string ID. Depending on the number of significant digits, an index takes between 1 and 3 bytes to store. This method is over nine times more compact, resulting in significant storage savings.
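A minimal sketch of such a catalog follows. `StoryCatalog` and the example IDs are hypothetical; the source does not describe how Flo stores its catalog. One design constraint is worth calling out: because stored items refer to positions, the catalog has to be append-only and shared by every writer, since reassigning an index would silently change the meaning of existing data.

```python
from typing import Dict, List


class StoryCatalog:
    """Hypothetical append-only catalog mapping string Story IDs to small integer indexes."""

    def __init__(self) -> None:
        self._ids: List[str] = []          # index -> Story ID
        self._index: Dict[str, int] = {}   # Story ID -> index

    def encode(self, story_id: str) -> int:
        """Return the index for story_id, appending it to the catalog if it is new."""
        if story_id not in self._index:
            self._index[story_id] = len(self._ids)
            self._ids.append(story_id)
        return self._index[story_id]

    def decode(self, index: int) -> str:
        """Map a stored index back to the original Story ID."""
        return self._ids[index]


catalog = StoryCatalog()
first = catalog.encode("cycle-phase-intro-article-01")    # hypothetical Story IDs
second = catalog.encode("ovulation-tips-video-series-7")
print(first, second, catalog.decode(second))  # prints: 0 1 ovulation-tips-video-series-7
```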

Expiring attributes within an Item

Flo used to store the stories a user had seen as a plain string set. That didn’t allow us to track the lifecycle of this data, so it simply accumulated within the record. We refactored it to use a map instead of a set, where the map keys are Story IDs and the values are the number of months since the beginning of 2024 (2024-01-01T00:00Z).

The business requirement for the Time-to-Live (TTL) of a story in the permanent history is six months. We used to have up to 2,500 Story IDs in a set, but after introducing a TTL, we were able to reduce this number to 500 for active users: five times fewer entries, each stored in a more compact format.

We implemented logic that checks the stored month values of a user’s stories whenever the user accesses the content. If a value is older than the TTL, we issue an update that removes the story from the map. Because the check runs only when content is accessed, cleanup effort is spent only on records that are already being read.
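The map-plus-lazy-expiry pattern described above might look like this in Python. The helper names are hypothetical, Story IDs are shown as numeric strings because DynamoDB map keys are strings, and treating an entry as expired once it is six or more whole months old is an assumption; with month granularity, the effective lifetime can vary by up to a month. `prune_on_read` returns whether anything was removed so that the caller issues a write only when the map actually shrank.

```python
from datetime import datetime, timezone
from typing import Dict

BASE_YEAR = 2024  # month offsets count from 2024-01-01T00:00Z
TTL_MONTHS = 6    # six-month history requirement


def month_offset(moment: datetime) -> int:
    """Whole calendar months between 2024-01-01T00:00Z and `moment` (evaluated in UTC)."""
    moment = moment.astimezone(timezone.utc)
    return (moment.year - BASE_YEAR) * 12 + (moment.month - 1)


def mark_seen(seen: Dict[str, int], story_id: str, now: datetime) -> None:
    """Record (or refresh) the month in which the user saw a story."""
    seen[story_id] = month_offset(now)


def prune_on_read(seen: Dict[str, int], now: datetime, ttl_months: int = TTL_MONTHS) -> bool:
    """Drop entries at least `ttl_months` old; return True if the record needs a write."""
    current = month_offset(now)
    stale = [sid for sid, month in seen.items() if current - month >= ttl_months]
    for sid in stale:
        del seen[sid]
    return bool(stale)


seen: Dict[str, int] = {}
mark_seen(seen, "101", datetime(2024, 1, 15, tzinfo=timezone.utc))  # offset 0
mark_seen(seen, "202", datetime(2024, 5, 3, tzinfo=timezone.utc))   # offset 4
needs_write = prune_on_read(seen, datetime(2024, 7, 1, tzinfo=timezone.utc))  # offset 6
print(seen, needs_write)  # prints: {'202': 4} True
```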

Expire items with DynamoDB TTL

While removing some unused features, we discovered that only 5% of the stored data was actually being used. Based on this, we decided to enable DynamoDB TTL at the table level, which gave us a clean, cost-effective way to delete items that are no longer relevant and to keep only the data of active users. Given the amount of accumulated data, the most cost-effective path was to migrate the application to a new table with TTL enabled and to retire the old table once the TTL period had elapsed.

During this migration, we removed unused record blocks and switched to numeric Story IDs in attribute keys, which reduced record size by an average of 2.5 times.
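DynamoDB TTL works by naming a Number attribute that holds an expiry time in Unix epoch seconds; items whose expiry has passed are deleted in the background, not at the exact moment. The sketch below shows one way to stamp that attribute. The attribute name `expires_at`, the 180-day window, and the table name `stories-v2` are hypothetical, since the source does not state them. Refreshing the expiry on every write is an assumed design that matches the goal of keeping only active users’ data: items that stop being written eventually age out. The item is a plain Python dict of the kind accepted by boto3’s higher-level `Table` resource, and `ttl_request` builds the arguments for the boto3 DynamoDB client’s `update_time_to_live` call without making it, so the sketch runs without AWS credentials.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict

TTL_ATTRIBUTE = "expires_at"     # hypothetical attribute name
RETENTION = timedelta(days=180)  # hypothetical retention window


def with_expiry(item: Dict[str, Any], now: datetime) -> Dict[str, Any]:
    """Return a copy of `item` with its TTL attribute set to now + RETENTION."""
    # DynamoDB TTL reads a Number attribute holding a Unix epoch time in seconds.
    expires_at = int((now + RETENTION).timestamp())
    return {**item, TTL_ATTRIBUTE: expires_at}


def ttl_request(table_name: str) -> Dict[str, Any]:
    """Keyword arguments for the boto3 DynamoDB client's update_time_to_live call."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {"Enabled": True, "AttributeName": TTL_ATTRIBUTE},
    }


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
item = with_expiry({"user_id": "user#1", "seen": {"202": 4}}, now)
print(item[TTL_ATTRIBUTE])  # prints: 1719619200
# With boto3 installed (hypothetical table name):
# boto3.client("dynamodb").update_time_to_live(**ttl_request("stories-v2"))
```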

Access optimizations

To enhance system efficiency and reduce costs, we implemented two key access optimizations: dirty checking and group update requests.

Dirty checking

We introduced “dirty checking,” a mechanism that helps ensure we only perform write operations when necessary. This technique compares the current state of a record with its intended new state before making any updates: it issues a read request to fetch the current record from the DynamoDB table and then compares it with the intended update. If there are no changes (that is, the record is not “dirty”), the system skips the redundant write. Although the read request incurs a cost, it is still cheaper than performing unnecessary writes. This process not only reduces overall write throughput consumption but also mitigates the impact of glitching clients that might send multiple simultaneous requests to update the same record. This change allowed us to reduce write throughput consumption by 50%.
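Here is a minimal sketch of the read-compare-write flow. `CountingTable` is a hypothetical in-memory stand-in for a DynamoDB table, used only to count requests; against a real table, `get` and `put` would correspond to a `GetItem` and a `PutItem` or `UpdateItem` call. The usage shows the glitching-client case: three identical requests produce three reads but only one write.

```python
import copy
from typing import Any, Callable, Dict, Optional

Item = Dict[str, Any]


class CountingTable:
    """Hypothetical in-memory stand-in for a DynamoDB table that counts requests."""

    def __init__(self) -> None:
        self.items: Dict[str, Item] = {}
        self.reads = 0
        self.writes = 0

    def get(self, key: str) -> Optional[Item]:
        self.reads += 1
        item = self.items.get(key)
        return copy.deepcopy(item) if item is not None else None

    def put(self, key: str, item: Item) -> None:
        self.writes += 1
        self.items[key] = copy.deepcopy(item)


def update_if_dirty(table: CountingTable, key: str, change: Callable[[Item], Item]) -> bool:
    """Read the record, apply `change` to a copy, and write only if the result differs."""
    current = table.get(key) or {}
    updated = change(copy.deepcopy(current))
    if updated == current:
        return False  # record is not dirty: skip the write
    table.put(key, updated)
    return True


def mark_liked(item: Item) -> Item:
    """Hypothetical change: record that the user liked story 101."""
    return {**item, "liked": "101"}


table = CountingTable()
# A glitching client sends the same update three times.
results = [update_if_dirty(table, "user#1", mark_liked) for _ in range(3)]
print(results, table.reads, table.writes)  # prints: [True, False, False] 3 1
```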

Group update requests

One reason for updating records is to apply feedback events received from a client. Previously, we applied one update for each individual event, which meant that multiple updates could be made to the same record in a short period of time. This approach was not efficient, especially when dealing with a high volume of events.

To optimize this process, we changed our approach. Instead of updating the record separately for each event, we now collect up to 12 events and apply them in a single update. Batching events into a single update operation significantly reduces the number of write operations we need to perform.

Moreover, because DynamoDB bills each write in 1 KB increments of the full item size (the larger of the item’s size before and after the update), not just the size of the changed attributes, grouping multiple updates into one reduces both the number of write requests and the capacity they consume: the cost of writing the whole record is paid once per batch instead of once per event. This approach has led to a more efficient use of our resources and a reduction in our overall costs.
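The sketch below groups already-collected feedback events by record and splits each group into batches of at most 12, then compares write costs for a hypothetical 20 KB record. How Flo decides when to flush a partially filled batch (for example, on a timer) is not described in the source, so it is omitted; the cost comparison also assumes the item size stays roughly constant across updates.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

MAX_EVENTS_PER_UPDATE = 12  # batch size described above


def group_updates(
    events: Iterable[Tuple[str, dict]], max_batch: int = MAX_EVENTS_PER_UPDATE
) -> List[Tuple[str, List[dict]]]:
    """Group (record_key, event) pairs per record and split each group into batches of <= max_batch."""
    by_record: Dict[str, List[dict]] = defaultdict(list)
    for key, event in events:
        by_record[key].append(event)
    batches = []
    for key, record_events in by_record.items():
        for start in range(0, len(record_events), max_batch):
            batches.append((key, record_events[start:start + max_batch]))
    return batches


def write_units(item_size_bytes: int) -> int:
    """WCUs for one write, billed on the full item size in 1 KB increments."""
    return max(1, math.ceil(item_size_bytes / 1024))


events = [("user#1", {"story": i}) for i in range(30)] + [("user#2", {"story": i}) for i in range(5)]
batches = group_updates(events)
print(len(events), len(batches))  # prints: 35 4

# Hypothetical 20 KB record receiving 30 events: one write per event vs. one per batch.
per_event = 30 * write_units(20 * 1024)
per_batch = sum(1 for key, _ in batches if key == "user#1") * write_units(20 * 1024)
print(per_event, per_batch)  # prints: 600 60
```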

Results

The optimizations Flo implemented have led to remarkable improvements in system performance and cost efficiency, transforming how the Stories feature operates at scale. Here are the key outcomes:

Significant reduction in Write Capacity Units (WCU) usage

- Threefold decrease in average WCU usage: This optimization enabled Flo to reduce the provisioned WCU limit from 15,000 to 5,000, resulting in substantial cost savings without compromising performance.

Compaction of items

- Five-fold reduction in item size: By switching to more efficient data types and introducing Time-to-Live (TTL) mechanisms, Flo dramatically reduced the size of stored records, optimizing storage and throughput costs.

Here is a sample of how the item looked before optimizations:

And here is the same item after optimizations:

Decreased frequency of updates for large items

- Updates to “big” items, defined as those consuming over 50 WCU per operation, have been reduced by 90%. This reduction minimizes high-cost write operations, ensuring consistent performance and cost savings.

- Flo tracks a metric for how often we update “big” records (an update that consumes more than 50 WCU). As shown in the following graph, this value is now one-tenth of what it was before the optimizations.
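The source does not say how this metric is collected. One possible approach, sketched below, is to request `ReturnConsumedCapacity="TOTAL"` on each write and inspect the `ConsumedCapacity.CapacityUnits` value in the response. `BigUpdateCounter` is hypothetical, and publishing the counts to a monitoring system is left out.

```python
from dataclasses import dataclass

BIG_UPDATE_WCU = 50  # threshold from the text: a "big" update consumes more than 50 WCU


@dataclass
class BigUpdateCounter:
    total: int = 0
    big: int = 0

    def record(self, response: dict) -> None:
        """Count one write response requested with ReturnConsumedCapacity="TOTAL"."""
        units = response.get("ConsumedCapacity", {}).get("CapacityUnits", 0.0)
        self.total += 1
        if units > BIG_UPDATE_WCU:
            self.big += 1

    @property
    def big_ratio(self) -> float:
        """Fraction of recorded writes that were "big"."""
        return self.big / self.total if self.total else 0.0


counter = BigUpdateCounter()
for wcu in (3.0, 52.0, 1.0, 49.0):  # hypothetical consumed capacity values
    counter.record({"ConsumedCapacity": {"TableName": "stories", "CapacityUnits": wcu}})
print(counter.big, counter.total, counter.big_ratio)  # prints: 1 4 0.25
```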

Elimination of throttling incidents

- Optimizations like dirty checking, batching updates, and TTL implementation have streamlined access patterns and storage efficiency, completely eliminating throttling issues.

Conclusion

By applying the AWS Well-Architected Framework and implementing data optimizations, Flo has successfully optimized its DynamoDB implementation, achieving cost efficiency and enhanced performance. Through size optimization, TTL, and reduced write activity from “dirty checking,” Flo reduced provisioned WCU by 60% and eliminated throttling issues while maintaining high performance and scalability.

Ready to optimize your cloud environment and achieve similar success? Try the self-service AWS Well-Architected Lenses. The Well-Architected Lenses extend the guidance offered by AWS Well-Architected to specific industry and technology domains. The Amazon DynamoDB Well-Architected Lens focuses on DynamoDB workloads; it provides best practices, design principles, and questions to assess and review a DynamoDB workload.

About the authors

Aleksej Klebanskij is an engineering manager at Flo Health who likes to build awesome products. His passion lies in fostering high-performing teams and delivering innovative solutions to complex problems.

Maksim Koutun is a director of engineering in the Value Creation stream at Flo Health. As a passionate manager responsible for engineering strategy and culture, he always seeks new ways of working and providing autonomy in his teams.

Maksim Ponasov is a software engineer at Flo Health, specializing in the development of distributed, data-intensive systems. In his free time, he enjoys reading and spending time outdoors with his family.

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads leveraging DynamoDB’s capabilities.

Mladen Trampic is a Senior Technical Account Manager for AWS Enterprise Support EMEA. Mladen helps AWS customers architect for reliability and cost efficiency and strives to improve operational excellence. When not working, Mladen enjoys building computers and learning new things in his home lab together with his daughter.
