Language modeling, a core component of machine learning, involves predicting the likelihood of a sequence of words. This field primarily enhances machine understanding and generation of human language, serving as a backbone for various applications such as text summarization, translation, and auto-completion systems. Efficient language modeling faces significant hurdles, particularly with large models. The main challenge is the computational and memory overhead associated with processing and storing extensive data sequences, which hampers scalability and real-time processing capabilities.

Existing research in language modeling prominently features the Transformer architecture, known for its self-attention mechanism, which lets every token attend to every other token regardless of distance. Notable adaptations include the decoder-only Transformer, which is optimized for text generation in models like OpenAI’s GPT series. Innovations like Sparse Transformers have also emerged, reducing computational demands by limiting which sequence positions attend to one another. Other designs, such as the encoder-only BERT and the encoder-decoder T5, take different architectural routes to understanding and generating nuanced text.

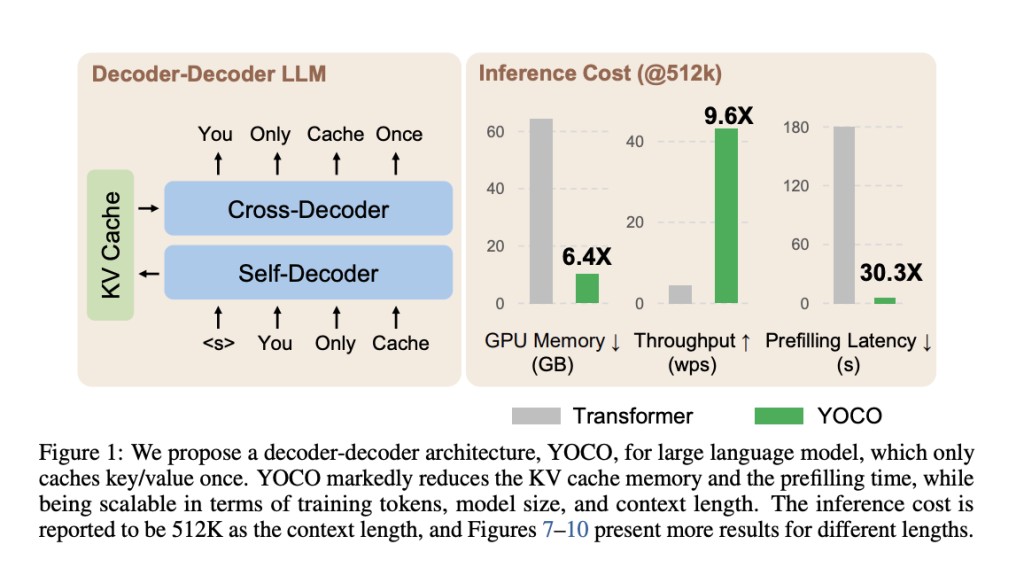

Microsoft Research and Tsinghua University researchers have introduced a novel architecture, You Only Cache Once (YOCO), for large language models. YOCO uses a decoder-decoder framework that diverges from traditional approaches by caching key-value (KV) pairs only once, rather than at every layer. This significantly reduces the computational overhead and memory usage typically associated with per-layer caching in large language models. The architecture stacks two modules, a self-decoder and a cross-decoder: the self-decoder produces a global KV cache, and the cross-decoder reuses that cache throughout, which lets the model process long sequences efficiently.
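To make the memory argument concrete, here is a rough back-of-the-envelope sketch rather than the paper's own accounting. It assumes a standard Transformer stores keys and values for every layer and token, while a YOCO-style model stores one global KV cache plus a small fixed-size window cache in each self-decoder layer. The function names, the half-and-half layer split, and the example sizes are all illustrative assumptions.

```python
def kv_cache_bytes_transformer(num_layers, seq_len, hidden_dim, bytes_per_value=2):
    """Keys and values (factor 2) cached for every layer and every token."""
    return 2 * num_layers * seq_len * hidden_dim * bytes_per_value


def kv_cache_bytes_yoco(num_layers, seq_len, hidden_dim, window, bytes_per_value=2):
    """One global KV cache plus a window-sized cache per self-decoder layer.

    Assumption: half of the layers form the self-decoder, and each of them
    only keeps the most recent `window` tokens.
    """
    global_kv = 2 * seq_len * hidden_dim * bytes_per_value
    self_layers = num_layers // 2
    local_kv = 2 * self_layers * min(window, seq_len) * hidden_dim * bytes_per_value
    return global_kv + local_kv


# Hypothetical model shape, chosen only for illustration.
layers, hidden, window = 80, 8192, 1024
for seq_len in (32_000, 512_000, 1_000_000):
    full = kv_cache_bytes_transformer(layers, seq_len, hidden)
    once = kv_cache_bytes_yoco(layers, seq_len, hidden, window)
    print(f"{seq_len:>9} tokens: {full / 2**30:8.1f} GiB vs {once / 2**30:6.1f} GiB "
          f"({full / once:.1f}x smaller)")
```

Under these assumptions, the saving grows with context length and approaches the number of layers, because the per-layer cost is paid only once.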

YOCO pairs its self-decoder and cross-decoder with efficient attention techniques. Specifically, the self-decoder uses efficient self-attention, such as sliding-window attention or gated retention, to generate a compact set of KV pairs. The cross-decoder reuses these pairs via cross-attention, eliminating the need for re-encoding and thus conserving computational resources. The model was evaluated on various datasets to assess its performance in real-world scenarios, demonstrating substantial improvements in processing speed and memory efficiency compared to conventional Transformer-based models.
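The data flow described above can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' implementation: it uses a single attention head, identity projections instead of learned weights, and no feed-forward layers, normalization, or gated retention. The function names are hypothetical.

```python
import math


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def self_decoder(xs, window):
    """Causal sliding-window attention: position i sees at most `window` tokens."""
    out = []
    for i, x in enumerate(xs):
        ctx = xs[max(0, i - window + 1): i + 1]
        out.append(attend(x, ctx, ctx))
    return out


def cross_decoder(hs, global_kv, num_layers):
    """Every layer attends (causally) to the same shared KV; nothing new is cached."""
    keys, values = global_kv
    for _ in range(num_layers):
        hs = [attend(q, keys[: i + 1], values[: i + 1]) for i, q in enumerate(hs)]
    return hs


def yoco_forward(xs, window, num_cross_layers):
    hs = self_decoder(xs, window)
    global_kv = (hs, hs)  # computed once; identity projections are an assumption
    return cross_decoder(hs, global_kv, num_cross_layers)


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d embeddings
for vec in yoco_forward(tokens, window=2, num_cross_layers=2):
    print([round(v, 3) for v in vec])
```

The point of the sketch is structural: `global_kv` is built once from the self-decoder output, and every cross-decoder layer reads the same object instead of maintaining its own cache.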

Experimental results highlight YOCO’s effectiveness, with the model achieving near-perfect needle retrieval accuracy for sequences up to 1 million tokens. YOCO reduces GPU memory demands by approximately 80 times for 65-billion-parameter models. Furthermore, it cuts down prefilling latency from 180 seconds to less than 6 seconds for contexts as large as 512,000 tokens while improving throughput to 43.1 tokens per second compared to 4.5 for the traditional Transformer, marking a 9.6 times increase. These metrics establish YOCO as a highly efficient architecture for processing extensive data sequences.
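As a quick sanity check on the figures quoted above, the ratios can be recomputed directly. The variable names are illustrative; the numbers are the ones reported in this article, and the 6-second value is treated as an upper bound because the article says "less than 6 seconds".

```python
# Throughput in tokens per second, as quoted above.
baseline_tps = 4.5
yoco_tps = 43.1
throughput_gain = yoco_tps / baseline_tps

# Prefill latency in seconds for a 512,000-token context, as quoted above.
baseline_prefill_s = 180.0
yoco_prefill_s_upper = 6.0  # upper bound, so the speedup below is a lower bound
min_prefill_speedup = baseline_prefill_s / yoco_prefill_s_upper

print(f"throughput gain: {throughput_gain:.1f}x")          # 9.6x
print(f"prefill speedup: more than {min_prefill_speedup:.0f}x")  # more than 30x
```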

To summarize, the YOCO architecture introduces an innovative approach to language modeling by caching key-value pairs only once, significantly reducing computational overhead and memory usage. By employing a decoder-decoder framework that leverages efficient attention mechanisms, YOCO demonstrates substantial improvements in handling long sequences, achieving near-perfect retrieval accuracy while drastically lowering latency and memory demands. This research provides a scalable, efficient solution for deploying large language models, offering practical benefits for real-world applications that must process long input sequences.


The post This AI Paper by Microsoft and Tsinghua University Introduces YOCO: A Decoder-Decoder Architectures for Language Models appeared first on MarkTechPost.
