Causal learning studies the principles that govern how real-world data is generated, and these principles shape how effectively artificial intelligence operates. An AI model's grasp of causality affects its ability to justify decisions, adapt to new data, and reason about counterfactual scenarios. Despite the rising interest in large language models (LLMs), evaluating their ability to process causality remains challenging because a thorough benchmark has been lacking.

Existing research includes basic benchmarks that assess LLMs like GPT-3 and its variants through simple correlation tasks, often using limited datasets with straightforward causal structures. Studies also routinely examine models such as BERT, RoBERTa, and DeBERTa, but these typically lack diversity in task complexity and dataset variety. Previous frameworks have tried integrating structured data into evaluations but have not yet combined it effectively with background knowledge. This has restricted their utility in exploring LLM capabilities across realistic and complex scenarios, highlighting the need for more comprehensive and diverse evaluation methods in causal learning.

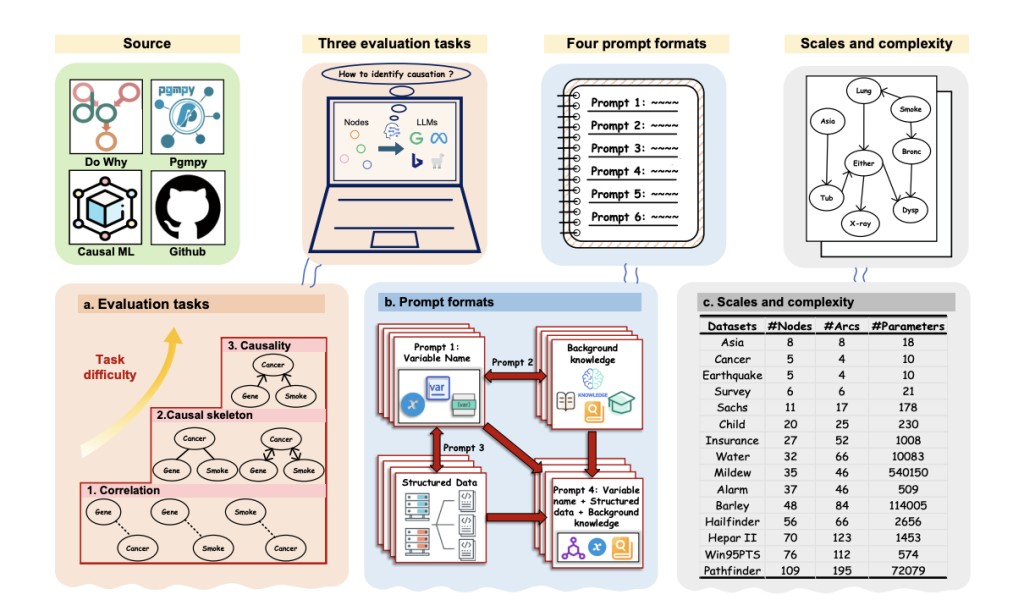

Researchers from Hong Kong Polytechnic University and Chongqing University have introduced CausalBench, a novel benchmark developed to assess LLMs’ causal learning capabilities rigorously. CausalBench stands out due to its comprehensive approach, incorporating multiple layers of complexity and a wide array of tasks that test LLMs’ abilities to interpret and apply causal reasoning in varied contexts. This methodology ensures a robust evaluation of the models under conditions that closely simulate real-world scenarios.

CausalBench tests LLMs on datasets such as Asia, Sachs, and Survey to assess causal understanding. The framework asks LLMs to complete three tasks of increasing difficulty: identifying correlations, constructing causal skeletons, and determining the direction of causality. Performance is measured with F1 score, accuracy, Structural Hamming Distance (SHD), and Structural Intervention Distance (SID). The tests run in a zero-shot setting, without prior fine-tuning, so the results reflect each model’s baseline ability to analyze causal relationships across increasingly complex scenarios.
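To make two of these metrics concrete, here is a minimal sketch of how edge-level F1 and SHD could be computed when a ground-truth graph and a model's predicted graph are both encoded as adjacency matrices. This is an illustration, not CausalBench's own scoring code: the function names, the toy three-variable graph, and the convention that a reversed edge counts as one SHD error are all assumptions made for the example. SID is omitted because it requires reasoning about interventional distributions rather than a simple edge comparison.

```python
import numpy as np


def skeleton(adj):
    """Undirected skeleton: i and j are linked if i->j or j->i exists."""
    adj = np.asarray(adj, dtype=bool)
    return adj | adj.T


def edge_f1(true_adj, pred_adj):
    """F1 score over matrix entries (each entry is one directed edge)."""
    t = np.asarray(true_adj, dtype=bool)
    p = np.asarray(pred_adj, dtype=bool)
    tp = np.sum(t & p)    # edges predicted and present
    fp = np.sum(~t & p)   # edges predicted but absent
    fn = np.sum(t & ~p)   # edges present but not predicted
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return float(2 * precision * recall / (precision + recall))


def shd(true_adj, pred_adj):
    """Structural Hamming Distance.

    Assumed convention: each node pair whose edge status differs
    (missing, extra, or reversed) contributes exactly one error.
    """
    t = np.asarray(true_adj, dtype=bool)
    p = np.asarray(pred_adj, dtype=bool)
    diff = t != p
    pair_diff = diff | diff.T  # a pair is wrong if either direction is wrong
    return int(np.sum(np.triu(pair_diff, k=1)))


# Hypothetical toy graph over variables A, B, C (not from any benchmark dataset).
# Ground truth: A -> B, B -> C
true_graph = [[0, 1, 0],
              [0, 0, 1],
              [0, 0, 0]]
# Prediction: A -> B (correct), C -> B (reversed), A -> C (extra)
pred_graph = [[0, 1, 1],
              [0, 0, 0],
              [0, 1, 0]]

directed_f1 = edge_f1(true_graph, pred_graph)
skeleton_f1 = edge_f1(skeleton(true_graph), skeleton(pred_graph))
distance = shd(true_graph, pred_graph)
print(directed_f1, skeleton_f1, distance)
```

In this toy case the skeleton score is higher than the directed score because the reversed edge B-C still counts as a correct undirected link, which mirrors why determining direction is the harder of the tasks. Note that some SHD definitions count a reversed edge as two errors; the choice should be stated alongside any reported number.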

Initial evaluations using CausalBench reveal noteworthy performance variations across different LLMs. For instance, some models, such as GPT4-Turbo, achieved F1 scores above 0.5 in correlation tasks on datasets such as Asia and Sachs. However, performance generally declined in the more complex causality assessments involving the Survey dataset, where many models struggled to exceed F1 scores of 0.3. These results show that LLMs differ in how well they handle increasing levels of causal complexity, giving a concrete baseline and pointing to areas for future improvement in model training and algorithm development.

To conclude, researchers from Hong Kong Polytechnic University and Chongqing University introduced CausalBench, a comprehensive benchmark for measuring the causal learning capabilities of LLMs. By using diverse datasets and complex evaluation tasks, this research provides insight into the strengths and weaknesses of various LLMs in understanding causality. The findings underscore the need for continued development in model training to enhance AI’s causal reasoning, which is vital for real-world applications where accurate decision-making and logical inference based on causality are essential.


The post Advancing AI’s Causal Reasoning: Hong Kong Polytechnic University and Chongqing University Researchers Develop CausalBench for LLM Evaluation appeared first on MarkTechPost.
