Enhancing the reasoning capabilities of large language models (LLMs) is pivotal in artificial intelligence. These models, integral to many applications, from automated dialog systems to data analysis, require constant evolution to address increasingly complex tasks. Despite their advancements, traditional LLMs struggle with tasks that require deep, iterative cognitive processes and dynamic decision-making.

The core issue is that current LLMs have limited ability to reason deeply without extensive human intervention. Most models operate in fixed input-output cycles, which leave no room to revise intermediate steps as new insights emerge. The result is suboptimal solutions on tasks that require nuanced understanding or complex strategic planning.

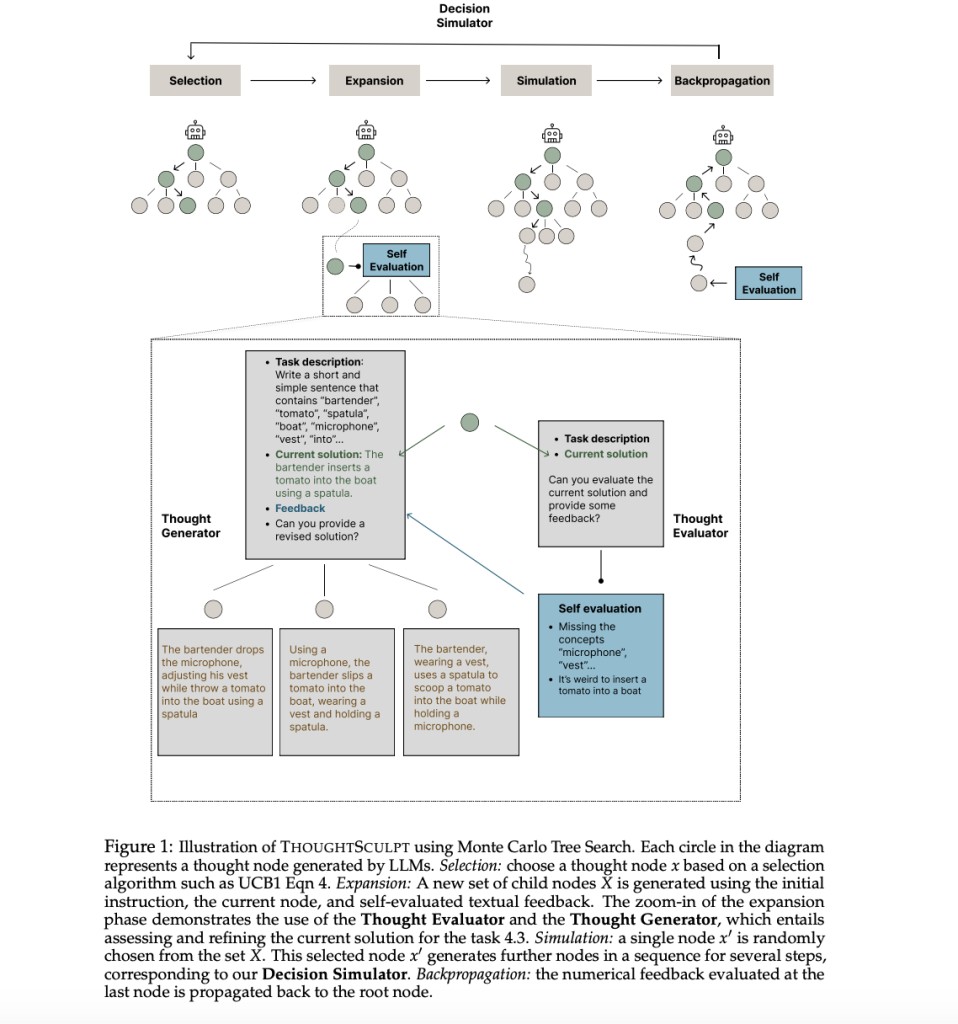

Researchers from UC Berkeley introduced ThoughtSculpt, a framework designed to strengthen the reasoning of LLMs. It takes a systematic approach to simulating human-like reasoning, using Monte Carlo Tree Search (MCTS), a heuristic search algorithm that builds solutions incrementally. ThoughtSculpt also adds revision actions, which let the model backtrack and refine earlier outputs instead of only extending them, a significant departure from models that progress linearly.
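As background for readers new to MCTS: in the standard algorithm, the selection step is commonly driven by the UCT rule, which balances exploiting children with a high average reward against exploring children that have been visited less often. The article does not say which selection rule ThoughtSculpt uses, so the formula below is a general reference and an assumption with respect to this framework. Here $Q(s,a)$ is the total reward collected through child $a$ of node $s$, $N(s,a)$ is that child's visit count, $N(s)$ is the parent's visit count, and $c$ is an exploration constant.

$$
a^{*} = \arg\max_{a} \left( \frac{Q(s,a)}{N(s,a)} + c \sqrt{\frac{\ln N(s)}{N(s,a)}} \right)
$$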

ThoughtSculpt comprises three main components: a thought evaluator, a thought generator, and a decision simulator. The evaluator assesses the quality of each thought node (a potential solution or decision point) and provides feedback that guides the generation of improved nodes. The generator takes this feedback and produces new nodes, either revising or expanding upon previous thoughts. The decision simulator explores these nodes further, estimating their likely outcomes so that the most promising path is selected.
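The interplay of the three components can be illustrated with a minimal sketch. This is a toy under stated assumptions, not the authors' implementation: the task (cover four required concepts in a four-word draft), the names `evaluate`, `generate`, `simulate`, and `search`, and the UCT selection rule are all hypothetical choices made for illustration, and simple rule-based functions stand in for the LLM calls a real system would make.

```python
import math
import random
from dataclasses import dataclass, field

# Hypothetical toy task: a "thought" is a tuple of words that should cover CONCEPTS.
CONCEPTS = ["dog", "ball", "park", "run"]
FILLERS = ["the", "a", "very"]
MAX_LEN = 4

@dataclass(eq=False)
class Node:
    words: tuple
    parent: "Node | None" = None
    children: list = field(default_factory=list)
    visits: int = 0
    total: float = 0.0

def evaluate(words):
    """Thought evaluator: score in [0, 1] plus feedback (the missing concepts)."""
    missing = [c for c in CONCEPTS if c not in words]
    return 1 - len(missing) / len(CONCEPTS), missing

def generate(words, missing, rng, k=3):
    """Thought generator: use the feedback to expand or revise a thought."""
    if not missing:
        return []  # nothing left to fix, so this thought is terminal
    pool = missing + FILLERS  # deliberately imperfect: may propose filler words
    children = []
    for word in rng.sample(pool, min(k, len(pool))):
        if len(words) < MAX_LEN:
            children.append(words + (word,))  # expansion: extend the draft
        else:
            # revision: overwrite a slot that does not hold a required concept
            slots = [i for i, w in enumerate(words) if w not in CONCEPTS]
            i = rng.choice(slots)
            children.append(words[:i] + (word,) + words[i + 1:])
    return children

def simulate(words, rng, depth=3):
    """Decision simulator: short random rollout to estimate a node's promise."""
    for _ in range(depth):
        _, missing = evaluate(words)
        options = generate(words, missing, rng, k=1)
        if not options:
            break
        words = options[0]
    return evaluate(words)[0]

def uct(child, parent_visits, c=1.4):
    """UCT score (assumed selection rule); unvisited children are tried first."""
    if child.visits == 0:
        return math.inf
    mean = child.total / child.visits
    return mean + c * math.sqrt(math.log(parent_visits) / child.visits)

def search(root_words, iterations=100, seed=0):
    rng = random.Random(seed)
    root = Node(tuple(root_words))
    best = root
    for _ in range(iterations):
        node = root
        while node.children:  # selection: descend to a leaf
            node = max(node.children, key=lambda ch: uct(ch, node.visits))
        _, missing = evaluate(node.words)
        for words in generate(node.words, missing, rng):  # expansion
            node.children.append(Node(words, parent=node))
        if node.children:
            node = rng.choice(node.children)
        reward = simulate(node.words, rng)  # simulation
        if evaluate(node.words)[0] > evaluate(best.words)[0]:
            best = node
        while node is not None:  # backpropagation
            node.visits += 1
            node.total += reward
            node = node.parent
    return best.words

if __name__ == "__main__":
    draft = ("the", "dog")
    result = search(draft)
    print(result, evaluate(result)[0])
```

The point of the design is that once a draft reaches `MAX_LEN`, the only way to improve it is to revise an existing slot, which mirrors the revision actions described above. A purely additive search would be stuck with whatever filler words it had already committed to.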

Empirical results demonstrate the efficacy of ThoughtSculpt across several applications. In a task aimed at making story outlines more interesting, ThoughtSculpt achieved an improvement of up to 30% in interestingness. Solution success rates improved by 16% in crossword puzzle tasks, and concept coverage in generative tasks rose by up to 10%. These improvements underscore the framework’s ability to refine and explore a range of solutions more adaptively than was previously possible.

In conclusion, the ThoughtSculpt framework developed by UC Berkeley researchers advances the capabilities of LLMs in complex reasoning tasks. Integrating Monte Carlo Tree Search with revision mechanisms allows outputs to be refined iteratively, which improves decision-making. The method achieved up to 30% improvement in story outline interestingness, 16% in crossword puzzle success, and 10% in concept coverage for generative tasks. These results point to the potential of ThoughtSculpt to broaden the application of LLMs in domains that require nuanced cognition and problem-solving.

All credit for this research goes to the researchers of this project.

The post UC Berkeley Researchers Introduce ThoughtSculpt: Enhancing Large Language Model Reasoning with Innovative Monte Carlo Tree Search and Revision Techniques appeared first on MarkTechPost.
