We launched the GitHub Innovation Graph to make it easier for researchers, policymakers, and developers to access longitudinal metrics on software development for economies around the world. We’re pleased to report that researchers are indeed finding the Innovation Graph to be a useful resource, and with today’s Q1 2024 data release, I’m excited to share an interview with two researchers who are using data from the Innovation Graph in their work:

Alexander Quispe is a junior researcher at the World Bank in the Digital Development Global Practice and lecturer in the Department of Economics at PUCP.

Rodrigo Grijalba is a data scientist who specializes in Causal Inference, Economics, Artificial Intelligence-integrated systems, and their intersections.

Research Q&A

Kevin: First of all, Alexander, thanks so much for your bug report in the data repository for the Innovation Graph! Glad you took the initiative to reach out about the data gap, both so that we could fix the missing data and learn about your fascinating work on the paper that you and Rodrigo presented at the Munich Summer Institute in May. Could you give a quick high-level summary for our readers here?

Alexander: In recent years, advancements in artificial intelligence (AI) have revolutionized various fields, with software development being one of the most impacted. The rise of large language models (LLMs) and tools like OpenAI’s ChatGPT and GitHub Copilot has brought about a significant shift in how developers approach coding, debugging, and software architecture. Our research specifically analyzes the impact of ChatGPT on the velocity of software development, finding that the availability of ChatGPT:

Significantly increased the number of Git pushes per 100,000 inhabitants of each country.

Had a positive (although not statistically significant) correlation with the number of repositories and developers per 100,000 inhabitants.

Generally enhanced developer engagement across various programming languages. High-level languages like Python and JavaScript showed significant increases in unique developers, while the impact on domain-specific languages like HTML and SQL varied.

These results would imply that the impact of ChatGPT thus far does not lie in the increase of developers or projects, but in an acceleration of the pre-established development process.
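The outcomes above are all expressed per 100,000 inhabitants, which makes economies of very different sizes comparable. Here is a minimal sketch of that normalization. The function name `per_100k` and every figure below are made up for illustration; they are not Innovation Graph values or the authors' code.

```python
def per_100k(count: float, population: float) -> float:
    """Scale a raw count to a rate per 100,000 inhabitants."""
    if population <= 0:
        raise ValueError("population must be positive")
    return count * 100_000 / population


# Hypothetical quarterly figures for two made-up economies.
economies = {
    "A": {"git_pushes": 1_200_000, "population": 30_000_000},
    "B": {"git_pushes": 90_000, "population": 1_500_000},
}

rates = {
    name: per_100k(row["git_pushes"], row["population"])
    for name, row in economies.items()
}
print(rates)
```

In this toy data, economy A has far more pushes in absolute terms, but economy B has the higher rate once population is taken into account.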

Rodrigo: GitHub’s Innovation Graph data worked very well with the methods we used, especially the synthetic ones. Having country- and language-level aggregated data meant that we could neatly define our control and treatment groups, and disaggregate them again to find how effects differed, say, by language. There actually are several other disaggregations we could do with this data and we might look into that in the future, but the language differences seemed like the most obvious faceting approach to explore.

Kevin: Interesting! Could you provide an overview of the methods you used in your analysis?

Alexander: We mainly focused on comparative methods for panel data in observational studies, with the goal of estimating the average treatment effect. Our main method used synthetic difference in differences (SDID), as described by Arkhangelsky, Athey, Imbens, and Wager (2021). We also described the results for the difference in differences (DiD) and synthetic control (SC) methods.

Kevin: Nice! Follow-up question: could you provide an “explain like I’m 5” overview of the methods you used in your analysis?

Alexander: Haha sure, we looked at Innovation Graph data at multiple points in time (“panel data”) in order to try to estimate the impact of ChatGPT access for the average country (“average treatment effect”). Since this data wasn’t part of a randomized experiment (for example, if ChatGPT was available only to a randomly selected group of countries), this was an “observational study.” In situations where you’re unable to run a randomized experiment, but you still want to be able to say something like “X caused Y to increase by 10%,” you can use causal inference techniques (like DiD, SC, and SDID) to help estimate the causal effect of a treatment.

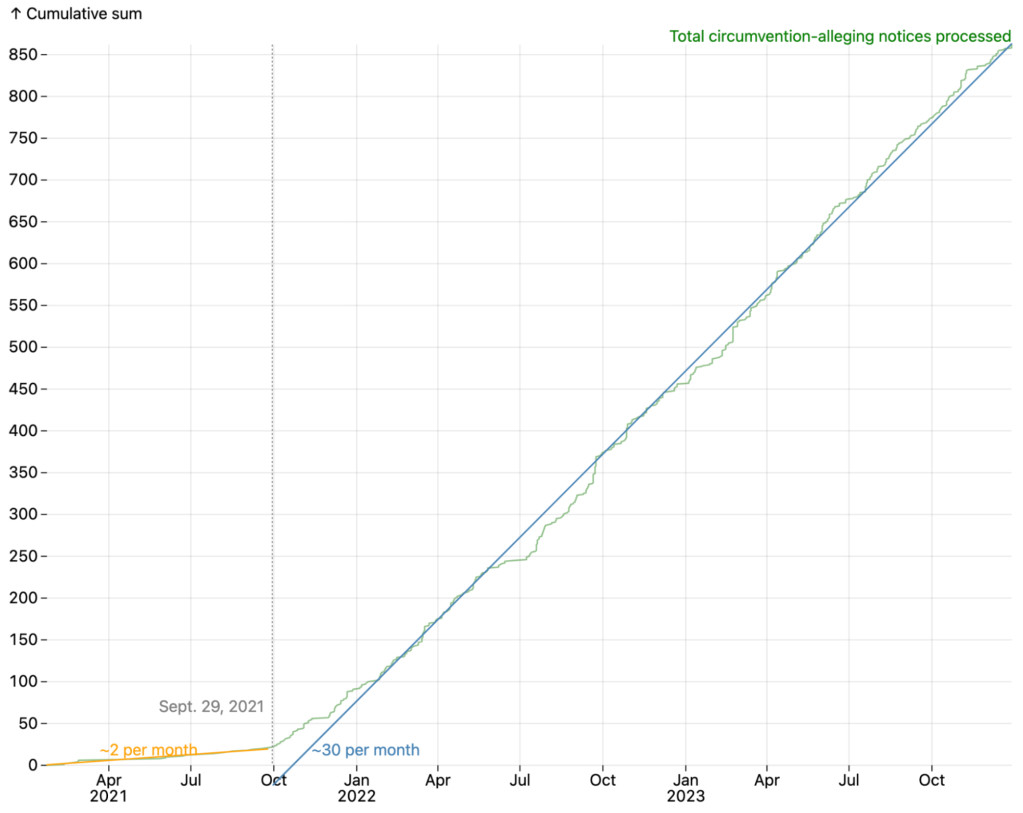

Kevin: Huh, that reminds me of the last release of our transparency reporting data, where we found that a change in our DMCA takedown form caused a 15X increase in the number of DMCA notices alleging circumvention:

Cumulative sum of DMCA notices that allege circumvention, 2021-2023, with regression lines.

Alexander: Oh yes, in causal inference terms, that’s called an “event study,” where there’s a prior trend that changes due to some event, in your case, the DMCA form update. In an event study, you’re trying to compare what actually happened vs. the counterfactual of what would have happened if the event didn’t occur by comparing the time periods from before and after the event. In your case, the dark blue line showing the number of DMCA notices processed due to circumvention seems to extend the prior trend, so it happens to also plot the counterfactual, that is, what would have happened if the form wasn’t updated:

Cumulative sum of DMCA notices that allege circumvention, were processed due to circumvention, or were processed on other grounds, 2021-2023.

Alexander (continued): Sometimes, though, instead of comparing the time periods before/after a treatment, you might want to make a comparison between a group that received a treatment vs. a group that was never treated. For example, maybe you’re interested in estimating the causal effect of bank bailouts during the Great Depression, so you’d like to compare what happened to banks that could access funds from the federal government vs. those that couldn’t. DiD, SC, and SDID are techniques that you can use to try to compare treated vs. untreated groups to figure out what would have happened in the counterfactual scenario if a treatment didn’t happen, and thus, estimate the effect of the treatment. In our paper, that treatment is the legal availability of ChatGPT in certain countries but not others.
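To make the treated vs. untreated comparison concrete, here is a minimal sketch of the simplest case, a two-group, two-period difference in differences. The helper `did_estimate` and the numbers are hypothetical and are not taken from the paper. The sketch also relies on the parallel trends assumption, meaning that both groups would have changed by the same amount without the treatment.

```python
from statistics import mean


def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcomes (for example, pushes per 100k) by quarter.
treated_pre = [100, 104, 108]
treated_post = [120, 126]
control_pre = [80, 84, 88]
control_post = [92, 96]

effect = did_estimate(treated_pre, treated_post, control_pre, control_post)
print(effect)
```

The control group's change stands in for what would have happened to the treated group anyway, so subtracting it leaves the part of the change attributed to the treatment.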

Estimated synthetic difference-in-differences (SDID) trends—average number of Git pushes per 100k inhabitants for treated vs. (synthetic) control groups.

Notes: The gray solid line and the dashed black line represent the average number of pushes per 100k inhabitants by quarter for the treated and (synthetic) control groups, respectively. The dashed red vertical line indicates the start of the treatment. The light blue areas at the bottom indicate the importance of pre-treatment quarters for predicting post-treatment levels in the control group. Visually, both groups are nearly parallel before the launch of ChatGPT, but start noticeably diverging after the launch. We can interpret the average treatment effect as the magnitude of this divergence.

Synthetic difference-in-differences (SDID) estimates—number of pushers per 100k inhabitants by programming language.

Notes: here we present the estimated treatment effect by language: the squares represent the estimates, and the lines represent their 95% confidence intervals. All the results shown here are statistically significant, although some are very small. The estimated treatment effects are larger for JavaScript, Python, TypeScript, Shell, HTML, and CSS, and smaller for Rust, C, C++, Assembly, Batchfile, PowerShell, R, MATLAB, and PLpgSQL.
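Reading a plot like this comes down to checking whether each 95% confidence interval excludes zero. A small sketch of that check follows, assuming a normal approximation around the estimate. The paper's intervals may have been computed differently, and the estimates and standard errors below are invented.

```python
from statistics import NormalDist


def confidence_interval(estimate, std_error, level=0.95):
    """Two-sided interval under a normal approximation (an assumption here)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    return estimate - z * std_error, estimate + z * std_error


def is_significant(estimate, std_error, level=0.95):
    """True when the interval lies entirely on one side of zero."""
    low, high = confidence_interval(estimate, std_error, level)
    return low > 0 or high < 0


# Hypothetical (estimate, standard error) pairs for two made-up languages.
results = {"LangA": (0.8, 0.3), "LangB": (0.8, 0.5)}
for name, (est, se) in results.items():
    print(name, confidence_interval(est, se), is_significant(est, se))
```

Both made-up languages share the same point estimate, but only the one with the smaller standard error has an interval that stays above zero.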

A full explanation of DiD, SC, and SDID probably wouldn’t fit in this Q&A (although see additional learning resources at the bottom), but walking through our analysis briefly: I preferred the SDID method for our analysis because the parallel trends assumption required for the DiD method did not hold for our treatment and control groups. Additionally, the SC method could not be precise due to our limited data for pre-treatment periods, only eleven quarters. The SDID method addresses these limitations by constructing a synthetic control group while also considering pre-treatment period differences, making it a robust choice for our analysis.
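One way to see how these methods relate is to write the estimate as a weighted double difference, following the form given by Arkhangelsky et al. (2021) for a single treated block. The sketch below takes the unit and time weights as given inputs. In real SDID they are estimated from pre-treatment data, which this sketch does not attempt. All names and numbers are hypothetical.

```python
from statistics import mean


def weighted_double_difference(treated, controls, n_pre, unit_weights, time_weights):
    """Weighted double difference for one treated series and several controls.

    treated      -- outcome per period for the treated group (pre, then post)
    controls     -- one outcome series per control unit, same length as treated
    n_pre        -- number of pre-treatment periods
    unit_weights -- one weight per control unit, summing to 1
    time_weights -- one weight per pre-treatment period, summing to 1
    """
    def change(series):
        post_level = mean(series[n_pre:])
        pre_level = sum(w * y for w, y in zip(time_weights, series[:n_pre]))
        return post_level - pre_level

    control_change = sum(w * change(c) for w, c in zip(unit_weights, controls))
    return change(treated) - control_change


# Hypothetical data: three pre-treatment periods, two post-treatment periods.
treated = [10, 11, 12, 16, 17]
controls = [[5, 6, 7, 8, 9], [20, 20, 20, 20, 20]]

# Uniform weights reproduce plain difference in differences.
did = weighted_double_difference(treated, controls, 3, [0.5, 0.5], [1 / 3] * 3)

# Made-up non-uniform weights that favor the control unit and the quarter
# that look most like the treated group just before treatment.
weighted = weighted_double_difference(treated, controls, 3, [1.0, 0.0], [0.0, 0.0, 1.0])
print(did, weighted)
```

With uniform weights the flat second control unit pulls the comparison away from the treated group's upward pre-trend. Shifting weight toward the more similar unit and the most recent quarter changes the estimate, which is the intuition behind reweighting when parallel trends does not hold in the raw data.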

Kevin: I’d be curious about the limitations of your paper and data that you wished you had for further work. What would the ideal dataset(s) look like for you?

Alexander:

Limitations

During my presentation at the Summer Institute in Munich, some researchers critiqued our methodology by highlighting that even if countries faced restrictions on ChatGPT, individuals might have used VPNs to bypass these restrictions. This potential workaround could undermine the validity of using these countries as a control group. However, I referred to studies by Kreitmeir and Raschky (2023) and del Rio-Chanona et al. (2023), which acknowledged that while VPNs allow access to ChatGPT, these barriers still pose significant hurdles to widespread and rapid adoption. Therefore, the restrictions remain a valid consideration in our analysis.

Data for future work

I would like to conduct a similar analysis using administrative data at the software developer level. Specifically, I aim to compare the increase in productivity for those who have access to GitHub Copilot with those who do not. Additionally, if we could obtain user experience metrics, we could explore heterogeneous results among users leveraging Copilot. This data would enable us to deepen our understanding of the impact of AI-assisted development tools on individual productivity and software development practices.

Kevin: Predictions for the future? Recommendations for policymakers? Recommendations for developers?

Alexander: The future likely holds increased integration of AI tools like ChatGPT and GitHub Copilot in software development processes. Given the significant positive impact observed on the number of Git pushes, repositories, and unique developers, we can predict that AI-driven development tools will become standard in software engineering.

Policymakers should consider the positive impacts of AI tools like ChatGPT and GitHub Copilot on productivity and support their integration into various sectors. Deregulating access to such tools could foster economic growth by enhancing developer efficiency and accelerating software production.

Developers should embrace AI tools like ChatGPT and GitHub Copilot to boost their productivity and efficiency. By leveraging these tools for coding, debugging, and optimizing software, developers can focus on more complex and creative aspects of software engineering.

Personal Q&A

Kevin: I’d like to change gears a bit to chat more about your personal stories. Alexander, it seems like you’ve got a deep economics background but you also use your computer science skills to write and maintain software libraries for causal inference techniques. Working with fellow economists to build software seems like a pretty unique role. Would love to learn about your journey to getting there.

Alexander: Yes, I love the intersection between economics and computer science. This journey began while I was working as a research associate at MIT. I was taking a causal ML course taught by Victor Chernozhukov, and he was looking for someone to translate tutorials from R to Python. This was in early 2020 before large language models existed. From there, I started collaborating with Victor on creating tutorials and developing Python and Julia software for causal ML methods. I realized that most advanced econometric techniques were primarily written in Stata or R, which limited their use in industry. It felt like a niche area at the time. For sure, back then, Microsoft’s EconML was in its early stages, and in subsequent years, several companies like Uber, Meta, and Amazon began developing their own Python packages. In 2022, I went to Stanford and talked with Professor Susan Athey about translating her Causal Inference and Machine Learning bookdown into Python. Doing this taught me a lot about various methods for heterogeneous treatment effects, such as causal trees and forests, and the intersection of reinforcement learning and causal inference. During my time in the Bay Area, I was impressed by the close collaboration between academia and big tech companies like Uber, Roblox, and Amazon. Academia focuses on developing these methods, while tech companies apply them in practice.

Kevin: Rodrigo, you also have quite the varied background. Could you share a bit about your path into working on data science, causal inference, and software engineering?

Rodrigo: Same as Alexander, I started off as an economist. I love the topic of economics overall, and during my undergrad I also became really interested in software development. At some point, one of the variable topic courses in the faculty was going to cover ML methods and their applications to the social sciences, which piqued my interest. This class was taught by Pavel Coronado, the head of the AI laboratory for Social Sciences at the faculty. He informed us that the laboratory would be starting a graduate diploma program on ML and AI for Social Sciences next semester. I was given the opportunity of joining this program before finishing my undergrad. During this program, I was able to really broaden my knowledge on topics like object-oriented programming, neural networks, big data, etc.; some of these topics were taught by Alexander. Near the end of the program, Alexander was looking for assistants with a good handle on software development for data science. Since then, I have collaborated with the team on a variety of projects.

Kevin: That’s wonderful, sounds like you both benefited from learning software development and applying those skills by engaging with instructors who believed in you (and/or were desperate for help!). Finding a niche that you’re passionate about is such a joy, and I’m curious about how you’ve found living in that niche. What’s the day-to-day like for you both?

Alexander: I work full-time for the World Bank, where I spend most of my time doing econometric analysis using administrative data. We use rich digital transfer data to analyze quasi-experiments, with most of this data coming from countries in South Asia like Pakistan, Bangladesh, and India. The other half of my time, I work with different professors. I have a team called Dive Into Causal Machine Learning (D2CML), which Rodrigo is also part of. We focus on creating causal ML packages in Python so that people from industry and government can use them for free. Currently, I am collaborating with Nathan Kallus (Cornell Tech/Netflix) on the intersection of reinforcement learning and causal inference, with Damian Clarke (U. Chile) on the SDID algorithm, and with Pedro Sant’Anna on a package for DiD with multiple time periods.

Rodrigo: Most of my work is with Alex and D2CML, so I get to be involved in a variety of projects ranging from teaching materials for undergraduate and graduate students to AI applications and academic research. I also do some related freelance work, and I have some open source passion projects.

Kevin: Have things changed since generative AI tooling came along? Have you found generative AI tools to be helpful?

Alexander: When I started creating econometric packages, most of my work was translating code from Stata and R to Python. There were no LLMs, so depending on the package it could take approximately two to three months. Once ChatGPT appeared, it was really helpful for translating code. I think that we can now do the translation twice as fast. However, I should say it works better for translating to Python than to Julia. What I have learned now is how to ask good questions. A lot of the work for panel datasets is preprocessing big datasets, and for that, you have to be a good prompt engineer to be precise with the questions you formulate. I would like to try GitHub Copilot because I believe it could optimize my algorithms more effectively. Reviewing all the scripts with ChatGPT can sometimes be quite tedious.

Rodrigo: I have definitely found generative AI helpful. The way I use it is that it gives me an overall structure or a starting point for a task, and I take things from there. For example, I’m a very indecisive writer, so prompting a generative AI tool with an overall topic and some guidelines tends to give me the starting point I need, which really enhances my productivity even when the final product is completely different from the initial generative AI response. Things have definitely changed with AI tools: one notable example is the number of students who use generative AI tools for their assignments. I think some effort goes into generating good responses with AI, and making sure that the responses are appropriate. So, instead of banning the use of generative AI, I think it’s best to become very familiar with it. I believe being a good prompt engineer will be an extremely useful skill in the near future.

Kevin: Advice you might have for folks who are starting out in software engineering or research? What tips might you give to a younger version of yourself, say, from 10 years ago?

Alexander: For those starting in software engineering or research, here’s my advice. Take an algorithms course, preferably in C++, as it provides a solid foundation for learning any programming language and is crucial for data processing and econometric implementation. Focus on mastering linear algebra; understanding matrix decomposition and optimization methods will make creating packages much easier. Learn Git and GitHub to become proficient in version control and teamwork. Additionally, take courses in causal inference and machine learning; these are now accessible to undergraduates and are essential for modern research. For those heading to graduate school, studying reinforcement learning and its integration with causal inference is highly beneficial, as these techniques are increasingly used in tech companies for data analysis.

Rodrigo: For both software engineering and research, starting out can be overwhelming because there is a lot you don’t know. We can also fall into “optimization paralysis” when we try to figure out the optimal course of action. For the first problem, sometimes it is enough to immerse yourself in the knowledge without trying too hard to understand it, and the understanding comes by itself, like learning a language through immersion. For the second problem, some degree of impulsiveness can be very helpful, funnily enough; build an intuition and trust it.

Kevin: Learning resources you might recommend to someone interested in learning more about this space?

Alexander: For sure, this is the list for future causal ML researchers.

The Book of Why by Judea Pearl

Causal Inference: The Mixtape by Scott Cunningham

The Effect by Nick Huntington-Klein

Applied Causal Inference Powered by ML and AI by Victor Chernozhukov et al.

Machine Learning and Causal Inference: A Short Tutorial by Guido W. Imbens and Susan Athey

Awesome Causal Inference by Matteo Courthoud

Dive into Causal Machine Learning (self-promotion 😉)

Rodrigo: Right now my favorite resource for this topic is Applied Causal Inference Powered by ML and AI by Chernozhukov, Hansen, Kallus, Spindler, and Syrgkanis. I also like having an introductory econometrics textbook on hand; Introductory Econometrics: A Modern Approach by Jeffrey M. Wooldridge is a really good resource in this regard.

Kevin: Thank you, Alexander and Rodrigo! It’s been fascinating to learn about your current work and broader career trajectory. We truly appreciate you taking the time to speak with us and will absolutely keep following your work, where we hope the Innovation Graph can continue to serve as a helpful resource.

The post How researchers are using GitHub Innovation Graph data to estimate the impact of ChatGPT appeared first on The GitHub Blog.
