In a world of reels, photos, and videos, everyone creates content and uploads it to public-facing applications such as social media. By default, a website has no control over the kinds of images its users upload. This post discusses how to block inappropriate photos.

The AWS Rekognition service can help with this. Its content moderation feature detects inappropriate or unwanted content and returns moderation labels, each with a confidence score, for an image. Using it can help make your business site compliant and also keeps costs down: you pay only for what you use, with no minimum fees, licenses, or upfront commitments.
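To make the idea of moderation labels concrete, here is a minimal sketch of how a DetectModerationLabels result can be read. The `sample_response` dictionary is hand-written in the shape of the service's response (a `ModerationLabels` list whose entries carry `Name`, `ParentName`, and `Confidence`); its values are illustrative, not real output, and `summarize_labels` is a hypothetical helper name.

```python
# Hand-written sample in the shape of a DetectModerationLabels response.
# The values are illustrative, not real service output.
sample_response = {
    "ModerationLabels": [
        {"Name": "Alcohol", "ParentName": "", "Confidence": 99.1},
        {"Name": "Alcoholic Beverages", "ParentName": "Alcohol", "Confidence": 99.1},
        {"Name": "Gambling", "ParentName": "", "Confidence": 83.4},
    ],
    "ModerationModelVersion": "7.0",
}


def summarize_labels(response):
    """Return (name, parent, confidence) tuples, highest confidence first."""
    labels = response.get("ModerationLabels", [])
    rows = [(lbl["Name"], lbl.get("ParentName", ""), lbl["Confidence"]) for lbl in labels]
    return sorted(rows, key=lambda row: row[2], reverse=True)


for name, parent, confidence in summarize_labels(sample_response):
    # Second-level labels are shown together with their parent category.
    prefix = f"{parent} > " if parent else ""
    print(f"{prefix}{name}: {confidence:.1f}%")
```

Labels are hierarchical, so a single image can return both a parent category and a more specific child label.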

This will require Lambda and API Gateway.

Implementing AWS Rekognition

To implement the solution, you must create a Lambda function and an API Gateway.

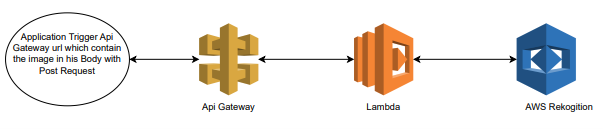

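The solution flows as follows: the user sends an image to API Gateway, which invokes the Lambda function; the function passes the image to AWS Rekognition and returns the result to the user.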

Lambda

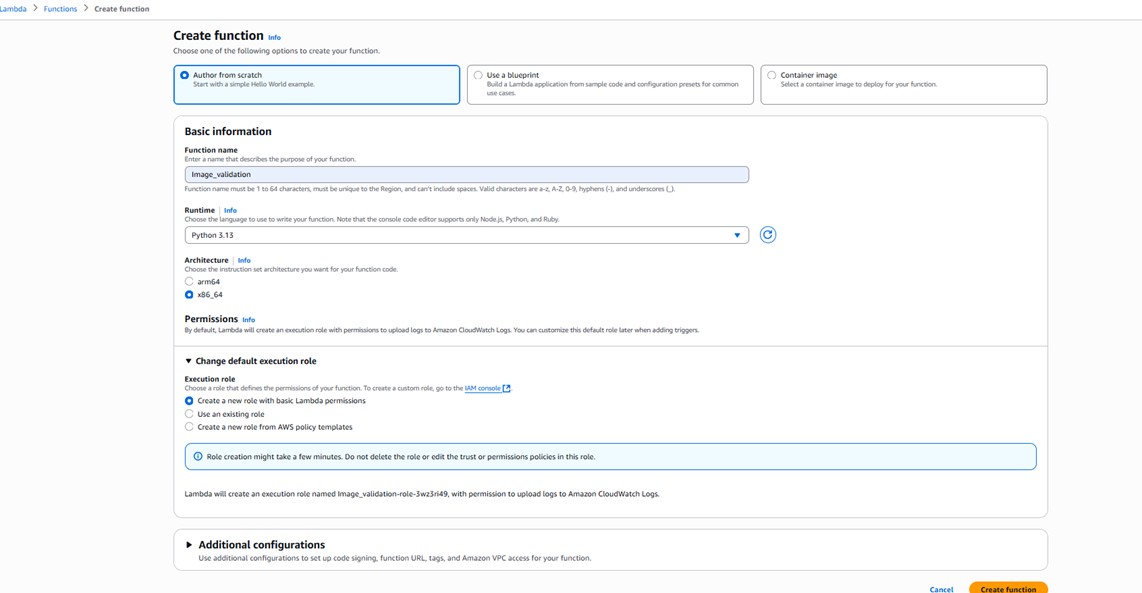

To create the Lambda function, follow the steps below:

1. Open the AWS Lambda console and click Create function. Enter the function details, such as the name and runtime, and then click Create function.

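2. Make sure to add the permission below to the Lambda execution role:

       {
         "Version": "2012-10-17",
         "Statement": [
           {
             "Sid": "VisualEditor0",
             "Effect": "Allow",
             "Action": "rekognition:*",
             "Resource": "*"
           }
         ]
       }

   This policy allows every Rekognition action. The code in this post only calls DetectModerationLabels, so you can narrow "Action" to "rekognition:DetectModerationLabels".

3. You can use the Python code below: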

The sample Python code below sends the image to the AWS Rekognition service, retrieves the moderation labels, and uses them to decide whether the image is safe or unsafe.

    import base64
    import json

    import boto3

    rekognition = boto3.client('rekognition')

    # Moderation labels that this site treats as unsafe.
    filter_keywords = [
        "Weapons", "Graphic Violence", "Death and Emaciation", "Crashes",
        "Products", "Drugs & Tobacco Paraphernalia & Use", "Alcohol Use",
        "Alcoholic Beverages", "Explicit Nudity", "Explicit Sexual Activity",
        "Sex Toys", "Non-Explicit Nudity", "Obstructed Intimate Parts",
        "Kissing on the Lips", "Female Swimwear or Underwear",
        "Male Swimwear or Underwear", "Middle Finger", "Swimwear or Underwear",
        "Nazi Party", "White Supremacy", "Extremist", "Gambling",
    ]


    def check_for_unsafe_keywords(label_names):
        """Return the filter keywords that appear in any returned label name."""
        names_lower = [name.lower() for name in label_names]
        return [
            keyword for keyword in filter_keywords
            if any(keyword.lower() in name for name in names_lower)
        ]


    def lambda_handler(event, context):
        # Assumption: API Gateway delivers the image base64-encoded in the body.
        image_bytes = base64.b64decode(event['body'])

        responses = rekognition.detect_moderation_labels(
            Image={'Bytes': image_bytes},
            MinConfidence=80
        )
        print(responses)

        # Match against label names only, not the whole stringified response,
        # so unrelated response metadata can never trigger a keyword.
        label_names = []
        for label in responses['ModerationLabels']:
            label_names.append(label['Name'])
            label_names.append(label.get('ParentName', ''))

        unsafe = check_for_unsafe_keywords(label_names)

        if unsafe:
            print("Unsafe keywords found:", unsafe)
            return {
                'statusCode': 403,
                'headers': {'Content-Type': 'application/json'},
                'body': json.dumps({"Unsafe": "Asset is Unsafe", "labels": unsafe})
            }

        print("No unsafe content detected.")
        return {
            'statusCode': 200,
            'headers': {'Content-Type': 'application/json'},
            'body': json.dumps({"safe": "Asset is safe", "labels": unsafe})
        }

4. Then click on the Deploy button to deploy the code.
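The safe/unsafe decision can also be exercised locally, without AWS credentials, by swapping the Rekognition client for a stub. The sketch below is one way to do that, under stated assumptions: `FakeRekognition`, `BLOCKED`, and `moderate` are hypothetical names introduced for illustration, the blocked set is a small subset of the list above, and matching is simplified to exact label names.

```python
import json


class FakeRekognition:
    """Hypothetical stand-in for the boto3 Rekognition client (local tests only)."""

    def __init__(self, labels):
        self._labels = labels

    def detect_moderation_labels(self, Image, MinConfidence):
        # Mimic the service dropping labels below MinConfidence.
        kept = [lbl for lbl in self._labels if lbl["Confidence"] >= MinConfidence]
        return {"ModerationLabels": kept}


# Illustrative subset of the blocked labels, lower-cased for comparison.
BLOCKED = {"gambling", "alcohol use"}


def moderate(client, image_bytes, min_confidence=80):
    """Return an API Gateway style response: 403 if a blocked label is found, else 200."""
    result = client.detect_moderation_labels(
        Image={"Bytes": image_bytes}, MinConfidence=min_confidence
    )
    unsafe = sorted(
        {lbl["Name"] for lbl in result["ModerationLabels"] if lbl["Name"].lower() in BLOCKED}
    )
    key, message = ("Unsafe", "Asset is Unsafe") if unsafe else ("safe", "Asset is safe")
    return {
        "statusCode": 403 if unsafe else 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({key: message, "labels": unsafe}),
    }


flagged = FakeRekognition([{"Name": "Gambling", "ParentName": "", "Confidence": 97.2}])
clean = FakeRekognition([{"Name": "Gambling", "ParentName": "", "Confidence": 41.0}])
print(moderate(flagged, b"fake-image")["statusCode"])  # 403
print(moderate(clean, b"fake-image")["statusCode"])    # 200
```

Passing the client in as an argument keeps the decision logic testable; in the deployed function the same argument would be the real boto3 client.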

AWS API Gateway

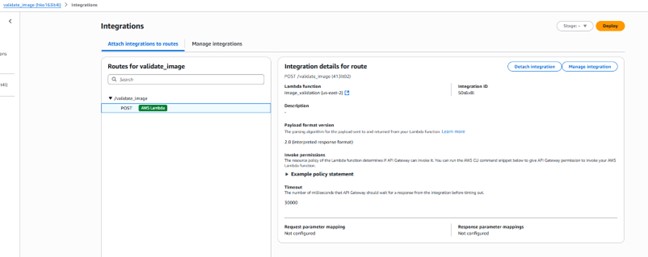

Create an API in API Gateway that forwards the image to the Lambda function for processing with AWS Rekognition and then returns the response to the user.
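How the image reaches the function depends on how the API is configured. The sketch below assumes a Lambda proxy integration in which the image arrives base64-encoded in `event["body"]`, either because API Gateway encoded a binary payload or because the client sent base64 text. `extract_image_bytes` and `MAX_IMAGE_BYTES` are hypothetical names, and the 5 MB cap reflects the documented limit for images passed to Rekognition as raw bytes, which is worth re-checking against the current documentation.

```python
import base64

# Assumption: limit for images sent to Rekognition as raw bytes (5 MB).
MAX_IMAGE_BYTES = 5 * 1024 * 1024


def extract_image_bytes(event: dict) -> bytes:
    """Decode the base64 request body of an API Gateway proxy event."""
    body = event.get("body")
    if not body:
        raise ValueError("request body is empty")
    # validate=True rejects characters outside the base64 alphabet;
    # the resulting binascii.Error is a subclass of ValueError.
    image_bytes = base64.b64decode(body, validate=True)
    if len(image_bytes) > MAX_IMAGE_BYTES:
        raise ValueError("image exceeds the size limit for inline bytes")
    return image_bytes


# Example proxy event carrying a few fake image bytes.
event = {"body": base64.b64encode(b"\x89PNG\r\n").decode("ascii"), "isBase64Encoded": True}
print(extract_image_bytes(event))
```

Rejecting empty or malformed bodies before calling Rekognition avoids paying for requests that can only fail.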

Sample API Integration:

Once everything is set up, sending an image in the request body to the API Gateway endpoint returns a response indicating whether the image is safe or unsafe.
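From the client side, the call might look like the following sketch, which uses only the Python standard library. The endpoint URL is a placeholder, and `build_request`, `interpret`, and `check_image` are hypothetical helpers. It also assumes the API accepts the raw image as a binary body and that the Lambda function answers with 200 for safe and 403 for unsafe, as in the sample code above.

```python
import json
import urllib.error
import urllib.request

# Placeholder endpoint; replace with the invoke URL of your own API.
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/moderate"


def build_request(url: str, image_bytes: bytes) -> urllib.request.Request:
    """Build a POST request carrying the image as the request body."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={"Content-Type": "application/octet-stream"},
    )


def interpret(status: int, body: str) -> dict:
    """Map the function's JSON response to a simple allow/deny result."""
    payload = json.loads(body)
    return {"allowed": status == 200, "labels": payload.get("labels", [])}


def check_image(url: str, image_bytes: bytes) -> dict:
    """Send the image and return the moderation verdict."""
    request = build_request(url, image_bytes)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return interpret(response.status, response.read().decode("utf-8"))
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx responses; the 403 body still carries the labels.
        return interpret(err.code, err.read().decode("utf-8"))


# Interpreting an unsafe response without making a network call:
print(interpret(403, '{"Unsafe": "Asset is Unsafe", "labels": ["Gambling"]}'))
```

An upload form would call `check_image` first and store the file only when `allowed` is true.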

Conclusion

With this solution, your application can block uploads of inappropriate or unwanted images. It is cost-effective, and it helps keep your site compliant.
