Abstract

We live in a time when automating processes is no longer a luxury, but a necessity for any team that wants to remain competitive. But automation has evolved. It is no longer just about executing repetitive tasks, but about creating solutions that understand context, learn over time, and make smarter decisions. In this blog, I want to show you how n8n (a visual and open-source automation tool) can become the foundation for building intelligent agents powered by AI.

We will explore what truly makes an agent “intelligent,” including how modern AI techniques allow agents to retrieve contextual information, classify tickets, or automatically respond based on prior knowledge.

I will also show you how to connect AI services and APIs from within a workflow in n8n, without the need to write thousands of lines of code. Everything will be illustrated with concrete examples and real-world applications that you can adapt to your own projects.

This blog is an invitation to go beyond basic bots and start building agents that truly add value. If you are exploring how to take automation to the next level, this journey will be of great interest to you.

Introduction

Automation has moved from being a trend to becoming a foundational pillar for development, operations, and business teams. But amid the rise of tools that promise to do more with less, a key question emerges: how can we build truly intelligent workflows that not only execute tasks but also understand context and act with purpose? This is where AI agents begin to stand out.

This blog was born from that very need. Over the past few months, I’ve been exploring how to take automation to the next level by combining two powerful elements: n8n (a visual automation platform) and the latest advances in artificial intelligence. This combination enables the design of agents capable of understanding, relating, and acting based on the content they receive—with practical applications in classification, search, personalized assistance, and more.

In the following sections, I’ll walk you through how these concepts work, how they connect with each other, and most importantly, how you can apply them yourself (without needing to be an expert in machine learning or advanced development). With clear explanations and real-world examples built with n8n, this blog aims to be a practical, approachable guide for anyone looking to go beyond basic automation and start building truly intelligent solutions.

What are AI Agents?

An AI agent is an autonomous system (software or hardware) that perceives its environment, processes information, and makes decisions to achieve specific goals. It does not merely react to basic events; it can analyze context, query external sources, and select the most appropriate action. Unlike traditional bots, intelligent agents integrate reasoning and sometimes memory, allowing them to adapt and make decisions based on accumulated experience (Wooldridge & Jennings, 1995; Cheng et al., 2024).

In the context of n8n, an AI agent translates into workflows that not only execute tasks but also interpret data using language models and act according to the situation, enabling more intelligent and flexible processes.

From Predictable to Intelligent: Traditional Bot vs. Context-Aware AI Agent

A traditional bot operates based on a set of rigid rules and predefined responses, which limits its ability to adapt to unforeseen situations or understand nuances in conversation. Its interaction is purely reactive: it responds only to specific commands or keywords, without considering the conversation’s history or the context in which the interaction occurs. In contrast, a context-aware artificial intelligence agent uses advanced natural language processing techniques and conversational memory to adapt its responses according to the flow of the conversation and the previous information provided by the user. This allows it to offer a much more personalized, relevant, and coherent experience, overcoming the limitations of traditional bots. Context-aware agents significantly improve user satisfaction, as they can understand intent and dynamically adapt to different conversational scenarios (Chen, Xu, & Wang, 2022).

Figure 1: Architecture of an intelligent agent with hybrid memory in n8n (Dąbrowski, 2024).
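Here is a minimal Python sketch of the contrast between a traditional bot and a context-aware agent. The keyword bot only looks at the current message, so a follow-up such as “And on weekends?” falls through to its fallback reply. The context-aware side does not call a model. It only shows how prior turns are packed into a chat-style message list (the role/content format used by OpenAI-style chat APIs) so that a language model can resolve the follow-up. The rules, replies, and function names are hypothetical and are not part of n8n.

```python
# Hypothetical canned replies for a rule-based bot.
RULES = {
    "refund": "To request a refund, open a ticket with your order number.",
    "hours": "Support is available 9:00-18:00, Monday to Friday.",
}
FALLBACK = "Sorry, I did not understand that."


def keyword_bot(message: str) -> str:
    """Traditional bot: match keywords in the current message only, with no history."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    return FALLBACK


def build_chat_messages(history: list[tuple[str, str]], message: str,
                        system_prompt: str) -> list[dict]:
    """Context-aware agent: pack prior turns plus the new message for a chat model."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": message})
    return messages


history = [("What are your support hours?", keyword_bot("What are your support hours?"))]
print(keyword_bot("And on weekends?"))  # -> Sorry, I did not understand that.
print(len(build_chat_messages(history, "And on weekends?", "You are a support agent.")))  # -> 4
```

The follow-up contains no keyword, so the rule-based bot cannot answer it. The message list still carries the earlier question about support hours, which is what lets a language model interpret “weekends” in context.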

How Does n8n Facilitate the Creation of Agents?

n8n is an open-source automation platform that enables users to design complex workflows visually, without the need to write large amounts of code. It simplifies the creation of intelligent agents by seamlessly integrating language models (such as OpenAI or Azure OpenAI), vector databases, conditional logic, and contextual memory storage.

With n8n, an agent can receive text input, process it using an AI model, retrieve relevant information from a vector store, and respond based on conversational history. All of this is configured through visual nodes within a workflow, making advanced solutions accessible even to those without a background in artificial intelligence.
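n8n's vector store nodes handle retrieval for you, but the underlying idea is easy to sketch. The Python example below ranks stored passages by cosine similarity to a query vector, then builds a prompt grounded in the best matches. The three-dimensional vectors are hand-written placeholders for the real embeddings an embedding model would produce. The passages and function names are hypothetical, and the sketch does not reflect how any particular vector store is implemented.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: list[tuple[str, list[float]]], k: int = 2):
    """Return the k (text, score) pairs most similar to the query vector."""
    scored = [(text, cosine_similarity(query, vector)) for text, vector in store]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def grounded_prompt(question: str, passages: list[tuple[str, float]]) -> str:
    """Build a prompt that asks the model to answer from the retrieved passages only."""
    context = "\n".join(f"- {text}" for text, _score in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Placeholder vectors (hypothetical); a real store holds model-generated embeddings.
STORE = [
    ("VPN access is requested through a ServiceNow ticket.", [0.9, 0.1, 0.0]),
    ("New hires receive a Slack account on day one.", [0.1, 0.9, 0.1]),
    ("Jira projects are created by the PMO.", [0.0, 0.2, 0.9]),
]
query_vector = [0.8, 0.2, 0.0]  # stands in for the embedded user question
print(grounded_prompt("How do I get VPN access?", top_k(query_vector, STORE, k=1)))
```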

Thanks to its modular and flexible design, n8n has become an ideal platform for building agents that not only automate tasks but also understand, learn, and act autonomously.

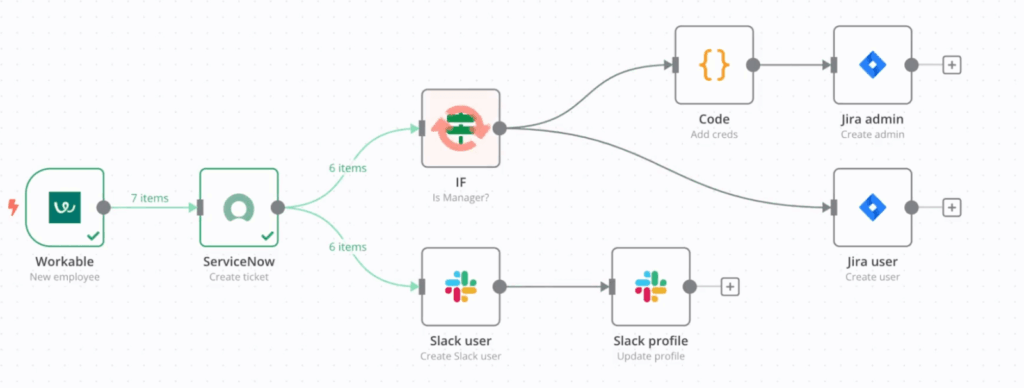

Figure 2: Automated workflow in n8n for onboarding and permission management using Slack, Jira, and ServiceNow (TextCortex, 2025).

Integrations with OpenAI, Python, External APIs, and Conditional Flows

One of n8n’s greatest strengths is its ability to connect with external tools and execute custom logic. Through native integrations, it can interact with OpenAI (or Azure OpenAI), enabling the inclusion of language models for tasks such as text generation, semantic classification, or automated responses.

Additionally, n8n supports custom code execution in JavaScript or Python through its Code node, which expands its capabilities and makes it highly adaptable to different use cases. Through the HTTP Request node, it can also call any external service that exposes a REST API, which makes it well suited to enterprise-level integrations.
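The sketch below shows the kind of logic a Code node might hold: it normalizes ticket descriptions and adds a derived flag. n8n passes data between nodes as a list of items, and each item wraps its fields in a `json` object, so the function accepts and returns that shape. It is written as plain Python and leaves out the Code node's own input/output syntax. The `description` and `is_urgent` fields and the keyword list are hypothetical.

```python
URGENT_WORDS = ("urgent", "outage", "down")  # hypothetical keyword list


def normalize_tickets(items: list[dict]) -> list[dict]:
    """Clean each item's description and add an is_urgent flag.

    Items follow n8n's shape: a list of dicts, each holding its fields under "json".
    """
    output = []
    for item in items:
        data = dict(item.get("json", {}))  # shallow copy so the input item is not mutated
        text = " ".join(str(data.get("description", "")).split())  # collapse whitespace
        data["description"] = text
        data["is_urgent"] = any(word in text.lower() for word in URGENT_WORDS)
        output.append({"json": data})
    return output


tickets = [
    {"json": {"id": 1, "description": "  Production   API is DOWN  "}},
    {"json": {"id": 2, "description": "Question about invoices"}},
]
for item in normalize_tickets(tickets):
    print(item["json"])
```

The substring check is deliberately naive. For example, “download” would match “down”. This is exactly the kind of judgment you might hand to the language model instead.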

Lastly, its conditional flow system allows for dynamic branching within workflows, evaluating logical conditions in real time and adjusting the agent’s behavior based on the context or incoming data.
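In n8n this kind of branching is usually configured visually with IF or Switch nodes, but the decision logic itself takes only a few lines to sketch. The example assumes an upstream classification step, such as the language model, has already produced a category and a confidence score. The category names, branch names, and the 0.75 threshold are hypothetical.

```python
# Hypothetical mapping from predicted category to workflow branch.
BRANCHES = {"access": "servicenow", "bug": "jira", "question": "auto_reply"}


def route_ticket(category: str, confidence: float, threshold: float = 0.75) -> str:
    """Choose a branch the way an IF/Switch pair might: confidence gate, then category."""
    if confidence < threshold:
        return "human_review"  # the classifier is unsure, so escalate to a person
    return BRANCHES.get(category, "human_review")  # unknown categories also escalate


print(route_ticket("bug", 0.92))  # -> jira
print(route_ticket("bug", 0.40))  # -> human_review
```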

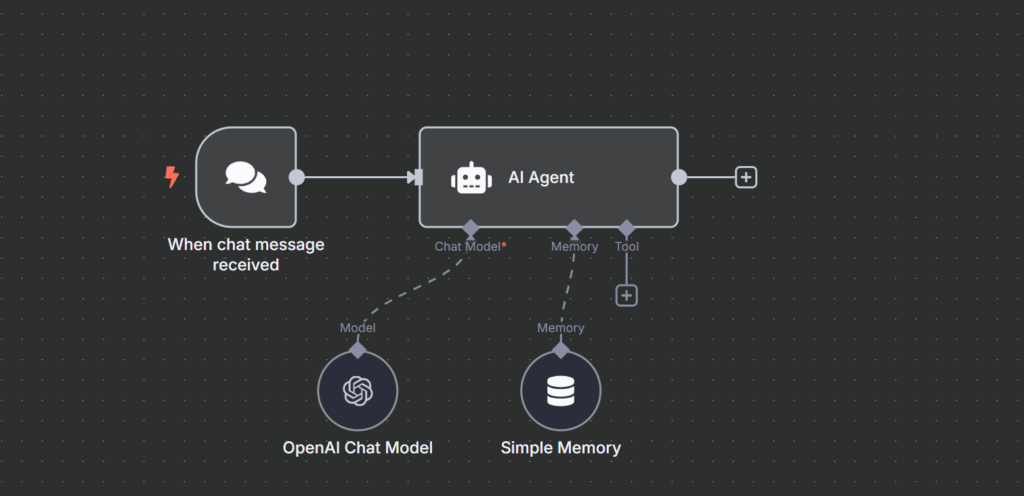

Figure 3: Basic conversational agent flow in n8n with language model and contextual memory.

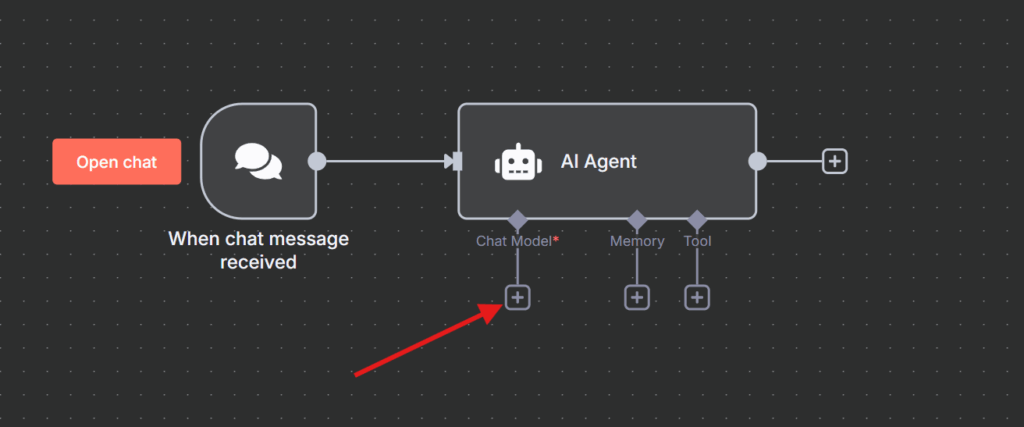

This basic flow in n8n represents the core logic of a conversational intelligent agent. The process begins when a message is received through the “When chat message received” node. That message is then passed to the AI Agent node, which serves as the central component of the system.

The agent is connected to two key elements: a language model (OpenAI Chat Model) that interprets and generates responses, and a simple memory that allows it to retain the context or conversation history. This combination enables the agent not only to produce relevant responses but also to remember previous information and maintain coherence across interactions.

This type of architecture demonstrates how, with just a few nodes, it is possible to build agents with contextual behavior and basic reasoning capabilities—ideal for customer support flows, internal assistants, or automated conversational interfaces.

Before the agent can interact with users, it needs to be connected to a language model. The following shows how to configure this integration in n8n.

Configuring the Language Model in the AI Agent

As developers at Perficient, we have the advantage of accessing OpenAI services through the Azure platform. This integration allows us to leverage advanced language models in a secure, scalable manner, fully aligned with corporate policies, and facilitates the development of artificial intelligence solutions tailored to our needs.

One of the fundamental steps in building an AI agent in n8n is to define the language model that will process and interpret user inputs. In this case, we use the Azure OpenAI Chat Model node, which lets the agent connect to language models deployed through the Azure OpenAI service.

When configuring this node, n8n will require an access credential, which is essential for authenticating the connection between n8n and your Azure OpenAI service. If you do not have one yet, you can create it from the Azure portal by following these steps:

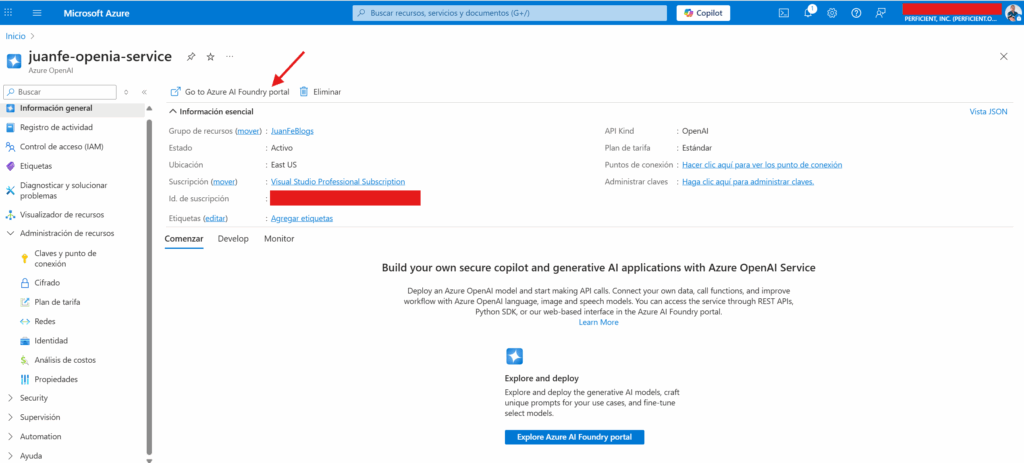

- Go to the Azure portal. If you do not yet have an Azure OpenAI resource, create one by selecting “Create a resource”, searching for “Azure OpenAI”, and following the setup wizard to configure the service with your subscription parameters. Then open the newly created resource.

- Go to https://ai.azure.com and sign in with your Azure account. Select the Azure OpenAI resource you created and, from the side menu, go to the “Deployments” section. There, create a new deployment by selecting the language model you want to use (for example, GPT-3.5 or GPT-4) and assigning it a unique deployment name. You can also open the portal directly from the resource with the “Go to Azure AI Foundry portal” option, as shown in Figure 4.

Figure 4: Access to the Azure AI Foundry portal from the Azure OpenAI resource.

- Once the deployment is created, go to “API Keys & Endpoints” to copy the access key (API Key) and the endpoint corresponding to your resource.

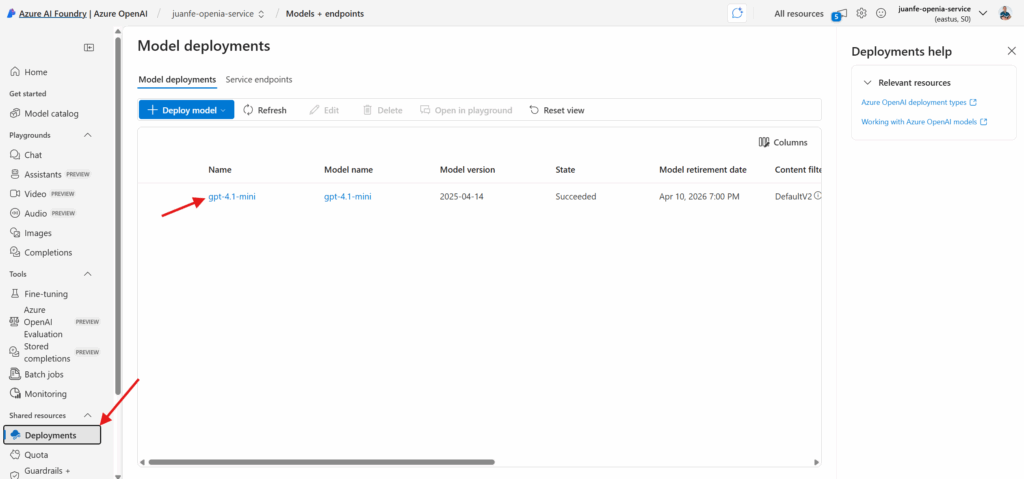

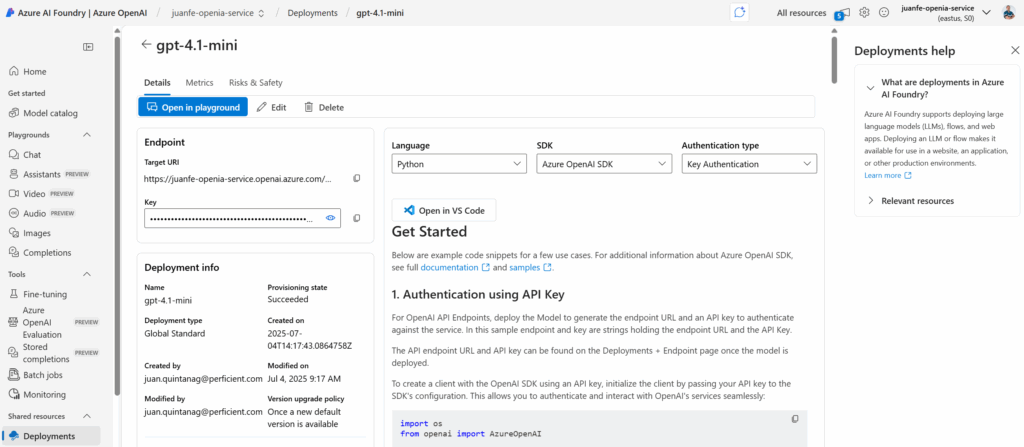

Figure 5: Visualization of model deployments in Azure AI Foundry.

Once the model deployment has been created in Azure AI Foundry, it is essential to access the deployment details in order to obtain the necessary information for integrating and consuming the model from external applications. This view provides the API endpoint, the access key (API Key), as well as other relevant technical details of the deployment, such as the assigned name, status, creation date, and available authentication parameters.

This information is crucial for correctly configuring the connection from tools like n8n, ensuring a secure and efficient integration with the language model deployed in Azure OpenAI.

Figure 6: Azure AI Foundry deployment and credentialing details.
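The sketch below shows how the endpoint, API key, and deployment name fit together. It uses Python's standard library to build, but not send, an Azure OpenAI chat completions request. It follows the REST pattern `{endpoint}/openai/deployments/{deployment}/chat/completions?api-version=...`, with the key passed in an `api-key` header. The resource URL, key, and deployment name are placeholders. The `api-version` value is only an example, so check the Azure OpenAI documentation for the versions your resource supports.

```python
import json
import urllib.request


def build_azure_chat_request(endpoint: str, api_key: str, deployment: str,
                             api_version: str, messages: list[dict]) -> urllib.request.Request:
    """Build (without sending) a chat completions request for an Azure OpenAI deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


request = build_azure_chat_request(
    endpoint="https://my-resource.openai.azure.com/",  # placeholder resource endpoint
    api_key="<your-api-key>",                          # placeholder; never hard-code a real key
    deployment="gpt-4.1-mini",                         # must match the deployment name exactly
    api_version="2024-10-21",                          # example version; check the Azure docs
    messages=[{"role": "user", "content": "Hello"}],
)
print(request.get_method(), request.full_url)
# Sending it would require urllib.request.urlopen(request) with a real endpoint and key.
```

Once the credential is configured, n8n makes the equivalent call on your behalf. A standalone sketch like this is mainly useful for checking the values outside n8n. Keep the real key out of source code, for example in an environment variable.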


Step 1:

In n8n, the credential is created from the node configuration by selecting “+ Create new credential” and entering the endpoint and API key of your resource (the deployment name is set later, in the node itself). First, however, you need to create the AI Agent:

Figure 7: Chat with AI Agent.

Step 2:

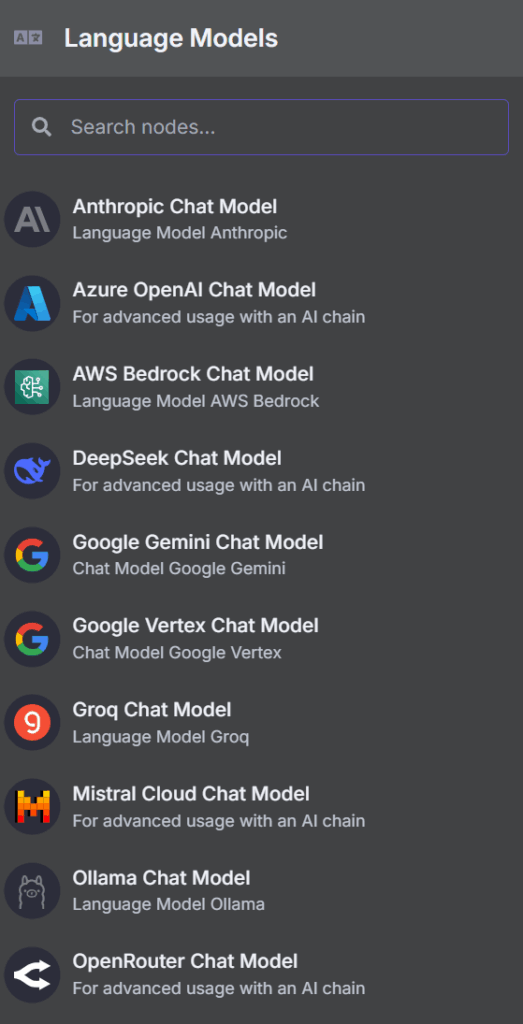

After the agent has been created, add its language model, as shown in the figure above. Within n8n, integration with language models is handled through a specialized node for each AI provider.

To connect our agent with Azure OpenAI services, it is necessary to select the Azure OpenAI Chat Model node in the Language Models section.

This node enables you to leverage the advanced capabilities of language models deployed in Azure, making it easy to build intelligent and customizable workflows for various corporate use cases. Its configuration is straightforward and, once properly authenticated, the agent will be ready to process requests using the selected model from Azure’s secure and scalable infrastructure.

Figure 8: Selection of the Azure OpenAI Chat Model node in n8n.

Step 3:

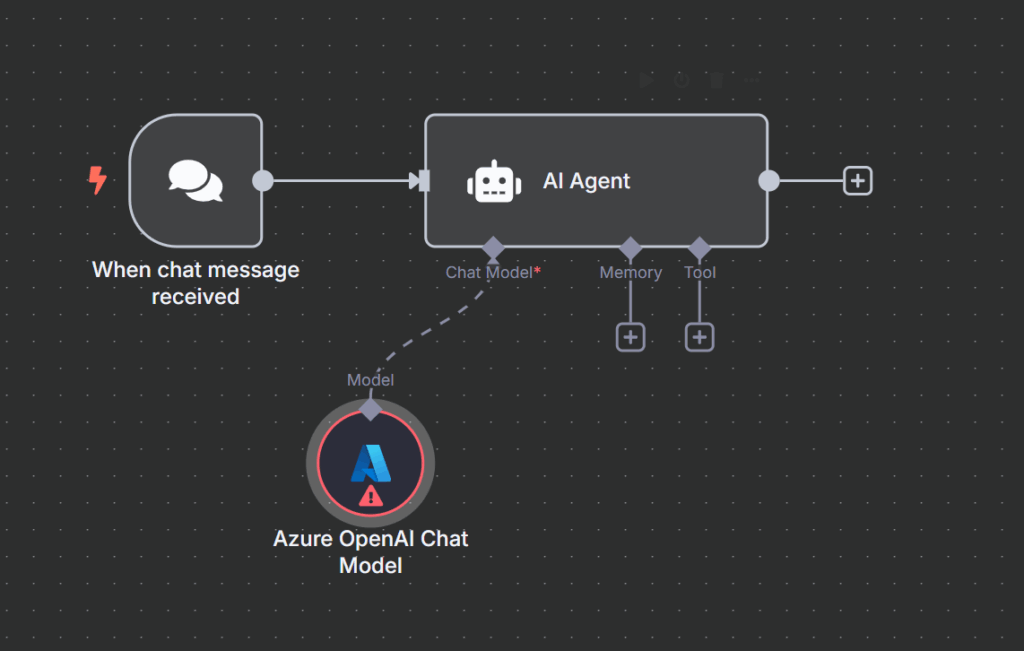

Once the Azure OpenAI Chat Model node has been selected, the next step is to integrate it into the n8n workflow as the primary language model for the AI agent.

The following image illustrates how this model is connected to the agent, allowing chat inputs to be processed intelligently by leveraging the capabilities of the model deployed in Azure. This integration forms the foundation for building more advanced and personalized conversational assistants in enterprise environments.

Figure 9: Connecting the Azure OpenAI Chat Model node to the AI agent in n8n.

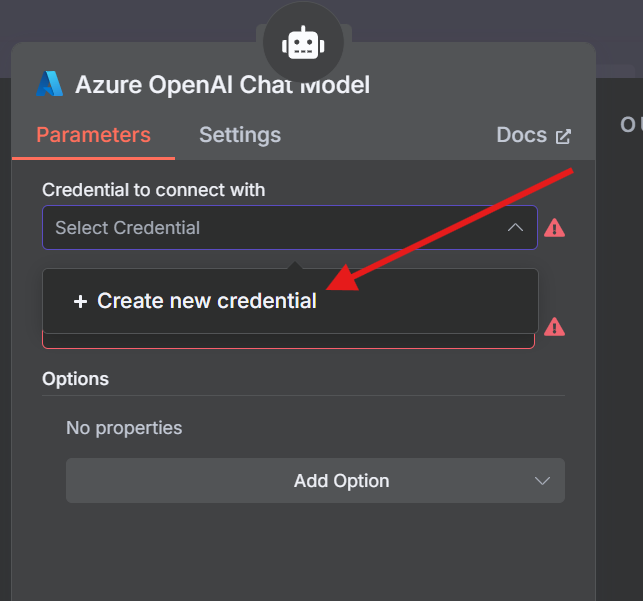

Step 4:

When configuring the Azure OpenAI Chat Model node in n8n, it is necessary to select the access credential that will allow the connection to the Azure service.

If a credential has not yet been created, you can do so directly from this panel by selecting the “Create new credential” option.

This step is essential to authenticate and authorize the use of language models deployed in Azure within your automation workflows.

Figure 10: Selecting the access credential in the Azure OpenAI Chat Model node.

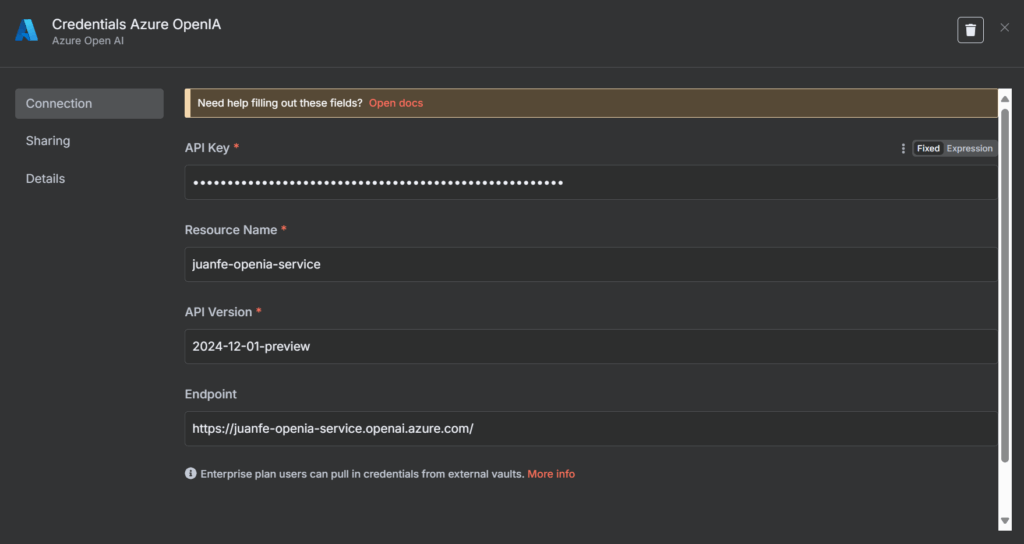

Step 5:

To complete the integration with Azure OpenAI in n8n, it is necessary to properly configure the access credentials.

The following screen shows the required fields, where you must enter the API Key, resource name, API version, and the corresponding endpoint.

This information ensures that the connection between n8n and Azure OpenAI is secure and functional, enabling the use of language models deployed in the Azure cloud.

Figure 11: Azure OpenAI credentials configuration in n8n.

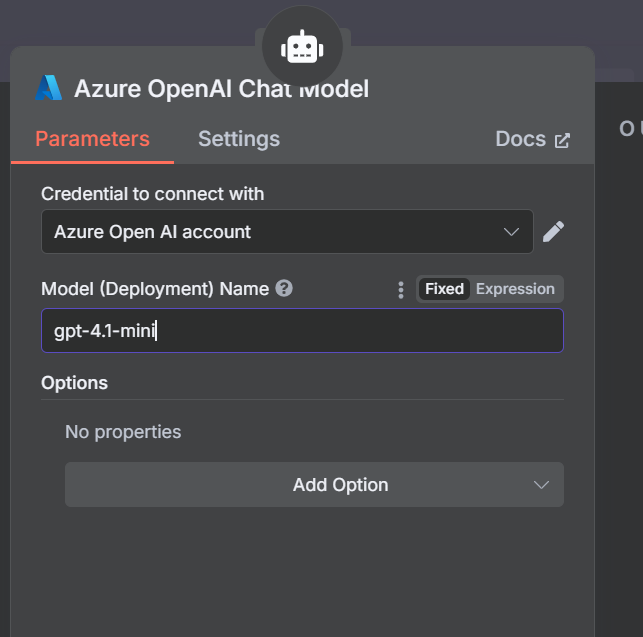

Step 6:

After creating and selecting the appropriate credentials, the next step is to configure the model deployment name in the Azure OpenAI Chat Model node.

In this field, enter the exact name assigned to the model deployment in Azure so that n8n uses the deployed instance to process natural language requests. Remember to select the model of the deployment you created in Azure OpenAI, in this case gpt-4.1-mini.

Figure 12: Configuration of the deployment name in the Azure OpenAI Chat Model node.
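The deployment name must match exactly, so a small check can catch typos such as “gtp” for “gpt” before they surface as failed requests. The sketch below compares the configured name exactly against a list of deployment names. When there is no match, it uses `difflib.get_close_matches` to suggest near misses. How you obtain the list of deployments (for example, by copying it from the Deployments page in Azure AI Foundry) is outside the sketch, and the function name is hypothetical.

```python
import difflib


def check_deployment_name(configured: str, available: list[str]) -> str:
    """Return the configured name if it exactly matches a deployment; otherwise raise."""
    if configured in available:
        return configured
    # Suggest up to three similar names to make typos easy to spot.
    suggestions = difflib.get_close_matches(configured, available, n=3, cutoff=0.6)
    hint = f" Did you mean: {', '.join(suggestions)}?" if suggestions else ""
    raise ValueError(f"Deployment '{configured}' not found.{hint}")


deployments = ["gpt-4.1-mini", "gpt-4o"]  # hypothetical list copied from the portal
print(check_deployment_name("gpt-4.1-mini", deployments))  # -> gpt-4.1-mini
try:
    check_deployment_name("gtp-4.1-mini", deployments)
except ValueError as err:
    print(err)  # -> Deployment 'gtp-4.1-mini' not found. Did you mean: gpt-4.1-mini?
```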

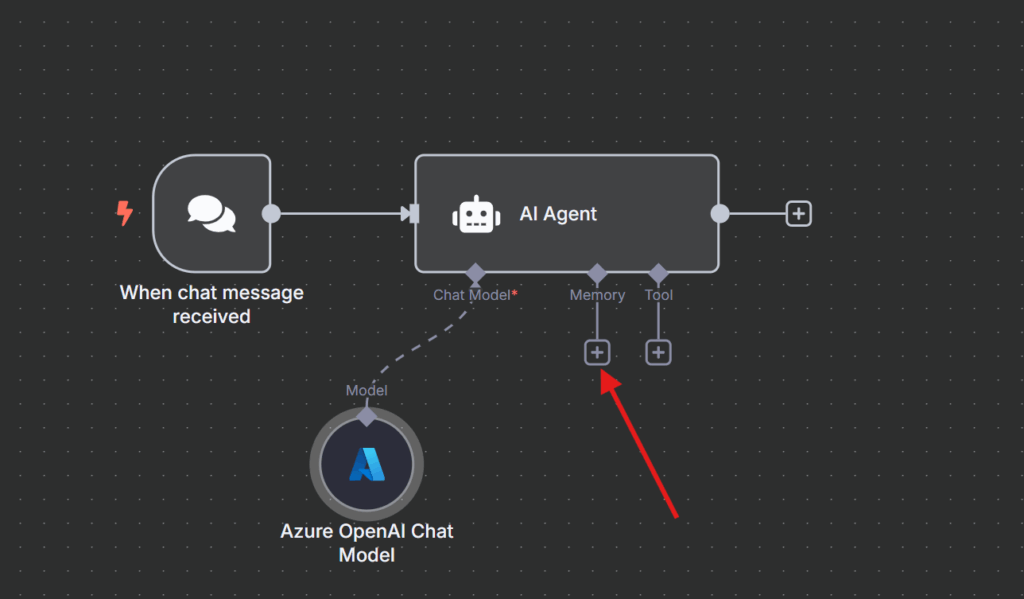

Step 7:

Once the language model is connected to the AI agent in n8n, you can enhance its capabilities by adding memory components.

Integrating a memory system allows the agent to retain relevant information from previous interactions, which is essential for building more intelligent and contextual conversational assistants.

In the following image, the highlighted area shows where a memory module can be added to enrich the agent’s behavior.

Figure 13: Connecting the memory component to the AI agent in n8n.

Step 8:

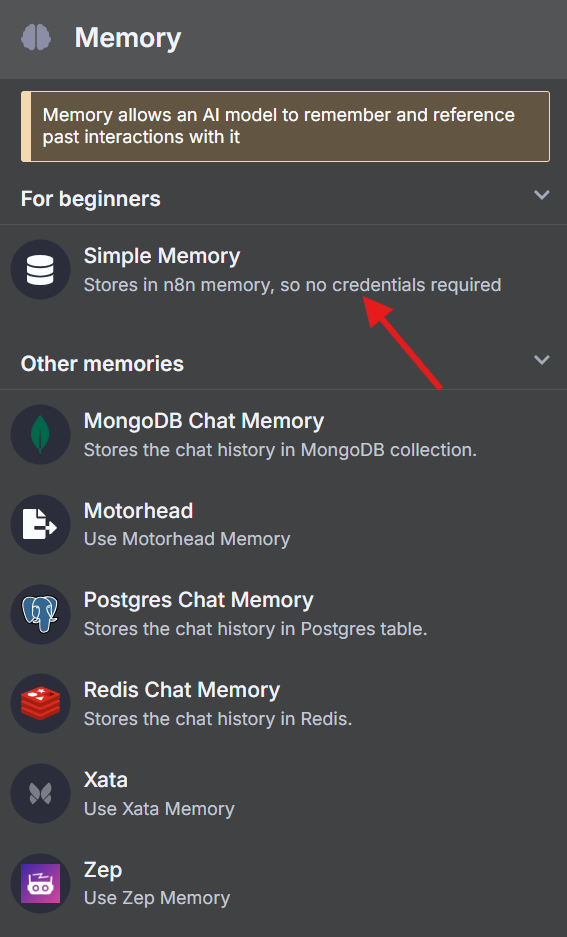

To start equipping the AI agent with memory capabilities, n8n offers different options for storing conversation history.

The simplest alternative is Simple Memory, which stores the data directly in n8n’s internal memory without requiring any additional credentials.

There are also more advanced options available, such as storing the history in external databases like MongoDB, Postgres, or Redis, which provide greater persistence and scalability depending on the project’s requirements.

Figure 14: Memory storage options for AI agents in n8n.

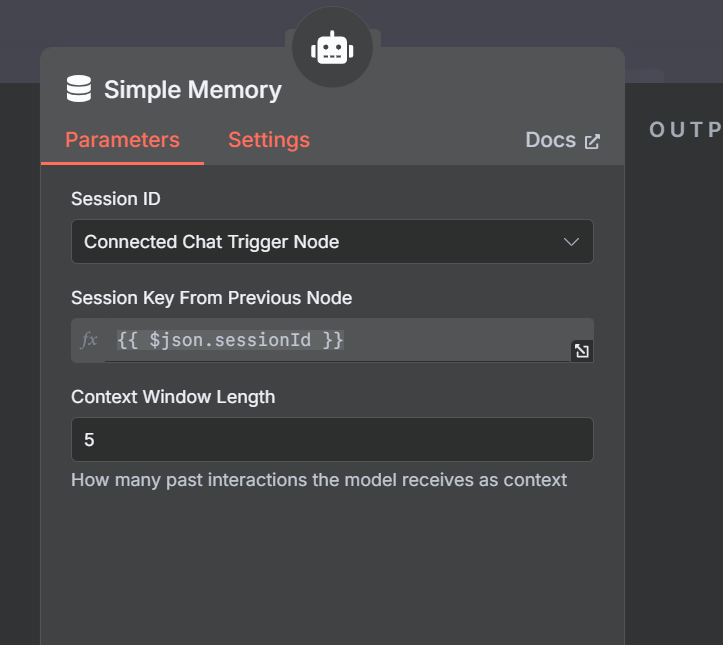

Step 9:

The configuration of the Simple Memory node in n8n allows you to easily define the parameters for managing the conversational memory of the AI agent.

In this interface, you can specify the session identifier, the field to be used as the conversation tracking key, and the number of previous interactions the model will consider as context.

These settings are essential for customizing information retention and improving continuity in the user’s conversational experience.

Figure 15: Configuration of the Simple Memory node in n8n.
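The following Python sketch models the idea behind a windowed conversation memory. Each session identifier gets its own buffer, and only the most recent `window` exchanges are kept as context. It is a simplified illustration, not n8n's Simple Memory implementation. Here `window` plays roughly the role of the “number of previous interactions” setting described above, and the class name is hypothetical. Like any in-process store, this sketch loses its contents when the process restarts, which is the gap the database-backed memory options are meant to fill.

```python
from collections import defaultdict, deque


class WindowMemory:
    """Per-session buffer that keeps only the last `window` exchanges (hypothetical)."""

    def __init__(self, window: int = 5):
        self.window = window
        # Each session gets its own deque; maxlen drops the oldest exchange automatically.
        self._sessions = defaultdict(lambda: deque(maxlen=self.window))

    def add_exchange(self, session_id: str, user_msg: str, assistant_msg: str) -> None:
        self._sessions[session_id].append((user_msg, assistant_msg))

    def context(self, session_id: str) -> list[tuple[str, str]]:
        # .get avoids creating an empty buffer for sessions we have never seen.
        return list(self._sessions.get(session_id, ()))


memory = WindowMemory(window=2)
for turn in range(1, 4):
    memory.add_exchange("user-42", f"question {turn}", f"answer {turn}")
print(memory.context("user-42"))  # only turns 2 and 3 remain
```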

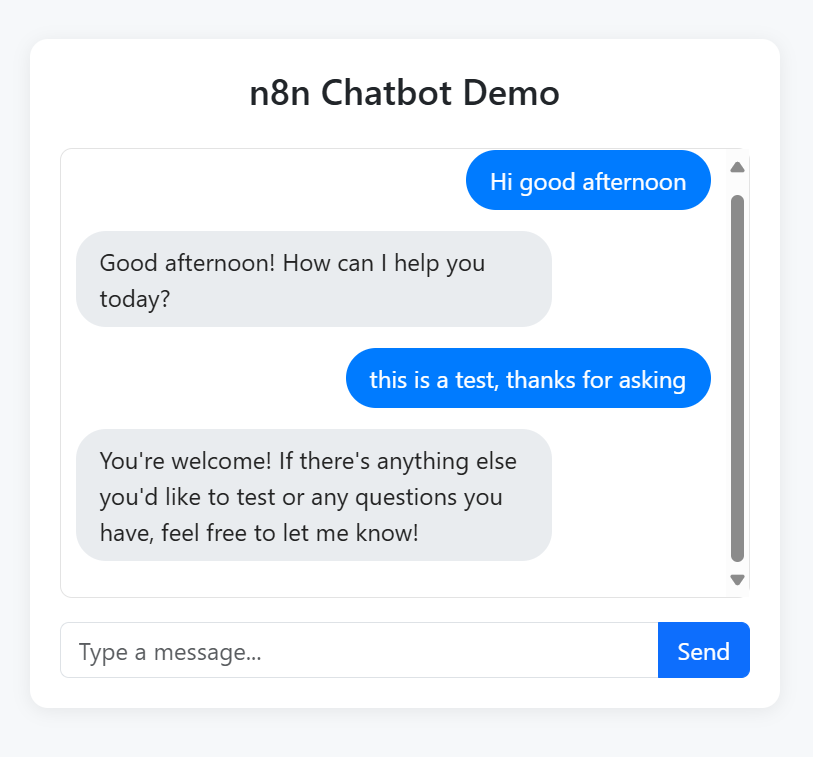

Step 10:

The following image shows the successful execution of a conversational workflow in n8n, where the AI agent responds to a chat message using a language model deployed on Azure and manages context through a memory component.

You can see how each node in the workflow performs its function and how the conversation history is stored, enabling the agent to provide more natural and contextual responses.

Figure 16: Execution of a conversational workflow with Azure OpenAI and memory in n8n.

Once a valid credential has been added and selected, the node is ready to send requests to the chosen language model (such as GPT-3.5 or GPT-4) and receive natural language responses, allowing the agent to continue the conversation or execute actions automatically.

With this integration, n8n becomes a powerful automation tool, enabling use cases such as conversational assistants, support bots, intelligent classification, and much more.

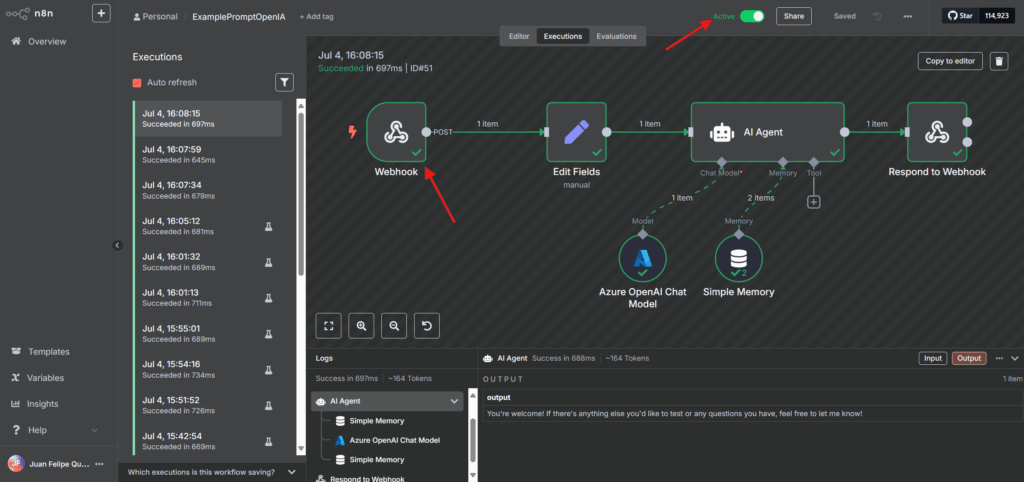

Integrating the AI Agent into a Web Application

Before integrating the AI agent into a web application, it is essential to have a ready-to-use n8n workflow that receives and responds to messages via a Webhook. Below is a typical workflow example where the main components for conversational processing are connected.

For the purposes of this blog, we will assume that both the Webhook node (which receives HTTP requests) and the Set/Edit Fields node (which prepares the data for the agent) have already been created. As shown in the following image, the workflow continues with the configuration of the language model (Azure OpenAI Chat Model), memory management (Simple Memory), processing via the AI Agent node, and finally, sending the response back to the user using the Respond to Webhook node.

Figure 17: n8n Workflow for AI Agent Integration with Webhook.
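The Set/Edit Fields step maps the raw webhook request onto the fields the agent and its memory expect. The sketch below mimics that mapping in Python and adds basic validation. It assumes the Webhook node exposes the parsed JSON request under `body` and that clients send `message` and `sessionId`. The output names `chatInput` and `sessionId` are also assumptions, so use whatever fields your AI Agent and Simple Memory nodes are configured to read.

```python
def map_webhook_to_agent_input(webhook_item: dict) -> dict:
    """Mimic the Set/Edit Fields step: keep only what the agent and memory need.

    Field names ("message", "sessionId", "chatInput") are assumptions for this sketch.
    """
    body = webhook_item.get("body") or {}
    message = str(body.get("message", "")).strip()
    session_id = str(body.get("sessionId", "")).strip()
    if not message:
        raise ValueError("Request body must include a non-empty 'message'.")
    if not session_id:
        raise ValueError("Request body must include a 'sessionId' so memory can track the chat.")
    return {"chatInput": message, "sessionId": session_id}


incoming = {"headers": {}, "query": {},
            "body": {"message": "  Reset my VPN password ", "sessionId": "abc-123"}}
print(map_webhook_to_agent_input(incoming))
```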

Before connecting the web interface to the AI agent deployed in n8n, it is essential to validate that the Webhook is working correctly. The following image shows how, using a tool like Postman, you can send an HTTP POST request to the Webhook endpoint, including the user’s message and the session identifier. As a result, the flow responds with the message generated by the agent, demonstrating that the end-to-end integration is functioning properly.

Figure 18: Testing the n8n Webhook with Postman.
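If you prefer a script to Postman, you can run the same check with Python's standard library. The sketch posts a JSON body containing the message and session identifier, then decodes the JSON reply. It also includes a small local stub server that echoes the message back, so it runs without an n8n instance. The payload field names, the `output` reply field, and the `/webhook/chat` path are assumptions. Match them to your own Webhook and Respond to Webhook configuration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def send_chat_message(webhook_url: str, message: str, session_id: str,
                      timeout: float = 30.0) -> dict:
    """POST a chat message to the webhook and return the decoded JSON reply."""
    payload = json.dumps({"message": message, "sessionId": session_id}).encode("utf-8")
    request = urllib.request.Request(
        webhook_url, data=payload, method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


class StubWebhook(BaseHTTPRequestHandler):
    """Local stand-in for the n8n webhook: echoes the message as a fake agent reply."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length))
        reply = json.dumps({"output": f"echo: {body['message']}",
                            "sessionId": body["sessionId"]}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)

    def log_message(self, format, *args):  # silence per-request logging
        pass


def start_stub_server() -> HTTPServer:
    """Serve the stub on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), StubWebhook)  # port 0 lets the OS pick a port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For a dry run, start the stub with `start_stub_server()` and call `send_chat_message` with `http://127.0.0.1:<port>/webhook/chat`. Against a real instance, pass your workflow's webhook URL instead.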


- Successful Test of the n8n Chatbot in a WebApp: The following image shows the functional integration between a chatbot built in n8n and a custom web interface using Bootstrap. By sending messages through the application, responses from the AI agent deployed on Azure OpenAI are displayed in real time, enabling a seamless and fully automated conversational experience directly from the browser.

Figure 19: n8n Chatbot Web Interface Working with Azure OpenAI.


Before consuming the agent from a web page or an external application, make sure the flow is activated in n8n. As shown in the image, the “Active” toggle must be enabled (green) so that the webhook keeps listening and can receive requests at any time. Also remember that, when deploying to a production environment, the webhook URL must use the public address of your n8n instance instead of “localhost”, so that the flow is reachable externally.

Figure 20: Activation and Execution Tracking of the Flow in n8n.
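By default, n8n serves test webhooks under `/webhook-test/`, and these respond only while the editor is listening for a test event. Production webhooks are served under `/webhook/` and respond only while the workflow is active. The small helper below builds either form from a base URL. It refuses a localhost base for production, which catches the “forgot to replace localhost” mistake mentioned above. The base URLs and the `chat-agent` path are examples, and the prefixes themselves can be changed in n8n's configuration.

```python
from urllib.parse import urlparse

LOCAL_HOSTS = {"localhost", "127.0.0.1", "::1"}


def webhook_url(base_url: str, path: str, production: bool = True) -> str:
    """Build an n8n webhook URL using the default /webhook/ and /webhook-test/ prefixes."""
    prefix = "webhook" if production else "webhook-test"
    url = f"{base_url.rstrip('/')}/{prefix}/{path.strip('/')}"
    if production and urlparse(url).hostname in LOCAL_HOSTS:
        raise ValueError("Production webhook points at localhost; use the public n8n address.")
    return url


print(webhook_url("http://localhost:5678", "chat-agent", production=False))
# -> http://localhost:5678/webhook-test/chat-agent
print(webhook_url("https://n8n.example.com/", "chat-agent"))
# -> https://n8n.example.com/webhook/chat-agent
```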


Conclusions

Intelligent automation is essential for today’s competitiveness

Automating tasks is no longer enough; integrating intelligent agents allows teams to go beyond simple repetition, adding the ability to understand context, learn from experience, and make informed decisions to deliver real value to business processes.

Intelligent agents surpass the limitations of traditional bots

Unlike classic bots that respond only to rigid rules, contextual agents can analyze the flow of conversation, retain memory, adapt to changing situations, and offer personalized and coherent responses, significantly improving user satisfaction.

n8n democratizes the creation of intelligent agents

Thanks to its low-code/no-code approach and more than 400 integrations, n8n enables both technical and non-technical users to design complex workflows with artificial intelligence, without needing to be experts in advanced programming or machine learning.

The integration of language models and memory in n8n enhances conversational workflows

Easy connection to advanced language models (such as Azure OpenAI) and the ability to add memory components make n8n a flexible and scalable platform for building sophisticated and customizable conversational agents.

Proper activation and deployment of workflows ensures the availability of AI agents

To consume agents from external applications, it is essential to activate workflows in n8n and use the appropriate production endpoints, thus ensuring continuous, secure, and scalable responses from intelligent agents in real-world scenarios.

References

- Wooldridge, M., & Jennings, N. R. (1995). Intelligent agents: Theory and practice. The Knowledge Engineering Review, 10(2), 115–152.

- Cheng, Y., Zhang, C., Zhang, Z., Meng, X., Hong, S., Li, W., Zhao, J. (2024). Exploring Large Language Model based Intelligent Agents: Definitions, Methods, and Prospects. arXiv.

- Chen, C., Xu, Y., & Wang, Z. (2022). Context-Aware Conversational Agents: A Review of Methods and Applications. IEEE Transactions on Artificial Intelligence, 3(4), 410–425.

- Zamani, H., Sadoughi, N., & Croft, W. B. (2023). Intelligent Workflow Automation: Integrating Memory-Augmented Agents in Business Processes. Journal of Artificial Intelligence Research, 76, 325–348.

- Dąbrowski, D. (2024). Day 67 of 100 Days Agentic Engineer Challenge: n8n Hybrid Long-Term Memory. Medium. https://damiandabrowski.medium.com/day-67-of-100-days-agentic-engineer-challenge-n8n-hybrid-long-term-memory-ce55694d8447

- n8n. (2024). Build your first AI Agent – powered by Google Gemini with memory. https://n8n.io/workflows/4941-build-your-first-ai-agent-powered-by-google-gemini-with-memory

- Luo, Y., Liang, P., Wang, C., Shahin, M., & Zhan, J. (2021). Characteristics and challenges of low code development: The practitioners’ perspective. arXiv. http://dx.doi.org/10.48550/arXiv.2107.07482

- TextCortex. (2025). N8N Review: Features, pricing & use cases. Cybernews. https://cybernews.com/ai-tools/n8n-review/
