Introduction

When you build event-driven systems in the cloud, it’s important to react to changes at the storage level, such as files being added, modified, or deleted. Google Cloud Platform (GCP) makes this straightforward by letting Cloud Storage publish directly to Pub/Sub, so you can send structured, real-time notifications whenever something happens inside a bucket.

This configuration is designed specifically to catch deletion events. When a file is deleted from a Cloud Storage (GCS) bucket, a message is published to a Pub/Sub topic. That topic becomes the central connection point, delivering notifications to any listening systems, such as a Cloud Run service, an external API, or another microservice. These systems can then react by cleaning up related data, recording the event, or raising alerts.

The architecture also covers critical backend needs. It uses IAM roles to control who can publish and consume messages, applies retry policies to ride out transient failures, and routes messages that still can’t be delivered after repeated attempts to a dead-letter queue (DLQ). Because it relies on services built into GCP, the system stays loosely coupled and resilient: you can add or remove downstream services without changing the bucket’s configuration.

This pattern is a dependable, adaptable way to enforce cleanup rules, track changes for auditing, or trigger actions in real time. In this article, we’ll explain the core ideas, walk through the setup, and discuss the design choices that keep a Pub/Sub-based notification system like this running smoothly.

Why Use Pub/Sub for Storage Notifications?

Pub/Sub makes it easy to respond to changes in Cloud Storage, such as a file being deleted, without tightly coupling your services. Instead of wiring each service directly to the storage bucket, you publish events to Pub/Sub and let each consumer subscribe independently. This way, logging tools, data processors, and alerting systems can all work on their own without interfering with one another. Best of all, it’s reliable: Pub/Sub delivers each message at least once and retains unacknowledged messages (for 7 days by default), so events aren’t lost if a consumer fails. And because you pay for the volume of messages published and delivered rather than for provisioned capacity, you don’t pay for idle resources. This combination of flexibility, real-time response, and room to evolve makes the pattern a good fit for cloud-native systems that need to adapt and stay resilient.

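Architecture Overview

The flow has four parts: a Cloud Storage bucket with a notification configuration for OBJECT_DELETE events; a Pub/Sub topic that receives those events; one or more subscriptions on that topic; and the downstream consumers (such as Cloud Run services, Cloud Functions, or Dataflow jobs) that process the messages, with a dead-letter topic holding messages that repeatedly fail. The steps below build the bucket, topic, subscription, and notification.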

Step 1: Create a Cloud Storage Bucket

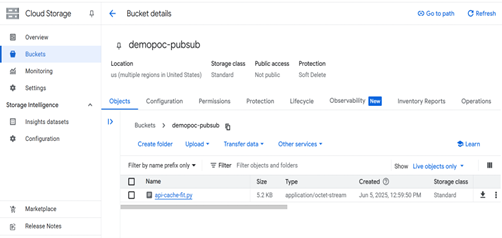

If you don’t already have a bucket, go to the Cloud Storage console, click ‘Create Bucket’, and follow these steps:

– Name: Choose a globally unique bucket name (e.g., my-delete-audit-bucket, the name used in Step 5)

– Location: Pick a region or multi-region

– Default settings: You can leave the rest unchanged for this demo
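If you prefer code to the console, the bucket can also be created with the google-cloud-storage Python client. This is a minimal sketch under a few assumptions: the client library is installed and Application Default Credentials are configured, the name check covers only a simplified subset of the official bucket naming rules, and the "US" default location is an arbitrary choice.

```python
import re

# Simplified subset of the Cloud Storage bucket naming rules (not exhaustive):
# 3-63 characters; lowercase letters, digits, dashes, underscores, and dots;
# must start and end with a letter or digit; must not start with "goog".
_BUCKET_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if `name` passes the simplified naming checks above."""
    return bool(_BUCKET_NAME_RE.match(name)) and not name.startswith("goog")


def create_bucket(name: str, location: str = "US"):
    """Create a bucket with default settings (requires google-cloud-storage)."""
    if not is_plausible_bucket_name(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    # Imported lazily so the name check works without the SDK installed.
    from google.cloud import storage

    client = storage.Client()  # uses Application Default Credentials and the default project
    return client.create_bucket(name, location=location)
```

Calling create_bucket("my-delete-audit-bucket") creates the bucket used in the rest of this guide; global uniqueness can only be confirmed by the API itself, which rejects names that are already taken.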

Step 2: Create a Pub/Sub Topic

Go to Pub/Sub in the Cloud Console and:

1. Click ‘Create Topic’

2. Name it something like gcs-object-delete-topic (the name used in the later steps)

3. Leave the rest as defaults

4. Click Create
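The same topic can be created programmatically. This sketch assumes the google-cloud-pubsub Python client and a hypothetical project ID; it mainly shows that the API expects a fully qualified topic name rather than the short ID typed into the console.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Fully qualified topic name, the format the Pub/Sub API expects."""
    return f"projects/{project_id}/topics/{topic_id}"


def create_topic(project_id: str, topic_id: str = "gcs-object-delete-topic"):
    """Create the topic with default settings (requires google-cloud-pubsub)."""
    # Imported lazily so topic_path() works without the SDK installed.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    # publisher.topic_path(project_id, topic_id) builds the same string as topic_path() above.
    return publisher.create_topic(request={"name": topic_path(project_id, topic_id)})
```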

Step 3: Create a Pub/Sub Subscription (Pull-based)

1. Click the topic gcs-object-delete-topic

2. Click ‘Create Subscription’

3. Choose a pull subscription

4. Name it gcs-delete-sub

5. Leave other options as default

6. Click Create
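The retry rules and dead-letter queue mentioned in the introduction are properties of the subscription. The sketch below builds a create-subscription request that sets both, assuming the google-cloud-pubsub client (which should accept plain dicts and datetime.timedelta values for these fields) and a hypothetical dead-letter topic named gcs-delete-dlq. Pub/Sub requires max_delivery_attempts to be between 5 and 100 and caps the retry backoff at 600 seconds. For dead-lettering to work, the Pub/Sub service agent (service-PROJECT_NUMBER@gcp-sa-pubsub.iam.gserviceaccount.com) also needs the Publisher role on the dead-letter topic and the Subscriber role on this subscription.

```python
import datetime


def build_subscription_request(project_id, topic_id, subscription_id,
                               dead_letter_topic_id=None, max_delivery_attempts=5):
    """Build a CreateSubscription request for a pull subscription."""
    if not 5 <= max_delivery_attempts <= 100:
        raise ValueError("max_delivery_attempts must be between 5 and 100")
    request = {
        "name": f"projects/{project_id}/subscriptions/{subscription_id}",
        "topic": f"projects/{project_id}/topics/{topic_id}",
        "ack_deadline_seconds": 60,
        # Exponential backoff between redeliveries of nacked or expired messages.
        "retry_policy": {
            "minimum_backoff": datetime.timedelta(seconds=10),
            "maximum_backoff": datetime.timedelta(seconds=600),
        },
    }
    if dead_letter_topic_id:
        # After this many failed deliveries, the message moves to the dead-letter topic.
        request["dead_letter_policy"] = {
            "dead_letter_topic": f"projects/{project_id}/topics/{dead_letter_topic_id}",
            "max_delivery_attempts": max_delivery_attempts,
        }
    return request


def create_subscription(request):
    """Send the request (requires google-cloud-pubsub)."""
    from google.cloud import pubsub_v1

    with pubsub_v1.SubscriberClient() as subscriber:
        return subscriber.create_subscription(request=request)
```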

Step 4: Grant the Cloud Storage Service Agent Permission to Publish to the Topic

Go to the IAM permissions for your topic:

1. In the Pub/Sub console, go to your topic

2. Click ‘Permissions’

3. Click ‘Grant Access’

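4. Under New principals, add the Cloud Storage service agent: service-<project-number-sample>@gs-project-accounts.iam.gserviceaccount.com (replace the placeholder with your project number)

5. Assign it the role: Pub/Sub Publisher (roles/pubsub.publisher)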

6. Click Save
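The console steps above amount to a read-modify-write of the topic’s IAM policy. This sketch assumes the google-cloud-pubsub client and a hypothetical project number; if you don’t know the service agent’s address, the google-cloud-storage client’s Client.get_service_account_email() returns it.

```python
def gcs_service_agent(project_number: str) -> str:
    """Email address of a project's Cloud Storage service agent."""
    return f"service-{project_number}@gs-project-accounts.iam.gserviceaccount.com"


def grant_publisher(project_id: str, topic_id: str, project_number: str) -> None:
    """Add the service agent to roles/pubsub.publisher on the topic (requires google-cloud-pubsub)."""
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    topic = publisher.topic_path(project_id, topic_id)
    member = f"serviceAccount:{gcs_service_agent(project_number)}"

    # Read the current policy; its etag makes set_iam_policy fail on concurrent edits.
    policy = publisher.get_iam_policy(request={"resource": topic})
    for binding in policy.bindings:
        if binding.role == "roles/pubsub.publisher":
            if member not in binding.members:
                binding.members.append(member)
            break
    else:
        # No publisher binding yet: create one.
        policy.bindings.add(role="roles/pubsub.publisher", members=[member])
    publisher.set_iam_policy(request={"resource": topic, "policy": policy})
```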

Step 5: Connect Cloud Storage to Pub/Sub via Cloud Shell

Open a Cloud Shell terminal and run:

```shell
gcloud storage buckets notifications create gs://my-delete-audit-bucket \
  --topic=gcs-object-delete-topic \
  --event-types=OBJECT_DELETE \
  --payload-format=json
```

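Explanation of the gcloud command:

gs://my-delete-audit-bucket: Your storage bucket

--topic=gcs-object-delete-topic: The Pub/Sub topic that receives the notifications

--event-types=OBJECT_DELETE: Publishes only when an object is permanently deleted, which includes being overwritten or removed by a lifecycle rule (on buckets with Object Versioning enabled, an object becoming noncurrent fires OBJECT_ARCHIVE instead)

--payload-format=json: Sends the object’s metadata as a JSON message body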
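The same notification configuration can be created from Python with google-cloud-storage’s Bucket.notification(). This is a sketch under that assumption; note that this client call only creates the notification configuration, so the topic and the publisher permission from Step 4 must already exist. The optional prefix filter is an illustrative extra.

```python
def delete_notification_kwargs(topic_id: str, topic_project: str, prefix=None) -> dict:
    """Arguments for Bucket.notification() equivalent to the gcloud flags."""
    kwargs = {
        "topic_name": topic_id,
        "topic_project": topic_project,
        "event_types": ["OBJECT_DELETE"],  # same value as notification.OBJECT_DELETE_EVENT_TYPE
        "payload_format": "JSON_API_V1",   # what --payload-format=json selects
    }
    if prefix:
        kwargs["blob_name_prefix"] = prefix  # optional: only objects under this prefix
    return kwargs


def create_delete_notification(bucket_name, topic_id, topic_project, prefix=None):
    """Attach the notification to the bucket (requires google-cloud-storage)."""
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    notification = bucket.notification(**delete_notification_kwargs(topic_id, topic_project, prefix))
    notification.create()
    return notification
```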

Step 6: Test the Notification System

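Upload a test file (for example, test.txt) to my-delete-audit-bucket and delete it. Then pull the message from the gcs-delete-sub subscription in the Pub/Sub console.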

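Expected message payload (abridged; the actual message body contains the object’s full metadata, and Cloud Storage also sets message attributes such as eventType=OBJECT_DELETE):

```json
{
  "kind": "storage#object",
  "bucket": "my-delete-audit-bucket",
  "name": "test.txt",
  "timeDeleted": "2025-06-05T14:32:29.123Z"
}
```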
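The test can also be scripted. The sketch below assumes the google-cloud-storage and google-cloud-pubsub clients and the bucket and subscription names from the earlier steps; parse_notification and the DeletionEvent type are hypothetical helpers that read the payload shown above, preferring the bucketId, objectId, eventType, and eventTime attributes that Cloud Storage attaches to each message.

```python
import json
from dataclasses import dataclass


@dataclass
class DeletionEvent:
    bucket: str
    name: str
    event_type: str
    event_time: str


def parse_notification(data: bytes, attributes: dict) -> DeletionEvent:
    """Turn a JSON_API_V1 notification (body + attributes) into a DeletionEvent."""
    payload = json.loads(data.decode("utf-8"))
    return DeletionEvent(
        bucket=attributes.get("bucketId", payload.get("bucket", "")),
        name=attributes.get("objectId", payload.get("name", "")),
        event_type=attributes.get("eventType", ""),
        event_time=attributes.get("eventTime", payload.get("timeDeleted", "")),
    )


def delete_and_pull(project_id, bucket_name="my-delete-audit-bucket",
                    subscription_id="gcs-delete-sub", object_name="test.txt"):
    """Upload and delete a throwaway object, then pull its notification (needs the GCP SDKs)."""
    from google.cloud import pubsub_v1, storage

    blob = storage.Client(project=project_id).bucket(bucket_name).blob(object_name)
    blob.upload_from_string("hello")  # no notification: only OBJECT_DELETE is configured
    blob.delete()                     # fires OBJECT_DELETE

    with pubsub_v1.SubscriberClient() as subscriber:
        sub_path = subscriber.subscription_path(project_id, subscription_id)
        # Synchronous pull; delivery can lag by a few seconds, so an empty result may need a retry.
        response = subscriber.pull(request={"subscription": sub_path, "max_messages": 10}, timeout=30)
        received = list(response.received_messages)
        events = [parse_notification(r.message.data, dict(r.message.attributes)) for r in received]
        if received:
            subscriber.acknowledge(request={"subscription": sub_path,
                                            "ack_ids": [r.ack_id for r in received]})
        return events
```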

Sample Use Cases

1. Audit Trail for Object Deletion

Use Case: Keep track of every deletion for compliance or internal audits.

How it works: When a file is deleted, the bucket notification publishes an event to Pub/Sub. A Cloud Function or Dataflow job subscribes to the topic and writes the event metadata (such as the file name, bucket, and timestamp) to BigQuery. The notification does not identify who deleted the object; if you need the user, correlate the event with Cloud Audit Logs.

Why it matters: Maintains a durable audit trail that supports compliance (such as HIPAA and SOC 2), security audits, and internal investigations.
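A minimal sketch of the audit-trail consumer, assuming the google-cloud-bigquery client and a hypothetical table (my-project.audit.gcs_deletions) whose columns match the row below. Because Pub/Sub delivers at least once, the sketch passes row_ids to insert_rows_json, which gives best-effort deduplication of redelivered events.

```python
import json
from datetime import datetime, timezone


def to_audit_row(data: bytes, attributes: dict) -> dict:
    """Flatten a deletion notification into a row for a (hypothetical) audit table."""
    payload = json.loads(data.decode("utf-8"))
    return {
        "bucket": attributes.get("bucketId", payload.get("bucket")),
        "object_name": attributes.get("objectId", payload.get("name")),
        "generation": attributes.get("objectGeneration", payload.get("generation")),
        "event_time": attributes.get("eventTime"),
        # The JSON API reports object size as a string.
        "size_bytes": int(payload["size"]) if "size" in payload else None,
        # The notification has no "who"; join with Cloud Audit Logs if you need the principal.
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }


def write_audit_rows(table_id: str, rows: list) -> None:
    """Stream rows into BigQuery, e.g. table_id="my-project.audit.gcs_deletions" (hypothetical)."""
    from google.cloud import bigquery

    # Same object + generation => same insert ID, so duplicate deliveries are usually dropped.
    row_ids = [f"{r['bucket']}/{r['object_name']}#{r['generation']}" for r in rows]
    errors = bigquery.Client().insert_rows_json(table_id, rows, row_ids=row_ids)
    if errors:
        raise RuntimeError(f"BigQuery insert failed: {errors}")
```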

2. Enforcement of Data Retention

Use Case: Catch accidental deletions and make sure data is kept for at least a minimum period.

How it works: When an object is deleted, the system checks whether the deletion was allowed based on the object’s name, metadata, and other factors. If it finds a violation, it logs the incident or restores the object from a backup copy (for example, one stored in the Nearline or Archive storage class).

Why it matters: Protects data from loss due to accidental or unauthorized deletion, and makes sure data lifecycle policies are followed.
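The retention check can be sketched as a pure function over the notification payload. This assumes the payload carries the object’s timeCreated field (part of the JSON API object metadata), a hypothetical protected prefix (records/), and a hypothetical backup bucket for restores. Because the notification arrives after the object is gone, this approach can only detect and repair; Cloud Storage retention policies or object holds are what block a deletion outright.

```python
from datetime import datetime, timedelta


def _parse_rfc3339(ts: str) -> datetime:
    # Cloud Storage timestamps look like 2025-06-05T14:32:29.123Z
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def violates_retention(payload: dict, deleted_at: str, min_age: timedelta,
                       protected_prefixes=("records/",)) -> bool:
    """True if an object under a protected prefix was deleted before reaching min_age."""
    name = payload.get("name", "")
    if not name.startswith(tuple(protected_prefixes)):
        return False
    created = _parse_rfc3339(payload["timeCreated"])
    return _parse_rfc3339(deleted_at) - created < min_age


def restore_from_backup(backup_bucket: str, primary_bucket: str, name: str):
    """Copy the object back from a (hypothetical) backup bucket (requires google-cloud-storage)."""
    from google.cloud import storage

    client = storage.Client()
    source = client.bucket(backup_bucket)
    return source.copy_blob(source.blob(name), client.bucket(primary_bucket), new_name=name)
```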

3. Trigger Downstream Cleanup Jobs

Use Case: When an object is removed, related data in other systems should be cleaned up automatically.

How it works: When you delete a GCS object, the Pub/Sub message triggers a Cloud Function or Dataflow pipeline. That job deletes the linked records in BigQuery or Firestore, or invalidates cache/CDN entries.
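A sketch of the cleanup job, assuming the google-cloud-firestore and google-cloud-bigquery clients plus a hypothetical data layout: a Firestore collection gcs_objects keyed by bucket and object name, and a BigQuery table catalog.files. Since a message may be delivered more than once, both operations are chosen to be idempotent: deleting a missing Firestore document is not an error, and a repeated SQL DELETE simply matches no rows.

```python
def cleanup_targets(bucket: str, name: str) -> dict:
    """Derive the (hypothetical) keys of data linked to a deleted object."""
    # Firestore document IDs cannot contain "/", so flatten the object path.
    doc_id = f"{bucket}__{name}".replace("/", "__")
    return {
        "firestore_doc": ("gcs_objects", doc_id),
        "cdn_path": f"/{name}",  # for a cache-invalidation step not shown here
    }


def run_cleanup(project_id: str, bucket: str, name: str) -> None:
    """Delete linked records (requires google-cloud-firestore and google-cloud-bigquery)."""
    from google.cloud import bigquery, firestore

    collection, doc_id = cleanup_targets(bucket, name)["firestore_doc"]
    firestore.Client(project=project_id).collection(collection).document(doc_id).delete()

    bq = bigquery.Client(project=project_id)
    job_config = bigquery.QueryJobConfig(query_parameters=[
        bigquery.ScalarQueryParameter("bucket", "STRING", bucket),
        bigquery.ScalarQueryParameter("name", "STRING", name),
    ])
    # Parameterized to avoid SQL injection through object names.
    sql = f"DELETE FROM `{project_id}.catalog.files` WHERE bucket = @bucket AND name = @name"
    bq.query(sql, job_config=job_config).result()
```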

Why it matters: Keeps data systems consistent with one another and prevents orphaned records and stale metadata.

4. Alerts in Real Time

Use Case: Notify teams or monitoring systems when sensitive or unexpected deletions happen.

How it works: A Cloud Function subscribed to the topic inspects each event and raises an alert if the deletion meets certain criteria, such as a specific folder or file type.

Why it matters: Enables a real-time response and improves visibility into high-risk operations.
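The alerting criteria can be expressed as a small rule function. In this sketch, the watched folders and file extensions are hypothetical examples, and send_alert posts to a hypothetical chat or incident webhook using only the standard library.

```python
import json
import urllib.request


def should_alert(name: str, watched_prefixes=("finance/", "pii/"),
                 watched_suffixes=(".db", ".bak")) -> bool:
    """True if the deleted object is in a watched folder or has a watched extension."""
    return name.startswith(watched_prefixes) or name.endswith(watched_suffixes)


def send_alert(webhook_url: str, bucket: str, name: str, event_time: str) -> None:
    """POST a JSON alert to a (hypothetical) webhook; raises on HTTP errors."""
    body = json.dumps({"text": f"Deleted gs://{bucket}/{name} at {event_time}"}).encode("utf-8")
    req = urllib.request.Request(webhook_url, data=body,
                                 headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()
```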

Result:

By using Pub/Sub notifications for Cloud Storage object deletions, we built a modular, fault-tolerant, real-time event-driven pipeline. When an object is deleted from the specified GCS bucket, a notification is published to a Pub/Sub topic, which delivers the message to one or more downstream consumers through their subscriptions.
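The fault tolerance comes from how consumers acknowledge messages. This sketch of a long-running streaming-pull consumer assumes the google-cloud-pubsub client and a hypothetical process callback: it acks events it handled (or can never handle), and nacks on downstream failures so that Pub/Sub redelivers them with the subscription’s retry backoff and, after enough failures, moves them to the dead-letter topic if one is configured.

```python
import concurrent.futures
import json


def handle(data: bytes, attributes: dict, process) -> bool:
    """Process one notification; return True to ack, False to nack (triggers a retry)."""
    if attributes.get("eventType") != "OBJECT_DELETE":
        return True  # not a deletion event: ack so it is not redelivered
    try:
        payload = json.loads(data.decode("utf-8"))
    except ValueError:
        return True  # malformed payload: a retry can't fix it, so ack (log it in practice)
    try:
        process(payload)
    except Exception:
        return False  # downstream failure: nack so Pub/Sub redelivers with backoff
    return True


def run_consumer(project_id: str, process, subscription_id: str = "gcs-delete-sub", timeout=None):
    """Stream messages from the subscription until timeout (requires google-cloud-pubsub)."""
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient()
    sub_path = subscriber.subscription_path(project_id, subscription_id)

    def callback(message):
        if handle(message.data, dict(message.attributes), process):
            message.ack()
        else:
            message.nack()

    future = subscriber.subscribe(sub_path, callback=callback)
    with subscriber:
        try:
            future.result(timeout=timeout)
        except concurrent.futures.TimeoutError:
            future.cancel()  # stop pulling, then wait for the shutdown to finish
            future.result()
```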

Conclusion

Combining Cloud Storage with Pub/Sub for object deletions is a foundational pattern in modern GCP design. Whenever an object is deleted, an event is published to a Pub/Sub topic in near real time. These events can drive audit trails, data-policy enforcement, automated cleanup, and alerts.

This approach promotes loose coupling: Cloud Storage publishes events without needing to know who the subscribers are, and each subscriber, whether a Cloud Function, a Dataflow pipeline, or a custom application, processes messages independently. This makes the system easier to scale and manage.

Pub/Sub also brings structure to production workflows by adding reliable delivery, parallel processing, retries, and dead-lettering. GCP engineers who want to design cloud systems that are adaptable, responsive, and ready for the future should be comfortable with event-driven integrations like this one.
