Laravel’s streaming response feature enables efficient handling of large datasets by sending data incrementally as it’s generated, reducing memory usage and improving response times.

Route::get('/stream', function () {

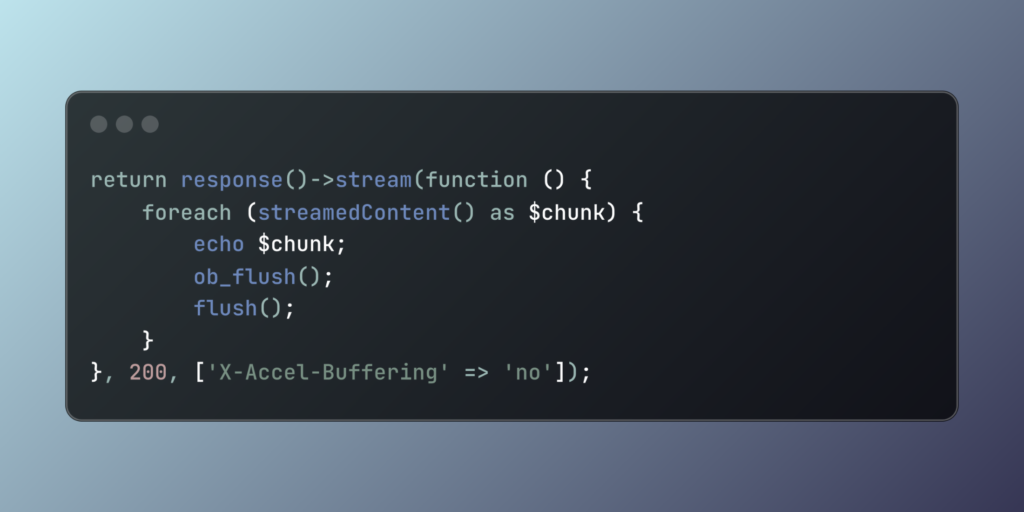

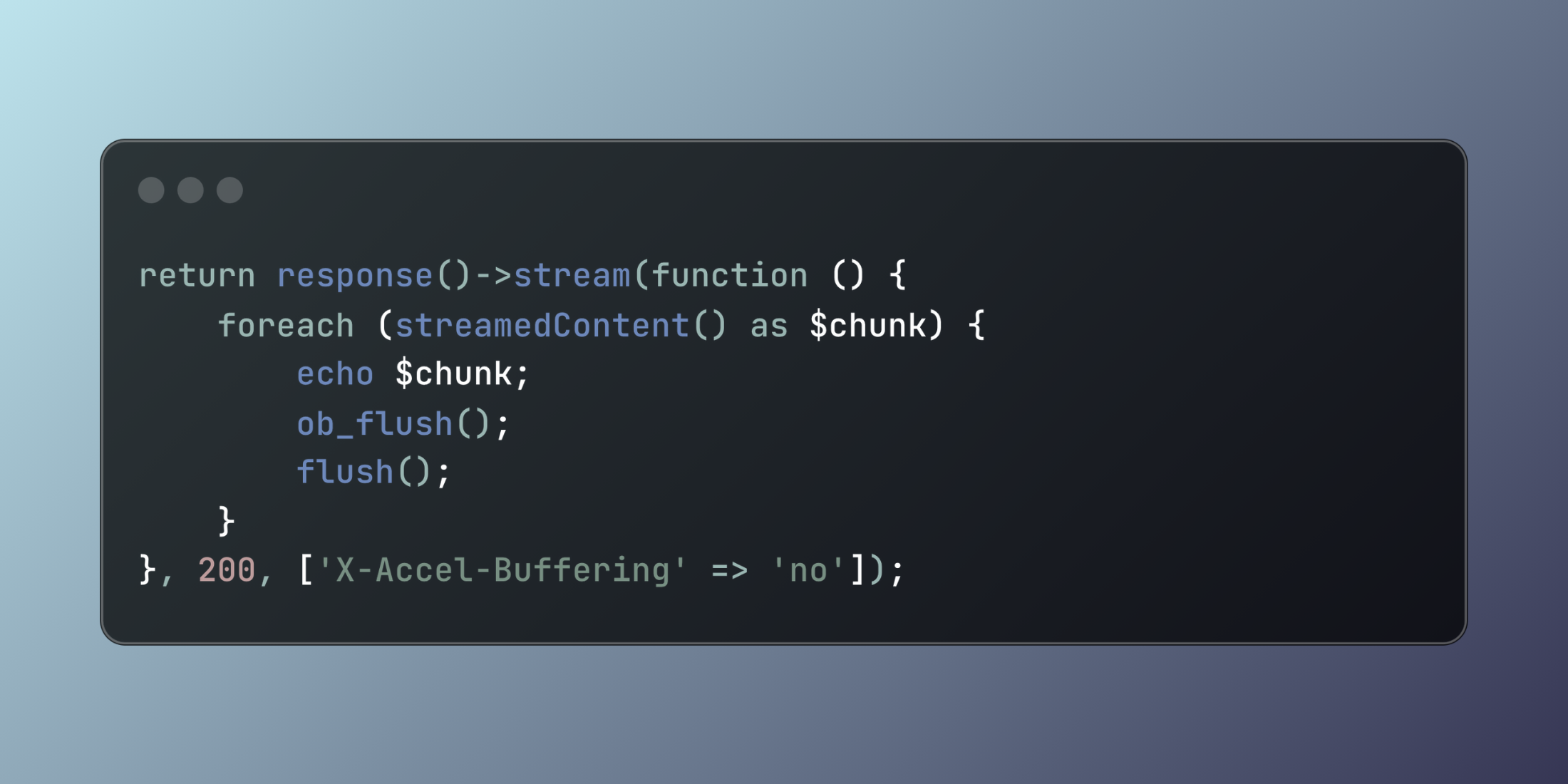

return response()->stream(function () {

foreach (range(1, 100) as $number) {

echo "Line {$number}n";

ob_flush();

flush();

}

}, 200, ['Content-Type' => 'text/plain']);

});
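The route above sends each line as soon as it is produced. The same idea can be sketched in plain PHP, outside Laravel, with a generator. The names `numberedLines` and `emitLines` are hypothetical and used only for illustration. In a real streaming callback, the `$emit` callable would echo the line and flush the buffers.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical generator: yields one line at a time, so only the
 * current line is held in memory rather than the whole payload.
 */
function numberedLines(int $count): Generator
{
    for ($number = 1; $number <= $count; $number++) {
        yield "Line {$number}\n";
    }
}

/**
 * Hypothetical helper: hands each line to $emit as soon as it is produced
 * and returns the number of lines sent.
 */
function emitLines(iterable $lines, callable $emit): int
{
    $sent = 0;

    foreach ($lines as $line) {
        $emit($line);
        $sent++;
    }

    return $sent;
}
```

Because the generator is consumed lazily, memory use stays roughly constant no matter how many lines are requested.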

Let’s explore a practical example of streaming a large data export:

    <?php

    namespace App\Http\Controllers;

    use App\Models\Order;
    use Illuminate\Support\Facades\DB;

    class ExportController extends Controller
    {
        public function exportOrders()
        {
            return response()->stream(function () {
                // Output the CSV header row
                echo "Order ID,Customer,Total,Status,Date\n";
                ob_flush();
                flush();

                // Process orders in chunks to maintain memory efficiency
                Order::query()
                    ->with('customer')
                    ->orderBy('created_at', 'desc')
                    ->chunk(500, function ($orders) {
                        foreach ($orders as $order) {
                            echo sprintf(
                                "%s,%s,%.2f,%s,%s\n",
                                $order->id,
                                // Replace commas so the name stays in a single column
                                str_replace(',', ' ', $order->customer->name),
                                $order->total,
                                $order->status,
                                $order->created_at->format('Y-m-d H:i:s')
                            );
                            ob_flush();
                            flush();
                        }
                    });
            }, 200, [
                'Content-Type' => 'text/csv',
                'Content-Disposition' => 'attachment; filename="orders.csv"',
                // Ask nginx not to buffer the response
                'X-Accel-Buffering' => 'no',
            ]);
        }
    }
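The export handles commas in customer names by replacing them with spaces. An alternative, sketched here as an assumption and not as part of the original example, is to let PHP's built-in `fputcsv()` quote such fields. The helper name `csvLine` is hypothetical.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical helper (not part of Laravel): formats one CSV row with
 * PHP's built-in fputcsv(), which quotes fields containing commas,
 * double quotes, spaces, or line breaks.
 */
function csvLine(array $fields): string
{
    // Write into an in-memory stream so the formatted row comes back as a string.
    $buffer = fopen('php://memory', 'w+');

    // Delimiter, enclosure, and escape character are passed explicitly.
    fputcsv($buffer, $fields, ',', '"', '\\');

    rewind($buffer);
    $line = stream_get_contents($buffer);
    fclose($buffer);

    return $line;
}
```

Inside the streaming callback, `echo csvLine([...])` could stand in for the `sprintf()` call, with the same `ob_flush()` and `flush()` calls after it. A name such as `Acme, Inc.` would then be preserved and quoted, with the comma left intact.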

Streaming responses enable efficient handling of large datasets while maintaining low memory usage and providing immediate feedback to users.

The post Optimizing Large Data Delivery with Laravel Streaming Responses appeared first on Laravel News.
