What went wrong on July 19, 2024?

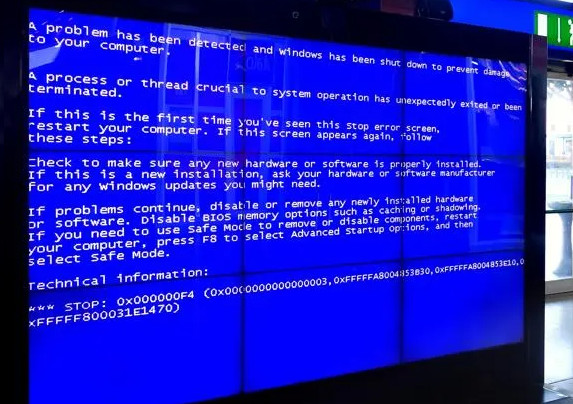

On July 19, 2024, the cybersecurity company CrowdStrike pushed a mass update to its CrowdStrike Falcon sensor on millions of computers worldwide. These updates help the sensor identify new threats and improve its cyberattack prevention capabilities. This update proved to be a big mistake, causing a major crash on Windows systems everywhere.

Let's dive a little into the technical side

I’m not a Windows expert, but the internet is full of them and, luckily, it’s not so hard to find some information on why this happened from a Windows point of view.

The Kernel

All operating systems have something in common: they separate user space from kernel space. User space refers to everything that runs outside of the operating system’s kernel, basically all the everyday applications. Code running in user space doesn’t have direct access to the computer’s hardware, including CPU, memory, etc. Applications are much more limited in what they can do and access than anything running in kernel mode. When an application fails, it just shuts down without affecting other applications. When the kernel fails, the whole system comes down.
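To make the user-space half of that contrast concrete, here is a minimal sketch in Python. The function name `run_crashing_child` is made up for this example. It starts a second process that dies with an unhandled error, and the process that started it keeps running and just reads the exit code.

```python
import subprocess
import sys


def run_crashing_child() -> int:
    """Start a separate user-space process that fails, and return its exit code."""
    result = subprocess.run(
        # The child is a second Python interpreter that raises an unhandled error.
        [sys.executable, "-c", "raise RuntimeError('boom')"],
        stderr=subprocess.DEVNULL,  # hide the child's traceback
    )
    # Reaching this line shows the parent survived the child's failure.
    return result.returncode
```

Calling `run_crashing_child()` returns a non-zero exit code while the calling program carries on. A failure inside kernel-mode code has no such safety net, which is why a faulty driver can take the whole machine down.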

CrowdStrike Kernel-mode boot-start driver

The CrowdStrike sensor needs to run in kernel mode to be able to prevent some of the threats. Because of this, CrowdStrike created a driver that is signed as safe to run in the kernel.

How could the driver still be considered safe when the changes proved to be so lethal? The driver itself was not changed, and therefore was still digitally signed as safe.

Signing the driver means getting a Microsoft certificate for the driver. Getting this certificate is a non-trivial task that takes time. In order to be ahead of any possible threat by always updating their sensor as fast as possible, CrowdStrike came up with a strategy to update what the driver does without modifying the driver itself.

The driver reads external files, called channel files, that contain the information needed to detect and prevent cyberattacks. Instead of updating the driver, which would mean getting a new certificate, they update these channel files. By updating these files, they don’t need to wait for a new certificate and can still move fast to keep servers secure.

Since CrowdStrike wants to make sure their driver is always loaded on system boot, they flagged it as a boot-start driver. This means that the system cannot boot without the driver. What happens if the driver fails to load? The system can’t boot.

The July 19, 2024 update

On July 19, 2024, CrowdStrike rolled out a mass update of a new channel file. This file made the driver fail, which crashed the kernel (the Windows equivalent of a kernel panic) and brought the system down, with the additional problem that the system could not boot again.

CrowdStrike noticed the issue and sent a new update about an hour later, but any computer already affected couldn’t boot and so wouldn’t get the new file version.

The solution? Physically going to the computer, booting into safe mode (which loads a very limited number of drivers) and deleting the file.

According to people that got access to the file and looked at it, the file was just zeros. Certainly not the file that CrowdStrike really wanted to deploy.

You’re correct.

Full of zeros at least. pic.twitter.com/PJcCsUb9Vc

— christian_taillon (@christian_tail) July 19, 2024

What can be done to prevent another July 19?

Everybody who works in the IT industry knows that bugs are impossible to avoid, and without internal knowledge of how things work at CrowdStrike, it seems unfair to comment on their engineering practices or quality.

This, however, doesn’t stop me from commenting on how I think the problem could have been avoided (it’s easy to talk with Monday’s newspaper, as we say in Uruguay).

Make sure you have a deployment pipeline that includes automated tests. And make sure your tests are complete. TDD is a good practice to help accomplish this. It’s not enough on its own, but it is a strong starting point.

When working with multiple systems, always do updates incrementally. This is called canary deployment and means rolling out the update to a small percentage of systems first. Make sure everything went as expected and then continue with the rest. If you are going to update millions of systems, start with 1, then 100, then 1000, etc…
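The incremental idea can be sketched in a few lines of Python. Everything here is hypothetical and only illustrates the technique: the names `rollout_waves` and `staged_rollout`, the `deploy` and `healthy` callbacks, and the growth factor of 10 are assumptions, not anything CrowdStrike uses.

```python
from typing import Callable, List, Sequence


def rollout_waves(total_hosts: int, first_wave: int = 1, growth: int = 10) -> List[int]:
    """Return wave sizes that grow geometrically until every host is covered."""
    if total_hosts < 0 or first_wave < 1 or growth < 2:
        raise ValueError("need total_hosts >= 0, first_wave >= 1 and growth >= 2")
    waves: List[int] = []
    remaining, size = total_hosts, first_wave
    while remaining > 0:
        wave = min(size, remaining)  # the last wave takes whatever is left
        waves.append(wave)
        remaining -= wave
        size *= growth
    return waves


def staged_rollout(
    hosts: Sequence[str],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> List[str]:
    """Deploy wave by wave and stop after the first wave with an unhealthy host."""
    updated: List[str] = []
    start = 0
    for wave in rollout_waves(len(hosts)):
        batch = hosts[start:start + wave]
        for host in batch:
            deploy(host)
            updated.append(host)
        if not all(healthy(host) for host in batch):
            break  # halt the rollout; later waves never receive the update
        start += wave
    return updated
```

With 1,111 hosts, `rollout_waves(1111)` gives `[1, 10, 100, 1000]`. If a host in the second wave reports unhealthy, `staged_rollout` stops after 11 hosts instead of reaching all of them.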

Avoid doing Friday deployments. You want to make sure that someone will be there to troubleshoot any issues that are raised, especially in global environments.

Make sure your software handles errors gracefully. You don’t want a corrupt content or config file that happens to have the right name to crash your application.
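As a rough illustration of graceful handling, the sketch below validates a content file before using it and falls back to the last known good version when validation fails. The `CHNL` header, the function names and the file layout are invented for this example. The real channel file format is not described here.

```python
MAGIC = b"CHNL"  # hypothetical 4-byte header; not the real channel file format


class InvalidContentFile(Exception):
    """Raised when a content file fails basic sanity checks."""


def parse_content(data: bytes) -> bytes:
    """Return the payload after the header, or raise InvalidContentFile."""
    if len(data) <= len(MAGIC):
        raise InvalidContentFile("file is too short")
    if not any(data):  # every byte is zero
        raise InvalidContentFile("file contains only zero bytes")
    if not data.startswith(MAGIC):
        raise InvalidContentFile("unexpected header")
    return data[len(MAGIC):]


def load_rules(new_data: bytes, last_known_good: bytes) -> bytes:
    """Use the new content if it is valid; otherwise keep the previous rules."""
    try:
        return parse_content(new_data)
    except InvalidContentFile:
        return last_known_good  # degrade gracefully instead of crashing
```

A file made only of zeros, like the one described above, is rejected by `parse_content`, so `load_rules(bytes(1024), b"old")` returns `b"old"` rather than raising.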

An additional question that maybe we can answer in a different post is: what could CrowdStrike customers have done to prevent this from happening?
