Legal teams spend the bulk of their time manually reviewing documents during eDiscovery. This process involves analyzing electronically stored information across emails, contracts, financial records, and collaboration systems for legal proceedings. This manual approach creates significant bottlenecks: attorneys must identify privileged communications, assess legal risks, extract contractual obligations, and maintain regulatory compliance across thousands of documents per case. The process is not only resource-intensive and time-consuming, but also prone to human error when dealing with large document volumes.

Amazon Bedrock Agents with multi-agent collaboration directly addresses these challenges by helping organizations deploy specialized AI agents that process documents in parallel while maintaining context across complex legal workflows. Instead of sequential manual review, multiple agents work simultaneously—one extracts contract terms while another identifies privileged communications, all coordinated by a central orchestrator. This approach can reduce document review time by 60–70% while maintaining the accuracy and human oversight required for legal proceedings, though actual performance varies based on document complexity and foundation model (FM) selection.

In this post, we demonstrate how to build an intelligent eDiscovery solution using Amazon Bedrock Agents for real-time document analysis. We show how to deploy specialized agents for document classification, contract analysis, email review, and legal document processing, all working together through a multi-agent architecture. We walk through the implementation details, deployment steps, and best practices to create an extensible foundation that organizations can adapt to their specific eDiscovery requirements.

Solution overview

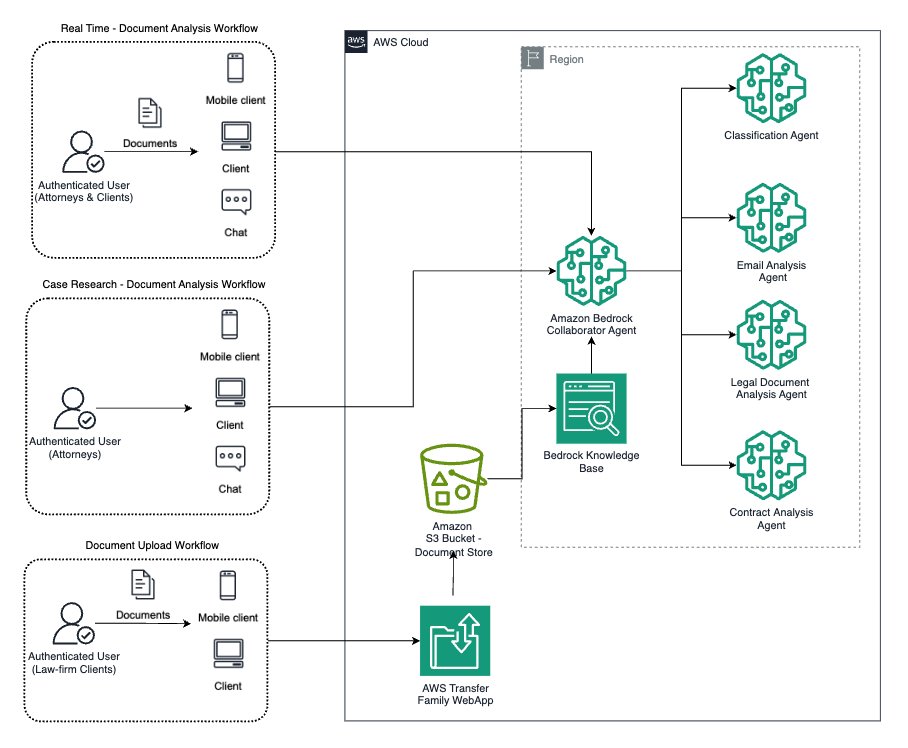

This solution demonstrates an intelligent document analysis system using Amazon Bedrock Agents with multi-agent collaboration functionality. The system uses multiple specialized agents to analyze legal documents, classify content, assess risks, and provide structured insights. The following diagram illustrates the solution architecture.

The architecture diagram shows three main workflows for eDiscovery document analysis:

- Real-time document analysis workflow – Attorneys and clients (authenticated users) can upload documents and interact through mobile/web clients and chat. Documents are processed in real time for immediate analysis without persistent storage—uploaded documents are passed directly to the Amazon Bedrock Collaborator Agent endpoint.

- Case research document analysis workflow – This workflow is specifically for attorneys (authenticated users). It allows document review and analysis through mobile/web clients and chat. It’s focused on the legal research aspects of previously processed documents.

- Document upload workflow – Law firm clients (authenticated users) can upload documents through mobile/web clients. Documents are transferred by using AWS Transfer Family web apps to an Amazon Simple Storage Service (Amazon S3) bucket for storage.

Although this architecture supports all three workflows, this post focuses specifically on implementing the real-time document analysis workflow for two key reasons: it represents the core functionality that delivers immediate value to legal teams, and it provides the foundational patterns that can be extended to support the other workflows. The real-time processing capability demonstrates the multi-agent coordination that makes this solution transformative for eDiscovery operations.

Real-time document analysis workflow

This workflow processes uploaded documents through coordinated AI agents, typically completing analysis within 1–2 minutes of upload. The system accelerates early case assessment by providing structured insights immediately, compared to traditional manual review that can take hours per document. The implementation coordinates five specialized agents that process different document aspects in parallel, listed in the following table.

| Agent Type | Primary Function | Processing Time* | Key Outputs |
|---|---|---|---|
| Collaborator Agent | Central orchestrator and workflow manager | 2–5 seconds | Document routing decisions, consolidated results |
| Document Classification Agent | Initial document triage and sensitivity detection | 5–10 seconds | Document type, confidence scores, sensitivity flags |
| Email Analysis Agent | Communication pattern analysis | 10–20 seconds | Participant maps, conversation threads, timelines |
| Legal Document Analysis Agent | Court filing and legal brief analysis | 15–30 seconds | Case citations, legal arguments, procedural dates |
| Contract Analysis Agent | Contract terms and risk assessment | 20–40 seconds | Party details, key terms, obligations, risk scores |

*Processing times are estimates based on testing with Anthropic’s Claude 3.5 Haiku on Amazon Bedrock and might vary depending on document complexity and size. Actual performance in your environment may differ.
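To make the division of labor in the table concrete, the following is a minimal Python sketch of how a routing decision could be represented. The `ROUTING` table, the document type names, and the `route_document` function are hypothetical illustrations and are not part of the deployed solution, where the Collaborator Agent makes routing decisions through the foundation model rather than a lookup table.

```python
# Hypothetical illustration only: in the deployed solution, the Collaborator
# Agent decides routing through the foundation model, not a lookup table.
from typing import Dict, List

# Assumed mapping from a coarse document type to the specialist agents in the table.
ROUTING: Dict[str, List[str]] = {
    "email": ["Email Analysis Agent"],
    "contract": ["Contract Analysis Agent"],
    "court_filing": ["Legal Document Analysis Agent"],
    # A settlement agreement has both contractual and legal aspects.
    "settlement_agreement": [
        "Contract Analysis Agent",
        "Legal Document Analysis Agent",
    ],
}


def route_document(doc_type: str) -> List[str]:
    """Return the agents to involve; classification always runs for triage."""
    specialists = ROUTING.get(doc_type, [])
    return ["Document Classification Agent"] + specialists


print(route_document("settlement_agreement"))
```

An unknown document type falls back to classification only in this sketch, which leaves the decision about further analysis to a later step.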

Let’s explore an example of processing a sample legal settlement agreement. The workflow consists of the following steps:

- The Collaborator Agent identifies the document as requiring both contract and legal analysis.

- The Contract Analysis Agent extracts parties, payment terms, and obligations (40 seconds).

- The Legal Document Analysis Agent identifies case references and precedents (30 seconds).

- The Document Classification Agent flags confidentiality levels (10 seconds).

- The Collaborator Agent consolidates findings into a comprehensive report (15 seconds).

Total processing time is approximately 95 seconds for the sample document, compared to 2–4 hours of manual review for similar documents. In the following sections, we walk through deploying the complete eDiscovery solution, including Amazon Bedrock Agents, the Streamlit frontend, and necessary AWS resources.
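The 95-second figure is the sum of the step times quoted in the example, that is, the steps counted end to end. The following short Python calculation reproduces it. The "fully parallel" number is an illustrative assumption about what the total would be if the three specialist steps overlapped completely; it is not a measurement from this solution.

```python
# Per-step times (seconds) quoted in the settlement agreement example.
step_seconds = {
    "contract_analysis": 40,
    "legal_document_analysis": 30,
    "classification": 10,
    "consolidation": 15,
}

# Summing every step reproduces the quoted total.
sequential_total = sum(step_seconds.values())

# Assumption for illustration: if the three specialist steps overlapped fully,
# only the slowest one would count, followed by consolidation.
specialists = ["contract_analysis", "legal_document_analysis", "classification"]
parallel_lower_bound = (
    max(step_seconds[name] for name in specialists) + step_seconds["consolidation"]
)

# 2-4 hours of manual review, expressed in seconds for comparison.
manual_seconds = (2 * 3600, 4 * 3600)

print(f"Sequential total: {sequential_total} s")
print(f"Fully parallel lower bound (assumed): {parallel_lower_bound} s")
print(f"Manual review: {manual_seconds[0]}-{manual_seconds[1]} s")
```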

Prerequisites

Make sure you have the following prerequisites:

- An AWS account with appropriate permissions for Amazon Bedrock, AWS Identity and Access Management (IAM), and AWS CloudFormation.

- Amazon Bedrock model access for Anthropic’s Claude 3.5 Haiku v1 in your deployment AWS Region. You can use a different supported model of your choice for this solution. If you use a different model than the default (Anthropic’s Claude 3.5 Haiku v1), you must modify the CloudFormation template to reflect your chosen model’s specifications before deployment. At the time of writing, Anthropic’s Claude 3.5 Haiku is available in US East (N. Virginia), US East (Ohio), and US West (Oregon). For current model availability, see Model support by AWS Region.

- The AWS Command Line Interface (AWS CLI) installed and configured with appropriate credentials.

- Python 3.8+ installed.

- Terminal or command prompt access.

Deploy the AWS infrastructure

You can deploy the following CloudFormation template, which creates the five Amazon Bedrock agents, an inference profile, and supporting IAM resources. (Costs will be incurred for the AWS resources used.) Complete the following steps:

- Launch the CloudFormation stack.

You will be redirected to the AWS CloudFormation console. In the stack parameters, the template URL will be prepopulated.

- For EnvironmentName, enter a name for your deployment (default: LegalBlogSetup).

- Review and create the stack.

After successful deployment, note the following values from the CloudFormation stack’s Outputs tab:

- CollabBedrockAgentId

- CollabBedrockAgentAliasId
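As an alternative to copying these values from the console, they can be read programmatically. The following is a minimal sketch, assuming boto3 is installed and credentials are configured. It uses the CloudFormation `describe_stacks` call; the function names and the sample values are hypothetical, and the stack name is whatever you chose at deployment.

```python
from typing import Dict


def extract_outputs(describe_stacks_response: dict) -> Dict[str, str]:
    """Flatten the Outputs list of the first stack into a key/value dict."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        return {}
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


def fetch_agent_ids(stack_name: str, region: str) -> Dict[str, str]:
    """Query CloudFormation for the two agent values (needs boto3 and credentials)."""
    import boto3  # imported lazily so extract_outputs works without boto3

    cfn = boto3.client("cloudformation", region_name=region)
    outputs = extract_outputs(cfn.describe_stacks(StackName=stack_name))
    return {
        "AGENT_ID": outputs["CollabBedrockAgentId"],
        "AGENT_ALIAS_ID": outputs["CollabBedrockAgentAliasId"],
    }


# Offline example with a response shaped like describe_stacks output.
# The IDs below are placeholders, not real values.
sample = {
    "Stacks": [
        {
            "Outputs": [
                {"OutputKey": "CollabBedrockAgentId", "OutputValue": "AGENT123"},
                {"OutputKey": "CollabBedrockAgentAliasId", "OutputValue": "ALIAS456"},
            ]
        }
    ]
}
print(extract_outputs(sample))
```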

Configure AWS credentials

Test if AWS credentials are working:

aws sts get-caller-identity

If you need to configure credentials, use the following command:

Set up the local environment

Complete the following steps to set up your local environment:

- Create a new directory for your project:

- Set up a Python virtual environment:

- Download the Streamlit application:

- Install dependencies:

Configure and run the application

Complete the following steps:

- Run the downloaded Streamlit frontend UI file eDiscovery-LegalBlog-UI.py by executing the following command in your terminal or command prompt:

This command will start the Streamlit server and automatically open the application in your default web browser.

- Under Agent configuration, provide the following values:

- For AWS_REGION, enter your Region.

- For AGENT_ID, enter the Amazon Bedrock Collaborator Agent ID.

- For AGENT_ALIAS_ID, enter the Amazon Bedrock Collaborator Agent Alias ID.

- Choose Save Configuration.

Now you can upload documents (TXT, PDF, and DOCX) to analyze and interact with.
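The three configuration values map onto the parameters of the `invoke_agent` operation of the boto3 `bedrock-agent-runtime` client. This post does not show how the Streamlit application calls the agent internally, so the following is only a sketch under stated assumptions: the document is assumed to be already extracted to plain text, the prompt wording is hypothetical, and the helper names are illustrative.

```python
import uuid
from typing import Iterable


def build_request(agent_id: str, agent_alias_id: str, document_text: str) -> dict:
    """Assemble keyword arguments for bedrock-agent-runtime invoke_agent."""
    return {
        "agentId": agent_id,
        "agentAliasId": agent_alias_id,
        # Assumption: one fresh session per uploaded document.
        "sessionId": str(uuid.uuid4()),
        # Hypothetical prompt wording.
        "inputText": f"Analyze the following document:\n\n{document_text}",
    }


def collect_completion(events: Iterable[dict]) -> str:
    """Join the text chunks from the streamed 'completion' events."""
    parts = []
    for event in events:
        chunk = event.get("chunk")
        if chunk and "bytes" in chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def analyze(region: str, agent_id: str, agent_alias_id: str, document_text: str) -> str:
    """Send text to the Collaborator Agent (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.invoke_agent(
        **build_request(agent_id, agent_alias_id, document_text)
    )
    return collect_completion(response["completion"])


# Offline check of the stream handling with fake events.
fake_events = [
    {"chunk": {"bytes": b"Parties: "}},
    {"trace": {}},  # non-text events are skipped
    {"chunk": {"bytes": b"A and B"}},
]
print(collect_completion(fake_events))
```

Keeping request assembly and stream handling in separate functions makes both testable without calling AWS.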

Test the solution

The following is a demonstration of testing the application.

Implementation considerations

Although Amazon Bedrock Agents significantly streamlines eDiscovery workflows, organizations should consider several key factors when implementing AI-powered document analysis solutions. Consider the following legal industry requirements for compliance and governance:

- Attorney-client privilege protection – AI systems must maintain confidentiality boundaries and can’t expose privileged communications during processing

- Cross-jurisdictional compliance – GDPR, CCPA, and industry-specific regulations vary by region and case type

- Audit trail requirements – Legal proceedings demand comprehensive processing documentation for all AI-assisted decisions

- Professional responsibility – Lawyers remain accountable for AI outputs and must demonstrate competency in deployed tools
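For the audit trail requirement in particular, one possible approach is to write a structured record for each agent decision. The following sketch is an assumption about what such a record might contain; the field names, the `audit_record` function, and the model ID string are hypothetical. The document is referenced by its SHA-256 hash so that the record itself does not carry document content.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(document_bytes: bytes, agent_name: str, model_id: str, summary: str) -> dict:
    """Build one audit entry; the document is referenced by hash, not stored."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "document_sha256": hashlib.sha256(document_bytes).hexdigest(),
        "agent": agent_name,
        "model_id": model_id,
        "summary": summary,
    }


record = audit_record(
    b"sample agreement text",
    "Contract Analysis Agent",
    "example-model-id",  # placeholder, not a real model identifier
    "Extracted parties and payment terms",
)
print(json.dumps(record, indent=2))
```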

You might encounter technical implementation challenges, such as document processing complexity:

- Variable document quality – Scanned PDFs, handwritten annotations, and corrupted files require preprocessing strategies

- Format diversity – Legal documents span emails, contracts, court filings, and multimedia content requiring different processing approaches

- Scale management – Large cases involving over 100,000 documents require careful resource planning and concurrent processing optimization
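For scale management, a common pattern is to cap the number of documents in flight at once. The following is a minimal sketch using Python's standard `concurrent.futures` module. The `analyze_batch` and `fake_analyze` names are hypothetical, `fake_analyze` stands in for a real agent call, and the worker count is an assumed value that should be chosen to respect your account's service quotas.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def analyze_batch(
    doc_ids: List[str],
    analyze: Callable[[str], str],
    max_workers: int = 4,
) -> Dict[str, str]:
    """Run analyze over all documents with at most max_workers in flight."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so zip pairs them correctly.
        for doc_id, outcome in zip(doc_ids, pool.map(analyze, doc_ids)):
            results[doc_id] = outcome
    return results


def fake_analyze(doc_id: str) -> str:
    """Stand-in for a real agent invocation."""
    return f"analyzed:{doc_id}"


print(analyze_batch(["doc-1", "doc-2", "doc-3"], fake_analyze, max_workers=2))
```

In this sketch an exception raised by one document propagates and stops the batch; a production version would likely catch errors per document and retry or log them.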

The system integration also has specific requirements:

- Legacy system compatibility – Most law firms use established case management systems that need seamless integration

- Authentication workflows – Multi-role access (attorneys, paralegals, clients) with different permission levels

- AI confidence thresholds – Determining when human review is required based on processing confidence scores
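The confidence threshold idea can be sketched as a simple escalation rule. The threshold value, the `Classification` fields, and the rule that privilege flags always escalate are assumptions for illustration; an actual threshold should come from validation against attorney-reviewed samples.

```python
from dataclasses import dataclass

# Assumed threshold for illustration only.
REVIEW_THRESHOLD = 0.85


@dataclass
class Classification:
    document_id: str
    label: str
    confidence: float  # expected range 0.0-1.0
    privileged_flag: bool = False


def needs_human_review(result: Classification, threshold: float = REVIEW_THRESHOLD) -> bool:
    """Escalate low-confidence results and anything flagged as potentially privileged."""
    return result.privileged_flag or result.confidence < threshold


queue = [
    Classification("doc-1", "contract", 0.97),
    Classification("doc-2", "email", 0.62),
    Classification("doc-3", "email", 0.99, privileged_flag=True),
]
for item in queue:
    decision = "human review" if needs_human_review(item) else "auto-accept"
    print(item.document_id, decision)
```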

Additionally, consider your human/AI collaboration framework. The most successful eDiscovery implementations maintain human oversight at critical decision points. Although Amazon Bedrock Agents excels at automating routine tasks like document classification and metadata extraction, legal professionals remain essential for the following factors:

- Complex legal interpretations requiring contextual understanding

- Privilege determinations that impact case strategy

- Quality control of AI-generated insights

- Strategic analysis of document relationships and case implications

This collaborative approach optimizes the eDiscovery process—AI handles time-consuming data processing while legal professionals focus on high-stakes decisions requiring human judgment and expertise. For your implementation strategy, consider a phased deployment approach. Organizations should implement staged rollouts to minimize risk while building confidence:

- Pilot programs using lower-risk document categories (routine correspondence, standard contracts)

- Controlled expansion with specialized agents and broader user base

- Full deployment enabling complete multi-agent collaboration organization-wide

Lastly, consider the following success planning best practices:

- Establish clear governance frameworks for model updates and version control

- Create standardized testing protocols for new agent deployments

- Develop escalation procedures for edge cases requiring human intervention

- Implement parallel processing during validation periods to maintain accuracy

By addressing these considerations upfront, legal teams can facilitate smoother implementation and maximize the benefits of AI-powered document analysis while maintaining the accuracy and oversight required for legal proceedings.

Clean up

If you decide to discontinue using the solution, complete the following steps to remove it and its associated resources deployed using AWS CloudFormation:

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Locate the stack you created during the deployment process (you assigned a name to it).

- Select the stack and choose Delete.

Results

Amazon Bedrock Agents transforms eDiscovery from time-intensive manual processes into efficient AI-powered operations, delivering measurable operational improvements across business services organizations. With a multi-agent architecture, organizations can process documents in 1–2 minutes compared to 2–4 hours of manual review for similar documents, achieving a 60–70% reduction in review time while maintaining accuracy and compliance requirements.

A representative implementation from the financial services sector demonstrates this transformative potential: a major institution transformed their compliance review process from a 448-page manual workflow requiring over 10,000 hours to an automated system that reduced external audit times from 1,000 to 300–400 hours and internal audits from 800 to 320–400 hours. The institution now conducts 30–40 internal reviews annually with existing staff while achieving greater accuracy and consistency across assessments.

These results demonstrate the potential across implementations: organizations implementing this solution can progress from initial efficiency gains in pilot phases to a 60–70% reduction in review time at full deployment. Beyond time savings, the solution delivers strategic advantages, including resource optimization that helps legal professionals focus on high-value analysis rather than routine document processing, improved compliance posture through systematic identification of privileged communications, and future-ready infrastructure that adapts to evolving legal technology requirements.
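The audit-hour figures quoted for the financial services example can be converted to percentage reductions with a short calculation. The following Python snippet uses only the numbers stated in this section. It shows that the external audit reduction works out to 60–70%, while the internal audit reduction works out to 50–60%.

```python
def reduction_pct(before: float, after: float) -> float:
    """Percentage reduction going from `before` hours to `after` hours."""
    return 100 * (before - after) / before


# External audits: 1,000 hours reduced to 300-400 hours.
external = (reduction_pct(1000, 400), reduction_pct(1000, 300))

# Internal audits: 800 hours reduced to 320-400 hours.
internal = (reduction_pct(800, 400), reduction_pct(800, 320))

print(f"External audits: {external[0]:.0f}-{external[1]:.0f}% fewer hours")
print(f"Internal audits: {internal[0]:.0f}-{internal[1]:.0f}% fewer hours")
```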

Conclusion

The combination of Amazon Bedrock multi-agent collaboration, real-time processing capabilities, and the extensible architecture provided in this post offers legal teams immediate operational benefits while positioning them for future AI advancements—creating the powerful synergy of AI efficiency and human expertise that defines modern legal practice.

To learn more about Amazon Bedrock, refer to the following resources:

- GitHub repo: Amazon Bedrock Workshop

- Amazon Bedrock User Guide

- Workshop: GenAI for AWS Cloud Operations

- Workshop: Using generative AI on AWS for diverse content types

About the authors

Puneeth Ranjan Komaragiri is a Principal Technical Account Manager at AWS. He is particularly passionate about monitoring and observability, cloud financial management, and generative AI domains. In his current role, Puneeth enjoys collaborating closely with customers, using his expertise to help them design and architect their cloud workloads for optimal scale and resilience.


Pramod Krishna is a Senior Solutions Architect at AWS. He works as a trusted advisor for customers, helping them innovate and build well-architected applications in the AWS Cloud. Outside of work, Krishna enjoys reading, music, and traveling.


Sean Gifts is a Senior Technical Account Manager at AWS. He is excited about helping customers with application modernization, specifically event-driven architectures that use serverless frameworks. Sean enjoys helping customers improve their architecture with simple, scalable solutions. Outside of work, he enjoys exercising, trying new foods, and traveling.

