Enterprises adopting advanced AI solutions recognize that robust security and precise access control are essential for protecting valuable data, maintaining compliance, and preserving user trust. As organizations expand AI usage across teams and applications, they require granular permissions to safeguard sensitive information and manage who can access powerful models. Amazon SageMaker Unified Studio addresses these needs so organizations can configure fine-grained access policies, making sure that only authorized users can interact with foundation models (FMs) while supporting secure, collaborative innovation at scale.

Launched in 2025, SageMaker Unified Studio is a single data and AI development environment where you can find and access the data in your organization and act on it using the best tools across use cases. SageMaker Unified Studio brings together the functionality and tools from existing AWS analytics and AI/ML services, including Amazon EMR, AWS Glue, Amazon Athena, Amazon Redshift, Amazon Bedrock, and Amazon SageMaker AI.

Amazon Bedrock in SageMaker Unified Studio provides various options for discovering and experimenting with Amazon Bedrock models and applications. For example, you can use a chat playground to try a prompt with Anthropic’s Claude without having to write code. You can also create a generative AI application that uses an Amazon Bedrock model and features, such as a knowledge base or a guardrail. To learn more, refer to Amazon Bedrock in SageMaker Unified Studio.

In this post, we demonstrate how to use SageMaker Unified Studio and AWS Identity and Access Management (IAM) to establish a robust permission framework for Amazon Bedrock models. We show how administrators can precisely manage which users and teams have access to specific models within a secure, collaborative environment. We guide you through creating granular permissions to control model access, with code examples for common enterprise governance scenarios. By the end, you will understand how to tailor access to generative AI capabilities to meet your organization’s requirements—addressing a core challenge in enterprise AI adoption by enabling developer flexibility while maintaining strong security standards.

Solution overview

In SageMaker Unified Studio, a domain serves as the primary organizational structure, so you can oversee multiple AWS Regions, accounts, and workloads from a single interface. Each domain is assigned a unique URL and offers centralized control over studio configurations, user accounts, and network settings.

Inside each domain, projects facilitate streamlined collaboration. Projects can span different Regions or accounts within a Region, and their metadata includes details such as the associated Git repository, team members, and their permissions. Every account where a project has resources is assigned at least one project role, which determines the tools, compute resources, datasets, and artificial intelligence and machine learning (AI/ML) assets accessible to project members. To manage data access, you can adjust the IAM permissions tied to the project’s role. SageMaker Unified Studio uses several IAM roles. For a complete list, refer to Identity and access management for Amazon SageMaker Unified Studio.

There are two primary methods for users to interact with Amazon Bedrock models in SageMaker Unified Studio: the SageMaker Unified Studio playground and SageMaker Unified Studio projects.

In the SageMaker Unified Studio playground scenario, model consumption roles provide secure access to Amazon Bedrock FMs. You can either let SageMaker Unified Studio create a role automatically for each model or configure a single role for all models. The default AmazonSageMakerBedrockModelConsumptionRole comes with preconfigured permissions to consume Amazon Bedrock models, including invoking the Amazon Bedrock application inference profile created for a particular SageMaker Unified Studio domain. To fine-tune access control, you can add inline policies to these roles that explicitly allow or deny access to specific Amazon Bedrock models.
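The following Python function is a minimal sketch of the explicit-deny option. It builds an inline policy document that denies invocation of the foundation models you name. The function name, the Sid, and the wildcard Region default are illustrative assumptions, not the policies used later in this post. An explicit Deny in IAM overrides any Allow, so a statement like this blocks the listed models even when the role grants broader access elsewhere. The sketch also assumes that Amazon Bedrock authorizes the underlying foundation-model ARN when a request is routed through an inference profile, which is the same assumption the second inline policy in this post relies on.

```python
import json


def deny_models_policy(model_ids, regions=("*",)):
    """Return a hypothetical inline policy that explicitly denies invoking
    the given Amazon Bedrock foundation models in the given Regions."""
    resources = [
        # Foundation-model ARNs have an empty account field.
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for region in regions
        for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenySelectedFoundationModels",  # illustrative Sid
                "Effect": "Deny",  # an explicit Deny overrides any Allow
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    # Example: block Claude 3.7 Sonnet in every Region for this role.
    policy = deny_models_policy(["anthropic.claude-3-7-sonnet-20250219-v1:0"])
    print(json.dumps(policy, indent=2))
```

To use it, paste the printed JSON into the role's inline policy editor, or attach it programmatically with the IAM PutRolePolicy operation.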

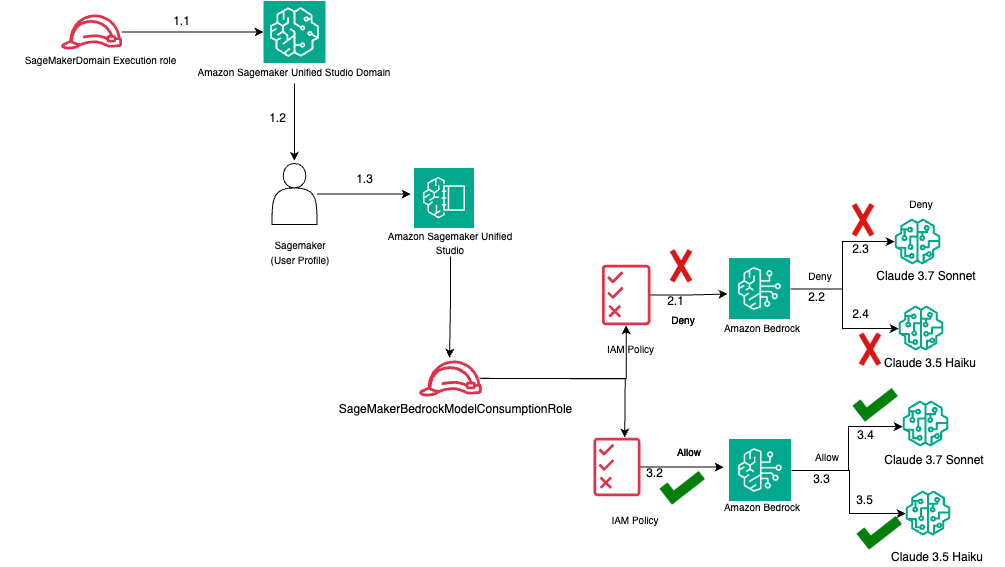

The following diagram illustrates this architecture.

The workflow consists of the following steps:

- Initial access path:

  - The SageMakerDomain execution role connects to the SageMaker Unified Studio domain.
  - The connection flows to the SageMaker user profile.
  - The user profile accesses SageMaker Unified Studio.

- Restricted access path (top flow):

  - The direct access attempt from SageMaker Unified Studio to Amazon Bedrock is denied.
  - The IAM policy blocks access to FMs.
  - Anthropic’s Claude 3.7 Sonnet access is denied (marked with X).
  - Anthropic’s Claude 3.5 Haiku access is denied (marked with X).

- Permitted access path (bottom flow):

  - The SageMakerBedrockModelConsumptionRole is used.
  - The appropriate IAM policy allows access (marked with a checkmark).
  - Amazon Bedrock access is permitted.
  - Anthropic’s Claude 3.7 Sonnet access is allowed (marked with a checkmark).
  - Anthropic’s Claude 3.5 Haiku access is allowed (marked with a checkmark).

- Governance mechanism:

  - IAM policies serve as the control point for model access.
  - Different roles determine different levels of access permission.
  - Access controls are explicitly defined for each FM.

In the SageMaker Unified Studio project scenario, SageMaker Unified Studio uses a model provisioning role to create an inference profile for an Amazon Bedrock model in a project. The project needs this inference profile to interact with the model. You can either let SageMaker Unified Studio automatically create a unique model provisioning role or provide a custom one. The default AmazonSageMakerBedrockModelManagementRole has the AWS managed policy AmazonDataZoneBedrockModelManagementPolicy attached. You can restrict access to specific account IDs through custom trust policies. You can also attach inline policies and use the CreateApplicationInferenceProfileUsingFoundationModels statement to allow or deny access to specific Amazon Bedrock models in your project.
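The following sketch makes the inference profile step concrete. It shows the kind of Amazon Bedrock call that a model provisioning role must be permitted to make: the control-plane CreateInferenceProfile operation (create_inference_profile in Boto3), which copies from a foundation-model ARN and tags the resulting application inference profile. The helper name is hypothetical, and so are the tag values. This is not the exact request SageMaker Unified Studio sends.

```python
def create_project_inference_profile(bedrock, name, region, model_id,
                                     domain_id, project_id):
    """Create an application inference profile that copies a foundation model.

    bedrock is expected to be a Boto3 Amazon Bedrock control-plane client,
    for example boto3.client("bedrock", region_name=region). The tag keys
    mirror the ones discussed in this post; the values are hypothetical.
    """
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    response = bedrock.create_inference_profile(
        inferenceProfileName=name,
        modelSource={"copyFrom": model_arn},
        tags=[
            {"key": "AmazonDataZoneDomain", "value": domain_id},
            {"key": "AmazonDataZoneProject", "value": project_id},
        ],
    )
    # Callers can later pass this ARN as the modelId when invoking the model.
    return response["inferenceProfileArn"]
```

The helper takes the client as a parameter instead of creating it, so you can try it against a stub without AWS credentials before pointing it at a real account.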

The following diagram illustrates this architecture.

The workflow consists of the following steps:

- Initial access path:

  - The SageMakerDomain execution role connects to the SageMaker Unified Studio domain.
  - The connection flows to the SageMaker user profile.
  - The user profile accesses SageMaker Unified Studio.

- Restricted access path (top flow):

  - The direct access attempt from SageMaker Unified Studio to Amazon Bedrock is denied.
  - The IAM policy blocks access to FMs.
  - Anthropic’s Claude 3.7 Sonnet access is denied (marked with X).
  - Anthropic’s Claude 3.5 Haiku access is denied (marked with X).

- Permitted access path (bottom flow):

  - The SageMakerBedrockModelManagementRole is used.
  - The appropriate IAM policy allows access (marked with a checkmark).
  - Amazon Bedrock access is permitted.
  - Anthropic’s Claude 3.7 Sonnet access is allowed (marked with a checkmark).
  - Anthropic’s Claude 3.5 Haiku access is allowed (marked with a checkmark).

- Governance mechanism:

  - IAM policies serve as the control point for model access.
  - Different roles determine different levels of access permission.
  - Access controls are explicitly defined for each FM.

By customizing the policies attached to these roles, you can control which actions are permitted or denied, thereby governing access to generative AI capabilities.

To use a specific model from Amazon Bedrock, SageMaker Unified Studio includes the model ID of the chosen model in its API calls. At the time of writing, SageMaker Unified Studio supports the following Amazon Bedrock models, grouped by model provider. Refer to the SageMaker Unified Studio documentation for the current list.

- Amazon:

  - Amazon Titan Text G1 – Premier: amazon.titan-text-premier-v1:0
  - Amazon Nova Pro: amazon.nova-pro-v1:0
  - Amazon Nova Lite: amazon.nova-lite-v1:0
  - Amazon Nova Canvas: amazon.nova-canvas-v1:0

- Stability AI:

  - SDXL 1.0: stability.stable-diffusion-xl-v1

- AI21 Labs:

  - Jamba-Instruct: ai21.jamba-instruct-v1:0
  - Jamba 1.5 Large: ai21.jamba-1-5-large-v1:0
  - Jamba 1.5 Mini: ai21.jamba-1-5-mini-v1:0

- Anthropic:

  - Claude 3.7 Sonnet: anthropic.claude-3-7-sonnet-20250219-v1:0

- Cohere:

  - Command R+: cohere.command-r-plus-v1:0
  - Command Light: cohere.command-light-text-v14
  - Embed Multilingual: cohere.embed-multilingual-v3

- DeepSeek:

  - DeepSeek-R1: deepseek.r1-v1:0

- Meta:

  - Llama 3.3 70B Instruct: meta.llama3-3-70b-instruct-v1:0
  - Llama 4 Scout 17B Instruct: meta.llama4-scout-17b-instruct-v1:0
  - Llama 4 Maverick 17B Instruct: meta.llama4-maverick-17b-instruct-v1:0

- Mistral AI:

  - Mistral 7B Instruct: mistral.mistral-7b-instruct-v0:2
  - Pixtral Large (25.02): mistral.pixtral-large-2502-v1:0
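IAM policies reference models by ARN, not by display name, so it can help to keep the model IDs listed above in a small lookup table. The following illustrative helper expands selected providers into foundation-model ARNs of the form arn:aws:bedrock:REGION::foundation-model/MODEL_ID. The names SUPPORTED_MODELS, foundation_model_arn, and allowlist_arns are our own. The account field in these ARNs is empty because foundation models are not owned by your account.

```python
# Model IDs from the list above, grouped by provider (current at the time of writing).
SUPPORTED_MODELS = {
    "Amazon": [
        "amazon.titan-text-premier-v1:0",
        "amazon.nova-pro-v1:0",
        "amazon.nova-lite-v1:0",
        "amazon.nova-canvas-v1:0",
    ],
    "Stability AI": ["stability.stable-diffusion-xl-v1"],
    "AI21 Labs": [
        "ai21.jamba-instruct-v1:0",
        "ai21.jamba-1-5-large-v1:0",
        "ai21.jamba-1-5-mini-v1:0",
    ],
    "Anthropic": ["anthropic.claude-3-7-sonnet-20250219-v1:0"],
    "Cohere": [
        "cohere.command-r-plus-v1:0",
        "cohere.command-light-text-v14",
        "cohere.embed-multilingual-v3",
    ],
    "DeepSeek": ["deepseek.r1-v1:0"],
    "Meta": [
        "meta.llama3-3-70b-instruct-v1:0",
        "meta.llama4-scout-17b-instruct-v1:0",
        "meta.llama4-maverick-17b-instruct-v1:0",
    ],
    "Mistral AI": [
        "mistral.mistral-7b-instruct-v0:2",
        "mistral.pixtral-large-2502-v1:0",
    ],
}


def foundation_model_arn(region, model_id):
    """Foundation models are AWS-owned, so the account field is left empty."""
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


def allowlist_arns(providers, regions):
    """Expand the chosen providers into ARNs for a policy's Resource list."""
    return [
        foundation_model_arn(region, model_id)
        for provider in providers
        for model_id in SUPPORTED_MODELS[provider]
        for region in regions
    ]
```

For example, allowlist_arns(["Anthropic"], ["us-east-1"]) returns a single ARN for Claude 3.7 Sonnet in us-east-1, which you can place in the Resource element of an Allow or Deny statement.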

Create a model consumption role for the playground scenario

In the following steps, you create an IAM role with a trust policy, add two inline policies, and attach them to the role.

Create the IAM role with a trust policy

Complete the following steps to create an IAM role with a trust policy:

- On the IAM console, in the navigation pane, choose Roles, then choose Create role.

- For Trusted entity type, select Custom trust policy.

- Delete the default policy in the editor and enter the following trust policy (replace account-id for the aws:SourceAccount field with your AWS account ID):

- Choose Next.

- Skip the Add permissions page by choosing Next.

- Enter a name for the role (for example, DataZoneBedrock-Role) and an optional description.

- Choose Create role.

Add the first inline policy

Complete the following steps to add an inline policy:

- On the IAM console, open the newly created role details page.

- On the Permissions tab, choose Add permissions and then Create inline policy.

- On the JSON tab, delete the default policy and enter the first inline policy:

- Choose Review policy.

- Name the policy (for example, DataZoneDomainInferencePolicy) and choose Create policy.

Add the second inline policy

Complete the following steps to add another inline policy:

- On the role’s Permissions tab, choose Add permissions and then Create inline policy.

- On the JSON tab, delete the default policy and enter the second inline policy (replace account-id in the bedrock:InferenceProfileArn field with your account ID):

- Choose Review policy.

- Name the policy (for example, BedrockFoundationModelAccessPolicy) and choose Create policy.
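If you prefer to script the console steps above, the following sketch performs the same actions with the AWS SDK for Python (Boto3). It creates the role with a trust policy and then attaches each inline policy with put_role_policy. The helper function and its parameter names are hypothetical. Pass in a client created with boto3.client("iam") and the policy documents, as Python dicts, that you would otherwise paste into the console.

```python
import json


def create_role_with_inline_policies(iam, role_name, trust_policy,
                                     inline_policies, description=""):
    """Create an IAM role and attach inline policies to it.

    iam: a Boto3 IAM client, for example boto3.client("iam").
    trust_policy: the trust policy document as a dict.
    inline_policies: mapping of inline policy name to policy document (dict).
    Returns the new role's ARN.
    """
    response = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust_policy),
        Description=description,
    )
    for policy_name, document in inline_policies.items():
        # put_role_policy creates or replaces an inline policy on the role.
        iam.put_role_policy(
            RoleName=role_name,
            PolicyName=policy_name,
            PolicyDocument=json.dumps(document),
        )
    return response["Role"]["Arn"]
```

For example, you could call it with the role name DataZoneBedrock-Role and a mapping that contains DataZoneDomainInferencePolicy and BedrockFoundationModelAccessPolicy. Because the IAM client is a parameter, you can exercise the function against a stub before running it in an account.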

Explanation of the policies

In this section, we discuss the details of the policies.

Trust policy explanation

This trust policy defines who can assume the IAM role:

- It allows the Amazon DataZone service (datazone.amazonaws.com) to assume this role

- The service can perform the sts:AssumeRole and sts:SetContext actions

- A condition on aws:SourceAccount restricts this to requests that originate from the AWS account you specify, which is your own account

This makes sure that only Amazon DataZone from your specified account can assume this role.
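The following sketch is built only from the description above. It is a Python function that returns a trust policy document allowing datazone.amazonaws.com to call sts:AssumeRole and sts:SetContext, guarded by an aws:SourceAccount condition. The StringEquals operator and the account ID validation are our assumptions. Compare the output with the trust policy you actually deploy.

```python
import re


def datazone_trust_policy(account_id):
    """Trust policy letting Amazon DataZone assume the role for one account."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("account_id must be a 12-digit AWS account ID")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "datazone.amazonaws.com"},
                "Action": ["sts:AssumeRole", "sts:SetContext"],
                # Only requests made on behalf of this account may assume the role.
                "Condition": {"StringEquals": {"aws:SourceAccount": account_id}},
            }
        ],
    }
```

The same document shape applies to the model provisioning role later in this post, because both trust policies are described identically.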

First inline policy explanation

This policy controls access to Amazon Bedrock inference profiles:

- It allows invoking Amazon Bedrock models (bedrock:InvokeModel and bedrock:InvokeModelWithResponseStream), but only for resources that are application inference profiles (arn:aws:bedrock:*:*:application-inference-profile/*)

- It has three conditions:

  - The profile must be tagged with AmazonDataZoneDomain, and the tag value must match the caller's domain ID
  - The resource must be in the same AWS account as the principal making the request
  - The resource must not have an AmazonDataZoneProject tag set to "true"

This effectively limits access to only those inference profiles that belong to the same Amazon DataZone domain as the caller and are not associated with a specific project.
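The following is a hypothetical reconstruction of this first inline policy as a Python dict, based only on the description above. Several details are assumptions: the Sid, the ${datazone:domainId} policy variable for the caller's domain, and the ${aws:PrincipalAccount} variable for the same-account check. We also model the "not project-scoped" check as an IAM Null condition. With the value "true", a Null condition requires the AmazonDataZoneProject tag key to be absent, which matches the stated goal of excluding project profiles.

```python
def domain_inference_policy():
    """Hypothetical reconstruction of the first inline policy."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeDomainInferenceProfiles",  # illustrative Sid
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                # Any Region and account; the conditions narrow it down.
                "Resource": "arn:aws:bedrock:*:*:application-inference-profile/*",
                "Condition": {
                    "StringEquals": {
                        # Profile must belong to the caller's domain (assumed variable).
                        "aws:ResourceTag/AmazonDataZoneDomain": "${datazone:domainId}",
                        # Profile must live in the caller's own account.
                        "aws:ResourceAccount": "${aws:PrincipalAccount}",
                    },
                    # "true" means the tag key must be absent: no project profiles.
                    "Null": {"aws:ResourceTag/AmazonDataZoneProject": "true"},
                },
            }
        ],
    }
```

All conditions in a single statement must hold at the same time. That is why the three checks are grouped in one Condition block instead of being split across separate statements.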

Second inline policy explanation

This policy controls which specific FMs can be accessed:

- It allows the same Amazon Bedrock model invocation actions, but only for the Anthropic Claude 3.5 Haiku foundation model in three Regions: us-east-1, us-east-2, and us-west-2

- It has a condition that the request must come through an inference profile in your account
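The following sketch reconstructs the second inline policy from the description above. It builds one foundation-model ARN per approved Region and adds a bedrock:InferenceProfileArn condition that scopes access to application inference profiles in your account. The Sid, the StringLike operator, and the wildcard Region in the profile ARN pattern are assumptions. The model ID and the Region list come from this post.

```python
HAIKU_MODEL_ID = "anthropic.claude-3-5-haiku-20241022-v1:0"
APPROVED_REGIONS = ("us-east-1", "us-east-2", "us-west-2")


def foundation_model_access_policy(account_id, model_id=HAIKU_MODEL_ID,
                                   regions=APPROVED_REGIONS):
    """Hypothetical reconstruction of the second inline policy."""
    profile_pattern = f"arn:aws:bedrock:*:{account_id}:application-inference-profile/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowApprovedModelViaInferenceProfile",  # illustrative Sid
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                # One foundation-model ARN per approved Region.
                "Resource": [
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for region in regions
                ],
                # Only when the call is routed through a profile in this account.
                "Condition": {
                    "StringLike": {"bedrock:InferenceProfileArn": profile_pattern}
                },
            }
        ],
    }
```

To approve an additional model or Region, pass different model_id or regions arguments instead of editing the JSON by hand.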

Combined effect on the SageMaker Unified Studio domain generative AI playground

Together, these policies create a secure, controlled environment for using Amazon Bedrock models in the SageMaker Unified Studio domain through the following methods:

- Limiting model access – Only the specified Anthropic Claude 3.5 Haiku model can be used, not other Amazon Bedrock models

- Enforcing access through inference profiles – Models can only be accessed through properly configured application inference profiles

- Maintaining domain isolation – Access is restricted to inference profiles tagged with the user’s Amazon DataZone domain

- Helping to prevent cross-account access – Resources must be in the same account as the principal

- Restricting Regions – The model can be accessed only in three specific AWS Regions

This implementation follows the principle of least privilege by providing only the minimum permissions needed for the intended use case, while maintaining proper security boundaries between different domains and projects.
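The following toy function shows how the pieces combine by approximating the two inline policies as plain Python checks. It is a simplified model for reasoning about outcomes, not IAM's policy evaluation logic. It ignores explicit denies, service control policies, permissions boundaries, and cross-Region inference profiles. The InferenceProfile dataclass is a hypothetical stand-in for a profile's metadata.

```python
from dataclasses import dataclass, field

# Values taken from the policies described above.
APPROVED_MODEL = "anthropic.claude-3-5-haiku-20241022-v1:0"
APPROVED_REGIONS = {"us-east-1", "us-east-2", "us-west-2"}


@dataclass
class InferenceProfile:
    """Hypothetical stand-in for an application inference profile's metadata."""
    account_id: str
    region: str  # assumes a single-Region profile copied from one model
    model_id: str
    tags: dict = field(default_factory=dict)


def playground_call_allowed(caller_account, caller_domain, profile):
    """Simplified approximation of the two inline policies (not IAM's engine)."""
    # First inline policy: domain tag matches, same account, not project-scoped.
    if profile.tags.get("AmazonDataZoneDomain") != caller_domain:
        return False
    if profile.account_id != caller_account:
        return False
    if "AmazonDataZoneProject" in profile.tags:
        return False
    # Second inline policy: only the approved model, only in approved Regions.
    return profile.model_id == APPROVED_MODEL and profile.region in APPROVED_REGIONS


if __name__ == "__main__":
    haiku = InferenceProfile("111122223333", "us-east-1", APPROVED_MODEL,
                             {"AmazonDataZoneDomain": "dzd_example"})
    print(playground_call_allowed("111122223333", "dzd_example", haiku))
```

Changing one field at a time, such as the model ID, the Region, the account, or the presence of a project tag, shows which control blocks each request.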

Create a model provisioning role for the project scenario

In this section, we walk through the steps to create an IAM role with a trust policy and add the required inline policy to make sure that the models are limited to the approved ones.

Create the IAM role with a trust policy

Complete the following steps to create an IAM role with a trust policy:

- On the IAM console, in the navigation pane, choose Roles, then choose Create role.

- For Trusted entity type, select Custom trust policy.

- Delete the default policy in the editor and enter the following trust policy (replace account-id for the aws:SourceAccount field with your account ID):

- Choose Next.

- Skip the Add permissions page by choosing Next.

- Enter a name for the role (for example, SageMakerModelManagementRole) and an optional description, such as Role for managing Bedrock model access in SageMaker Unified Studio.

- Choose Create role.

Add the inline policy

Complete the following steps to add an inline policy:

- On the IAM console, open the details page of the newly created role.

- On the Permissions tab, choose Add permissions and then Create inline policy.

- On the JSON tab, delete the default policy and enter the following inline policy:

- Choose Review policy.

- Name the policy (for example, BedrockModelManagementPolicy) and choose Create policy.

Explanation of the policies

In this section, we discuss the details of the policies.

Trust policy explanation

This trust policy defines who can assume the IAM role:

- It allows the Amazon DataZone service to assume this role

- The service can perform the sts:AssumeRole and sts:SetContext actions

- A condition restricts this to requests where the source AWS account is your AWS account

This makes sure that only Amazon DataZone from your specific account can assume this role.

Inline policy explanation

This policy controls access to Amazon Bedrock inference profiles and models:

- It allows creating and managing application inference profiles (bedrock:CreateInferenceProfile, bedrock:TagResource, bedrock:DeleteInferenceProfile)

- It permits creating inference profiles only for the anthropic.claude-3-5-haiku-20241022-v1:0 model

- Access is controlled through several conditions:

  - Resources must be in the same AWS account as the principal making the request
  - Operations are restricted based on the AmazonDataZoneProject tag
  - Creating profiles requires proper tagging with AmazonDataZoneProject
  - Deleting profiles is only allowed for resources with the appropriate AmazonDataZoneProject tag
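The following is a hypothetical reconstruction of the project-scenario inline policy, based only on the description above. The CreateApplicationInferenceProfileUsingFoundationModels Sid is the statement name this post mentions. The other Sids are illustrative. The condition structure is also an assumption: a Null condition on aws:RequestTag/AmazonDataZoneProject with the value "false" requires the tag in create requests, and the same check on aws:ResourceTag gates deletes.

```python
def model_management_policy(model_id="anthropic.claude-3-5-haiku-20241022-v1:0"):
    """Hypothetical reconstruction of the project-scenario inline policy."""
    profile_arn = "arn:aws:bedrock:*:*:application-inference-profile/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Create and tag profiles only in the caller's account, and only
                # when the request carries an AmazonDataZoneProject tag.
                "Sid": "CreateTaggedProjectInferenceProfiles",  # illustrative Sid
                "Effect": "Allow",
                "Action": ["bedrock:CreateInferenceProfile", "bedrock:TagResource"],
                "Resource": profile_arn,
                "Condition": {
                    "StringEquals": {"aws:ResourceAccount": "${aws:PrincipalAccount}"},
                    "Null": {"aws:RequestTag/AmazonDataZoneProject": "false"},
                },
            },
            {
                # Sid named in this post; limits which model a profile may copy.
                "Sid": "CreateApplicationInferenceProfileUsingFoundationModels",
                "Effect": "Allow",
                "Action": ["bedrock:CreateInferenceProfile"],
                "Resource": f"arn:aws:bedrock:*::foundation-model/{model_id}",
            },
            {
                # Delete only profiles that already carry a project tag.
                "Sid": "DeleteProjectInferenceProfiles",  # illustrative Sid
                "Effect": "Allow",
                "Action": ["bedrock:DeleteInferenceProfile"],
                "Resource": profile_arn,
                "Condition": {
                    "StringEquals": {"aws:ResourceAccount": "${aws:PrincipalAccount}"},
                    "Null": {"aws:ResourceTag/AmazonDataZoneProject": "false"},
                },
            },
        ],
    }
```

Creating a profile touches two resources: the new profile and the source foundation model. Splitting the create permission across two statements lets the model allowlist change independently of the tagging rules.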

Combined effect on SageMaker Unified Studio domain project

Together, these policies create a secure, controlled environment for using Amazon Bedrock models in SageMaker Unified Studio domain projects through the following methods:

- Limiting model access – Only the specified Anthropic Claude 3.5 Haiku model can be used

- Enforcing proper tagging – Resources must be properly tagged with AmazonDataZoneProject identifiers

- Maintaining account isolation – Resources must be in the same account as the principal

- Implementing least privilege – Only specific actions (create, tag, delete) are permitted on inference profiles

- Providing project-level isolation – Access is restricted to inference profiles tagged with appropriate project identifiers

This implementation follows the principle of least privilege by providing only the minimum permissions needed for project-specific use cases, while maintaining proper security boundaries between different projects and making sure that only approved FMs can be accessed.

Configure a SageMaker Unified Studio domain to use the roles

Complete the following steps to create a SageMaker Unified Studio domain and configure it to use the roles you created:

- On the SageMaker console, choose the appropriate Region.

- Choose Create a Unified Studio domain and then choose Quick setup.

- For Name, enter a meaningful domain name.

- Scroll down to Generative AI resources.

- Under Model provisioning role, choose the model provisioning role you created in the previous section (for example, SageMakerModelManagementRole).

- Under Model consumption role, select Use a single existing role for all models and choose the model consumption role you created earlier (for example, DataZoneBedrock-Role).

- Complete the remaining steps according to your AWS IAM Identity Center configuration and create your domain.

Clean up

To avoid incurring future charges for the services used in SageMaker Unified Studio, log out of the SageMaker Unified Studio domain and then delete the domain.

Conclusion

In this post, we demonstrated how the SageMaker Unified Studio playground and SageMaker Unified Studio projects invoke large language models powered by Amazon Bedrock, and how enterprises can govern access to these models, whether you want to limit access to specific models or to every model from the service. You can combine the IAM policies shown in this post in the same IAM role to provide complete control. By following these guidelines, enterprises can make sure their use of generative AI models is both secure and aligned with organizational policies. This approach not only safeguards sensitive data but also empowers business analysts and data scientists to harness the full potential of AI within a controlled environment.

Now that your environment is configured with strong identity-based policies, we suggest reading the following posts to learn how Amazon SageMaker Unified Studio helps you innovate securely, quickly, and at scale with generative AI:

- An integrated experience for all your data and AI with Amazon SageMaker Unified Studio

- Unified scheduling for visual ETL flows and query books in Amazon SageMaker Unified Studio

- Foundational blocks of Amazon SageMaker Unified Studio: An admin’s guide to implement unified access to all your data, analytics, and AI

About the authors

Varun Jasti is a Solutions Architect at Amazon Web Services, working with AWS Partners to design and scale artificial intelligence solutions for public sector use cases to meet compliance standards. With a background in Computer Science, his work covers a broad range of ML use cases, primarily focusing on LLM training/inferencing and computer vision. In his spare time, he loves playing tennis and swimming.

Saptarshi Banarjee serves as a Senior Solutions Architect at AWS, collaborating closely with AWS Partners to design and architect mission-critical solutions. With a specialization in generative AI, AI/ML, serverless architecture, Next-Gen Developer Experience tools and cloud-based solutions, Saptarshi is dedicated to enhancing performance, innovation, scalability, and cost-efficiency for AWS Partners within the cloud ecosystem.

Jon Turdiev is a Senior Solutions Architect at Amazon Web Services, where he helps startup customers build well-architected products in the cloud. With over 20 years of experience creating innovative solutions in cybersecurity, AI/ML, healthcare, and Internet of Things (IoT), Jon brings deep technical expertise to his role. Previously, Jon founded Zehntec, a technology consulting company, and developed award-winning medical bedside terminals deployed in hospitals worldwide. Jon holds a Master’s degree in Computer Science and shares his knowledge through webinars, workshops, and as a judge at hackathons.

Lijan Kuniyil is a Senior Technical Account Manager at AWS. Lijan enjoys helping AWS enterprise customers build highly reliable and cost-effective systems with operational excellence. Lijan has over 25 years of experience in developing solutions for financial, healthcare and consulting companies.
