Amazon Bedrock Guardrails provides configurable safeguards to help build trusted generative AI applications at scale. It provides organizations with integrated safety and privacy safeguards that work across multiple foundation models (FMs), including models available in Amazon Bedrock, as well as models hosted outside Amazon Bedrock from other model providers and cloud providers. With the standalone ApplyGuardrail API, Amazon Bedrock Guardrails offers a model-agnostic and scalable approach to implementing responsible AI policies for your generative AI applications. Guardrails currently offers six key safeguards: content filters, denied topics, word filters, sensitive information filters, contextual grounding checks, and Automated Reasoning checks (preview), to help prevent unwanted content and align AI interactions with your organization’s responsible AI policies.

As organizations strive to implement responsible AI practices across diverse use cases, they face the challenge of balancing safety controls with varying performance and language requirements across different applications, making a one-size-fits-all approach ineffective. To address this, we’ve introduced safeguard tiers for Amazon Bedrock Guardrails, so you can choose appropriate safeguards based on your specific needs. For instance, a financial services company can implement comprehensive, multi-language protection for customer-facing AI assistants while using more focused, lower-latency safeguards for internal analytics tools, making sure each application upholds responsible AI principles with the right level of protection without compromising performance or functionality.

In this post, we introduce the new safeguard tiers available in Amazon Bedrock Guardrails, explain their benefits and use cases, and provide guidance on how to implement and evaluate them in your AI applications.

Solution overview

Until now, Amazon Bedrock Guardrails offered a single set of safeguards, tied to specific AWS Regions and a limited set of supported languages. The introduction of safeguard tiers in Amazon Bedrock Guardrails provides three key advantages for implementing AI safety controls:

- A tier-based approach that gives you control over which guardrail implementations you want to use for content filters and denied topics, so you can select the appropriate protection level for each use case. We provide more details about this in the following sections.

- Cross-Region Inference Support (CRIS) for Amazon Bedrock Guardrails, so you can use compute capacity across multiple Regions, achieving better scaling and availability for your guardrails. With this, your requests get automatically routed during guardrail policy evaluation to the optimal Region within your geography, maximizing available compute resources and model availability. This helps maintain guardrail performance and reliability when demand increases. There’s no additional cost for using CRIS with Amazon Bedrock Guardrails, and you can select from specific guardrail profiles for controlling model versioning and future upgrades.

- Advanced capabilities as a configurable tier option for use cases where more robust protection or broader language support are critical priorities, and where you can accommodate a modest latency increase.

Safeguard tiers are applied at the guardrail policy level, specifically for content filters and denied topics. You can tailor your protection strategy for different aspects of your AI application. Let’s explore the two available tiers:

- Classic tier (default):

  - Maintains the existing behavior of Amazon Bedrock Guardrails

  - Limited language support: English, French, and Spanish

  - Does not require CRIS for Amazon Bedrock Guardrails

  - Optimized for lower-latency applications

- Standard tier:

  - Provided as a new capability that you can enable for existing or new guardrails

  - Multilingual support for more than 60 languages

  - Enhanced robustness against prompt typos and manipulated inputs

  - Enhanced prompt attack protection covering modern jailbreak and prompt injection techniques, including token smuggling, AutoDAN, and many-shot, among others

  - Enhanced topic detection with improved understanding and handling of complex topics

  - Requires the use of CRIS for Amazon Bedrock Guardrails and might have a modest increase in latency profile compared to the Classic tier option

You can select each tier independently for content filters and denied topics policies, allowing for mixed configurations within the same guardrail, as illustrated in the following hierarchy. With this flexibility, companies can implement the right level of protection for each specific application.

- Policy: Content filters

  - Tier: Classic or Standard

- Policy: Denied topics

  - Tier: Classic or Standard

- Other policies: Word filters, sensitive information filters, contextual grounding checks, and Automated Reasoning checks (preview)

To illustrate how these tiers can be applied, consider a global financial services company deploying AI in both customer-facing and internal applications:

- For their customer service AI assistant, they might choose the Standard tier for both content filters and denied topics, to provide comprehensive protection across many languages.

- For internal analytics tools, they could use the Classic tier for content filters prioritizing low latency, while implementing the Standard tier for denied topics to provide robust protection against sensitive financial information disclosure.

You can configure the safeguard tiers for content filters and denied topics in each guardrail through the AWS Management Console, or programmatically through the Amazon Bedrock SDK and APIs. You can use a new or existing guardrail. For information on how to create or modify a guardrail, see Create your guardrail.

Your existing guardrails are automatically set to the Classic tier by default, so their behavior does not change.

Quality enhancements with the Standard tier

According to our tests, the new Standard tier improves harmful content filtering recall by more than 15%, with a gain of more than 7% in balanced accuracy, compared to the Classic tier. A key differentiating feature of the new Standard tier is its multilingual support, maintaining strong performance with over 78% recall and over 88% balanced accuracy for the 14 most common languages.

The enhancements in protective capabilities extend across several other aspects. For example, content filters for prompt attacks in the Standard tier show a 30% improvement in recall and a 16% gain in balanced accuracy compared to the Classic tier, while maintaining a lower false positive rate. For denied topic detection, the new Standard tier delivers a 32% increase in recall, resulting in an 18% improvement in balanced accuracy.

These substantial improvements in detection capabilities for Amazon Bedrock Guardrails, combined with consistently low false positive rates and robust multilingual performance, also represent a significant advancement in content protection technology compared to other commonly available solutions. The multilingual improvements are particularly noteworthy, with the new Standard tier in Amazon Bedrock Guardrails showing consistent performance gains of 33–49% in recall across different language evaluations compared to competitors’ options.

Benefits of safeguard tiers

Different AI applications have distinct safety requirements based on their audience, content domain, and geographic reach. For example:

- Customer-facing applications often require stronger protection against potential misuse compared to internal applications

- Applications serving global customers need guardrails that work effectively across many languages

- Internal enterprise tools might prioritize controlling specific topics in just a few primary languages

The combination of the safeguard tiers with CRIS for Amazon Bedrock Guardrails also addresses various operational needs with practical benefits that go beyond feature differences:

- Independent policy evolution – Each policy (content filters or denied topics) can evolve at its own pace without disrupting the entire guardrail system. You can configure these with specific guardrail profiles in CRIS for controlling model versioning in the models powering your guardrail policies.

- Controlled adoption – You decide when and how to adopt new capabilities, maintaining stability for production applications. You can continue to use Amazon Bedrock Guardrails with your previous configurations without changes and only move to the new tiers and CRIS configurations when you consider it appropriate.

- Resource efficiency – You can implement enhanced protections only where needed, balancing security requirements with performance considerations.

- Simplified migration path – When new capabilities become available, you can evaluate and integrate them gradually by policy area rather than facing all-or-nothing choices. This also simplifies testing and comparison mechanisms such as A/B testing or blue/green deployments for your guardrails.

This approach helps organizations balance their specific protection requirements with operational considerations in a more nuanced way than a single-option system could provide.

Configure safeguard tiers on the Amazon Bedrock console

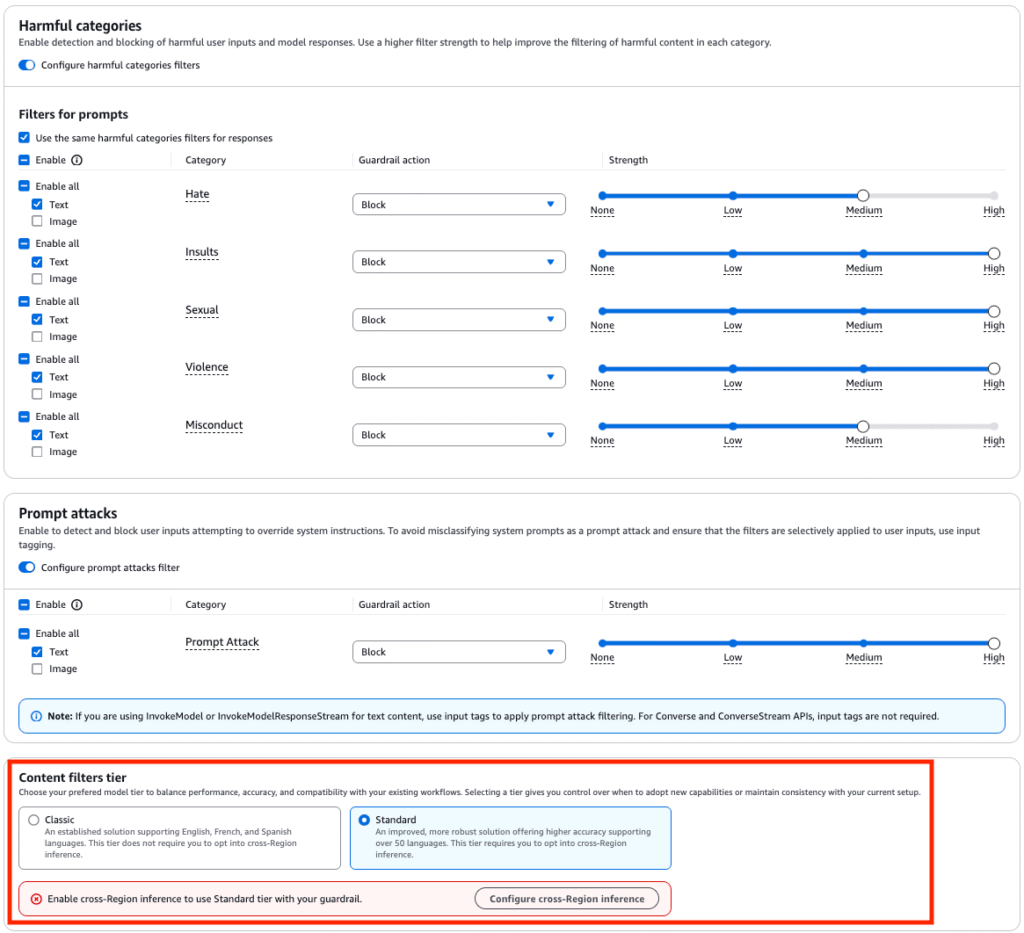

On the Amazon Bedrock console, you can configure the safeguard tiers for your guardrail in the Content filters tier or Denied topics tier sections by selecting your preferred tier.

Using the new Standard tier requires enabling cross-Region inference for Amazon Bedrock Guardrails and selecting a guardrail profile.

Configure safeguard tiers using the AWS SDK

You can also configure the guardrail’s tiers using the AWS SDK. The following is an example to get started with the Python SDK:
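A minimal sketch of such a configuration with boto3 might look like the following. It assumes the `tierConfig` and `crossRegionConfig` request fields discussed in this post. The guardrail name, topic definition, blocked messages, Region, and the `us.guardrail.v1:0` profile identifier are illustrative placeholders, and the helper function names are hypothetical.

```python
def build_guardrail_request(name, profile_id,
                            content_tier="STANDARD", topic_tier="STANDARD"):
    """Build keyword arguments for CreateGuardrail (illustrative values only)."""
    request = {
        "name": name,
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't provide that response.",
        # Content filters policy with its own tier
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": "VIOLENCE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ],
            "tierConfig": {"tierName": content_tier},
        },
        # Denied topics policy with its own, independently chosen tier
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    "name": "Investment advice",  # placeholder topic
                    "definition": "Providing specific investment recommendations.",
                    "type": "DENY",
                }
            ],
            "tierConfig": {"tierName": topic_tier},
        },
    }
    # The Standard tier requires cross-Region inference; Classic does not.
    if "STANDARD" in (content_tier, topic_tier):
        request["crossRegionConfig"] = {"guardrailProfileIdentifier": profile_id}
    return request


def create_guardrail(request, region_name="us-east-1"):
    """Send the request to Amazon Bedrock (needs boto3 and AWS credentials)."""
    import boto3  # imported here so the builder above works without boto3

    client = boto3.client("bedrock", region_name=region_name)
    response = client.create_guardrail(**request)
    return response["guardrailId"], response["version"]
```

For example, `build_guardrail_request("analytics-guardrail", "us.guardrail.v1:0", content_tier="CLASSIC", topic_tier="STANDARD")` produces the mixed configuration described earlier for internal analytics tools.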

Within a given guardrail, the content filter and denied topic policies can each be configured with their own tier, giving you granular control over how guardrails behave. For example, you might choose the Standard tier for content filtering while keeping denied topics in the Classic tier, based on your specific requirements.

To migrate an existing guardrail configuration to the Standard tier, add a crossRegionConfig section and a tierConfig section (inside each policy you want to move) to your current guardrail definition. You can do this using the UpdateGuardrail API, or create a new guardrail with the CreateGuardrail API.
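If you keep your guardrail definition as a request dictionary in code, the migration can be sketched as a small helper that adds both sections without touching the rest. The helper name `with_standard_tier` and the profile identifier in the usage comment are hypothetical, and the sketch assumes the definition uses the request field names `contentPolicyConfig` and `topicPolicyConfig`.

```python
import copy


def with_standard_tier(definition, profile_id,
                       policies=("contentPolicyConfig", "topicPolicyConfig")):
    """Return a copy of a guardrail definition moved to the Standard tier."""
    updated = copy.deepcopy(definition)  # leave the caller's definition untouched
    for key in policies:
        if key in updated:
            updated[key]["tierConfig"] = {"tierName": "STANDARD"}
    # The Standard tier requires a cross-Region guardrail profile.
    updated["crossRegionConfig"] = {"guardrailProfileIdentifier": profile_id}
    return updated


# Usage (requires boto3 and AWS credentials; identifiers are placeholders):
#   client = boto3.client("bedrock")
#   client.update_guardrail(
#       guardrailIdentifier="<your-guardrail-id>",
#       **with_standard_tier(current_definition, "us.guardrail.v1:0"),
#   )
```

Passing `policies=("topicPolicyConfig",)` would move only denied topics to the Standard tier and leave content filters as they are.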

Evaluating your guardrails

To thoroughly evaluate your guardrails’ performance, consider creating a test dataset that includes the following:

- Safe examples – Content that should pass through guardrails

- Harmful examples – Content that should be blocked

- Edge cases – Content that tests the boundaries of your policies

- Examples in multiple languages – Especially important when using the Standard tier

You can also rely on openly available datasets for this purpose. Ideally, your dataset should be labeled with the expected response for each case for assessing accuracy and recall of your guardrails.

With your dataset ready, you can use the Amazon Bedrock ApplyGuardrail API as shown in the following example to efficiently test your guardrail’s behavior for user inputs without invoking FMs. This way, you can save the costs associated with the large language model (LLM) response generation.
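One way to sketch this test loop is shown below. The function name `check_inputs` is hypothetical, and the client is passed in as a parameter so the loop can be exercised with a stub. In practice it would be a boto3 `bedrock-runtime` client.

```python
def check_inputs(runtime_client, guardrail_id, guardrail_version, prompts):
    """Return one boolean per prompt: True if the guardrail intervened."""
    blocked = []
    for prompt in prompts:
        response = runtime_client.apply_guardrail(
            guardrailIdentifier=guardrail_id,
            guardrailVersion=guardrail_version,
            source="INPUT",  # evaluate the text as a user input
            content=[{"text": {"text": prompt}}],
        )
        # "action" is GUARDRAIL_INTERVENED when a policy matched, otherwise NONE
        blocked.append(response["action"] == "GUARDRAIL_INTERVENED")
    return blocked


# Usage (requires boto3 and AWS credentials; identifiers are placeholders):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
#   results = check_inputs(runtime, "<your-guardrail-id>", "DRAFT", test_prompts)
```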

Later, you can repeat the process for the outputs of the LLMs if needed. For this, you can use the ApplyGuardrail API if you want an independent evaluation for models in AWS or outside in another provider, or you can directly use the Converse API if you intend to use models in Amazon Bedrock. When using the Converse API, the inputs and outputs are evaluated with the same invocation request, optimizing latency and reducing coding overheads.
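For the Converse API path, a hedged sketch of attaching a guardrail to the request and checking the result follows. The helper names are hypothetical and the model and guardrail identifiers are placeholders you would replace with your own.

```python
def build_converse_request(model_id, prompt, guardrail_id, guardrail_version):
    """Build keyword arguments for Converse with a guardrail attached."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Input and output are both evaluated within this one invocation
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
            "trace": "enabled",  # include guardrail assessment details
        },
    }


def was_blocked(converse_response):
    """True if the guardrail stopped the request or the response."""
    return converse_response.get("stopReason") == "guardrail_intervened"


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   runtime = boto3.client("bedrock-runtime")
#   response = runtime.converse(**build_converse_request(
#       "<model-id>", "Hello", "<your-guardrail-id>", "1"))
#   print(was_blocked(response))
```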

Because your dataset is labeled, you can directly implement a mechanism for assessing the accuracy, recall, and potential false negatives or false positives through the use of libraries like SKLearn Metrics:
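The sketch below computes these metrics by hand so that it runs without extra dependencies. It is a stand-in rather than the scikit-learn approach itself: `sklearn.metrics` provides `recall_score`, `balanced_accuracy_score`, and `confusion_matrix` for the same purpose. The function name and the boolean label convention (True means "should be blocked" or "was blocked") are assumptions for illustration.

```python
def guardrail_metrics(expected_blocked, actual_blocked):
    """Compare labeled expectations with guardrail decisions (booleans)."""
    pairs = list(zip(expected_blocked, actual_blocked))
    tp = sum(1 for exp, act in pairs if exp and act)          # harmful, blocked
    tn = sum(1 for exp, act in pairs if not exp and not act)  # safe, allowed
    fp = sum(1 for exp, act in pairs if not exp and act)      # safe, blocked
    fn = sum(1 for exp, act in pairs if exp and not act)      # harmful, allowed

    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
        "recall": recall,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Balanced accuracy is the mean of recall on each class
        "balanced_accuracy": (recall + specificity) / 2,
        "false_positives": fp,
        "false_negatives": fn,
    }
```

Running the same labeled dataset through a Classic-tier and a Standard-tier guardrail and comparing the two result dictionaries gives a like-for-like view of recall and false positives for your own data.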

Alternatively, if you don’t have labeled data or your use cases have subjective responses, you can also rely on mechanisms such as LLM-as-a-judge, where you pass the inputs and the guardrails’ evaluation outputs to an LLM that assigns a score based on your own predefined criteria. For more information, see Automate building guardrails for Amazon Bedrock using test-driven development.

Best practices for implementing tiers

We recommend considering the following aspects when configuring your tiers for Amazon Bedrock Guardrails:

- Start with staged testing – Test both tiers with a representative sample of your expected inputs and responses before making broad deployment decisions.

- Consider your language requirements – If your application serves users in multiple languages, the Standard tier’s expanded language support might be essential.

- Balance safety and performance – Evaluate both the accuracy improvements and latency differences to make informed decisions. Consider whether you can afford a few additional milliseconds of latency for improved robustness with the Standard tier, or prefer a latency-optimized option for more straightforward evaluations with the Classic tier.

- Use policy-level tier selection – Take advantage of the ability to select different tiers for different policies to optimize your guardrails. You can choose separate tiers for content filters and denied topics, while combining with the rest of the policies and features available in Amazon Bedrock Guardrails.

- Remember cross-Region requirements – The Standard tier requires cross-Region inference, so make sure your architecture and compliance requirements can accommodate this. With CRIS, your request originates from the Region where your guardrail is deployed, but it might be served from a different Region among those included in the guardrail profile, to optimize latency and availability.

Conclusion

The introduction of safeguard tiers in Amazon Bedrock Guardrails represents a significant step forward in our commitment to responsible AI. By providing flexible, powerful, and evolving safety tools for generative AI applications, we’re empowering organizations to implement AI solutions that are not only innovative but also ethical and trustworthy. This capabilities-based approach enables you to tailor your responsible AI practices to each specific use case. You can now implement the right level of protection for different applications while creating a path for continuous improvement in AI safety and ethics.

The new Standard tier delivers significant improvements in multilingual support and detection accuracy, making it an ideal choice for many applications, especially those serving diverse global audiences or requiring enhanced protection. This aligns with responsible AI principles by making sure AI systems are fair and inclusive across different languages and cultures. Meanwhile, the Classic tier remains available for use cases prioritizing low latency or those with simpler language requirements, allowing organizations to balance performance with protection as needed.

By offering these customizable protection levels, we’re supporting organizations in their journey to develop and deploy AI responsibly. This approach helps make sure that AI applications are not only powerful and efficient but also align with organizational values, comply with regulations, and maintain user trust.

To learn more about safeguard tiers in Amazon Bedrock Guardrails, refer to Detect and filter harmful content by using Amazon Bedrock Guardrails, or visit the Amazon Bedrock console to create your first tiered guardrail.

About the Authors

Koushik Kethamakka is a Senior Software Engineer at AWS, focusing on AI/ML initiatives. At Amazon, he led real-time ML fraud prevention systems for Amazon.com before moving to AWS to lead development of AI/ML services like Amazon Lex and Amazon Bedrock. His expertise spans product and system design, LLM hosting, evaluations, and fine-tuning. Recently, Koushik’s focus has been on LLM evaluations and safety, leading to the development of products like Amazon Bedrock Evaluations and Amazon Bedrock Guardrails. Prior to joining Amazon, Koushik earned his MS from the University of Houston.

Hang Su is a Senior Applied Scientist at AWS AI. He has been leading the Amazon Bedrock Guardrails Science team. His interest lies in AI safety topics, including harmful content detection, red-teaming, sensitive information detection, among others.

Shyam Srinivasan is on the Amazon Bedrock product team. He cares about making the world a better place through technology and loves being part of this journey. In his spare time, Shyam likes to run long distances, travel around the world, and experience new cultures with family and friends.

Aartika Sardana Chandras is a Senior Product Marketing Manager for AWS Generative AI solutions, with a focus on Amazon Bedrock. She brings over 15 years of experience in product marketing, and is dedicated to empowering customers to navigate the complexities of the AI lifecycle. Aartika is passionate about helping customers leverage powerful AI technologies in an ethical and impactful manner.

Satveer Khurpa is a Sr. WW Specialist Solutions Architect, Amazon Bedrock at Amazon Web Services, specializing in Amazon Bedrock security. In this role, he uses his expertise in cloud-based architectures to develop innovative generative AI solutions for clients across diverse industries. Satveer’s deep understanding of generative AI technologies and security principles allows him to design scalable, secure, and responsible applications that unlock new business opportunities and drive tangible value while maintaining robust security postures.

Antonio Rodriguez is a Principal Generative AI Specialist Solutions Architect at Amazon Web Services. He helps companies of all sizes solve their challenges, embrace innovation, and create new business opportunities with Amazon Bedrock. Apart from work, he loves to spend time with his family and play sports with his friends.
