The Challenge of Fine-Tuning Large Transformer Models

Self-attention enables transformer models to capture long-range dependencies in text, which is crucial for comprehending complex language patterns. These models work efficiently with massive datasets and achieve remarkable performance without needing task-specific structures. As a result, they are widely applied across industries, including software development, education, and content generation.

A key limitation in applying these powerful models is the reliance on supervised fine-tuning. Adapting a base transformer to a specific task typically involves retraining the model with labeled data, which demands significant computational resources, sometimes amounting to thousands of GPU hours. This presents a major barrier for organizations that lack access to such hardware or seek quicker adaptation times. Consequently, there is a pressing need for methods that can elicit task-specific capabilities from pre-trained transformers without modifying their parameters.

Inference-Time Prompting as an Alternative to Fine-Tuning

To address this issue, researchers have explored inference-time techniques that guide the model’s behavior using example-based inputs, bypassing the need for parameter updates. Among these methods, in-context learning has emerged as a practical approach in which a model receives a sequence of input-output pairs and then generates predictions for new inputs. Unlike traditional training, these techniques operate entirely during inference, so the base model exhibits the desired behavior based on context alone. Despite their promise, there have been few formal guarantees that such techniques can consistently match fine-tuned performance.
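As a rough illustration of the in-context learning setup described above, the sketch below assembles a few-shot prompt from input-output pairs and an unlabeled query. The function name `build_icl_prompt`, the `Input:`/`Output:` template, and the example reviews are all assumptions made for illustration; they are not taken from the paper.

```python
from typing import List, Tuple


def build_icl_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Concatenate labeled input-output pairs, then append the unlabeled query.

    The "Input:/Output:" template is a hypothetical choice, not the paper's.
    """
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The final block leaves the output empty so the model completes it.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Made-up demonstration pairs, for illustration only.
demos = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
prompt = build_icl_prompt(demos, "Setup was quick and painless.")
print(prompt)
```

The resulting string would be passed to the base model unchanged; no parameters are updated at any point.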

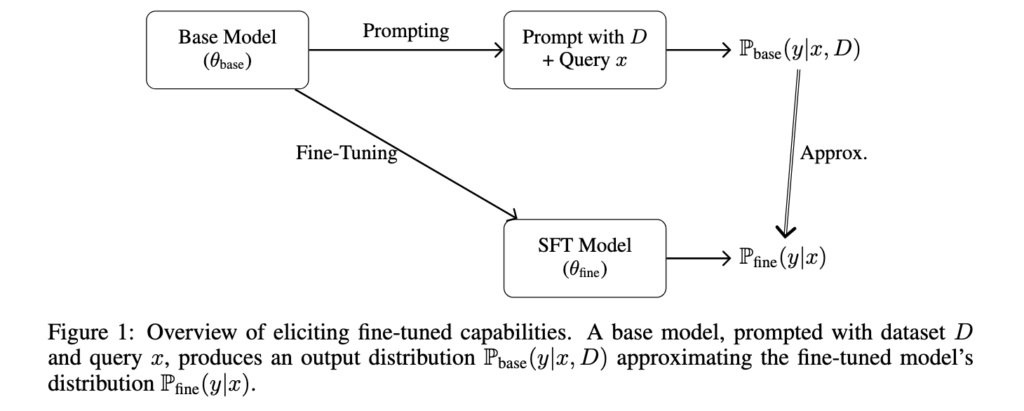

Theoretical Framework: Approximating Fine-Tuned Models via In-Context Learning

Researchers from Patched Codes, Inc. introduced a method grounded in the Turing completeness of transformers, demonstrating that a base model can approximate the behavior of a fine-tuned model using in-context learning, provided sufficient computational resources and access to the original training dataset. Their theoretical framework offers a quantifiable approach to understanding how dataset size, context length, and task complexity influence the quality of the approximation. The analysis specifically examines two task types—text generation and linear classification—and establishes bounds on dataset requirements to achieve fine-tuned-like outputs with a defined error margin.

Prompt Design and Theoretical Guarantees

The method involves designing a prompt structure that concatenates a dataset of labeled examples with a target query. The model processes this sequence, drawing patterns from the examples to generate a response. For instance, a prompt could include input-output pairs like sentiment-labeled reviews, followed by a new review whose sentiment must be predicted. The researchers constructed this process as a simulation of a Turing machine, where self-attention mimics the tape state and feed-forward layers act as transition rules. They also formalized conditions under which the total variation distance between the base and fine-tuned output distributions remains within an acceptable error ε. The paper provides a construction for this inference technique and quantifies its theoretical performance.
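To make the error criterion concrete, here is a minimal sketch of the total variation distance between two discrete output distributions, checked against a tolerance ε. The two distributions are invented numbers for illustration, not results from the paper, and the helper name `total_variation` is an assumption.

```python
from typing import Dict


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """TV(p, q) = 0.5 * sum over outcomes of |p(t) - q(t)|."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in support)


# Hypothetical output distributions, made up for illustration only.
base_with_prompt = {"positive": 0.70, "negative": 0.25, "neutral": 0.05}
fine_tuned = {"positive": 0.75, "negative": 0.20, "neutral": 0.05}

epsilon = 0.1  # assumed tolerance
tv = total_variation(base_with_prompt, fine_tuned)
print(tv <= epsilon)
```

A distance of 0 means the two distributions are identical, and a distance of 1 means they place their mass on disjoint outcomes.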

Quantitative Results: Dataset Size and Task Complexity

The researchers provided performance guarantees based on dataset size and task type. For text generation tasks with vocabulary size V, a dataset of size $O\left(\frac{mV}{\epsilon^2}\log\frac{1}{\delta}\right)$ is enough for the base model to approximate the fine-tuned model within an error ε across m contexts. When the output length is fixed at l, a smaller dataset of size $O\left(\frac{l \log V}{\epsilon^2}\log\frac{1}{\delta}\right)$ suffices. For linear classification tasks where the input has dimension d, the required dataset size becomes $O\left(\frac{d}{\epsilon}\right)$, or, with context constraints, $O\left(\frac{1}{\epsilon^2}\log\frac{1}{\delta}\right)$. These results hold under idealized assumptions; the paper also adapts them to practical constraints such as finite context length and partial dataset availability, using techniques such as retrieval-augmented generation.
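The scaling behavior of these bounds can be explored with a small calculator. This is only a sketch: big-O notation hides constant factors, so the constant `c = 1.0` below is an arbitrary assumption and the returned numbers are meaningful only for comparing how the bounds grow, not as real dataset sizes. The function names are hypothetical.

```python
import math


def textgen_samples(m: int, V: int, eps: float, delta: float, c: float = 1.0) -> int:
    """Scaling of (m * V / eps^2) * log(1 / delta); c is an assumed constant."""
    return math.ceil(c * m * V / eps**2 * math.log(1 / delta))


def textgen_fixed_length_samples(l: int, V: int, eps: float, delta: float, c: float = 1.0) -> int:
    """Scaling of (l * log V / eps^2) * log(1 / delta) for fixed output length l."""
    return math.ceil(c * l * math.log(V) / eps**2 * math.log(1 / delta))


def linear_samples(d: int, eps: float, c: float = 1.0) -> int:
    """Scaling of d / eps for linear classification with input dimension d."""
    return math.ceil(c * d / eps)


def linear_constrained_samples(eps: float, delta: float, c: float = 1.0) -> int:
    """Scaling of (1 / eps^2) * log(1 / delta) under context constraints."""
    return math.ceil(c / eps**2 * math.log(1 / delta))


# Illustrative parameter values, chosen arbitrarily.
print(textgen_samples(m=10, V=50_000, eps=0.25, delta=0.05))
print(textgen_fixed_length_samples(l=20, V=50_000, eps=0.25, delta=0.05))
print(linear_samples(d=100, eps=0.25))
print(linear_constrained_samples(eps=0.25, delta=0.05))
```

Halving ε roughly quadruples the bounds that depend on 1/ε², while the fixed-length text generation bound grows only logarithmically in the vocabulary size.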

Implications: Towards Efficient and Scalable NLP Models

This research argues that inference-time prompting can closely match the capabilities of supervised fine-tuning, provided sufficient contextual data is supplied. It points toward more resource-efficient deployment of large language models, offering both a theoretical justification and practical techniques. The results suggest that leveraging a model’s latent capabilities through structured prompts is viable and, in principle, scalable for specific NLP tasks, subject to the assumptions stated in the paper.


The post From Fine-Tuning to Prompt Engineering: Theory and Practice for Efficient Transformer Adaptation appeared first on MarkTechPost.
