According to the World Health Organization, more than 2.2 billion people globally have vision impairment. For compliance with disability legislation, such as the Americans with Disabilities Act (ADA) in the United States, media in visual formats like television shows or movies are required to provide accessibility to visually impaired people. This often comes in the form of audio description tracks that narrate the visual elements of the film or show. According to the International Documentary Association, creating audio descriptions can cost $25 per minute (or more) when using third parties. For building audio descriptions internally, the effort for businesses in the media industry can be significant, requiring content creators, audio description writers, description narrators, audio engineers, delivery vendors and more according to the American Council of the Blind (ACB). This leads to the natural question, can you automate this process with the help of generative AI offerings in Amazon Web Services (AWS)?

Announced in December at re:Invent 2024, the Amazon Nova family of foundation models is available through Amazon Bedrock and includes three multimodal foundation models (FMs):

- Amazon Nova Lite (GA) – A low-cost multimodal model that’s lightning-fast for processing image, video, and text inputs

- Amazon Nova Pro (GA) – A highly capable multimodal model with a balanced combination of accuracy, speed, and cost for a wide range of tasks

- Amazon Nova Premier (GA) – Our most capable model for complex tasks and a teacher for model distillation

In this post, we demonstrate how you can use services like Amazon Nova, Amazon Rekognition, and Amazon Polly to automate the creation of accessible audio descriptions for video content. This approach can significantly reduce the time and cost required to make videos accessible for visually impaired audiences. However, this post doesn’t provide a complete, deployment-ready solution. We share pseudocode snippets and guidance in sequential order, in addition to detailed explanations and links to resources. For a complete script, you can use additional resources, such as Amazon Q Developer, to build a fully functional system. The automated workflow described in the post involves analyzing video content, generating text descriptions, and narrating them using AI voice generation. In summary, while powerful, this requires careful integration and testing to deploy effectively. By the end of this post, you’ll understand the key steps, but some additional work is needed to create a production-ready solution for your specific use case.

Solution overview

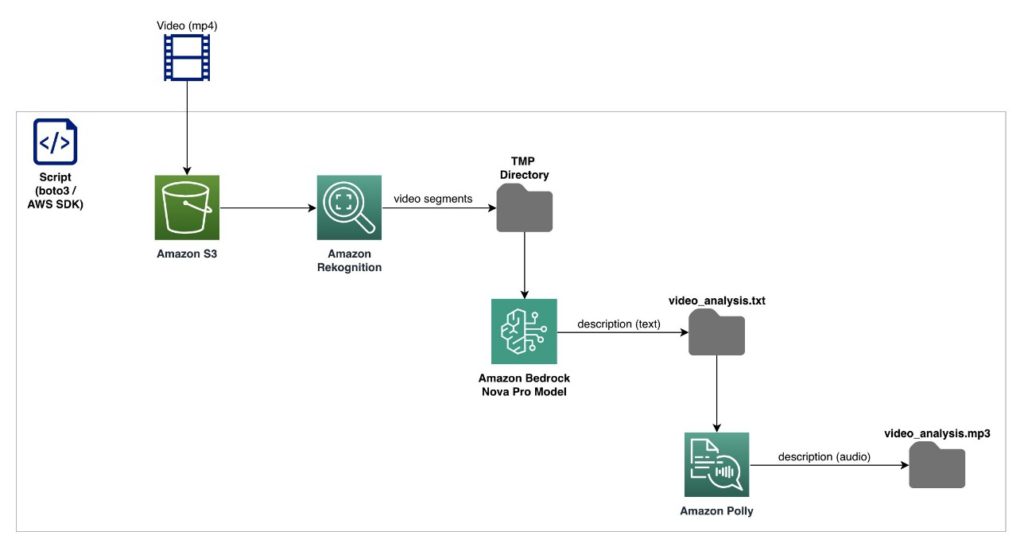

The following architecture diagram demonstrates the end-to-end workflow of the proposed solution. We will describe each component in-depth in the later sections of this post, but note that you can define the logic within a single script. You can then run your script on an Amazon Elastic Compute Cloude (Amazon EC2) instance or on your local computer. For this post, we assume that you will run the script on an Amazon SageMaker notebook.

Services used

The services shown in the architecture diagram include:

- Amazon S3 – Amazon Simple Storage Service (Amazon S3) is an object storage service that provides scalable, durable, and highly available storage. In this example, we use Amazon S3 to store the video files (input) and scene description (text files) and audio description (MP3 files) output generated by the solution. The script starts by fetching the source video from an S3 bucket.

- Amazon Rekognition – Amazon Rekognition is a computer vision service that can detect and extract video segments or scenes by identifying technical cues such as shot boundaries, black frames, and other visual elements. To yield higher accuracy for the generated video descriptions, you use Amazon Rekognition to segment the source video into smaller chunks before passing it to Amazon Nova. These video segments can be stored in a temporary directory on your compute machine.

- Amazon Bedrock – Amazon Bedrock is a managed service that provides access to large, pre-trained AI models such as the Amazon Nova Pro model, which is used in this solution to analyze the content of each video segment and generate detailed scene descriptions. You can store these text descriptions in a text file (for example, video_analysis.txt).

- Amazon Polly – Amazon Polly is a text-to-speech service that is used to convert the text descriptions generated by the Amazon Nova Pro model into high-quality audio, made available using an MP3 file.

Prerequisites

To follow along with the solution outlined in this post, you should have the following in place:

- A video file. For this post, we use a public domain video, This is Coffee.

- An AWS account with access to the following services:

- Amazon Rekognition

- Amazon Nova Pro

- Amazon S3

- Amazon Polly

- Configure your AWS Command Line Interface (AWS CLI) or environment with valid credentials (using aws configure or environment variables)

- To write the script, you need access to an AWS Software Development Kit (AWS SDK) in the language of your choice. In this post, we assume that you will use the AWS SDK for Python (Boto3). Additional information on AWS SDK for Boto3 is available in the Quickstart for Boto3.

You can use AWS SDK to create, configure, and manage AWS services. For Boto3, you can include it at the top of your script using: import boto3

Additionally, you need a mechanism to split videos. If you’re using Python, we recommend the moviepy library.

import moviepy # pip install moviepy

Solution walkthrough

The solution includes the following steps, which you can use as a starting structure and customize or expand to fit your use case.

- Define the requirements for the AWS environment, including defining the use of the Amazon Nova Pro model for its visual support and the AWS Region you’re working in. For optimal throughput, we recommend using inference profiles when configuring Amazon Bedrock to invoke the Amazon Nova Pro model. Initialize a client for Amazon Rekognition, which you use for its support of segmentation.

- Define a function for detecting segments in the video. Amazon Rekognition supports segmentation, which means users have the option to detect and extract different segments or scenes within a video. By using the Amazon Rekognition Segment API, you can perform the following:

- Detect technical cues such as black frames, color bars, opening and end credits, and studio logos in a video.

- Detect shot boundaries to identify the start, end, and duration of individual shots within the video.

The solution uses Amazon Rekognition to partition the video into multiple segments and perform Amazon Nova Pro-based inference on each segment. Finally, you can piece together each segment’s inference output to return a comprehensive audio description for the entire video.

In the preceding image, there are two scenes: a screenshot of one scene on the left followed by the scene that immediately follows it on the right. With the Amazon Rekognition segmentation API, you can identify that the scene has changed—that the content that is displayed on screen is different—and therefore you need to generate a new scene description.
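The environment setup from the first step can be sketched as follows. This is a minimal sketch, not a definitive implementation: the PipelineConfig class, the create_clients helper, the bucket name, and the object key are hypothetical names introduced for illustration, and the inference profile ID shown for Amazon Nova Pro is an assumption that you should confirm in the Amazon Bedrock console for your Region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineConfig:
    """Settings shared by every step of the script (hypothetical helper)."""

    region: str = "us-east-1"              # assumption: use your own Region
    # Assumed cross-Region inference profile ID for Amazon Nova Pro (US).
    model_id: str = "us.amazon.nova-pro-v1:0"
    bucket: str = "amzn-s3-demo-bucket"    # hypothetical bucket name
    video_key: str = "coffee.mp4"          # hypothetical object key
    min_segment_confidence: float = 80.0   # assumption: tune per video


def create_clients(config: PipelineConfig) -> dict:
    """Create one Boto3 client per service used in the walkthrough."""
    import boto3  # pip install boto3

    session = boto3.Session(region_name=config.region)
    return {
        "s3": session.client("s3"),
        "rekognition": session.client("rekognition"),
        "bedrock": session.client("bedrock-runtime"),
        "polly": session.client("polly"),
    }
```

Keeping the settings in one immutable object makes it easier to switch Regions or models without touching the rest of the script. Calling create_clients(PipelineConfig()) requires valid AWS credentials in your environment.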

- Create the segmentation job and:

- Upload the video file for which you want to create an audio description to Amazon S3.

- Start the job using that video.

Setting SegmentTypes=['SHOT'] identifies the start, end, and duration of a scene. Additionally, MinSegmentConfidence sets the minimum confidence Amazon Rekognition must have to return a detected segment, with 0 being lowest confidence and 100 being highest.
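A segmentation job along these lines might look like the following sketch. It assumes a Boto3 Amazon Rekognition client is passed in (for example, boto3.client("rekognition")) and that the video is already in Amazon S3. The function names start_shot_detection and wait_for_segments, the polling interval, and the confidence default are assumptions made for illustration.

```python
import time


def start_shot_detection(rekognition, bucket, key, min_confidence=80.0):
    """Start an asynchronous shot-detection job and return its job ID."""
    response = rekognition.start_segment_detection(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        SegmentTypes=["SHOT"],
        Filters={"ShotFilter": {"MinSegmentConfidence": min_confidence}},
    )
    return response["JobId"]


def wait_for_segments(rekognition, job_id, poll_seconds=10):
    """Poll until the job finishes, then return (start, end) pairs in seconds."""
    while True:
        response = rekognition.get_segment_detection(JobId=job_id)
        status = response["JobStatus"]
        if status != "IN_PROGRESS":
            break
        time.sleep(poll_seconds)

    if status != "SUCCEEDED":
        message = response.get("StatusMessage", "no status message")
        raise RuntimeError(f"Segment detection ended as {status}: {message}")

    # Results can span several pages; follow NextToken until it disappears.
    segments = list(response.get("Segments", []))
    while "NextToken" in response:
        response = rekognition.get_segment_detection(
            JobId=job_id, NextToken=response["NextToken"]
        )
        segments.extend(response.get("Segments", []))

    return [
        (seg["StartTimestampMillis"] / 1000, seg["EndTimestampMillis"] / 1000)
        for seg in segments
        if seg["Type"] == "SHOT"
    ]
```

Polling keeps the sketch in a single script. For longer videos, you might prefer to have Amazon Rekognition publish a completion notification instead of polling.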

- Use the analyze_chunk function. This function defines the main logic of the audio description solution. Some items to note about analyze_chunk:

- For this example, we sent a video scene to Amazon Nova Pro for an analysis of the contents using the prompt Describe what is happening in this video in detail. This prompt is relatively straightforward, and experimentation or customization for your use case is encouraged. Amazon Nova Pro then returned the text description for our video scene.

- For longer videos with many scenes, you might encounter throttling. This is resolved by implementing a retry mechanism. For details on throttling and quotas for Amazon Bedrock, see Quotas for Amazon Bedrock.

In effect, the raw scenes are converted into rich, descriptive text. Using this text, you can generate a complete scene-by-scene walkthrough of the video and send it to Amazon Polly for audio.
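One possible shape for analyze_chunk is sketched below. It assumes the Amazon Bedrock Converse API with the video segment passed inline as MP4 bytes, and it retries throttled calls with exponential backoff and jitter. The parameter names, the retry settings, and the inference configuration values are assumptions chosen for illustration. Inline video payloads are subject to a request size limit, so very long segments might need to be referenced from Amazon S3 instead.

```python
import random
import time

PROMPT = "Describe what is happening in this video in detail."


def analyze_chunk(bedrock, model_id, video_bytes, prompt=PROMPT,
                  max_attempts=5, base_delay=2.0):
    """Send one video segment to the model and return its text description."""
    messages = [
        {
            "role": "user",
            "content": [
                {"video": {"format": "mp4", "source": {"bytes": video_bytes}}},
                {"text": prompt},
            ],
        }
    ]
    for attempt in range(1, max_attempts + 1):
        try:
            response = bedrock.converse(
                modelId=model_id,
                messages=messages,
                inferenceConfig={"maxTokens": 512, "temperature": 0.2},
            )
        except bedrock.exceptions.ThrottlingException:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            # Exponential backoff with jitter before the next attempt.
            delay = base_delay * 2 ** (attempt - 1)
            time.sleep(delay + random.uniform(0, delay))
        else:
            return response["output"]["message"]["content"][0]["text"]
    raise ValueError("max_attempts must be at least 1")
```

Here bedrock is expected to be a Boto3 client for the bedrock-runtime service, and model_id can be either a model ID or an inference profile ID.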

- Orchestrate the process as follows:

- Initiate the detection of the various segments by using Amazon Rekognition.

- Each segment is processed through a flow of:

- Extraction.

- Analysis using Amazon Nova Pro.

- Compiling the analysis into a video_analysis.txt file.

- The analyze_video function brings together all the components and produces a text file that contains the complete, scene-by-scene analysis of the video contents, with timestamps.

If you refer back to the previous screenshot, the output—without any additional refinement—will look similar to the following image.

The following screenshot is a more extensive look at the video_analysis.txt file for the coffee.mp4 video:
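The orchestration described in this step could be sketched as follows. The sketch assumes moviepy 2.x (version 1.x imports from moviepy.editor and uses subclip instead of subclipped), and the output line format with bracketed timestamps is an assumption rather than the exact format shown in the screenshots. The describe argument is a hypothetical callable that takes the bytes of one segment and returns its description, for example a wrapper around your analyze_chunk function.

```python
import os
import tempfile


def format_timestamp(seconds):
    """Render a position in seconds as MM:SS."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def extract_segment(video_path, start, end, out_path):
    """Write the [start, end] portion of the video to out_path."""
    from moviepy import VideoFileClip  # pip install moviepy (2.x assumed)

    with VideoFileClip(video_path) as clip:
        clip.subclipped(start, end).write_videofile(
            out_path, codec="libx264", audio_codec="aac", logger=None
        )
    return out_path


def analyze_video(video_path, segments, describe,
                  extract=extract_segment, output_path="video_analysis.txt"):
    """Describe every segment and compile the results into one text file."""
    lines = []
    # Segment files live in a temporary directory that is removed afterward.
    with tempfile.TemporaryDirectory() as tmp_dir:
        for index, (start, end) in enumerate(segments, start=1):
            chunk_path = os.path.join(tmp_dir, f"segment_{index:04d}.mp4")
            extract(video_path, start, end, chunk_path)
            with open(chunk_path, "rb") as chunk:
                description = describe(chunk.read())
            stamp = f"[{format_timestamp(start)} - {format_timestamp(end)}]"
            lines.append(f"{stamp} {description}")

    with open(output_path, "w", encoding="utf-8") as output:
        output.write("\n".join(lines) + "\n")
    return output_path
```

Passing the extraction and description steps in as callables keeps the loop easy to test without calling AWS, and lets you swap moviepy for another splitting tool if you prefer.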

- Send the contents of the text file to Amazon Polly. Amazon Polly adds a voice to the text file, completing the workflow of the audio description solution.

For a list of different voices that you can use in Amazon Polly, see Available voices in the Amazon Polly Developer Guide.
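The narration step might be sketched as follows. Because the synchronous synthesize_speech operation limits how much text a single request can carry, the sketch splits the text into smaller pieces and appends each MP3 response to one file. The 2,500-character chunk size, the Joanna voice, the neural engine, and the helper names are assumptions for illustration. Simple concatenation of MP3 responses generally plays back correctly, but verify the result with your own player.

```python
def split_text(text, max_chars=2500):
    """Group non-empty lines into chunks of at most max_chars characters."""
    chunks, current = [], ""
    for line in (raw.strip() for raw in text.splitlines()):
        if not line:
            continue
        # A single overlong line is sliced so no piece exceeds max_chars.
        pieces = [line[i:i + max_chars] for i in range(0, len(line), max_chars)]
        for piece in pieces:
            if current and len(current) + 1 + len(piece) > max_chars:
                chunks.append(current)
                current = piece
            else:
                current = f"{current} {piece}" if current else piece
    if current:
        chunks.append(current)
    return chunks


def synthesize_description(polly, text, output_path="video_analysis.mp3",
                           voice_id="Joanna", engine="neural", max_chars=2500):
    """Convert the description text to speech and write one MP3 file."""
    with open(output_path, "wb") as audio:
        for chunk in split_text(text, max_chars):
            response = polly.synthesize_speech(
                Text=chunk,
                OutputFormat="mp3",
                VoiceId=voice_id,
                Engine=engine,
            )
            audio.write(response["AudioStream"].read())
    return output_path
```

Here polly is expected to be a Boto3 Amazon Polly client, and text is the contents of video_analysis.txt. For very long descriptions, an asynchronous synthesis task that writes directly to Amazon S3 is an alternative worth considering.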

Your final output with Polly should sound something like this:

Clean up

It’s a best practice to delete the resources you provisioned for this solution. If you used an EC2 instance or a SageMaker notebook instance, stop or terminate it. Remember to delete unused files from your S3 bucket (for example, video_analysis.txt and video_analysis.mp3).
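Deleting the generated files can also be scripted. The following is a minimal sketch that assumes a Boto3 Amazon S3 client is passed in. The delete_outputs helper and the bucket name in the usage note are hypothetical.

```python
def delete_outputs(s3, bucket, keys):
    """Delete the given objects and return the keys that could not be deleted."""
    response = s3.delete_objects(
        Bucket=bucket,
        Delete={"Objects": [{"Key": key} for key in keys], "Quiet": True},
    )
    # In quiet mode, the response lists only the objects that failed.
    return [error["Key"] for error in response.get("Errors", [])]
```

For example, delete_outputs(s3, "amzn-s3-demo-bucket", ["video_analysis.txt", "video_analysis.mp3"]) returns an empty list when both objects were removed.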

Conclusion

Recapping the solution at a high level, in this post, you used:

- Amazon S3 to store the original video, intermediate data, and the final audio description artifacts

- Amazon Rekognition to partition the video file into time-stamped scenes

- Computer vision capabilities from Amazon Nova Pro (available through Amazon Bedrock) to analyze the contents of each scene

We showed you how to use Amazon Polly to create an MP3 audio file from the final scene description text file, which is what will be consumed by the audience members. The solution outlined in this post demonstrates how to fully automate the process of creating audio descriptions for video content to improve accessibility. By using Amazon Rekognition for video segmentation, the Amazon Nova Pro model for scene analysis, and Amazon Polly for text-to-speech, you can generate a comprehensive audio description track that narrates the key visual elements of a video. This end-to-end automation can significantly reduce the time and cost required to make video content accessible for visually impaired audiences, helping businesses and organizations meet their accessibility goals. With the power of AWS AI services, this solution provides a scalable and efficient way to improve accessibility and inclusion for video-based media.

This solution isn’t limited to using it for TV shows and movies. Any visual media that requires accessibility can be a candidate! For more information about the new Amazon Nova model family and the amazing things these models can do, see Introducing Amazon Nova foundation models: Frontier intelligence and industry leading price performance.

In addition to the steps described in this post, additional actions you might need to take include:

- Removing a video segment analysis’s introductory text from Amazon Nova. When Amazon Nova returns a response, it might begin with something like “In this video…” or something similar. You probably want just the video description itself without this introductory text. If there is introductory text in your scene descriptions, then Amazon Polly will speak it aloud, which reduces the quality of your audio descriptions. You can account for this in a few ways.

- For example, prior to sending it to Amazon Polly, you can modify the generated scene descriptions by programmatically removing that type of text from them.

- Alternatively, you can use prompt engineering to request that Amazon Bedrock return only the scene descriptions in a structured format or without any additional commentary.

- The third option is to define and use a tool when performing inference on Amazon Bedrock. This can be a more comprehensive technique for defining the format of the output that you want Amazon Bedrock to return. Using tools to shape model output is known as function calling. For more information, see Use a tool to complete an Amazon Bedrock model response.

- You should also be mindful of the architectural components of the solution. In a production environment, being mindful of any potential scaling, security, and storage elements is important because the architecture might begin to resemble something more complex than the basic solution architecture diagram that this post began with.
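For the first option above, removing introductory text programmatically, a regular expression is often enough. The following sketch is one way to do it. The list of phrases it recognizes is an assumption and will likely need extending after you review real model output.

```python
import re

# Matches openers such as "In this video, we see " or "The video shows ".
_INTRO = re.compile(
    r"^\s*(?:"
    r"in\s+(?:this|the)\s+(?:video|clip|scene|footage)\s*[,:]?\s*"
    r"(?:we\s+(?:can\s+)?see\s+)?"
    r"|"
    r"(?:this|the)\s+(?:video|clip|scene)\s+"
    r"(?:shows|depicts|features|begins\s+with|opens\s+with)\s+"
    r")",
    re.IGNORECASE,
)


def strip_intro(description):
    """Remove a leading introductory phrase and re-capitalize the result."""
    stripped = _INTRO.sub("", description, count=1).strip()
    return stripped[:1].upper() + stripped[1:]


print(strip_intro("In this video, a barista pours milk."))  # A barista pours milk.
print(strip_intro("The video shows a cup of coffee."))      # A cup of coffee.
```

Descriptions that don't start with one of the recognized phrases pass through unchanged, so the function is safe to apply to every scene before sending the text to Amazon Polly.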

About the Authors

Dylan Martin is an AWS Solutions Architect, working primarily in the generative AI space helping AWS Technical Field teams build AI/ML workloads on AWS. He brings his experience as both a security solutions architect and software engineer. Outside of work he enjoys motorcycling, the French Riviera and studying languages.

Ankit Patel is an AWS Solutions Developer, part of the Prototyping And Customer Engineering (PACE) team. Ankit helps customers bring their innovative ideas to life by rapid prototyping; using the AWS platform to build, orchestrate, and manage custom applications.
