Large reasoning models, typically built on large language models, are increasingly used to solve hard problems in mathematics, scientific analysis, and code generation. The central idea is to emulate two modes of cognition: quick responses for simple reasoning and slower, deliberate thought for complex problems. This dual-mode thinking mirrors how humans move from intuitive reactions to analytical thinking as a task gets harder, a principle that has shaped work in cognitive modeling and AI reasoning frameworks.

One persistent issue is that these models cannot regulate the shift between fast and slow thinking on their own. Rather than adapting to task demands, they tend to fall back on fixed patterns, which leads either to premature conclusions or to excessive processing. The inefficiency is most visible on tasks that require a careful balance of deliberation and speed. Failing to manage this transition has limited the reasoning accuracy of these models, causing errors or unnecessary computation, particularly in demanding settings such as competition math problems or real-time code analysis.

To tackle this, earlier work introduced test-time scaling approaches. Parallel scaling strategies sample multiple outputs from a model and then select the best one using criteria such as self-consistency or perplexity. Sequential scaling, in contrast, changes how the model reasons over time by either restricting or encouraging long chains of thought. One example is the Chain of Draft method, which limits each reasoning step to a strict word count to reduce overthinking. Another approach, S1, extends slow reasoning near the end of the thinking phase by appending “wait” tokens. However, these methods do not coordinate how long the model reasons with when it switches from slow to fast thinking, so none of them offers a general way to adapt the reasoning process.
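Parallel scaling boils down to sampling several candidates and picking one. The minimal Python sketch below shows the two selection rules named above, assuming each candidate already comes with a parsed final answer and per-token log-probabilities; these inputs and the helper names are hypothetical, not part of any specific library or of the ALPHAONE code.

```python
import math
from collections import Counter


def self_consistency(answers: list[str]) -> str:
    """Majority vote over the final answers of independently sampled outputs."""
    # most_common(1) returns [(answer, count)]; ties go to the first answer seen
    return Counter(answers).most_common(1)[0][0]


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity of one sequence: exp of the negative mean token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def select_by_perplexity(candidates: list[tuple[str, list[float]]]) -> str:
    """Return the candidate text whose token log-probabilities give the lowest perplexity."""
    best_text, _ = min(candidates, key=lambda c: perplexity(c[1]))
    return best_text


samples = ["42", "41", "42", "42", "17"]
print(self_consistency(samples))

cands = [("answer A", [-0.1, -0.2, -0.3]), ("answer B", [-1.0, -1.5, -0.9])]
print(select_by_perplexity(cands))
```

Both rules only act after generation finishes, which is the key contrast with sequential methods such as Chain of Draft and S1 that change the reasoning trace itself.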

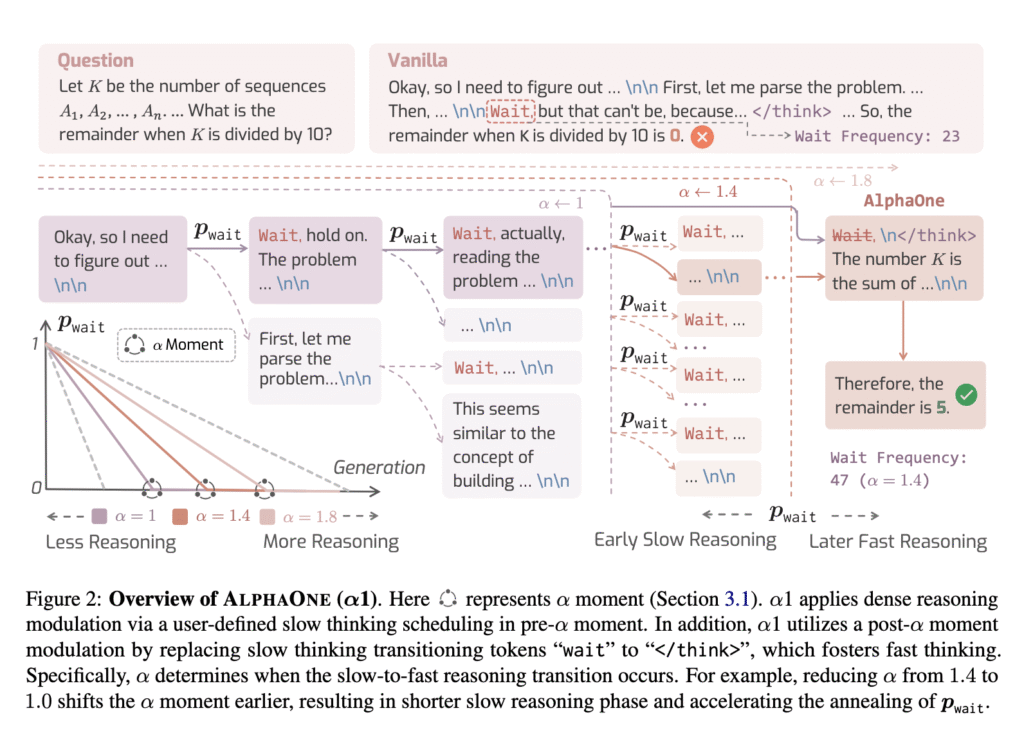

Researchers from the University of Illinois Urbana-Champaign and UC Berkeley have introduced ALPHAONE, a framework that modulates reasoning dynamics at test time. ALPHAONE introduces the “alpha moment,” controlled by a universal parameter α, which marks when the model transitions from slow to fast reasoning. By adjusting both the duration and the structure of thought, the framework unifies and extends prior methods with a more adaptable strategy for complex reasoning tasks.
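One way to make the alpha moment concrete is to treat it as a position in the generated thinking tokens. The formulation below is an assumption for illustration, not stated in this article: it places the alpha moment at α times the model's average thinking length $\bar{N}_{\text{think}}$, measured in tokens, and splits generation at that point.

$$
t_\alpha = \alpha \cdot \bar{N}_{\text{think}}, \qquad
\text{phase}(t) =
\begin{cases}
\text{pre-alpha (slow thinking)}, & t < t_\alpha \\
\text{post-alpha (fast thinking)}, & t \ge t_\alpha
\end{cases}
$$

Under this reading, $\alpha > 1$ gives the model a longer slow-thinking budget than it would use by default and $\alpha < 1$ a shorter one, which is how a single parameter could cover both the "extend" and "restrict" behaviors of earlier methods.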

The mechanism has two core phases. In the pre-alpha phase, ALPHAONE encourages slow reasoning by inserting the token “wait” after structural breaks such as “\n\n”, with each insertion decided by a Bernoulli trial. The insertion probability is not fixed: it follows a user-defined scheduling function that changes over time, for example a linear annealing schedule that gradually tapers off slow thinking. Once generation reaches the alpha moment, the post-alpha phase begins, and any “wait” token is replaced with the explicit end-of-thinking token “</think>”. This forces a decisive shift to fast thinking, counteracting the inertia of prolonged slow reasoning and letting the model generate its answer efficiently.
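The two phases can be simulated with a minimal Python sketch. Everything here is an illustrative assumption rather than the authors' implementation: `next_token` is a hypothetical stand-in for one decoding step of the model, the alpha moment is assumed to sit at `alpha * avg_think_len` generated tokens, and the linear schedule starts from a made-up probability `p0`.

```python
import random
from typing import Callable

WAIT = "wait"           # slow-thinking cue inserted during the pre-alpha phase
END_THINK = "</think>"  # end-of-thinking token that forces the switch to fast thinking
BREAK = "\n\n"          # structural break after which "wait" may be inserted


def linear_anneal(t: int, t_alpha: int, p0: float) -> float:
    """Hypothetical linear schedule: insertion probability falls from p0 toward 0 at t_alpha."""
    return max(0.0, p0 * (1.0 - t / t_alpha))


def think_with_alpha_schedule(
    next_token: Callable[[list[str]], str],
    alpha: float,
    avg_think_len: int,
    p0: float = 0.5,
    max_tokens: int = 10_000,
    seed: int = 0,
) -> list[str]:
    """Generate a thinking segment under an ALPHAONE-style slow-to-fast schedule.

    Assumption: the alpha moment sits at alpha * avg_think_len generated tokens.
    """
    rng = random.Random(seed)
    t_alpha = max(1, round(alpha * avg_think_len))
    seq: list[str] = []
    while len(seq) < max_tokens:
        t = len(seq)
        tok = next_token(seq)
        if t >= t_alpha and tok == WAIT:
            tok = END_THINK  # post-alpha: replace "wait" to end slow thinking decisively
        seq.append(tok)
        if tok == END_THINK:
            break  # the answer (fast thinking) would be generated after this point
        if t < t_alpha and tok == BREAK and rng.random() < linear_anneal(t, t_alpha, p0):
            seq.append(WAIT)  # pre-alpha: Bernoulli trial with the annealed probability
    return seq


def toy_model(prefix: list[str]) -> str:
    """Hypothetical stand-in for an LLM: alternates steps and breaks, emits "wait" every 5th token."""
    n = len(prefix)
    if n % 5 == 4:
        return WAIT
    return BREAK if n % 2 else "step"


print(think_with_alpha_schedule(toy_model, alpha=1.4, avg_think_len=20))
```

The schedule touches decoding at only two points: right after a paragraph break (pre-alpha) and whenever the model tries to resume slow thinking (post-alpha). Trying a different schedule would only mean swapping out `linear_anneal`.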

ALPHAONE outperformed the baselines on six benchmarks spanning mathematics, science, and code generation. For example, with the DeepSeek-R1-Distill-Qwen-1.5B model, ALPHAONE raised accuracy on AMC23 from 57.5% to 70.0% while reducing the average response length from 5,339 to 4,952 tokens. Larger models showed similar gains: with the 7B model, accuracy on OlympiadBench rose from 50.4% to 55.7%, and with the 32B Qwen QwQ model, accuracy on AIME24 jumped from 40.0% to 53.3%. Averaged across all models and tasks, ALPHAONE improved accuracy by +6.15% while using fewer tokens than the base models and other baselines such as S1 and Chain of Draft.
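The reported figures are easier to compare as absolute gains. This short Python check uses only the numbers quoted above to convert them into percentage-point deltas and a relative token saving; the helper names are just for illustration.

```python
# Figures quoted in the article: (benchmark, model, baseline accuracy %, ALPHAONE accuracy %)
RESULTS = [
    ("AMC23", "DeepSeek-R1-Distill-Qwen-1.5B", 57.5, 70.0),
    ("OlympiadBench", "7B model", 50.4, 55.7),
    ("AIME24", "Qwen QwQ 32B", 40.0, 53.3),
]


def gain_points(base: float, new: float) -> float:
    """Absolute accuracy gain in percentage points, rounded to one decimal."""
    return round(new - base, 1)


def relative_change(base: float, new: float) -> float:
    """Relative change in percent; negative values mean a reduction."""
    return (new - base) / base * 100


for bench, model, base, new in RESULTS:
    print(f"{bench:<14} {model:<30} +{gain_points(base, new):.1f} points")

# Average response length on AMC23 with the 1.5B model
tokens_before, tokens_after = 5339, 4952
saved = tokens_before - tokens_after
print(f"AMC23 tokens saved: {saved} ({-relative_change(tokens_before, tokens_after):.1f}% fewer)")
```

On these numbers, the AMC23 result is a 12.5-point gain obtained with roughly 7% fewer tokens, and the AIME24 result with the 32B model is a 13.3-point gain.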

These results indicate that managing the flow between slow and fast reasoning is crucial for better performance on complex problems. By enabling structured modulation through a universal framework, ALPHAONE addresses the inefficiencies of earlier methods and offers a scalable, efficient path forward for reasoning models. The approach shows how deliberate scheduling of cognition-like behaviors in AI can yield practical, measurable gains in both performance and resource efficiency.

The post ALPHAONE: A Universal Test-Time Framework for Modulating Reasoning in AI Models appeared first on MarkTechPost.