Large Language Models (LLMs) generate step-by-step responses known as chains of thought (CoTs), in which each token contributes to a coherent, logical narrative. To improve the quality of this reasoning, various reinforcement learning techniques have been employed. These methods let the model learn from feedback by aligning generated outputs with correctness criteria. As LLMs grow in complexity and capacity, researchers have begun probing the internal structure of token generation to discern patterns that enhance or limit performance. One area gaining attention is the token entropy distribution, a measure of uncertainty in token prediction, which is now being linked to the model’s ability to make meaningful logical decisions during reasoning.

A core issue in training reasoning models using reinforcement learning is treating all output tokens equally. When models are optimized using reinforcement learning with verifiable rewards (RLVR), the update process traditionally includes every token in the generated sequence, regardless of its functional role. This uniform treatment fails to distinguish tokens that lead to significant reasoning shifts from those that merely extend existing linguistic structures. As a result, a large portion of training resources may be directed at tokens that offer minimal contribution to the model’s reasoning capabilities. Without prioritizing the few tokens that play decisive roles in navigating different logic paths, these methods miss opportunities for focused and effective optimization.

Most RLVR algorithms, including Proximal Policy Optimization (PPO), Group Relative Policy Optimization (GRPO), and Decoupled Clip and Dynamic sAmpling Policy Optimization (DAPO), evaluate entire sequences of output tokens against reward functions that assess correctness. PPO stabilizes policy updates through a clipped objective function. GRPO builds on this by estimating advantage values from a group of sampled responses rather than from a separate value network. DAPO adds further enhancements, such as the clip-higher mechanism and overlong reward shaping. None of these methods, however, factors in token-level entropy or distinguishes the importance of individual tokens in the reasoning chain; each applies gradient updates uniformly across all tokens.
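To make the group-based advantage and the clipped objective concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the reference implementation of any of these algorithms: the function names, the use of the population standard deviation, and the epsilon values are all choices made for this example. Setting `eps_high` above `eps_low` mimics the idea behind clip-higher.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """Score each response against the other responses sampled for the same prompt.

    Assumption for this sketch: rewards are normalized by the group's mean and
    population standard deviation, so no separate value network is needed.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_term(ratio, advantage, eps_low=0.2, eps_high=0.2):
    """PPO-style clipped surrogate for one token.

    ratio is new_policy_prob / old_policy_prob for that token. The epsilon
    defaults are illustrative, not values taken from the article.
    """
    clipped_ratio = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped_ratio * advantage)


# Four sampled answers to one prompt, with a verifiable 0/1 correctness reward.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# A token whose probability rose by 50% has its contribution capped by the clip.
capped = clipped_term(ratio=1.5, advantage=1.0)
```

Note that in this formulation every token of a response shares that response's advantage, which is exactly the uniform treatment the entropy-based approach sets out to refine.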

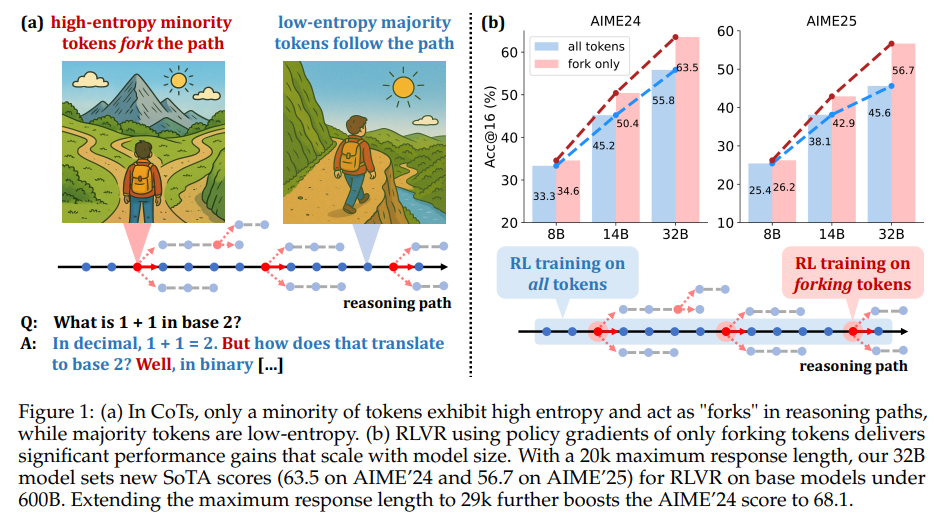

In an attempt to refine how RLVR training impacts LLM reasoning, researchers from Alibaba Inc. and Tsinghua University presented a new methodology focused on token entropy patterns. They observed that in the CoT sequences generated by Qwen3 models, a small subset of tokens, roughly 20%, displays significantly higher entropy. These tokens, labeled “forking tokens,” often correspond to moments where the model must decide between multiple reasoning paths. The remaining 80% of tokens typically exhibit low entropy and act as extensions of prior statements. By limiting policy gradient updates solely to these high-entropy tokens, the research team was able not only to maintain but, in many cases, to improve performance on challenging reasoning benchmarks.
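The selection step can be sketched as a simple mask over per-token entropies. This is a hedged illustration rather than the authors' code: `forking_token_mask`, `masked_mean`, and `keep_fraction` are hypothetical names, and the cutoff here is computed over whatever list of entropies is passed in (how tokens are pooled before thresholding is an assumption of this sketch).

```python
import math


def forking_token_mask(entropies, keep_fraction=0.2):
    """Return a 0/1 mask that keeps the highest-entropy tokens.

    The cutoff is the entropy of the k-th highest token, where
    k = ceil(keep_fraction * n). Ties at the cutoff are all kept, so slightly
    more than k tokens can pass.
    """
    if not entropies:
        return []
    k = max(1, math.ceil(keep_fraction * len(entropies)))
    threshold = sorted(entropies, reverse=True)[k - 1]
    return [1 if h >= threshold else 0 for h in entropies]


def masked_mean(per_token_loss, mask):
    """Average the loss over kept tokens only; masked tokens contribute nothing."""
    kept = sum(mask)
    return sum(l * m for l, m in zip(per_token_loss, mask)) / max(kept, 1)


# Ten tokens: two clear decision points among near-deterministic continuations.
entropies = [0.01, 0.00, 1.20, 0.02, 0.00, 0.03, 0.01, 0.90, 0.00, 0.02]
mask = forking_token_mask(entropies)

# Only the two high-entropy positions contribute to the averaged loss.
loss = masked_mean([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], mask)
```

With `keep_fraction=1.0` the mask keeps every token, which recovers ordinary full-token training, so the same code path can serve as the baseline in a comparison.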

To quantify token entropy, the researchers used the entropy formula based on the probability distribution over possible token choices at each step. They found that over half of all generated tokens had entropy values below 0.01, indicating near-deterministic behavior. Only 20% exceeded an entropy of 0.672, marking them as the decision-making hubs within CoTs. High-entropy tokens often include logical operators and connective words such as “assume,” “since,” or “thus,” which introduce new conditions or transitions in logic. In contrast, low-entropy tokens included predictable symbols, suffixes, or code fragments. Through controlled experiments, it became clear that manipulating the entropy of these forking tokens directly influenced the model’s reasoning performance, while altering low-entropy tokens had little effect.
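For reference, the entropy at one generation step is the Shannon entropy of the next-token distribution: the negative sum, over the vocabulary, of each token's probability times the log of that probability. A minimal sketch of the computation from raw logits follows. The function name `token_entropy` is hypothetical, and the natural logarithm (entropy in nats) is an assumption, since the article does not state the units behind the 0.01 and 0.672 figures.

```python
import math


def token_entropy(logits):
    """Shannon entropy (in nats) of the softmax distribution over logits."""
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Terms with p == 0 contribute nothing and are skipped to avoid log(0).
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# A near-deterministic step: one candidate dominates, so entropy is close to zero.
low = token_entropy([20.0, 0.0, 0.0, 0.0])

# A genuine fork: four equally likely candidates give the maximum, ln(4).
high = token_entropy([0.0, 0.0, 0.0, 0.0])
```

The toy vocabulary of four tokens is only for illustration; in practice the same calculation runs over the model's full vocabulary at every position of the generated sequence.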

The research team conducted extensive experiments across three model sizes: Qwen3-8B, Qwen3-14B, and Qwen3-32B. When trained only on the top 20% of tokens by entropy, the Qwen3-32B model scored 63.5 on AIME’24 and 56.7 on AIME’25, both reported as new highs for models under 600B parameters. Increasing the maximum response length from 20k to 29k tokens raised the AIME’24 score further, to 68.1. By contrast, training on the bottom 80% of low-entropy tokens caused performance to drop significantly. The Qwen3-14B model gained +4.79 on AIME’25 and +5.21 on AIME’24, while Qwen3-8B remained competitive with full-token training. An ablation study supported the 20% threshold: decreasing the fraction to 10% omitted essential decision points, while increasing it to 50% or 100% diluted the effect by including too many low-entropy tokens, reducing entropy diversity and hindering exploration.

In essence, the research provides a new direction for enhancing the reasoning abilities of language models by identifying and selectively training on the minority of tokens that disproportionately contribute to reasoning success. It avoids inefficient training and instead proposes a scalable approach that aligns reinforcement learning objectives with actual decision-making moments in token sequences. The success of this strategy lies in using entropy as a guide to distinguish useful tokens from filler.

Key takeaways from the research include:

- Around 20% of tokens exhibit high entropy and serve as forking points that direct reasoning paths.

- Training only on these high-entropy tokens delivers performance equal to or better than training on the full token set.

- Qwen3-32B achieved scores of 63.5 on AIME’24 and 56.7 on AIME’25, outperforming larger models trained traditionally.

- Extending response length from 20k to 29k further pushed the AIME’24 score to 68.1.

- Training on the remaining 80% of low-entropy tokens led to sharp performance degradation.

- Retaining the 20% threshold for high-entropy tokens optimally balances exploration and performance.

- Larger models gain more from this strategy, which is attributed to their greater capacity to benefit from enhanced exploration.

- The strategy scales well and could guide more efficient training of next-generation reasoning models.

In conclusion, this research effectively rethinks the application of reinforcement learning to language models by introducing a focus on token-level entropy. By optimizing only the minority that influences reasoning paths, the method enhances performance while reducing computational overhead. It provides a practical roadmap for future efforts to improve reasoning in LLMs without unnecessary complexity.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post High-Entropy Token Selection in Reinforcement Learning with Verifiable Rewards (RLVR) Improves Accuracy and Reduces Training Cost for LLMs appeared first on MarkTechPost.
