Recent advances in reasoning-focused language models mark a major shift in AI, driven by scaling test-time computation. Reinforcement learning (RL) is crucial for developing reasoning capabilities and for mitigating reward-hacking pitfalls. However, a fundamental debate remains: does RL give a base model genuinely new reasoning capabilities, or does it merely improve the sampling efficiency of solutions the model can already produce? Current research faces two critical limitations: (a) a heavy dependence on specialized domains such as mathematics, where models are often overtrained, which restricts their exploration potential, and (b) premature termination of RL training, typically after only hundreds of steps, before models can fully develop new reasoning capabilities.

Reasoning models are specialized AI systems that work through long, detailed chain-of-thought (CoT) processes before generating final answers. DeepSeek and Kimi have published detailed methodologies for training reasoning models using reinforcement learning with verifiable rewards (RLVR), popularizing algorithms such as GRPO, Mirror Descent, and RLOO. Earlier systems such as AlphaGo and AlphaZero demonstrated that AI agents can keep improving their performance through RL, developing novel techniques not present in their base models. However, some existing works question whether RL training truly improves reasoning capacity in LLMs, arguing that RLVR fails to extend it, as evidenced by pass@k metrics that show no improvement over base models.
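Because the debate above hinges on pass@k, it helps to see how the metric is usually computed. The sketch below uses the widely used unbiased estimator, 1 - C(n-c, k) / C(n, k), where n samples are drawn per problem and c of them are correct. The function name `pass_at_k` and the example numbers are illustrative assumptions, not taken from the ProRL paper, and the paper's exact evaluation code may differ.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: total samples drawn for the problem
    c: number of those samples that are correct
    k: sample budget being evaluated (k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples are incorrect,
    # so the estimate correctly becomes 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical example: 10 samples, 5 correct.
print(pass_at_k(10, 5, 1))  # 0.5
```

A model that only sharpens sampling efficiency would raise pass@1 while leaving pass@k at large k roughly unchanged; a model that gains new capabilities should also raise pass@k on problems where the base model scores near zero.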

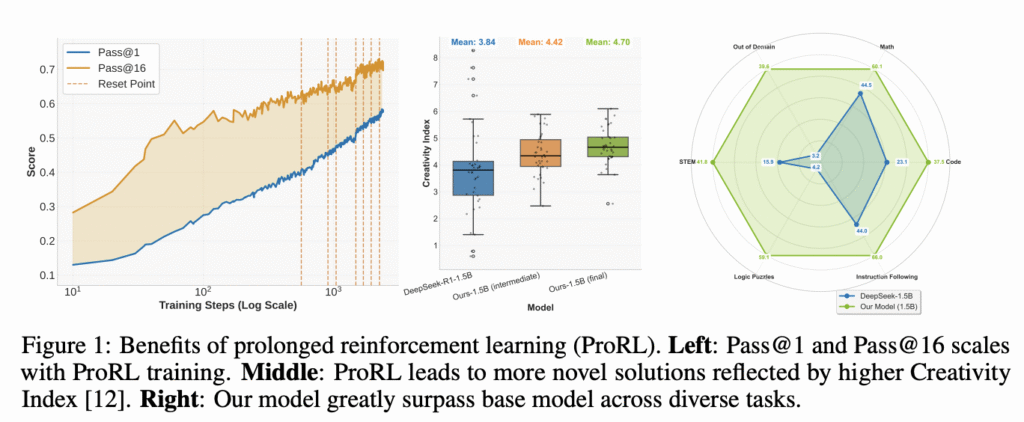

Researchers from NVIDIA have proposed ProRL, a method designed to enable extended RL training periods and, with them, deeper exploration of reasoning strategies. ProRL supports over 2,000 training steps and scales training data across diverse tasks, including math, coding, science problems, logic puzzles, and instruction following. Using ProRL, the researchers developed Nemotron-Research-Reasoning-Qwen-1.5B, which they describe as the world’s best 1.5B reasoning model. It outperforms its base model, DeepSeek-R1-1.5B, and also outperforms the larger DeepSeek-R1-7B across diverse benchmarks. The results indicate that RL can discover genuinely new solution pathways not present in base models when given sufficient training time and applied to novel reasoning tasks, suggesting a real expansion of reasoning capabilities beyond the initial training.

The researchers built a diverse, verifiable training dataset of 136,000 examples spanning five task domains: mathematics, code, STEM, logic puzzles, and instruction following. Training uses the verl framework for the RL implementation and adopts the enhancements to GRPO proposed by DAPO. The model is evaluated on a wide range of benchmarks across multiple domains. Mathematics evaluation includes AIME2024, AIME2025, AMC, MATH, Minerva Math, and OlympiadBench. Coding assessment uses the PRIME validation set, HumanEvalPlus, and LiveCodeBench. Logic puzzle evaluation reserves 100 samples from Reasoning Gym tasks, while STEM reasoning and instruction-following capabilities are evaluated on curated subsets of GPQA Diamond and IFEval, respectively.
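To make the GRPO reference concrete, here is a minimal sketch of the group-relative advantage at the core of the method: several responses are sampled for the same prompt, each receives a verifiable reward, and each reward is normalized against the group's mean and standard deviation. The function names, the use of population standard deviation, and the `eps` value are assumptions for illustration; this is not the verl implementation or the authors' exact recipe.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against its own sampling group.

    rewards: rewards for several responses to the SAME prompt
    eps: small constant (assumed value) to avoid division by zero
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


def has_learning_signal(rewards):
    """True if the group contains at least two distinct rewards.

    When every response gets the same reward (all right or all wrong),
    every advantage is zero and the prompt contributes no gradient.
    """
    return len(set(rewards)) > 1


# Hypothetical group of four responses with binary verifiable rewards.
group = [1.0, 0.0, 0.0, 1.0]
if has_learning_signal(group):
    print(group_relative_advantages(group))
```

The zero-signal case is one motivation for DAPO-style dynamic sampling, which skips or resamples prompts whose responses are all correct or all incorrect.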

In mathematics, Nemotron-Research-Reasoning-Qwen-1.5B achieves an average improvement of 15.7% across benchmarks, while competitive programming tasks show a 14.4% improvement in pass@1 accuracy. In the STEM reasoning and instruction-following domains, the model gains 25.9% on GPQA Diamond and 22.0% on IFEval. On Reasoning Gym logic puzzles, reward improves by 54.8%, reflecting high accuracy on these tasks. Out-of-distribution evaluation reveals significant improvements on three unseen Reasoning Gym tasks, highlighting effective generalization beyond the training distribution. Compared with the domain-specialized models DeepScaleR-1.5B and DeepCoder-1.5B, the ProRL-trained model achieves higher pass@1 scores on both math (+4.6%) and code (+6.5%) benchmarks.

In this paper, the researchers introduced ProRL and provided evidence that extended, stable RL training develops novel reasoning patterns beyond a base model’s initial capabilities. The resulting model, Nemotron-Research-Reasoning-Qwen-1.5B, solves tasks on which the base model initially struggles, showing that extended RL training helps models internalize abstract reasoning patterns that transfer beyond the training distribution. These results challenge previous assumptions about the limits of RL and indicate that sufficient training time, combined with proper techniques, can expand reasoning boundaries, paving the way for more capable reasoning models.

The post NVIDIA Introduces ProRL: Long-Horizon Reinforcement Learning Boosts Reasoning and Generalization appeared first on MarkTechPost.
