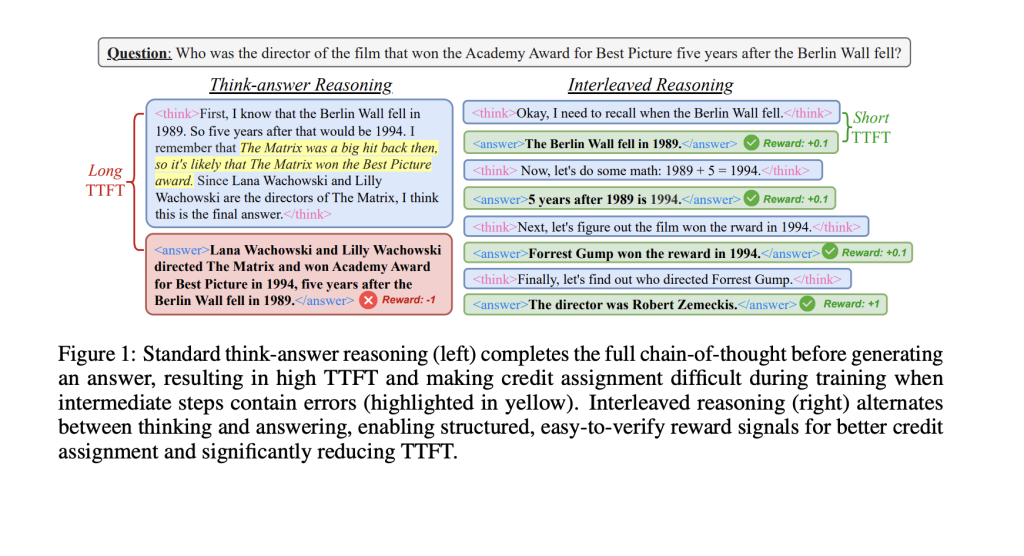

Long chain-of-thought (CoT) reasoning improves large language models’ performance on complex tasks but comes with drawbacks. The typical “think-then-answer” method slows down response times, disrupting real-time interactions like those in chatbots. It also risks inaccuracies, as errors in earlier reasoning steps can propagate into a misleading final answer. Unlike humans, who often share partial thoughts or conclusions during conversations, LLMs delay responses until all reasoning is complete. While reinforcement learning (RL) is commonly used to train reasoning models, it mainly rewards final answers, overlooking useful intermediate insights. There is growing interest in training models to alternate between thinking and answering, but this remains a challenge.

RL has become a popular method to enhance reasoning in LLMs, building on its success in aligning models with human preferences. Two common reward types guide RL: outcome reward models (ORMs), which score only the final answer, and process reward models (PRMs), which provide feedback on intermediate reasoning steps. While PRMs offer more detailed supervision, they often rely on human annotation and additional models, making them complex and prone to issues like reward hacking. Separately, efforts to improve LLM reasoning have explored prompting strategies, structured reasoning, tool integration, and methods to reduce latency and improve efficiency.

Researchers from Apple and Duke University introduce Interleaved Reasoning, a new RL approach that enables language models to alternate between thinking and answering when solving complex, multi-step questions. Instead of waiting until the end to respond, models provide informative intermediate answers, which give users earlier feedback and also guide the model's own subsequent reasoning. Using a straightforward rule-based reward, the model is trained to produce helpful reasoning steps, leading to over 80% faster responses and up to 19.3% better accuracy. Trained only on QA and logic datasets, the method demonstrates strong generalization to more challenging benchmarks, such as MATH, GPQA, and MMLU.

The study proposes a reinforcement learning framework to train LLMs for Interleaved Reasoning, where models alternate between internal thinking and user-facing intermediate answers. Each intermediate step, or “sub-answer,” is shared once the model reaches a meaningful milestone in reasoning. A specialized training template with `<think>` and `<answer>` tags is used. The approach relies on three rule-based rewards (format, final accuracy, and conditional intermediate accuracy) to guide learning. Notably, intermediate rewards are applied only when specific criteria are met, ensuring the model prioritizes overall correctness. The authors also test different intermediate reward schemes, such as all-or-none, partial credit, and time-discounted rewards, to optimize the quality of reasoning.
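To make the reward design concrete, here is a minimal sketch of how format, final-accuracy, and conditional intermediate rewards could be combined. It is an illustration under stated assumptions, not the authors' implementation: the function names, the exact-match comparison, the unit reward values, the discount factor `gamma`, and the specific condition used (valid format plus a correct final answer) are all hypothetical choices made for this example.

```python
import re

# Assumed template: the output is a sequence of <think>...</think><answer>...</answer> pairs.
PAIR = re.compile(r"<think>(.*?)</think>\s*<answer>(.*?)</answer>", re.DOTALL)


def parse_steps(output):
    """Return a list of (thought, answer) pairs, or None if the format is invalid."""
    pairs = PAIR.findall(output)
    # The format counts as valid only if the pairs account for the whole output.
    leftover = PAIR.sub("", output).strip()
    if not pairs or leftover:
        return None
    return [(t.strip(), a.strip()) for t, a in pairs]


def intermediate_reward(correct, scheme="time_discounted", gamma=0.5):
    """Score a list of booleans (one per intermediate answer) in the range [0, 1]."""
    if not correct:
        return 0.0
    if scheme == "all_or_none":
        return 1.0 if all(correct) else 0.0
    if scheme == "partial_credit":
        return sum(correct) / len(correct)
    if scheme == "time_discounted":
        # Assumed weighting: earlier sub-answers weigh more (gamma**0, gamma**1, ...).
        weights = [gamma ** i for i in range(len(correct))]
        return sum(w for w, c in zip(weights, correct) if c) / sum(weights)
    raise ValueError(f"unknown scheme: {scheme}")


def total_reward(output, gold_steps, gold_final, scheme="time_discounted"):
    """Format reward + final-accuracy reward + conditional intermediate reward."""
    steps = parse_steps(output)
    if steps is None:
        return 0.0  # invalid format earns nothing
    reward = 1.0  # format reward
    answers = [a for _, a in steps]
    if answers[-1] == gold_final:
        reward += 1.0  # final-accuracy reward
        # Assumed condition: intermediate credit is granted only when the
        # final answer is correct, so overall correctness stays the priority.
        # zip() ignores any sub-answers beyond the number of gold steps.
        correct = [a == g for a, g in zip(answers[:-1], gold_steps)]
        reward += intermediate_reward(correct, scheme)
    return reward
```

With this sketch, a well-formed output whose intermediate and final answers both match the references earns the full reward, while a correct final answer reached through a wrong sub-answer loses only the intermediate portion.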

The interleaved reasoning approach was evaluated on both in-domain and out-of-domain datasets using Qwen2.5 models (1.5B and 7B). Unlike traditional methods that separate thinking and answering, the interleaved method provides answers incrementally, improving both speed and usefulness. When combined with intermediate rewards, it significantly enhances model performance while reducing response delays by over 80%. Even on domains it never saw during training, the model performs well, showing strong generalization. These results highlight the value of interleaved reasoning in making AI systems more responsive and effective in real-world, multi-step reasoning tasks.
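The responsiveness gain can be illustrated by counting how much text a model generates before the user sees a first answer. The sketch below is a rough proxy only: it assumes the `<answer>` tag marks the first user-visible content and uses whitespace-separated tokens in place of a real tokenizer. The function names are hypothetical, and the article does not specify how the reported delay reduction was measured.

```python
def tokens_before_first_answer(output, open_tag="<answer>"):
    """Count whitespace-separated tokens generated before the first visible answer."""
    idx = output.find(open_tag)
    if idx == -1:
        # No answer tag at all: the user waits for the entire output.
        return len(output.split())
    return len(output[:idx].split())


def latency_reduction(baseline_output, interleaved_output):
    """Fractional drop in tokens-to-first-answer relative to the baseline."""
    base = tokens_before_first_answer(baseline_output)
    inter = tokens_before_first_answer(interleaved_output)
    if base == 0:
        return 0.0
    return 1.0 - inter / base


# Toy outputs: the baseline thinks through every step before answering,
# while the interleaved output answers after the first step.
baseline = "<think> s1 s2 s3 s4 s5 s6 s7 s8 s9 </think> <answer> final </answer>"
interleaved = (
    "<think> s1 </think> <answer> a1 </answer> "
    "<think> s2 s3 </think> <answer> final </answer>"
)
print(f"{latency_reduction(baseline, interleaved):.0%} fewer tokens before the first answer")
```

Because the interleaved output surfaces a sub-answer after its first reasoning step, the wait depends on the length of that first step and not on the length of the whole reasoning chain.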

In conclusion, the study explores how interleaved reasoning, where models alternate between reasoning and generating intermediate answers, can significantly improve performance and responsiveness. Using the Qwen2.5-1.5B model, the authors show that providing timely intermediate feedback during training boosts accuracy and accelerates response generation. Different RL strategies were tested, with PPO showing stable results, and conditional, time-discounted rewards proving to be the most effective. The method scales well to complex tasks and outperforms traditional think-then-answer baselines. Unlike token-level reward models, this approach applies simple rule-based rewards only after a full reasoning step is complete, which helps it avoid reward hacking. Ultimately, interleaved reasoning enhances reasoning quality and efficiency without relying on external tools.


The post Apple and Duke Researchers Present a Reinforcement Learning Approach That Enables LLMs to Provide Intermediate Answers, Enhancing Speed and Accuracy appeared first on MarkTechPost.
