Agentic Retrieval Augmented Generation (RAG) applications represent an advanced approach in AI that integrates foundation models (FMs) with external knowledge retrieval and autonomous agent capabilities. These systems dynamically access and process information, break down complex tasks, use external tools, apply reasoning, and adapt to various contexts. They go beyond simple question answering by performing multi-step processes, making decisions, and generating complex outputs.

In this post, we demonstrate an example of building an agentic RAG application using the LlamaIndex framework. LlamaIndex is a framework that connects FMs with external data sources. It helps ingest, structure, and retrieve information from databases, APIs, PDFs, and more, which enables agent and RAG capabilities in AI applications.

This application serves as a research tool, using the Mistral Large 2 FM on Amazon Bedrock to generate responses for the agent flow. The example application interacts with well-known websites, such as arXiv, GitHub, TechCrunch, and DuckDuckGo, and can access knowledge bases containing documentation and internal knowledge.

This application can be further expanded to accommodate broader use cases requiring dynamic interaction with internal and external APIs, as well as the integration of internal knowledge bases to provide more context-aware responses to user queries.

Solution overview

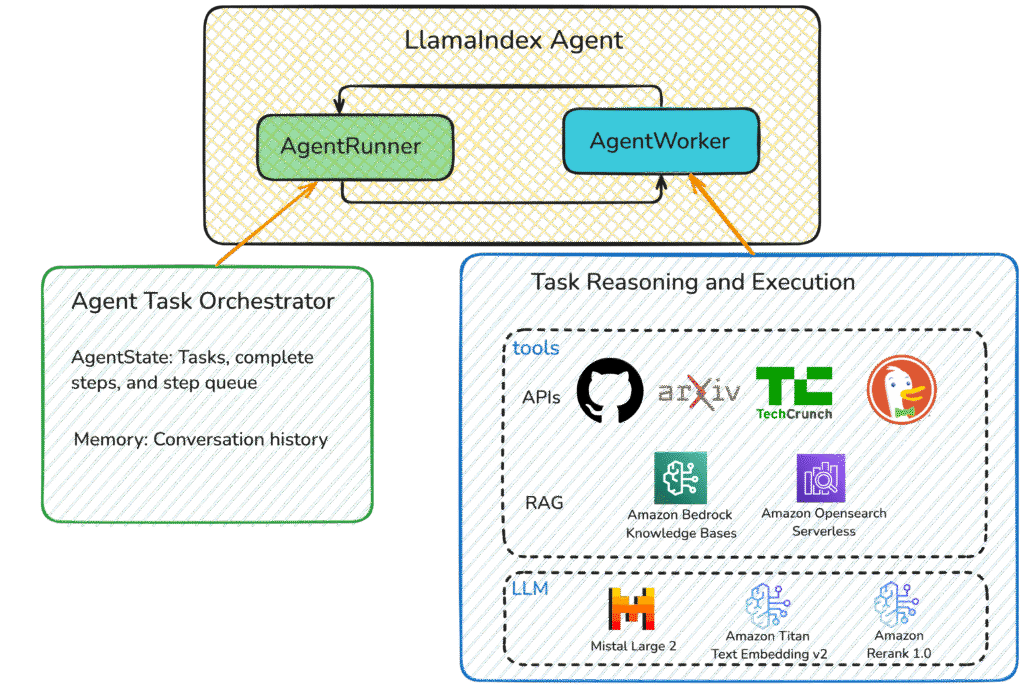

This solution uses the LlamaIndex framework to build an agent flow with two main components: AgentRunner and AgentWorker. The AgentRunner serves as an orchestrator that manages conversation history, creates and maintains tasks, executes task steps, and provides a user-friendly interface for interactions. The AgentWorker handles the step-by-step reasoning and task execution.

For reasoning and task planning, we use Mistral Large 2 on Amazon Bedrock. You can use other text generation FMs available from Amazon Bedrock. For the full list of supported models, see Supported foundation models in Amazon Bedrock. The agent integrates with GitHub, arXiv, TechCrunch, and DuckDuckGo APIs, while also accessing internal knowledge through a RAG framework to provide context-aware answers.

In this solution, we present two options for building the RAG framework:

- Document integration with Amazon OpenSearch Serverless – The first option involves using LlamaIndex to programmatically load and process documents. It splits the documents into chunks using various chunking strategies and then stores these chunks in an Amazon OpenSearch Serverless vector store for future retrieval.

- Document integration with Amazon Bedrock Knowledge Bases – The second option uses Amazon Bedrock Knowledge Bases, a fully managed service that handles the loading, processing, and chunking of documents. This service can quickly create a new vector store on your behalf with a few configurations and clicks. You can choose from Amazon OpenSearch Serverless, Amazon Aurora PostgreSQL-Compatible Edition Serverless, and Amazon Neptune Analytics. Additionally, the solution includes a document retrieval rerank feature to enhance the relevance of the responses.

You can select the RAG implementation option that best suits your preference and developer skill level.

The following diagram illustrates the solution architecture.

In the following sections, we present the steps to implement the agentic RAG application. You can also find the sample code in the GitHub repository.

Prerequisites

The solution has been tested in the AWS Region us-west-2. Complete the following steps before proceeding:

- Set up the following resources:

- Create an Amazon SageMaker domain.

- Create a SageMaker domain user profile.

- Launch Amazon SageMaker Studio, select JupyterLab, and create a space.

- Select the instance t3.medium and the image SageMaker Distribution 2.3.1, then run the space.

- Request model access:

- On the Amazon Bedrock console, choose Model access in the navigation pane.

- Choose Modify model access.

- Select the models Mistral Large 2 (24.07), Amazon Titan Text Embeddings V2, and Rerank 1.0 from the list, and request access to these models.

- Configure AWS Identity and Access Management (IAM) permissions:

- In the SageMaker console, go to the SageMaker user profile details and find the execution role that the SageMaker notebook uses. It should look like AmazonSageMaker-ExecutionRole-20250213T123456.

- In the IAM console, create an inline policy for this execution role. Make sure that your role can perform the following actions:

- Access to Amazon Bedrock services including:

- Reranking capabilities

- Retrieving information

- Invoking models

- Listing available foundation models

- IAM permissions to:

- Create policies

- Attach policies to roles within your account

- Full access to Amazon OpenSearch Serverless service

- Run the following command in the JupyterLab notebook terminal to download the sample code from GitHub:

- Finally, install the required Python packages by running the following command in the terminal:
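For the IAM step in the preceding list, the inline policy might look like the following sketch. It is written as a Python dictionary so that it can be serialized to JSON and pasted into the IAM console. The action names are our assumption about which IAM actions match the capabilities listed above, and the wildcard resources are placeholders that you should narrow for anything beyond experimentation.

```python
import json

# Assumed mapping of the capabilities listed above to IAM actions.
# Resources are left as "*" for brevity; scope them down in practice.
EXECUTION_ROLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "BedrockAccess",
            "Effect": "Allow",
            "Action": [
                "bedrock:Rerank",
                "bedrock:Retrieve",
                "bedrock:InvokeModel",
                "bedrock:InvokeModelWithResponseStream",
                "bedrock:ListFoundationModels",
            ],
            "Resource": "*",
        },
        {
            "Sid": "IamPolicyManagement",
            "Effect": "Allow",
            "Action": ["iam:CreatePolicy", "iam:AttachRolePolicy"],
            "Resource": "*",
        },
        {
            "Sid": "OpenSearchServerlessFullAccess",
            "Effect": "Allow",
            "Action": "aoss:*",
            "Resource": "*",
        },
    ],
}


def policy_json() -> str:
    """Serialize the policy so it can be pasted into the IAM console."""
    return json.dumps(EXECUTION_ROLE_POLICY, indent=2)
```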

Initialize the models

Initialize the FM used for orchestrating the agentic flow with the Amazon Bedrock Converse API. This API provides a unified interface for interacting with various FMs available on Amazon Bedrock. This standardization simplifies the development process, allowing developers to write code one time and switch between different models without adjusting for model-specific differences. In this example, we use the Mistral Large 2 model on Amazon Bedrock.

Next, initialize the embedding model from Amazon Bedrock, which is used for converting document chunks into embedding vectors. For this example, we use Amazon Titan Text Embeddings V2. See the following code:
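A minimal sketch of this initialization might look like the following. It assumes the `llama-index-llms-bedrock-converse` and `llama-index-embeddings-bedrock` packages are installed, and the model IDs shown are our assumption for Mistral Large 2 (24.07) and Amazon Titan Text Embeddings V2; confirm them on the Amazon Bedrock console for your Region.

```python
# Assumed identifiers; confirm them on the Amazon Bedrock console.
AWS_REGION = "us-west-2"
LLM_MODEL_ID = "mistral.mistral-large-2407-v1:0"
EMBED_MODEL_ID = "amazon.titan-embed-text-v2:0"


def init_models(region: str = AWS_REGION):
    """Return (llm, embed_model) and register both as LlamaIndex defaults."""
    # Imported lazily so this module still loads where the LlamaIndex
    # Bedrock integrations are not installed.
    from llama_index.core import Settings
    from llama_index.embeddings.bedrock import BedrockEmbedding
    from llama_index.llms.bedrock_converse import BedrockConverse

    llm = BedrockConverse(model=LLM_MODEL_ID, region_name=region)
    embed_model = BedrockEmbedding(model_name=EMBED_MODEL_ID, region_name=region)

    # Later components pick these up when no model is passed explicitly.
    Settings.llm = llm
    Settings.embed_model = embed_model
    return llm, embed_model
```

Calling `init_models()` requires AWS credentials with access to both models.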

Integrate API tools

Implement two functions to interact with the GitHub and TechCrunch APIs. The APIs shown in this post don’t require credentials. To provide clear communication between the agent and the foundation model, follow Python function best practices, including:

- Type hints for parameter and return value validation

- Detailed docstrings explaining function purpose, parameters, and expected returns

- Clear function descriptions

The following code sample shows the function that integrates with the GitHub API. After the function is created, use the FunctionTool.from_defaults() method to wrap the function as a tool and integrate it seamlessly into the LlamaIndex workflow.
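As an illustration of these practices, the following sketch wraps the public GitHub repository search endpoint. The function and helper names (`github_search`, `build_search_url`, `summarize_repos`, `as_llamaindex_tool`) are hypothetical and are not taken from the sample repository; only `FunctionTool.from_defaults()` comes from this post.

```python
import json
import urllib.parse
import urllib.request
from typing import Any

GITHUB_SEARCH_URL = "https://api.github.com/search/repositories"


def build_search_url(topic: str, num: int) -> str:
    """Build the search URL for the most-starred repositories on a topic."""
    query = urllib.parse.urlencode(
        {"q": f"topic:{topic}", "sort": "stars", "order": "desc", "per_page": num}
    )
    return f"{GITHUB_SEARCH_URL}?{query}"


def summarize_repos(payload: dict[str, Any]) -> list[dict[str, Any]]:
    """Keep only the fields the agent needs from the API response."""
    return [
        {
            "name": item["full_name"],
            "stars": item["stargazers_count"],
            "url": item["html_url"],
        }
        for item in payload.get("items", [])
    ]


def github_search(topic: str, num: int = 5) -> list[dict[str, Any]]:
    """Retrieve the most popular GitHub repositories for a topic.

    Args:
        topic: Topic to search for, for example "generative-ai".
        num: Maximum number of repositories to return.

    Returns:
        A list of dictionaries with the repository name, star count, and URL.
    """
    request = urllib.request.Request(
        build_search_url(topic, num),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return summarize_repos(json.load(response))


def as_llamaindex_tool():
    """Wrap github_search as a LlamaIndex tool (requires llama-index-core)."""
    from llama_index.core.tools import FunctionTool

    # The docstring and type hints become the tool description and schema.
    return FunctionTool.from_defaults(fn=github_search)
```

Keeping URL construction and response parsing in separate helpers makes the function easier to test without calling the network.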

See the code repository for the full code samples of the function that integrates with the TechCrunch API.

For arXiv and DuckDuckGo integration, we use LlamaIndex’s pre-built tools instead of creating custom functions. You can explore other available pre-built tools in the LlamaIndex documentation to avoid duplicating existing solutions.
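Loading the pre-built tools might look like the following sketch. It assumes the `llama-index-tools-arxiv` and `llama-index-tools-duckduckgo` packages are installed; the helper name `load_prebuilt_tools` is hypothetical.

```python
# Assumed pip package names for the two pre-built tool integrations.
REQUIRED_PACKAGES = ("llama-index-tools-arxiv", "llama-index-tools-duckduckgo")


def load_prebuilt_tools() -> list:
    """Return the arXiv and DuckDuckGo tools as one flat list."""
    # Imported lazily so this module loads without the optional packages.
    from llama_index.tools.arxiv import ArxivToolSpec
    from llama_index.tools.duckduckgo import DuckDuckGoSearchToolSpec

    # Each tool spec can expose several tools; to_tool_list() flattens them.
    return ArxivToolSpec().to_tool_list() + DuckDuckGoSearchToolSpec().to_tool_list()
```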

RAG option 1: Document integration with Amazon OpenSearch Serverless

Next, programmatically build the RAG component using LlamaIndex to load, process, and chunk documents, and store the embedding vectors in Amazon OpenSearch Serverless. This approach offers greater flexibility for advanced scenarios, such as loading various file types (including .epub and .ppt) and selecting advanced chunking strategies based on file types (such as HTML, JSON, and code).

Before moving forward, you can download some PDF documents for testing from the AWS website using the following command, or you can use your own documents. The following documents are AWS guides that help in choosing the right generative AI service (such as Amazon Bedrock or Amazon Q) based on use case, customization needs, and automation potential. They also assist in selecting AWS machine learning (ML) services (such as SageMaker) for building models, using pre-trained AI, and using cloud infrastructure.

Load the PDF documents using SimpleDirectoryReader() in the following code. For a full list of supported file types, see the LlamaIndex documentation.
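A small sketch of the loading step follows. The directory name `docs` and both helper names are assumptions for illustration; `list_pdfs` is only a convenience check that the download produced the files you expect.

```python
from pathlib import Path


def list_pdfs(input_dir: str) -> list[str]:
    """Return the PDF files directly inside input_dir, sorted by name."""
    return sorted(str(path) for path in Path(input_dir).glob("*.pdf"))


def load_documents(input_dir: str = "docs"):
    """Load every PDF in input_dir as LlamaIndex Document objects."""
    # Imported lazily so this module loads without llama-index-core.
    from llama_index.core import SimpleDirectoryReader

    # required_exts restricts the reader to PDF files only.
    reader = SimpleDirectoryReader(input_dir=input_dir, required_exts=[".pdf"])
    return reader.load_data()
```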

Next, create an Amazon OpenSearch Serverless collection as the vector database. Check the utils.py file for details on the create_collection() function.

After you create the collection, create an index to store embedding vectors:
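As a rough sketch, the index definition for a k-NN vector field could be built as follows. The field names `vector` and `text`, and the choice of the HNSW method with the Faiss engine, are our assumptions and might differ from the sample repository. The dimension of 1024 matches the embedding size used in this post.

```python
# Embedding size used in this post with Amazon Titan Text Embeddings V2.
EMBEDDING_DIMENSION = 1024


def build_vector_index_body(
    vector_field: str = "vector",
    text_field: str = "text",
    dimension: int = EMBEDDING_DIMENSION,
) -> dict:
    """Build an OpenSearch index body with one k-NN vector field."""
    return {
        # Enable approximate k-NN search on this index.
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                vector_field: {
                    "type": "knn_vector",
                    "dimension": dimension,
                    "method": {
                        "name": "hnsw",
                        "engine": "faiss",
                        "space_type": "l2",
                    },
                },
                # Raw chunk text stored next to its embedding.
                text_field: {"type": "text"},
            }
        },
    }
```

With the `opensearch-py` client, a body like this is typically passed to `client.indices.create(index=..., body=...)`.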

Next, use the following code to implement a document search system using LlamaIndex integrated with Amazon OpenSearch Serverless. It first sets up AWS authentication to securely access OpenSearch Serverless, then configures a vector client that can handle 1024-dimensional embeddings (matching the output of the Amazon Titan Text Embeddings V2 model). The code processes input documents by breaking them into manageable chunks of 1,024 tokens with a 20-token overlap, converts these chunks into vector embeddings, and stores them in the OpenSearch Serverless vector index. You can select a different or more advanced chunking strategy by modifying the transformations parameter in the VectorStoreIndex.from_documents() method. For more information and examples, see the LlamaIndex documentation.
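To make the chunk size and overlap settings concrete, the following simplified sketch splits a list of tokens into fixed-size windows that share a few tokens with their neighbors. It is not the LlamaIndex implementation: it treats each list element as one token and ignores sentence boundaries.

```python
def chunk_tokens(
    tokens: list[str], chunk_size: int = 1024, chunk_overlap: int = 20
) -> list[list[str]]:
    """Split tokens into windows of chunk_size that overlap by chunk_overlap."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= chunk_overlap < chunk_size")

    # Each new chunk starts this many tokens after the previous one.
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a chunk reaches the end, so no chunk is pure overlap.
        if start + chunk_size >= len(tokens):
            break
    return chunks
```

In LlamaIndex, a splitter such as `SentenceSplitter(chunk_size=1024, chunk_overlap=20)` passed through the transformations parameter plays this role, although it respects sentence boundaries and counts tokenizer tokens rather than list elements.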

You can add a reranking step in the RAG pipeline, which improves the quality of information retrieved by making sure that the most relevant documents are presented to the language model, resulting in more accurate and on-topic responses:
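Conceptually, reranking is a second scoring pass over the chunks that the vector search already returned. The following sketch shows that shape with a toy scoring function; `term_overlap_score` is a stand-in we made up for illustration, whereas the solution in this post uses a rerank model on Amazon Bedrock to produce the relevance scores.

```python
from typing import Callable


def term_overlap_score(query: str, passage: str) -> float:
    """Toy relevance score: fraction of query terms found in the passage."""
    query_terms = set(query.lower().split())
    passage_terms = set(passage.lower().split())
    if not query_terms:
        return 0.0
    return len(query_terms & passage_terms) / len(query_terms)


def rerank(
    query: str,
    passages: list[str],
    score_fn: Callable[[str, str], float] = term_overlap_score,
    top_n: int = 3,
) -> list[tuple[str, float]]:
    """Re-score retrieved passages and keep the top_n most relevant."""
    scored = [(passage, score_fn(query, passage)) for passage in passages]
    # Highest score first; ties keep their original retrieval order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]
```

Retrieving more chunks than you need and then keeping only the top few after reranking is the usual pattern, because the reranker sees the query and each chunk together.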

Use the following code to test the RAG framework. You can compare results by enabling or disabling the reranker model.

Next, convert the vector store into a LlamaIndex QueryEngineTool, which requires a tool name and a comprehensive description. This tool is then combined with other API tools to create an agent worker that executes tasks in a step-by-step manner. The code initializes an AgentRunner to orchestrate the entire workflow, analyzing text inputs and generating responses. The system can be configured to support parallel tool execution for improved efficiency.
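The wiring described above can be sketched as follows. The tool name and description are hypothetical placeholders, and the imports assume a LlamaIndex release in which `FunctionCallingAgentWorker` and `AgentRunner` are available from `llama_index.core.agent`; newer releases might expose a different agent API.

```python
# Hypothetical tool metadata; the description guides the FM's tool choice.
KB_TOOL_NAME = "aws_service_selection_guides"
KB_TOOL_DESCRIPTION = (
    "Answers questions about choosing AWS generative AI and machine "
    "learning services, based on the ingested AWS decision guides."
)


def build_agent(query_engine, api_tools: list, llm, parallel_tools: bool = True):
    """Combine the RAG query engine with API tools and return an AgentRunner."""
    # Imported lazily so this module loads without llama-index-core.
    from llama_index.core.agent import AgentRunner, FunctionCallingAgentWorker
    from llama_index.core.tools import QueryEngineTool

    rag_tool = QueryEngineTool.from_defaults(
        query_engine=query_engine,
        name=KB_TOOL_NAME,
        description=KB_TOOL_DESCRIPTION,
    )
    # The worker performs the step-by-step reasoning and tool calls.
    worker = FunctionCallingAgentWorker.from_tools(
        [rag_tool, *api_tools],
        llm=llm,
        verbose=True,
        allow_parallel_tool_calls=parallel_tools,
    )
    # The runner orchestrates tasks and keeps the conversation history.
    return AgentRunner(worker)
```

After building the agent, a call such as `agent.chat("What are the most popular GitHub repositories for generative AI?")` returns the response.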

You have now completed building the agentic RAG application using LlamaIndex and Amazon OpenSearch Serverless. You can test the chatbot application with your own questions. For example, ask about the latest news and features regarding Amazon Bedrock, or inquire about the latest papers and most popular GitHub repositories related to generative AI.

RAG option 2: Document integration with Amazon Bedrock Knowledge Bases

In this section, you use Amazon Bedrock Knowledge Bases to build the RAG framework. You can create an Amazon Bedrock knowledge base on the Amazon Bedrock console or follow the provided notebook example to create it programmatically. Create a new Amazon Simple Storage Service (Amazon S3) bucket for the knowledge base, then upload the previously downloaded files to this S3 bucket. You can select different embedding models and chunking strategies that work better for your data. After you create the knowledge base, remember to sync the data. Data synchronization might take a few minutes.

To enable your newly created knowledge base to invoke the rerank model, you need to modify its permissions. First, open the Amazon Bedrock console and locate the service role that matches the one shown in the following screenshot.

Choose the role and add the following provided IAM permission policy as an inline policy. This additional authorization grants your knowledge base the necessary permissions to successfully invoke the rerank model on Amazon Bedrock.
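As a hedged sketch, such an inline policy could be generated as follows. The rerank model ID `amazon.rerank-v1:0` and the two actions are our assumptions about what the knowledge base service role needs; compare them with the policy provided in the sample repository before using them.

```python
import json


def rerank_policy(region: str = "us-west-2", model_id: str = "amazon.rerank-v1:0") -> dict:
    """Build an inline policy that lets a role invoke a Bedrock rerank model."""
    # Foundation model ARNs have no account ID segment.
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                "Resource": model_arn,
            },
            {
                "Effect": "Allow",
                "Action": "bedrock:Rerank",
                "Resource": "*",
            },
        ],
    }


def rerank_policy_json(**kwargs) -> str:
    """Serialize the policy so it can be pasted into the IAM console."""
    return json.dumps(rerank_policy(**kwargs), indent=2)
```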

Use the following code to integrate the knowledge base into the LlamaIndex framework. Specific configurations can be provided in the retrieval_config parameter, where numberOfResults is the maximum number of retrieved chunks from the vector store, and overrideSearchType has two valid values: HYBRID and SEMANTIC. In the rerankingConfiguration, you can optionally provide a rerank modelConfiguration and numberOfRerankedResults to sort the retrieved chunks by relevance score and select only the defined number of results. For the full list of available configurations for retrieval_config, refer to the Retrieve API documentation.
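The configuration described above might be assembled as in the following sketch. The helper names are hypothetical, and the nested key names follow the Retrieve API request structure as we understand it; verify them against the Retrieve API documentation. The retriever class assumes the `llama-index-retrievers-bedrock` package.

```python
from typing import Optional

VALID_SEARCH_TYPES = ("HYBRID", "SEMANTIC")


def build_retrieval_config(
    number_of_results: int = 10,
    search_type: str = "HYBRID",
    rerank_model_arn: Optional[str] = None,
    number_of_reranked_results: int = 3,
) -> dict:
    """Build a retrieval_config dictionary, optionally with reranking."""
    if search_type not in VALID_SEARCH_TYPES:
        raise ValueError(f"search_type must be one of {VALID_SEARCH_TYPES}")

    vector_config: dict = {
        "numberOfResults": number_of_results,
        "overrideSearchType": search_type,
    }
    # Reranking is optional; add it only when a model ARN is supplied.
    if rerank_model_arn is not None:
        vector_config["rerankingConfiguration"] = {
            "type": "BEDROCK_RERANKING_MODEL",
            "bedrockRerankingConfiguration": {
                "modelConfiguration": {"modelArn": rerank_model_arn},
                "numberOfRerankedResults": number_of_reranked_results,
            },
        }
    return {"vectorSearchConfiguration": vector_config}


def build_kb_retriever(knowledge_base_id: str, retrieval_config: dict):
    """Create a LlamaIndex retriever backed by a Bedrock knowledge base."""
    # Imported lazily so this module loads without the optional package.
    from llama_index.retrievers.bedrock import AmazonKnowledgeBasesRetriever

    return AmazonKnowledgeBasesRetriever(
        knowledge_base_id=knowledge_base_id,
        retrieval_config=retrieval_config,
    )
```

Setting numberOfResults higher than numberOfRerankedResults gives the reranker a wider pool of candidates to choose from.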

Like the first option, you can create the knowledge base as a QueryEngineTool in LlamaIndex and combine it with other API tools. Then, you can create a FunctionCallingAgentWorker using these combined tools and initialize an AgentRunner to interact with them. By using this approach, you can chat with and take advantage of the capabilities of the integrated tools.

Now you have built the agentic RAG solution using LlamaIndex and Amazon Bedrock Knowledge Bases.

Clean up

When you finish experimenting with this solution, use the following steps to clean up the AWS resources to avoid unnecessary costs:

- In the Amazon S3 console, delete the S3 bucket and data created for this solution.

- In the OpenSearch Service console, delete the collection that was created for storing the embedding vectors.

- In the Amazon Bedrock Knowledge Bases console, delete the knowledge base you created.

- In the SageMaker console, navigate to your domain and user profile, and launch SageMaker Studio to stop or delete the JupyterLab instance.

Conclusion

This post demonstrated how to build a powerful agentic RAG application using LlamaIndex and Amazon Bedrock that goes beyond traditional question answering systems. By integrating Mistral Large 2 as the orchestrating model with external APIs (GitHub, arXiv, TechCrunch, and DuckDuckGo) and internal knowledge bases, you’ve created a versatile technology discovery and research tool.

We showed you two complementary approaches to implement the RAG framework: a programmatic implementation using LlamaIndex with Amazon OpenSearch Serverless, providing maximum flexibility for advanced use cases, and a managed solution using Amazon Bedrock Knowledge Bases that simplifies document processing and storage with minimal configuration. You can try out the solution using the following code sample.

For more relevant information, see Amazon Bedrock, Amazon Bedrock Knowledge Bases, Amazon OpenSearch Serverless, and Use a reranker model in Amazon Bedrock. Refer to Mistral AI in Amazon Bedrock to see the latest Mistral models that are available on both Amazon Bedrock and AWS Marketplace.

About the Authors

Ying Hou, PhD, is a Sr. Specialist Solution Architect for Gen AI at AWS, where she collaborates with model providers to onboard the latest and most intelligent AI models onto AWS platforms. With deep expertise in Gen AI, ASR, computer vision, NLP, and time-series forecasting models, she works closely with customers to design and build cutting-edge ML and GenAI applications. Outside of architecting innovative AI solutions, she enjoys spending quality time with her family, getting lost in novels, and exploring the UK’s national parks.

Preston Tuggle is a Sr. Specialist Solutions Architect with the Third-Party Model Provider team at AWS. He focuses on working with model providers across Amazon Bedrock and Amazon SageMaker, helping them accelerate their go-to-market strategies through technical scaling initiatives and customer engagement.
