Human reasoning naturally operates through abstract, non-verbal concepts rather than relying strictly on discrete linguistic tokens. Current LLMs, however, reason only within the boundaries of natural language, producing one token at a time from a predefined vocabulary. This token-by-token approach restricts the expressive capacity of the model and narrows the range of reasoning paths it can explore, especially in ambiguous or complex scenarios. Standard Chain-of-Thought (CoT) methods exemplify this limitation by forcing the model to commit to a single path at each step. Human cognition, in contrast, is more flexible and parallel: it can consider multiple ideas simultaneously and delay verbalization until concepts are fully formed. This makes human reasoning more adaptable and robust under uncertainty.

To address these limitations, researchers have proposed moving from token-based reasoning to reasoning within a continuous concept space, where each reasoning step is represented as a combination of token embeddings. This approach allows models to explore multiple reasoning trajectories in parallel and to integrate richer conceptual representations. Prior studies have demonstrated the potential of manipulating hidden states to influence reasoning outcomes or introduce latent planning. Applying continuous-space reasoning to larger models, however, presents challenges. In models under 7B parameters, shared weights between input and output layers allow hidden states to align with token embeddings, which facilitates continuous reasoning. In larger models, where the input and output spaces are decoupled, feeding hidden states directly back in as inputs causes mismatches that are hard to resolve. Attempts to retrain these models to bridge the gap often result in overfitting or degraded performance, highlighting how difficult it is to enable effective continuous reasoning at scale.

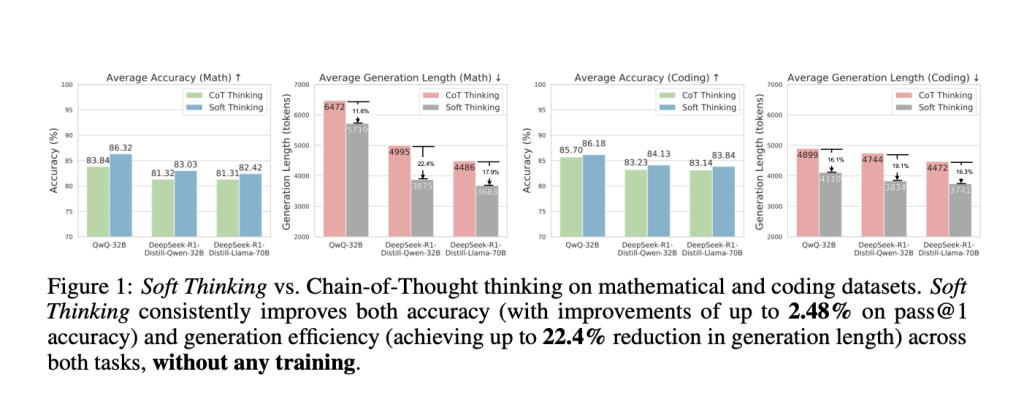

Researchers from the University of California, Santa Barbara, University of California, Santa Cruz, University of California, Los Angeles, Purdue University, LMSYS Org, and Microsoft introduce Soft Thinking, a training-free approach that enhances reasoning in large language models by operating in a continuous concept space. Instead of choosing one discrete token at each step, the model generates concept tokens (probability-weighted mixtures of all token embeddings), which enables parallel reasoning over multiple paths and yields richer, more abstract representations. The method includes a Cold Stop mechanism to improve efficiency. Evaluations on mathematical and coding tasks show up to 2.48 points higher pass@1 accuracy and up to 22.4% fewer generated tokens than standard Chain-of-Thought reasoning.
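To make the idea of a concept token concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names, the toy 3-token vocabulary, and the embedding values are all made up for illustration, and a real model would do this with tensor operations over its full embedding matrix.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw next-token logits into a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def concept_token(probs, embedding_table):
    """Probability-weighted mixture of all token embeddings."""
    dim = len(embedding_table[0])
    mixed = [0.0] * dim
    for p, emb in zip(probs, embedding_table):
        for i in range(dim):
            mixed[i] += p * emb[i]
    return mixed

# Hypothetical toy setup: 3 tokens, 2-dimensional embeddings.
embedding_table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
logits = [2.0, 2.0, 0.0]  # the model is torn between tokens 0 and 1

probs = softmax(logits)
soft_input = concept_token(probs, embedding_table)

# Discrete CoT would instead commit to a single token and feed only
# that token's embedding to the next step.
best = max(range(len(probs)), key=probs.__getitem__)
hard_input = embedding_table[best]
```

In this toy case the discrete path keeps only token 0 and discards the equally likely token 1, while `soft_input` carries both candidates forward in a single vector.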

The Soft Thinking method enhances standard CoT reasoning by replacing discrete token sampling with concept tokens, which are probability distributions over the entire vocabulary. Each distribution is used to compute a probability-weighted embedding that serves as the next input, allowing the model to reason in a continuous concept space. This preserves uncertainty and enables parallel exploration of multiple reasoning paths. A Cold Stop mechanism monitors entropy and halts reasoning once the model becomes confident, improving efficiency and preventing collapse. Theoretical analysis shows that Soft Thinking approximates the full marginalization over all reasoning paths through linearization, offering a more expressive and computationally tractable alternative to discrete CoT.
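The linearization argument can be written out roughly as follows. The notation is an assumption chosen for this sketch rather than taken from the article: $x$ is the prompt, $t_i$ the $i$-th discrete reasoning token, $e(t)$ its embedding, $ct_i$ the $i$-th concept token, and $y$ the answer.

```latex
% Discrete CoT implicitly marginalizes over every possible reasoning path:
p(y \mid x) = \sum_{t_1} p(t_1 \mid x) \sum_{t_2} p(t_2 \mid x, t_1) \cdots
              \sum_{t_m} p(t_m \mid x, t_{1:m-1}) \, p(y \mid x, t_{1:m})

% Linearizing the first step moves the expectation inside the model:
\sum_{t_1} p(t_1 \mid x) \, p\bigl(\cdot \mid x, e(t_1)\bigr)
  \approx p\Bigl(\cdot \,\Big|\, x, \sum_{t_1} p(t_1 \mid x) \, e(t_1)\Bigr)
  = p(\cdot \mid x, ct_1)

% Applying the same step repeatedly gives a single forward trajectory:
p(y \mid x) \approx p(y \mid x, ct_1, ct_2, \ldots, ct_m)
```

The exact sum grows exponentially with the number of reasoning steps, whereas the approximation needs only one forward pass per step, which is the sense in which the continuous version is more tractable.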

The study evaluates Soft Thinking on eight benchmarks in math and programming using three open-source LLMs of varying sizes and architectures. Compared to standard and greedy CoT methods, Soft Thinking consistently improves pass@1 accuracy while significantly reducing the number of tokens generated, indicating more efficient reasoning. The approach uses concept tokens and a Cold Stop controller without modifying model weights or requiring extra training. Experiments show that Soft Thinking combines higher accuracy with lower computational cost, outperforming baselines by enabling richer, more abstract reasoning in fewer steps across diverse tasks and models.
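The Cold Stop controller can be pictured as a small entropy monitor that sits outside the model, which is why no weights need to change. The sketch below is one plausible reading of "halt when the model becomes confident": the `ColdStop` class, the entropy threshold, and the requirement of several consecutive confident steps are assumptions made for illustration, not values or names from the paper.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

class ColdStop:
    """Signals a stop after `patience` consecutive low-entropy steps."""

    def __init__(self, threshold=0.1, patience=3):
        self.threshold = threshold  # assumed value, not from the paper
        self.patience = patience    # assumed value, not from the paper
        self.low_steps = 0

    def should_stop(self, probs):
        if entropy(probs) < self.threshold:
            self.low_steps += 1
        else:
            self.low_steps = 0  # confidence was not sustained; start over
        return self.low_steps >= self.patience

controller = ColdStop(threshold=0.1, patience=2)
steps = [
    [0.5, 0.3, 0.2],        # uncertain
    [0.99, 0.005, 0.005],   # confident (1 in a row)
    [0.4, 0.4, 0.2],        # uncertain again, counter resets
    [0.99, 0.005, 0.005],   # confident (1 in a row)
    [0.995, 0.004, 0.001],  # confident (2 in a row), stop is signalled
]
decisions = [controller.should_stop(p) for p in steps]
```

When `should_stop` returns `True`, a decoding loop would end the reasoning phase and move on to the final answer, which is where the token savings would come from.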

In conclusion, Soft Thinking is a training-free approach that enables large language models to reason using continuous concept tokens instead of traditional discrete tokens. By combining weighted token embeddings, Soft Thinking allows models to explore multiple reasoning paths simultaneously, improving accuracy and efficiency. Tested on math and coding benchmarks, it consistently boosts pass@1 accuracy while reducing the number of generated tokens, all without extra training or architectural changes. The method maintains interpretability and concise reasoning. Future research may focus on training adaptations to enhance robustness, especially for out-of-distribution inputs. The code is publicly accessible.

The post LLMs Can Now Reason Beyond Language: Researchers Introduce Soft Thinking to Replace Discrete Tokens with Continuous Concept Embeddings appeared first on MarkTechPost.
