While large reasoning models (LRMs) have shown impressive capabilities in short-context reasoning through reinforcement learning (RL), these gains do not generalize well to long-context scenarios. Applications such as multi-document QA, research synthesis, and legal or financial analysis require models to process and reason over sequences exceeding 100K tokens. However, RL optimization in such regimes is plagued by slower reward convergence, unstable policy updates due to KL divergence fluctuations, and reduced exploration resulting from entropy collapse. These bottlenecks reveal a fundamental gap in transitioning LRMs from short-context proficiency to long-context generalization.

QwenLong-L1: A Structured RL Framework for Long-Context Adaptation

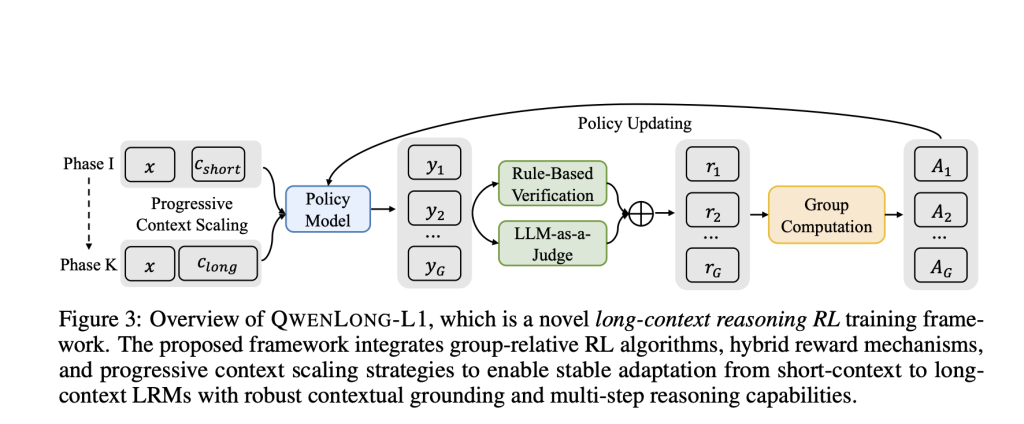

To address these limitations, the Qwen Research team introduces QwenLong-L1, a novel RL framework designed to adapt LRMs to long-context reasoning tasks. The framework is structured into three key stages:

- Warm-up Supervised Fine-Tuning (SFT): Provides a stable initialization for the policy model by training on curated question-context-answer triplets, ensuring basic competence in contextual comprehension and answer extraction.

- Curriculum-Guided Phased Reinforcement Learning: Introduces a staged training process with gradually increasing context lengths. This progression enables the model to incrementally acquire long-context reasoning behaviors without destabilizing policy updates.

- Difficulty-Aware Retrospective Sampling: Enhances exploration by maintaining and reusing hard examples from previous phases, weighted by their difficulty, to encourage deeper reasoning and robustness across diverse inputs.
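The retrospective sampling stage can be illustrated with a minimal sketch. It assumes a hypothetical difficulty score of one minus the mean reward from earlier rollouts, and draws prior-phase examples with probability proportional to that score. The names `difficulty`, `retrospective_sample`, and `prior_pool` are invented for illustration; the exact scoring used by the authors may differ.

```python
import random

def difficulty(rewards):
    """Hypothetical difficulty score: 1 minus the mean reward of earlier rollouts."""
    return 1.0 - sum(rewards) / len(rewards)

def retrospective_sample(prior_pool, k, rng=None, eps=1e-6):
    """Draw k example ids from earlier phases, favoring harder examples.

    prior_pool maps an example id to the list of rewards it received in
    earlier rollouts. Sampling is with replacement, and eps keeps fully
    solved examples from having exactly zero weight.
    """
    rng = rng or random.Random()
    ids = list(prior_pool)
    weights = [difficulty(prior_pool[i]) + eps for i in ids]
    return rng.choices(ids, weights=weights, k=k)

# Usage: the example that was rarely solved is drawn far more often.
pool = {"easy": [1, 1, 1, 1], "hard": [0, 0, 0, 1]}
picks = retrospective_sample(pool, k=8, rng=random.Random(0))
```

Weighting by difficulty keeps the training batch from filling up with examples the policy already solves, which is the exploration benefit described above.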

These stages are complemented by hybrid reward mechanisms—combining rule-based exact match verification with semantic evaluation by a lightweight LLM—ensuring both precision and recall during policy training.

Technical Design and Methodological Advantages

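QwenLong-L1 integrates recent advances in group-relative RL optimization, specifically GRPO (Group Relative Policy Optimization) and DAPO (Decoupled Clip and Dynamic Sampling Policy Optimization), to avoid the computational overhead of training a separate value model on long-context inputs: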

- GRPO estimates advantage by normalizing rewards within sampled groups, eliminating the need for a separate value network and encouraging diverse generation patterns.

- DAPO incorporates mechanisms such as dynamic sampling, overlength penalty shaping, and asymmetric clipping thresholds to prevent entropy collapse and mitigate length biases during training.
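The two ideas above can be sketched in a few lines. This is an illustrative sketch, not the framework's implementation: `group_relative_advantages` normalizes rewards within one sampled group, and `clipped_objective` applies a PPO-style clipped surrogate with different lower and upper thresholds. The default values `eps_low=0.2` and `eps_high=0.28` are assumptions chosen for illustration.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of sampled responses.

    Uses the group mean and population standard deviation, so no learned
    value network is needed. eps avoids division by zero when every
    response in the group receives the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

def clipped_objective(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """Per-token clipped surrogate with asymmetric thresholds.

    ratio is the new-to-old policy probability ratio. A larger upper
    threshold leaves more room to raise the probability of rare tokens.
    """
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)

# Usage: four responses to one prompt, two of them correct.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

A group in which every response gets the same reward yields all-zero advantages and therefore no gradient signal, which is the situation dynamic sampling is meant to filter out.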

The reward function is defined as the maximum of two signals: a deterministic rule-based match and a semantic judgment from a compact evaluator model (e.g., Qwen2.5-1.5B). This hybrid approach avoids overfitting to rigid formats while maintaining answer correctness across varied notations and phrasings.
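As a rough sketch of this max-of-two-signals design, the code below combines a crude exact-match check with a judge score. The `judge` argument is a hypothetical callable standing in for the compact evaluator model, and the `normalize` rule is an assumption made for illustration; the actual matching rules are not described here.

```python
import re
from typing import Callable

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace (deliberately crude)."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def rule_reward(prediction: str, gold: str) -> float:
    """Deterministic signal: 1.0 on a normalized exact match, else 0.0."""
    return 1.0 if normalize(prediction) == normalize(gold) else 0.0

def hybrid_reward(prediction: str, gold: str,
                  judge: Callable[[str, str], float]) -> float:
    """Final reward is the maximum of the rule-based and judge-based signals."""
    return max(rule_reward(prediction, gold), judge(prediction, gold))

# Usage with a stub judge that accepts one known paraphrase.
def stub_judge(prediction: str, gold: str) -> float:
    return 1.0 if (prediction, gold) == ("twelve", "12") else 0.0

reward = hybrid_reward("twelve", "12", stub_judge)
```

Taking the maximum means a correct answer in an unexpected notation is still rewarded when the judge accepts it, while a strict match never depends on the judge being right.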

Moreover, the framework is optimized via progressive context scaling, where the RL process transitions from 20K-token to 60K-token input lengths in controlled phases, stabilizing training dynamics and facilitating policy generalization.
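One way to picture the phased schedule is as a partition of the training set by input length. The sketch below is an assumption-laden illustration: the function name `build_phases` and the `num_tokens` field are hypothetical, and assigning each example only to the first phase whose cap it fits under is one possible reading of the schedule.

```python
def build_phases(examples, length_caps=(20_000, 60_000)):
    """Group examples into training phases by input token count.

    Phase i receives examples longer than the previous cap and no longer
    than its own cap. Examples above the last cap are left out.
    """
    phases = []
    lower = 0
    for cap in length_caps:
        phases.append([ex for ex in examples if lower < ex["num_tokens"] <= cap])
        lower = cap
    return phases

# Usage: token counts here are made-up placeholders.
examples = [
    {"id": "a", "num_tokens": 5_000},
    {"id": "b", "num_tokens": 20_000},
    {"id": "c", "num_tokens": 45_000},
    {"id": "d", "num_tokens": 90_000},
]
phase_one, phase_two = build_phases(examples)
```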

Experimental Results and Benchmark Performance

QwenLong-L1 was evaluated on seven long-context document QA benchmarks: DocMath, Frames, 2WikiMultihopQA, HotpotQA, Musique, NarrativeQA, and Qasper. The 32B variant, QwenLong-L1-32B, demonstrated strong empirical performance:

- It outperformed baseline models such as R1-Distill-Qwen-32B by 5.1 points and exceeded leading reasoning models such as OpenAI-o3-mini and Qwen3-235B-A22B.

- Its performance was comparable to Claude-3.7-Sonnet-Thinking, indicating competitive reasoning capabilities under extreme context lengths.

- Pass@K analysis showed consistent improvements as the number of samples increased. With only two samples, the model reached an average Pass@2 of 73.7, surpassing DeepSeek-R1 and OpenAI-o1-preview.
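For readers unfamiliar with the metric, Pass@K is commonly computed with the unbiased estimator shown below, given `n` sampled answers of which `c` are correct. Whether the authors used exactly this estimator is an assumption; the sketch only illustrates what the metric measures.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k answers drawn from n samples is correct.

    n is the number of sampled answers, c the number judged correct,
    and k the evaluation budget (k <= n).
    """
    if n - c < k:
        # Too few incorrect samples to fill a draw of size k.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

# Usage: 4 samples, 1 correct, budget of 2.
score = pass_at_k(n=4, c=1, k=2)
```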

Ablation studies further validated the individual contributions of SFT, phased RL, and retrospective sampling. Notably, RL played a decisive role in enabling emergent reasoning behaviors such as grounding, subgoal setting, verification, and backtracking—traits not effectively induced by supervised fine-tuning alone.

Conclusion

QwenLong-L1 represents a systematic approach to equipping LRMs with robust long-context reasoning capabilities through reinforcement learning. Its design effectively bridges the gap between short-context expertise and the demands of information-dense environments by combining supervised initialization, curriculum-driven context scaling, and hybrid evaluation strategies. The framework not only achieves state-of-the-art results across long-context benchmarks but also demonstrates the emergence of interpretable reasoning patterns during training.

The post Qwen Researchers Proposes QwenLong-L1: A Reinforcement Learning Framework for Long-Context Reasoning in Large Language Models appeared first on MarkTechPost.