As businesses increasingly integrate AI assistants, assessing how effectively these systems perform real-world tasks, particularly through voice-based interactions, is essential. Existing evaluation methods concentrate on broad conversational skills or limited, task-specific tool usage. However, these benchmarks fall short when measuring an AI agent’s ability to manage complex, specialized workflows across various domains. This gap highlights the need for more comprehensive evaluation frameworks that reflect the challenges AI assistants face in practical enterprise settings, ensuring they can truly support intricate, voice-driven operations in real-world environments.

To address the limitations of existing benchmarks, Salesforce AI Research & Engineering developed a robust evaluation system tailored to assess AI agents in complex enterprise tasks across both text and voice interfaces. This internal tool supports the development of products like Agentforce. It offers a standardized framework to evaluate AI assistant performance in four key business areas: managing healthcare appointments, handling financial transactions, processing inbound sales, and fulfilling e-commerce orders. Using carefully curated, human-verified test cases, the benchmark requires agents to complete multi-step operations, use domain-specific tools, and adhere to strict security protocols across both communication modes.

Traditional AI benchmarks often focus on general knowledge or basic instructions, but enterprise settings require more advanced capabilities. AI agents in these contexts must integrate with multiple tools and systems, follow strict security and compliance procedures, and understand specialized terms and workflows. Voice-based interactions add another layer of complexity due to potential speech recognition and synthesis errors, especially in multi-step tasks. Addressing these needs, the benchmark guides AI development toward more dependable and effective assistants tailored for enterprise use.

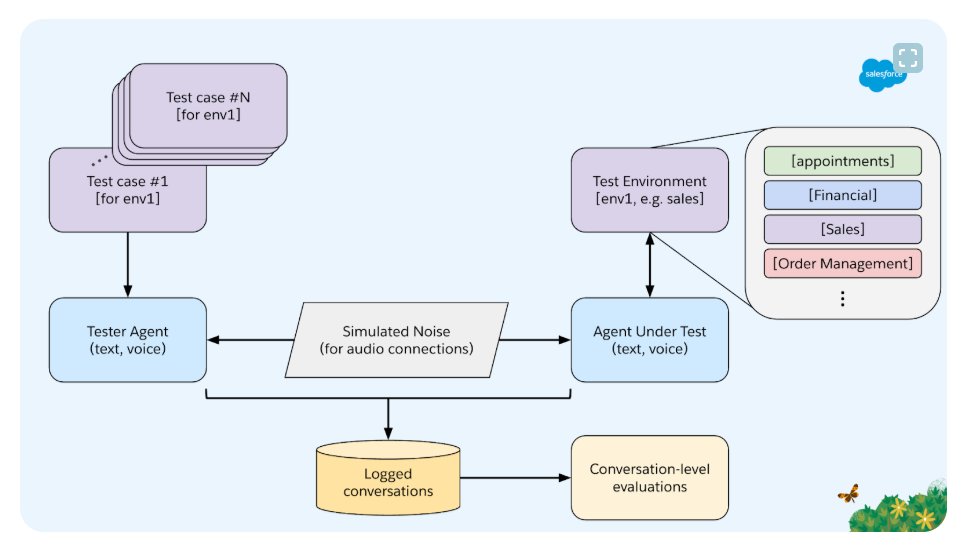

Salesforce’s benchmark uses a modular framework with four key components: domain-specific environments, predefined tasks with clear goals, simulated interactions that reflect real-world conversations, and measurable performance metrics. It evaluates AI across four enterprise domains: healthcare appointment management, financial services, sales, and e-commerce. Tasks range from simple requests to complex operations involving conditional logic and multiple system calls. With human-verified test cases, the benchmark ensures realistic challenges that test an agent’s reasoning, precision, and tool handling in both text and voice interfaces.

The evaluation framework measures AI agent performance based on two main criteria: accuracy, how correctly the agent completes the task, and efficiency, which are evaluated through conversational length and token usage. Both text and voice interactions are assessed, with the option to add audio noise to test system resilience. Implemented in Python, the modular benchmark supports realistic client-agent dialogues, multiple AI model providers, and configurable voice processing using built-in speech-to-text and text-to-speech components. An open-source release is planned, enabling developers to extend the framework to new use cases and communication formats.

Initial testing across top models like GPT-4 variants and Llama showed that financial tasks were the most error-prone due to strict verification requirements. Voice-based tasks also saw a 5–8% drop in performance compared to text. Accuracy declined further on multi-step tasks, especially those requiring conditional logic. These findings highlight ongoing challenges in tool-use chaining, protocol compliance, and speech processing. While robust, the benchmark lacks personalization, real-world user behavior diversity, and multilingual capabilities. Future work will address these gaps by expanding domains, introducing user modeling, and incorporating more subjective and cross-lingual evaluations.

Check out the Technical details. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 95k+ ML SubReddit and Subscribe to our Newsletter.

The post Evaluating Enterprise-Grade AI Assistants: A Benchmark for Complex, Voice-Driven Workflows appeared first on MarkTechPost.

Source: Read MoreÂ