Improving response quality for user queries is essential for AI-driven applications, especially those that focus on user satisfaction. For example, an HR chat-based assistant should strictly follow company policies and respond in a specific tone. Deviations from these expectations can be corrected using feedback from users. This post demonstrates how Amazon Bedrock, combined with a user feedback dataset and few-shot prompting, can refine responses for higher user satisfaction. Using Amazon Titan Text Embeddings v2 to retrieve similar past queries, we demonstrate a statistically significant improvement in response quality, which makes this approach a valuable tool for applications that need accurate and personalized responses.

Recent studies have highlighted the value of feedback and prompting in refining AI responses. Prompt Optimization with Human Feedback proposes a systematic approach to learning from user feedback, using it to iteratively fine-tune models for improved alignment and robustness. Similarly, Black-Box Prompt Optimization: Aligning Large Language Models without Model Training demonstrates how retrieval augmented chain-of-thought prompting enhances few-shot learning by integrating relevant context, enabling better reasoning and response quality. Building on these ideas, our work uses the Amazon Titan Text Embeddings v2 model to optimize responses using available user feedback and few-shot prompting, achieving statistically significant improvements in user satisfaction. Amazon Bedrock already provides an automatic prompt optimization feature that adapts and optimizes prompts without additional user input. In this post, we show how to use open source libraries for a more customized optimization based on user feedback and few-shot prompting.

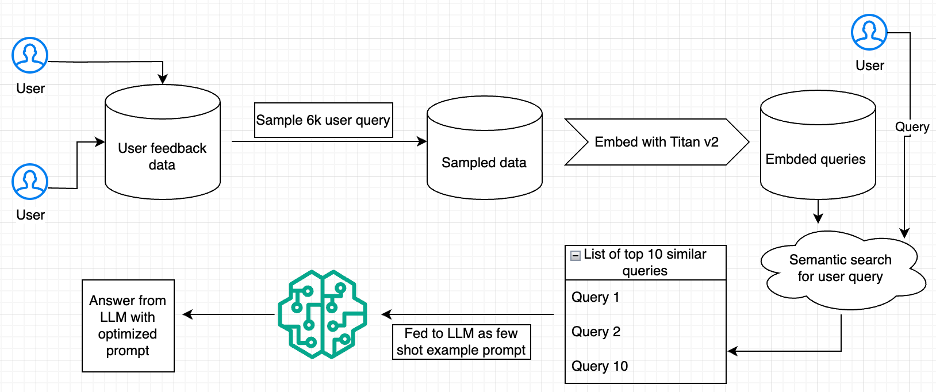

We’ve developed a practical solution using Amazon Bedrock that automatically improves chat assistant responses based on user feedback. This solution uses embeddings and few-shot prompting. To demonstrate the effectiveness of the solution, we used a publicly available user feedback dataset. When applying it inside a company, you can instead use feedback data collected from your own users. With our test dataset, the solution shows a 3.67 percentage point increase in user satisfaction scores (from 0.8696 to 0.9063). The key steps include:

- Retrieve a publicly available user feedback dataset (for this example, Unified Feedback Dataset on Hugging Face).

- Create embeddings for queries to capture semantically similar examples, using Amazon Titan Text Embeddings.

- Use similar queries as examples in a few-shot prompt to generate optimized prompts.

- Compare optimized prompts against direct large language model (LLM) calls.

- Validate the improvement in response quality using a paired sample t-test.

The following diagram is an overview of the system.

The key benefits of using Amazon Bedrock are:

- Zero infrastructure management – Deploy and scale without managing complex machine learning (ML) infrastructure

- Cost-effective – Pay only for what you use with the Amazon Bedrock pay-as-you-go pricing model

- Enterprise-grade security – Use AWS built-in security and compliance features

- Straightforward integration – Integrate seamlessly with existing applications and open source tools

- Multiple model options – Access various foundation models (FMs) for different use cases

The following sections dive deeper into these steps, providing code snippets from the notebook to illustrate the process.

Prerequisites

Prerequisites for implementation include an AWS account with Amazon Bedrock access, Python 3.8 or later, and configured AWS credentials.

Data collection

We downloaded a user feedback dataset from Hugging Face, llm-blender/Unified-Feedback. The dataset contains fields such as conv_A_user (the user query) and conv_A_rating (a binary rating, where 0 means the user didn’t like the response and 1 means the user liked it). The following code retrieves the dataset and focuses on the fields needed for embedding generation and feedback analysis. It can be run in an Amazon SageMaker notebook or a Jupyter notebook that has access to Amazon Bedrock.
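The notebook listing is not reproduced in this text, so the following is only a minimal sketch of this step, not the authors' code. The helper names `select_feedback_fields` and `load_feedback` are hypothetical, and `config_name` is left as a parameter because the post does not say which subset of the dataset was used. The sketch also assumes that the loaded rows expose the two fields exactly as named above.

```python
from typing import Dict, Iterable, List

# Field names as described in the post.
FIELDS = ("conv_A_user", "conv_A_rating")


def select_feedback_fields(records: Iterable[Dict]) -> List[Dict]:
    """Keep only the query and rating fields, skipping incomplete rows."""
    selected = []
    for row in records:
        if all(row.get(field) is not None for field in FIELDS):
            selected.append({field: row[field] for field in FIELDS})
    return selected


def load_feedback(config_name: str, split: str = "train") -> List[Dict]:
    """Download one subset of the dataset and reduce it to the needed fields.

    Assumption: `config_name` must name a subset of the dataset; the post
    does not state which one the authors used.
    """
    # Imported lazily so the helper above works without the `datasets` package.
    from datasets import load_dataset

    dataset = load_dataset("llm-blender/Unified-Feedback", config_name, split=split)
    return select_feedback_fields(dataset)
```

Keeping the field selection separate from the download makes it possible to reuse the same filter on feedback collected inside a company.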

Data sampling and embedding generation

To manage the process effectively, we sampled 6,000 queries from the dataset. We used Amazon Titan Text Embeddings v2 to create embeddings for these queries, transforming text into high-dimensional representations that allow for similarity comparisons. See the following code:
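The original listing is missing here. As a hedged sketch of how the embeddings could be generated, the following calls the Amazon Bedrock runtime `invoke_model` API with the Titan Text Embeddings V2 model ID. The function names, the default Region, and the choice to pass the client in as a parameter are assumptions made for illustration.

```python
import json
from typing import Any, List

MODEL_ID = "amazon.titan-embed-text-v2:0"  # Titan Text Embeddings V2


def make_client(region_name: str = "us-east-1") -> Any:
    """Create a Bedrock runtime client (the Region is an assumption)."""
    import boto3  # imported lazily; requires configured AWS credentials

    return boto3.client("bedrock-runtime", region_name=region_name)


def embed_text(client: Any, text: str) -> List[float]:
    """Return the embedding vector for a single query."""
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def embed_queries(client: Any, queries: List[str]) -> List[List[float]]:
    """Embed each query in turn; one API call per query."""
    return [embed_text(client, query) for query in queries]
```

For 6,000 queries this makes 6,000 sequential calls, so in practice you might cache the results to disk after the first run.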

Few-shot prompting with similarity search

For this part, we took the following steps:

- Sample 100 queries from the dataset for testing. Sampling 100 queries helps us run multiple trials to validate our solution.

- Compute cosine similarity (measure of similarity between two non-zero vectors) between the embeddings of these test queries and the stored 6,000 embeddings.

- Select the top k most similar queries for each test query to serve as few-shot examples. We set k = 10 to balance computational efficiency against the diversity of the examples.

See the following code:
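The listing for this step is not included in this text. The steps above can be sketched as follows, using only the Python standard library so the example stays dependency-free. The function names are hypothetical, and for 100 test queries against 6,000 stored embeddings a vectorized implementation (for example with NumPy) would likely be much faster.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def top_k_similar(
    query_vec: Sequence[float],
    stored_vecs: Sequence[Sequence[float]],
    k: int = 10,
) -> List[Tuple[int, float]]:
    """Return (index, similarity) for the k stored vectors closest to the query."""
    scored = (
        (index, cosine_similarity(query_vec, vec))
        for index, vec in enumerate(stored_vecs)
    )
    # nlargest returns the results sorted from most to least similar.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

The returned indices can be used to look up the matching queries and their ratings in the sampled dataset.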

This code provides a few-shot context for each test query, using cosine similarity to retrieve the closest matches. These example queries and feedback serve as additional context to guide the prompt optimization. The following function generates the few-shot prompt:
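The function itself is not shown in this text. One way to assemble such a prompt is sketched below. The wording of the prompt and the name `build_few_shot_prompt` are illustrative assumptions, not the authors' template.

```python
from typing import List, Tuple


def build_few_shot_prompt(user_query: str, examples: List[Tuple[str, int]]) -> str:
    """Build a few-shot prompt from (query, rating) pairs.

    A rating of 1 means the user liked the response; 0 means they did not.
    """
    lines = [
        "You write instructions that help an assistant answer a user query well.",
        "Below are similar past queries with the user's feedback on the answer.",
        "",
    ]
    for number, (query, rating) in enumerate(examples, start=1):
        verdict = "liked" if rating == 1 else "disliked"
        lines.append(f"Example {number}: {query}")
        lines.append(f"Feedback {number}: the user {verdict} the response")
    lines.append("")
    lines.append(f"New user query: {user_query}")
    lines.append("Write an optimized prompt for answering the new query.")
    return "\n".join(lines)
```

Including both liked and disliked examples lets the model see what to avoid as well as what to imitate.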

The get_optimized_prompt function performs the following tasks:

- Builds a few-shot prompt from the user query and the similar examples.

- Uses the few-shot prompt in an LLM call to generate an optimized prompt.

- Uses Pydantic to make sure the output follows the expected format.

See the following code:
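The listing is missing from this text, so the following is a hedged sketch rather than the authors' function. It uses the Amazon Bedrock Converse API and, to stay dependency-free, validates the output with a small dataclass as a stand-in for the Pydantic model the post describes. The function signature, the JSON key `optimized_prompt`, the inference settings, and the model ID default are all assumptions.

```python
import json
from dataclasses import dataclass
from typing import Any

# Assumed model ID for Claude 3.5 Haiku; some Regions need an inference profile ID.
MODEL_ID = "anthropic.claude-3-5-haiku-20241022-v1:0"


@dataclass
class OptimizedPrompt:
    """Stand-in for the Pydantic output model mentioned in the post."""
    optimized_prompt: str


def parse_optimized_prompt(raw: str) -> OptimizedPrompt:
    """Validate that the model replied with the expected JSON shape."""
    data = json.loads(raw)
    value = data.get("optimized_prompt") if isinstance(data, dict) else None
    if not isinstance(value, str) or not value.strip():
        raise ValueError("expected JSON with a non-empty 'optimized_prompt' string")
    return OptimizedPrompt(optimized_prompt=value.strip())


def get_optimized_prompt(
    client: Any, few_shot_prompt: str, model_id: str = MODEL_ID
) -> OptimizedPrompt:
    """Ask the LLM to turn the few-shot prompt into an optimized prompt."""
    instruction = (
        few_shot_prompt
        + '\n\nReply with JSON only, in the form {"optimized_prompt": "<text>"}'
    )
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": instruction}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.0},
    )
    raw = response["output"]["message"]["content"][0]["text"]
    return parse_optimized_prompt(raw)
```

With Pydantic installed, `OptimizedPrompt` could instead subclass `BaseModel`, which would replace the manual checks in `parse_optimized_prompt`.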

The make_llm_call_with_optimized_prompt function uses an optimized prompt and the user query to call the LLM (Anthropic’s Claude 3.5 Haiku) and get the final response:
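The original function body is not included here. A minimal sketch using the Converse API might look like the following. Passing the optimized prompt as the system prompt is a design assumption, as are the parameter names, the inference settings, and the model ID default.

```python
from typing import Any

# Assumed model ID for Claude 3.5 Haiku; some Regions need an inference profile ID.
MODEL_ID = "anthropic.claude-3-5-haiku-20241022-v1:0"


def make_llm_call_with_optimized_prompt(
    client: Any, optimized_prompt: str, user_query: str, model_id: str = MODEL_ID
) -> str:
    """Answer the user query, guided by the optimized prompt."""
    response = client.converse(
        modelId=model_id,
        # Assumption: the optimized prompt is supplied as the system prompt.
        system=[{"text": optimized_prompt}],
        messages=[{"role": "user", "content": [{"text": user_query}]}],
        inferenceConfig={"maxTokens": 1024, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]
```

A baseline call for comparison would be the same request without the `system` argument.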

Comparative evaluation of optimized and unoptimized prompts

To compare the optimized prompt with the baseline (in this case, the unoptimized prompt), we defined a function that returned a result without an optimized prompt for all the queries in the evaluation dataset:

The following function generates the query response using similarity search and intermediate optimized prompt generation for all the queries in the evaluation dataset:

This code compares responses generated with and without few-shot optimization, setting up the data for evaluation.
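Because the two functions are not reproduced in this text, the following sketch only illustrates how the paired responses could be collected for evaluation. The name `collect_responses` and the dictionary keys are hypothetical. The two callables stand in for the unoptimized and optimized generation paths described above.

```python
from typing import Callable, Dict, List


def collect_responses(
    queries: List[str],
    baseline_fn: Callable[[str], str],
    optimized_fn: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Generate both responses for every query so they can be scored in pairs."""
    results = []
    for query in queries:
        results.append(
            {
                "query": query,
                "unoptimized": baseline_fn(query),
                "optimized": optimized_fn(query),
            }
        )
    return results
```

Keeping both responses in the same record preserves the pairing that the paired sample t-test relies on later.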

LLM as judge and evaluation of responses

To quantify response quality, we used an LLM as a judge to score the optimized and unoptimized responses for alignment with the user query. We used Pydantic here to make sure the output sticks to the desired pattern of 0 (LLM predicts the response won’t be liked by the user) or 1 (LLM predicts the response will be liked by the user):

LLM-as-a-judge is a technique in which an LLM evaluates a text against stated criteria or grounding examples. We use it here to judge the difference between the results from the optimized and unoptimized prompts. Amazon Bedrock launched an LLM-as-a-judge capability in December 2024 that can be used for such use cases. In the following function, we demonstrate how the LLM acts as an evaluator, scoring responses based on their alignment and satisfaction for the full evaluation dataset:
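The evaluator function is not shown in this text. As a hedged illustration, the sketch below asks the model for a binary score through the Converse API and validates the reply with plain Python checks, standing in for the Pydantic validation described above. The judge prompt wording, the JSON key `score`, the function names, and the model ID default are assumptions.

```python
import json
from typing import Any, List, Tuple

# Assumed model ID for Claude 3.5 Haiku; some Regions need an inference profile ID.
MODEL_ID = "anthropic.claude-3-5-haiku-20241022-v1:0"


def parse_judge_score(raw: str) -> int:
    """Accept only the integers 0 or 1 under the 'score' key."""
    data = json.loads(raw)
    score = data.get("score") if isinstance(data, dict) else None
    # type() check rejects booleans and floats, which would otherwise compare equal.
    if type(score) is not int or score not in (0, 1):
        raise ValueError("judge must return {'score': 0} or {'score': 1}")
    return score


def judge_response(
    client: Any, user_query: str, response_text: str, model_id: str = MODEL_ID
) -> int:
    """Predict whether the user will like the response (1) or not (0)."""
    prompt = (
        "Predict whether the user will like the response.\n"
        f"User query: {user_query}\n"
        f"Response: {response_text}\n"
        'Reply with JSON only: {"score": 0} if the user will not like it, '
        'or {"score": 1} if the user will like it.'
    )
    result = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 50, "temperature": 0.0},
    )
    return parse_judge_score(result["output"]["message"]["content"][0]["text"])


def judge_all(client: Any, pairs: List[Tuple[str, str]]) -> List[int]:
    """Score every (query, response) pair in the evaluation set."""
    return [judge_response(client, query, response) for query, response in pairs]
```

Running `judge_all` once on the optimized responses and once on the unoptimized ones gives two score lists that can be compared per trial.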

In the following example, we repeated this process for 20 trials, capturing user satisfaction scores each time. The overall score for the dataset is the sum of the individual user satisfaction scores.

Result analysis

The following line chart shows the performance improvement of the optimized solution over the unoptimized one. Green areas indicate positive improvements, whereas red areas show negative changes.

Across the 20 trials, the mean satisfaction score from the unoptimized prompt was 0.8696, whereas the mean satisfaction score from the optimized prompt was 0.9063. Therefore, our method outperforms the baseline by 3.67 percentage points (0.9063 − 0.8696 = 0.0367), a relative improvement of about 4.2%.

Finally, we ran a paired sample t-test to compare satisfaction scores from the optimized and unoptimized prompts. This statistical test validated whether prompt optimization significantly improved response quality. See the following code:
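The test code is not reproduced in this text. To show what the paired sample t-test computes, the following sketch derives the t statistic and degrees of freedom from two equal-length score lists using only the standard library. The function name is hypothetical. Obtaining the p-value needs the t distribution, for which `scipy.stats.ttest_rel(optimized, unoptimized)` is a common choice.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(
    optimized: Sequence[float], unoptimized: Sequence[float]
) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired samples."""
    if len(optimized) != len(unoptimized):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(optimized, unoptimized)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("t statistic is undefined when all differences are equal")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1
```

With one score per trial for each method, the 20 trials described above would give 19 degrees of freedom.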

After running the t-test, we got a p-value of 0.000762, which is less than 0.05. Therefore, the performance boost of optimized prompts over unoptimized prompts is statistically significant.

Key takeaways

We learned the following key takeaways from this solution:

- Few-shot prompting improves query response – Using highly similar few-shot examples leads to significant improvements in response quality.

- Amazon Titan Text Embeddings enables contextual similarity – The model produces embeddings that facilitate effective similarity searches.

- Statistical validation confirms effectiveness – A p-value of 0.000762 indicates that the improvement in satisfaction scores from our optimized approach is unlikely to be due to chance.

- Improved business impact – This approach can deliver measurable business value through improved AI assistant performance. The 3.67 percentage point increase in satisfaction scores could translate to tangible outcomes: HR departments might see fewer policy misinterpretations (reducing compliance risks), and customer service teams might see fewer escalated tickets. Because the solution keeps learning from feedback, it can improve over time without requiring specialized ML expertise or infrastructure investments.

Limitations

Although the system shows promise, its performance heavily depends on the availability and volume of user feedback, especially in closed-domain applications. In scenarios where only a handful of feedback examples are available, the model might struggle to generate meaningful optimizations or fail to capture the nuances of user preferences effectively. Additionally, the current implementation assumes that user feedback is reliable and representative of broader user needs, which might not always be the case.

Next steps

Future work could focus on expanding this system to support multilingual queries and responses, enabling broader applicability across diverse user bases. Incorporating Retrieval Augmented Generation (RAG) techniques could further enhance context handling and accuracy for complex queries. Additionally, exploring ways to address the limitations in low-feedback scenarios, such as synthetic feedback generation or transfer learning, could make the approach more robust and versatile.

Conclusion

In this post, we demonstrated the effectiveness of query optimization using Amazon Bedrock, few-shot prompting, and user feedback to significantly enhance response quality. By aligning responses with user-specific preferences, this approach alleviates the need for expensive model fine-tuning, making it practical for real-world applications. Its flexibility makes it suitable for chat-based assistants across various domains, such as ecommerce, customer service, and hospitality, where high-quality, user-aligned responses are essential.

To learn more, refer to the following resources:

- Black-Box Prompt Optimization: Aligning Large Language Models without Model Training

- Approaches to Few-Shot Learning

- Recent Advances in LLM Feedback Integration

- Frameworks for Query Optimization

About the Authors

Tanay Chowdhury is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Parth Patwa is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Yingwei Yu is an Applied Science Manager at the Generative AI Innovation Center at Amazon Web Services.
