Large Reasoning Models (LRMs) like OpenAI’s o1 and o3, DeepSeek-R1, Grok 3.5, and Gemini 2.5 Pro have shown strong capabilities in long chain-of-thought (CoT) reasoning, often displaying advanced behaviors such as self-correction, backtracking, and verification, collectively known as “aha moments.” These behaviors have been observed to emerge through outcome-driven reinforcement learning (RL) without the need for supervised fine-tuning. DeepSeek-R1 and its open-source replications (e.g., TinyZero and Logic-RL) have demonstrated that carefully designed RL pipelines, using rule-based rewards, curriculum learning, and structured training, can induce such reflective reasoning abilities. However, these emergent behaviors tend to be unpredictable and inconsistent, which limits their practical reliability and scalability.

To address this, researchers have explored structured RL frameworks that target specific reasoning types, such as deduction, abduction, and induction. These approaches involve aligning specialist models, merging them in parameter space, and applying domain-specific continual RL. Tools like Logic-RL use rule-conditioned RL to solve logic puzzles, improving transferability to tasks like math reasoning. Meanwhile, other works propose mechanisms to enhance reasoning robustness, such as training models to reason both forwards and backwards, or iteratively self-critiquing their outputs. Studies analyzing “aha moments” suggest that these behaviors stem from internal shifts in uncertainty, latent representation, and self-assessment, offering new insights into engineering more reliable reasoning models.

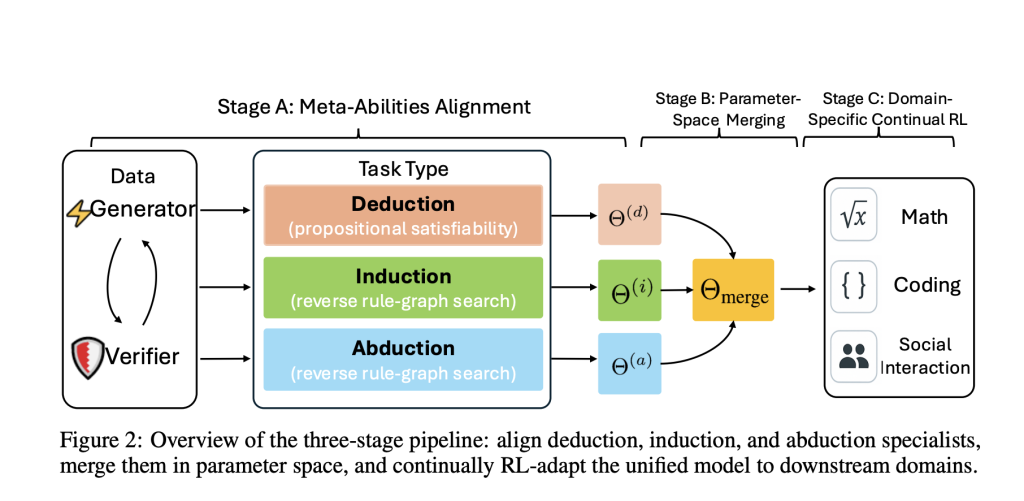

Researchers from the National University of Singapore, Tsinghua University, and Salesforce AI Research address the limitations of relying on spontaneous “aha moments” in large language models by explicitly aligning models with three core reasoning abilities: deduction, induction, and abduction. They introduce a three-stage pipeline: individual meta-ability alignment, parameter-space merging, and domain-specific reinforcement learning. Using a programmatically generated, self-verifiable task suite, their approach improves accuracy over instruction-tuned baselines by more than 10% on diagnostic tasks, with further gains from domain-specific RL. This structured alignment framework offers a scalable, generalizable method for improving reasoning across math, coding, and science domains.
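To make "programmatically generated, self-verifiable" concrete, here is a minimal sketch of a satisfiability-style task whose answers can be checked by a rule rather than by a human or a learned judge. It is an illustration only, not the authors' generator: the function names (`make_sat_task`, `satisfies`, `reward`), the clause format (signed integers for literals), and the binary reward are all assumptions made for this example.

```python
import random


def make_sat_task(num_vars=4, num_clauses=6, seed=0):
    """Generate a 3-literal CNF formula that is guaranteed to be satisfiable.

    Hypothetical generator: a hidden ("planted") assignment is drawn first,
    and only clauses that it satisfies are kept.
    """
    rng = random.Random(seed)
    planted = {v: rng.choice([True, False]) for v in range(1, num_vars + 1)}
    clauses = []
    while len(clauses) < num_clauses:
        chosen = rng.sample(range(1, num_vars + 1), 3)
        # A positive literal v means "v is True"; -v means "v is False".
        clause = [v if rng.random() < 0.5 else -v for v in chosen]
        if any(planted[abs(lit)] == (lit > 0) for lit in clause):
            clauses.append(clause)
    return clauses, planted


def satisfies(clauses, assignment):
    """Return True if every clause has at least one true literal."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def reward(clauses, answer):
    """Rule-based reward (assumed binary): 1.0 for a valid assignment, else 0.0."""
    variables = {abs(lit) for clause in clauses for lit in clause}
    if not isinstance(answer, dict) or not all(v in answer for v in variables):
        return 0.0  # malformed or incomplete answers earn nothing
    return 1.0 if satisfies(clauses, answer) else 0.0
```

Because the checker only evaluates the formula, any satisfying assignment earns the reward, not just the planted one. That property is what lets such tasks be generated and scored at scale without labels.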

The researchers designed tasks aligned with deduction, induction, and abduction by using a structured “given two, infer the third” format based on hypothesis (H), rule (R), and observation (O). Deduction is framed as satisfiability checking, induction as masked-sequence prediction, and abduction as reverse rule-graph inference. These tasks are synthetically generated and automatically verified. The training pipeline includes three stages: (A) independently training models for each reasoning type using REINFORCE++ with structured rewards, (B) merging the resulting models through weighted parameter interpolation, and (C) fine-tuning the merged model on domain-specific data via reinforcement learning. Running the same domain-specific RL from an instruction-tuned checkpoint provides the comparison that isolates the benefit of meta-ability alignment.
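Stage (B), weighted parameter interpolation, can be sketched in a few lines. The version below is a simplified illustration under stated assumptions: each specialist's parameters are represented as a dictionary of flat float lists (in practice these would be tensors from a framework checkpoint), and the function name `merge_parameters` and the example weights are hypothetical rather than taken from the paper.

```python
def merge_parameters(experts, weights):
    """Linearly combine specialist checkpoints: merged = sum_i w_i * theta_i.

    experts: {name: {param_name: [float, ...]}}  (assumed toy representation)
    weights: {name: float}                       (assumed, one weight per specialist)
    """
    names = list(experts)
    reference = experts[names[0]]
    for name in names:
        # All specialists must share one architecture for interpolation to make sense.
        if set(experts[name]) != set(reference):
            raise ValueError(f"parameter names differ for specialist {name!r}")
    merged = {}
    for key, ref_values in reference.items():
        for name in names:
            if len(experts[name][key]) != len(ref_values):
                raise ValueError(f"shape mismatch for {key!r} in {name!r}")
        merged[key] = [
            sum(weights[name] * experts[name][key][j] for name in names)
            for j in range(len(ref_values))
        ]
    return merged


# Toy usage with three specialists and illustrative weights.
experts = {
    "deduction": {"layer.weight": [1.0, 2.0]},
    "induction": {"layer.weight": [3.0, 4.0]},
    "abduction": {"layer.weight": [5.0, 6.0]},
}
weights = {"deduction": 1.0, "induction": 0.5, "abduction": 0.5}
merged = merge_parameters(experts, weights)
```

Merging in parameter space requires no further training, which is why it is cheap compared with jointly training one model on all three task types. The sketch does not normalize the weights; whether they should sum to one is a design choice left open here.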

The study evaluates models aligned with the three meta-abilities (deduction, induction, and abduction) using a curriculum learning setup across difficulty levels. Models trained on synthetic tasks generalize strongly to seven unseen math, code, and science benchmarks. At both 7B and 32B scales, meta-ability–aligned and merged models consistently outperform instruction-tuned baselines, with the merged model offering the highest gains. Continued domain-specific RL from these merged checkpoints (Domain-RL-Meta) leads to further improvements over standard RL fine-tuning (Domain-RL-Ins), especially on math benchmarks. Overall, the alignment strategy enhances reasoning abilities, and its benefits scale with model size, raising performance ceilings across tasks.
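The article does not specify how the curriculum moves between difficulty levels, so the following is only one plausible scheme, sketched under assumptions: training advances to the next level once the average reward over a recent window reaches a threshold. The class name `Curriculum`, the window size, and the threshold are all hypothetical.

```python
from collections import deque


class Curriculum:
    """Advance through difficulty levels when recent rewards are high enough.

    Assumed promotion rule: move up one level when the mean reward over the
    last `window` episodes is at least `threshold`.
    """

    def __init__(self, num_levels, window=4, threshold=0.8):
        self.level = 1
        self.num_levels = num_levels
        self.threshold = threshold
        self.recent = deque(maxlen=window)

    def record(self, reward):
        """Log one episode's reward and return the level to sample next."""
        self.recent.append(reward)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and self.level < self.num_levels:
            if sum(self.recent) / len(self.recent) >= self.threshold:
                self.level += 1
                self.recent.clear()  # start fresh statistics at the new level
        return self.level
```

Clearing the window after each promotion prevents rewards earned on easier tasks from triggering a second promotion immediately.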

In conclusion, the study shows that large reasoning models can develop advanced problem-solving skills without depending on unpredictable “aha moments.” By aligning models with three core reasoning abilities—deduction, induction, and abduction—using self-verifiable tasks, the authors create specialist agents that can be effectively combined into a single model. This merged model outperforms instruction-tuned baselines by over 10% on diagnostic tasks and up to 2% on real-world benchmarks. When used as a starting point for domain-specific reinforcement learning, it raises performance by another 4%. This modular, systematic training approach offers a scalable and controllable foundation for building reliable, interpretable reasoning systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post Beyond Aha Moments: Structuring Reasoning in Large Language Models appeared first on MarkTechPost.
