The pursuit of better performance has pushed researchers to scale language models up, typically by increasing the number of parameters or the amount of computation the models perform. As a result, developing and deploying language models now depends heavily on substantial computational resources and memory.

Despite these advances, increasing model size or generating more tokens to strengthen reasoning creates significant challenges. Parameter-scaling methods such as Dense Scaling and Mixture-of-Experts Scaling increase the number of trainable weights and therefore demand much more memory. Inference-time scaling, on the other hand, requires models to generate longer sequences or perform multiple reasoning steps, which adds latency and slows deployment. While effective, these approaches do not suit every scenario, and they do little to improve deployment efficiency in low-resource settings such as mobile devices and embedded systems.

Researchers from Zhejiang University and Alibaba Group proposed a new approach called PARSCALE (Parallel Scaling). Rather than increasing model size or output length, it increases the amount of parallel computation the model performs during both training and inference. By applying multiple learnable transformations to the input, the model executes several forward passes in parallel and dynamically aggregates their outputs. PARSCALE leaves the model's parameter count nearly unchanged while increasing computational diversity, making it adaptable to various tasks and model architectures without specialized datasets or changes to training protocols.

At the technical level, PARSCALE appends several distinct, learnable prefixes to the same input, producing multiple parallel versions (streams). The model processes these streams simultaneously, and their outputs are combined by a dynamic weighted sum whose weights are computed by a multilayer perceptron. Each stream adds only about 0.2% extra parameters, a minor addition compared with full parameter scaling. Through prefix tuning, each parallel stream is distinguished by its own key-value cache while the model's weights are shared across all streams, which keeps memory use efficient. The approach also benefits from GPU-friendly parallelization, which helps keep latency low despite the additional computation. Because the core architecture is left unmodified, the method scales cleanly and can even be applied to frozen pretrained models by training only the new prefix and aggregation parameters.
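
The stream-and-aggregate structure described above can be illustrated with a small, dependency-free Python sketch. It is a toy built on several assumptions, not the authors' implementation: the shared backbone is a single tanh layer, each stream's learnable prefix is modeled as an additive offset on the input (real prefix tuning instead prepends learned key-value vectors inside the attention layers), the aggregation MLP takes the concatenated stream outputs and produces one softmax weight per stream, and the streams run in a Python loop rather than as a batched GPU computation. The class name `ParallelScaleSketch` and all of its parameters are hypothetical.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def shared_model(x, w):
    # Toy stand-in for the shared backbone: one linear layer followed by tanh.
    return [math.tanh(z) for z in matvec(w, x)]


class ParallelScaleSketch:
    """Hypothetical toy illustrating P parallel streams plus a learned aggregator."""

    def __init__(self, dim, num_streams, hidden=16, seed=0):
        rng = random.Random(seed)
        self.num_streams = num_streams
        # Backbone weights: one copy, reused by every stream (could stay frozen).
        self.w_model = [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in range(dim)]
        # One learnable "prefix" per stream; simplified here to an additive input offset.
        self.prefixes = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(num_streams)]
        # Aggregation MLP: concatenated stream outputs -> hidden (ReLU) -> one logit per stream.
        self.w1 = [[rng.gauss(0, 0.1) for _ in range(dim * num_streams)] for _ in range(hidden)]
        self.w2 = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in range(num_streams)]

    def forward(self, x):
        # Run the same backbone on each prefixed copy of the input.
        outputs = []
        for prefix in self.prefixes:
            xi = [a + b for a, b in zip(x, prefix)]
            outputs.append(shared_model(xi, self.w_model))
        # Input-dependent aggregation weights from the MLP, normalized with softmax.
        concat = [v for out in outputs for v in out]
        h = [max(0.0, z) for z in matvec(self.w1, concat)]
        weights = softmax(matvec(self.w2, h))
        # Dynamic weighted sum of the stream outputs.
        y = [sum(weights[p] * outputs[p][d] for p in range(self.num_streams))
             for d in range(len(x))]
        return y, weights


model = ParallelScaleSketch(dim=4, num_streams=8)
y, weights = model.forward([0.5, -1.0, 0.25, 2.0])
print(len(y), round(sum(weights), 6))
```

In the frozen-backbone setting the article mentions, only `prefixes`, `w1`, and `w2` would need training; `w_model` is shared by every stream, which is why adding streams costs extra computation rather than extra backbone parameters.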

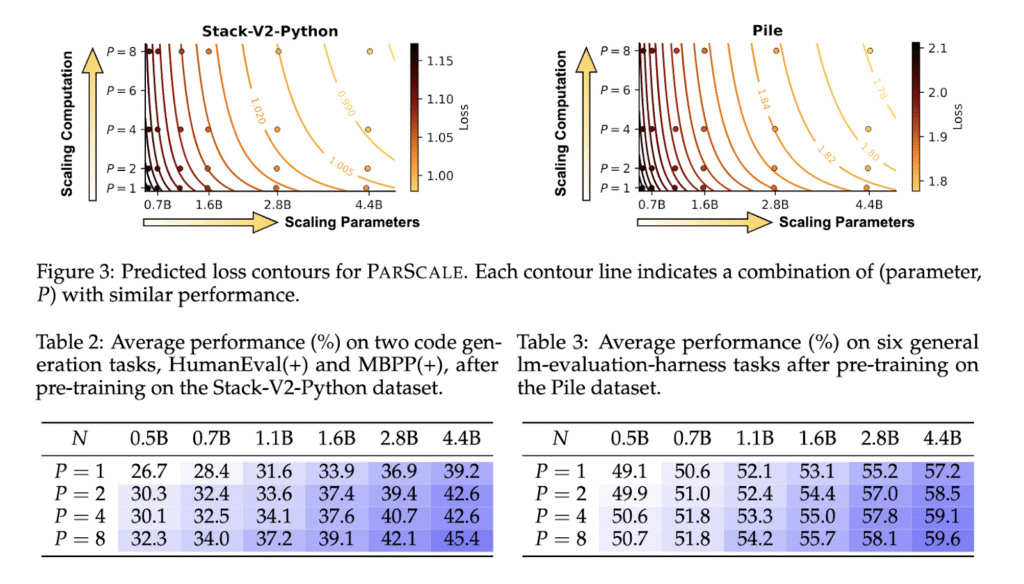

The researchers conducted extensive experiments on models ranging from 0.5B to 4.4B parameters, with the number of parallel streams P set from 1 to 8. When trained on 42 billion tokens, models with P = 8 performed on par with models of up to 4.4 billion parameters while requiring a far smaller increase in memory and latency. Specifically, for a 1.6B model, PARSCALE incurred a 22× smaller memory increase and a 6× smaller latency increase than parameter scaling achieving the same performance. On downstream tasks, PARSCALE yielded improvements of up to 34% on GSM8K and 23% on MMLU. Coding performance also improved significantly: models with 1.6B parameters and P = 8 achieved results comparable to those of a 4.4B-parameter model. The method also proved effective during post-training and parameter-efficient fine-tuning, maintaining high performance even when the core model parameters were kept frozen.
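
To put the 0.2%-per-stream figure in perspective, the article's own numbers allow a back-of-the-envelope comparison for the 1.6B model with P = 8, which matched a 4.4B model on coding. The sketch below assumes the per-stream overhead adds up linearly with P and counts parameters only; it ignores the KV cache and activations, so it is not the memory measurement behind the reported 22× figure.

```python
# Figures quoted in the article (overhead assumed to add up linearly per stream).
base_params = 1.6e9          # 1.6B-parameter PARSCALE model
overhead_per_stream = 0.002  # ~0.2% extra parameters per stream
streams = 8                  # P = 8
dense_equivalent = 4.4e9     # comparable dense model on coding

# Extra parameters each approach adds on top of the 1.6B base model.
parscale_extra = base_params * overhead_per_stream * streams
dense_extra = dense_equivalent - base_params

print(f"PARSCALE extra parameters: {parscale_extra / 1e6:.1f}M")
print(f"Dense scaling extra parameters: {dense_extra / 1e9:.1f}B")
print(f"Ratio: {dense_extra / parscale_extra:.0f}x")
```

Under these assumptions, PARSCALE adds roughly 25.6M parameters versus about 2.8B for the dense model, a gap of roughly a hundredfold in parameter count alone.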

This paper introduces a strategy that rethinks how language models can be scaled. Instead of inflating model size or the number of inference steps, it focuses on reusing existing parameters more efficiently through parallel computation. The approach addresses both time and memory inefficiencies while maintaining or improving performance, marking a compelling shift in scaling methods and pointing toward effective deployment of advanced models in resource-constrained environments.

The post This AI Paper Introduces PARSCALE (Parallel Scaling): A Parallel Computation Method for Efficient and Scalable Language Model Deployment appeared first on MarkTechPost.