Large language models are now being used for evaluation and judgment tasks, extending beyond their traditional role of text generation. This has led to “LLM-as-a-Judge,” where models assess outputs from other language models. Such evaluations are essential in reinforcement learning pipelines, benchmark testing, and system alignment. These judge models rely on internal chain-of-thought reasoning, mirroring human judgment processes. Unlike conventional reward models that provide direct scores, these models simulate thoughtful evaluation, making them better suited for complex tasks such as math problem-solving, ethical reasoning, and user intent interpretation. Their ability to interpret and validate responses across languages and domains enhances automation and scalability in language model development.

However, current AI judgment systems face issues with inconsistency and shallow reasoning. Many rely on basic metrics or static annotations, which are inadequate for evaluating subjective or open-ended prompts. A common problem is position bias, where the order of answers affects the final decision, compromising fairness. Also, collecting human-annotated data at scale is costly and time-consuming, limiting the generalizability of these models.

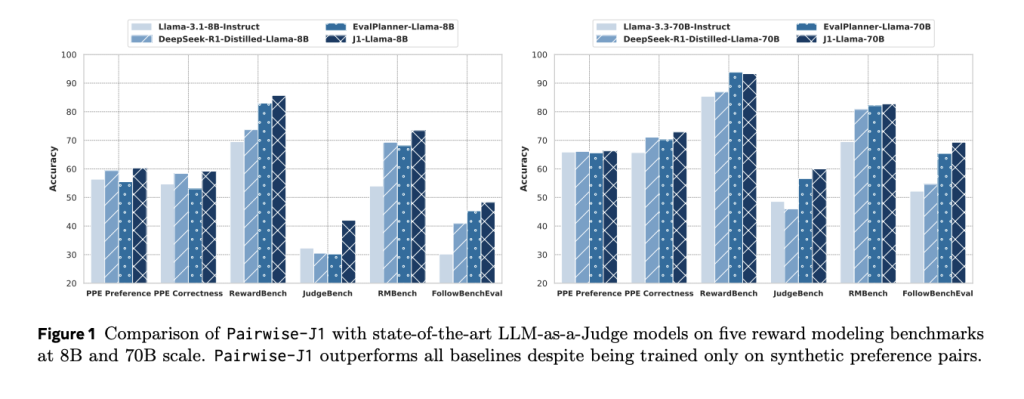

Several existing approaches have addressed these challenges, but with limited success. Systems like EvalPlanner and DeepSeek-GRM rely on human-labeled data or rigid training schemes, which limit adaptability across task types. Others, like DeepSeek-R1, depend on distillation from large models but perform poorly on ambiguous prompts. Static datasets and offline tuning strategies hinder dynamic reasoning, while newer methods using score formatting or structured prompts have shown minimal accuracy improvements. Despite larger datasets and models, performance gains in traditional systems have stalled.

Researchers from Meta’s GenAI and FAIR teams introduced J1 to address these limitations. J1 trains judgment models with a reinforcement learning framework, so they learn from verifiable reward signals. The team used synthetic data to create a high-quality and a low-quality response to each prompt, turning subjective tasks into verifiable pairwise judgments. The resulting synthetic dataset contained 22,000 preference pairs: 17,000 built from prompts in the WildChat corpus and 5,000 from mathematical queries. These were used to train two versions of J1, J1-Llama-8B and J1-Llama-70B, initialized from Llama-3.1-8B-Instruct and Llama-3.3-70B-Instruct, respectively. The models were trained using Group Relative Policy Optimization (GRPO), a reinforcement learning algorithm that removes the need for a separate critic model and can speed up convergence.
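To make the "no critic model" point concrete, here is a minimal sketch of the group-relative advantage that GRPO is built around: several judgments are sampled for the same prompt, and each one's reward is normalized against the mean and standard deviation of its own group instead of a learned value estimate. The function name, the `eps` term, and the use of the population standard deviation are illustrative assumptions, not details taken from the J1 training code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled response's reward against its own group.

    `rewards` holds one scalar reward per response sampled for the SAME prompt.
    The group mean plays the role a critic's baseline would otherwise play.
    `eps` (an assumption) avoids division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations may differ here
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four judgments sampled for one prompt: 1.0 = correct verdict, 0.0 = wrong.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])

# A group where every sample earns the same reward carries no learning signal.
flat = group_relative_advantages([1.0, 1.0, 1.0])
```

Correct judgments end up with positive advantages and incorrect ones with negative advantages, while a group with identical rewards yields all zeros.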

At the core of the training strategy is position-agnostic learning: both the (x, a, b) and (x, b, a) input orderings are used in training to counter position bias. In addition, a consistency-based reward is granted only when the model delivers the correct verdict under both answer orderings. This pushes the judge to be fair and reliable regardless of the order in which answers appear. The training framework supports several variations: models can output a final verdict, a numeric score for each answer, or both. A pointwise judging variant is also included, which evaluates a single response with a score from 0 to 10. These formats make J1 a versatile system that can judge a wide range of tasks.
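The position-agnostic setup and the consistency-based reward can be sketched in a few lines. This is a simplified illustration under stated assumptions: the helper names, the dictionary layout, and the "first"/"second" verdict labels are hypothetical, and the actual J1 reward may include further terms not described here.

```python
def make_position_agnostic_pair(prompt, chosen, rejected):
    """Build both orderings of one preference pair, with the gold verdict for each.

    `chosen` is the higher-quality response and `rejected` the lower-quality one.
    """
    return [
        {"prompt": prompt, "first": chosen, "second": rejected, "gold": "first"},
        {"prompt": prompt, "first": rejected, "second": chosen, "gold": "second"},
    ]


def consistency_reward(verdicts, examples):
    """Return 1.0 only if the judge is correct under BOTH orderings, else 0.0.

    `verdicts` holds the judge's verdict ("first" or "second") for each example,
    in the same order as `examples`.
    """
    if len(verdicts) != len(examples):
        raise ValueError("need exactly one verdict per ordering")
    all_correct = all(v == ex["gold"] for v, ex in zip(verdicts, examples))
    return 1.0 if all_correct else 0.0


examples = make_position_agnostic_pair("What is 2 + 2?", "4", "5")

consistent = consistency_reward(["first", "second"], examples)
# A judge that always favors the first slot is right once but earns nothing.
position_biased = consistency_reward(["first", "first"], examples)
```

Under this scheme, a judge that simply prefers whichever answer appears first gets one ordering right by luck but still receives zero reward, which is the behavior the consistency requirement is meant to discourage.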

The J1 models show substantial performance improvements over existing systems. On the widely used Preference Proxy Evaluations (PPE) benchmark, J1-Llama-70B achieved an overall accuracy of 69.6%, outperforming models trained with over ten times more data. By comparison, DeepSeek-GRM-27B and EvalPlanner-Llama-70B scored 67.2% and 65.6%, respectively. Even the smaller J1-Llama-8B model exceeded baseline systems like EvalPlanner-Llama-8B, scoring 62.2% versus 55.5%. J1 also performed strongly on other benchmarks such as RewardBench, RM-Bench, JudgeBench, and FollowBenchEval, demonstrating robust generalization across verifiable and subjective tasks. The gains are notable given how little training data J1 uses compared with the much larger datasets behind other models.

Several Key Takeaways from the Research on J1:

- J1 is trained using 22,000 synthetic preference pairs, including 17K from WildChat and 5K from MATH tasks.

- The training uses GRPO, which streamlines RL by avoiding the need for separate critic models.

- It introduces position-agnostic learning, reducing position bias through consistency-based rewards.

- Two main model variants, J1-Llama-8B and J1-Llama-70B, were trained on modest data but outperformed large-scale models.

- J1-Llama-70B scored 69.6% on PPE, exceeding DeepSeek-GRM-27B (67.2%) and EvalPlanner-Llama-70B (65.6%).

- Supports multiple judgment formats: pairwise with verdicts, pairwise with scores, and pointwise scores.

- Surpasses OpenAI’s o1-mini and models distilled from DeepSeek-R1 on several tasks.

- Demonstrates that reasoning quality, not just dataset size, is critical for accurate judgments.

- J1’s framework makes it a generalist judge applicable to verifiable and non-verifiable tasks.
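As a rough illustration of how the score-based judgment formats listed above can be reduced to a single verdict, the sketch below parses a pointwise score from a judge's output and compares two scores. The `<score>` tag format, the function names, and the tie handling are assumptions made for this example; the article does not specify J1's output template.

```python
import re


def parse_pointwise_score(text):
    """Extract a 0-10 score from judge output, or None if missing or out of range.

    Assumes (hypothetically) that the judge ends its reasoning with
    a tag such as <score>7</score>.
    """
    match = re.search(r"<score>\s*(\d+(?:\.\d+)?)\s*</score>", text)
    if match is None:
        return None
    score = float(match.group(1))
    return score if 0.0 <= score <= 10.0 else None


def verdict_from_scores(score_a, score_b):
    """Turn two numeric scores into a pairwise verdict; equal scores are a tie."""
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    return "tie"


score_a = parse_pointwise_score("The answer is complete and correct. <score>8</score>")
score_b = parse_pointwise_score("The answer skips a key step. <score>5</score>")
verdict = verdict_from_scores(score_a, score_b)
```

Because each response is scored on its own in the pointwise setting, the order in which responses are compared cannot affect the outcome, although equal scores leave the pair undecided.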

In conclusion, the J1 approach changes how judgment models can be trained and evaluated. Combining synthetic data with reinforcement learning avoids the need for costly human annotations while promoting fair, logical, and consistent evaluations. The work shows that reasoning-driven judges can outperform models that rely heavily on data volume and static alignment techniques. It also supports the idea that judgment models should be thinkers first and scorers second. With performance that rivals and often surpasses state-of-the-art systems, J1 sets a strong reference point for training LLM-as-a-Judge systems.

The post Meta Researchers Introduced J1: A Reinforcement Learning Framework That Trains Language Models to Judge With Reasoned Consistency and Minimal Data appeared first on MarkTechPost.