LLM-based agents are increasingly used across applications because they can handle complex tasks and take on multiple roles. A key component of these agents is memory, which lets an agent store and recall information, reflect on past knowledge, and make informed decisions. Memory is especially important in tasks involving long-term interaction or role-playing, where it captures past experiences and helps maintain role consistency. By letting the agent remember past interactions with its environment and use them to guide future behavior, memory becomes an essential module in such systems.

Despite the growing focus on improving memory mechanisms in LLM-based agents, current memory models are built with differing implementation strategies and lack a standardized framework. This fragmentation makes it hard for developers and researchers to test or compare models, because their designs are inconsistent. In addition, common functionalities such as data retrieval and summarization are frequently reimplemented across models, which duplicates effort. Many academic models are also deeply embedded within specific agent architectures, making them hard to reuse or adapt for other systems. This highlights the need for a unified, modular framework for memory in LLM agents.

Researchers from Renmin University and Huawei have developed MemEngine, a unified and modular library designed to support developing and deploying advanced memory models for LLM-based agents. MemEngine organizes memory systems into three hierarchical levels—functions, operations, and models—enabling efficient and reusable design. It supports many existing memory models, allowing users to switch, configure, and extend them easily. The framework also includes tools for adjusting hyperparameters, saving memory states, and integrating with popular agents like AutoGPT. With comprehensive documentation and open-source access, MemEngine aims to streamline memory model research and promote widespread adoption.

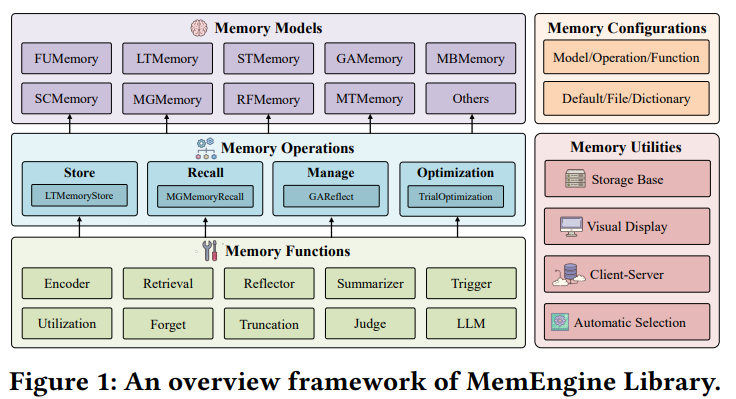

MemEngine is a unified and modular library designed to enhance the memory capabilities of LLM-based agents. Its architecture consists of three layers: a foundational layer with basic functions, a middle layer that manages core memory operations (like storing, recalling, managing, and optimizing information), and a top layer that includes a collection of advanced memory models inspired by recent research. These include models like FUMemory (long-context memory), LTMemory (semantic retrieval), GAMemory (self-reflective memory), and MTMemory (tree-structured memory), among others. Each model is implemented using standardized interfaces, making it easy to switch or combine them. The library also provides utilities such as encoders, summarizers, retrievers, and judges, which are used to build and customize memory operations. Additionally, MemEngine includes tools for visualization, remote deployment, and automatic model selection, offering both local and server-based usage options.
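The three-layer idea can be illustrated with a small sketch. This is not MemEngine's actual API: every name below (`keyword_overlap`, `ListStore`, `FullRecall`, `TopKRecall`, `MemoryModel`) is hypothetical, and the keyword-overlap score is a toy stand-in for the encoders and retrievers the library provides. The point is only to show how functions can be composed into operations, and operations into models that share one interface.

```python
from abc import ABC, abstractmethod
from typing import List


# Function level: a small reusable utility (hypothetical, toy scoring).
def keyword_overlap(query: str, text: str) -> int:
    """Count the lowercase words shared by the query and the text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


# Operation level: storing and recalling, built on top of functions.
class ListStore:
    """Store operation: append each observation to an in-memory list."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def store(self, observation: str) -> None:
        self.items.append(observation)


class Recall(ABC):
    """Common interface that every recall operation implements."""

    @abstractmethod
    def __call__(self, items: List[str], query: str) -> str:
        ...


class FullRecall(Recall):
    """Return everything stored, in insertion order (long-context style)."""

    def __call__(self, items: List[str], query: str) -> str:
        return "\n".join(items)


class TopKRecall(Recall):
    """Return the k stored items that best match the query."""

    def __init__(self, k: int = 1) -> None:
        self.k = k

    def __call__(self, items: List[str], query: str) -> str:
        ranked = sorted(items, key=lambda t: keyword_overlap(query, t), reverse=True)
        return "\n".join(ranked[: self.k])


# Model level: a memory model is a particular combination of operations.
class MemoryModel:
    def __init__(self, recall: Recall) -> None:
        self._store = ListStore()
        self._recall = recall

    def store(self, observation: str) -> None:
        self._store.store(observation)

    def recall(self, query: str) -> str:
        return self._recall(self._store.items, query)
```

Because both models expose the same `store` and `recall` methods, an agent written against `MemoryModel` could swap `FullRecall()` for `TopKRecall(k=1)` without other changes, which is the kind of interchangeability the standardized interfaces are meant to provide.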

Unlike many existing libraries that only support basic memory storage and retrieval, MemEngine supports advanced features such as reflection, optimization, and customizable configurations. Its configuration module allows developers to fine-tune hyperparameters and prompts at various levels, either through static files or dynamic inputs. Developers can choose from default settings, manually configure parameters, or rely on automatic selection tailored to their task. The library also supports integration with tools like vLLM and AutoGPT. For those building new memory models, MemEngine enables customization at the function, operation, and model levels, and offers extensive documentation and examples. Compared with other agent and memory libraries, MemEngine offers a more comprehensive and research-aligned memory framework.
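One common way to combine default settings, a static file, and dynamic inputs is to overlay them in order of priority. The sketch below assumes that pattern; it is not taken from MemEngine, and the names `DEFAULT_CONFIG`, `merge_config`, `load_config`, and the keys inside the configuration are all hypothetical. The static layer is passed as JSON text so the example stays self-contained; in practice it would be read from a file.

```python
import json
from copy import deepcopy
from typing import Optional

# Hypothetical defaults: hyperparameters and prompts grouped per operation.
DEFAULT_CONFIG = {
    "recall": {"top_k": 3, "prompt": "Relevant memories:\n{memories}"},
    "store": {"max_items": 1000},
}


def merge_config(base: dict, override: dict) -> dict:
    """Recursively overlay `override` onto a copy of `base`."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are nested sections: merge them key by key.
            merged[key] = merge_config(merged[key], value)
        else:
            # Scalar value or new key: the override wins.
            merged[key] = deepcopy(value)
    return merged


def load_config(static_json: Optional[str] = None,
                dynamic: Optional[dict] = None) -> dict:
    """Apply defaults first, then the static layer, then dynamic inputs."""
    config = deepcopy(DEFAULT_CONFIG)
    if static_json is not None:
        config = merge_config(config, json.loads(static_json))
    if dynamic is not None:
        config = merge_config(config, dynamic)
    return config
```

For example, `load_config('{"recall": {"top_k": 5}}', {"recall": {"prompt": "Use these notes:\n{memories}"}})` keeps the default `store` section, takes `top_k` from the static layer, and takes the prompt from the dynamic input, leaving `DEFAULT_CONFIG` itself unchanged.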

In conclusion, MemEngine is a unified and modular library designed to support the development of advanced memory models for LLM-based agents. While large language model agents have seen growing use across industries, their memory systems remain a critical focus. Despite numerous recent advancements, no standardized framework for implementing memory models exists. MemEngine addresses this gap by offering a flexible and extensible platform that integrates various state-of-the-art memory approaches. It supports easy development and plug-and-play usage. Looking ahead, the authors aim to extend the framework to include multi-modal memory, such as audio and visual data, for broader applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers from Renmin University and Huawei Propose MemEngine: A Unified Modular AI Library for Customizing Memory in LLM-Based Agents appeared first on MarkTechPost.
