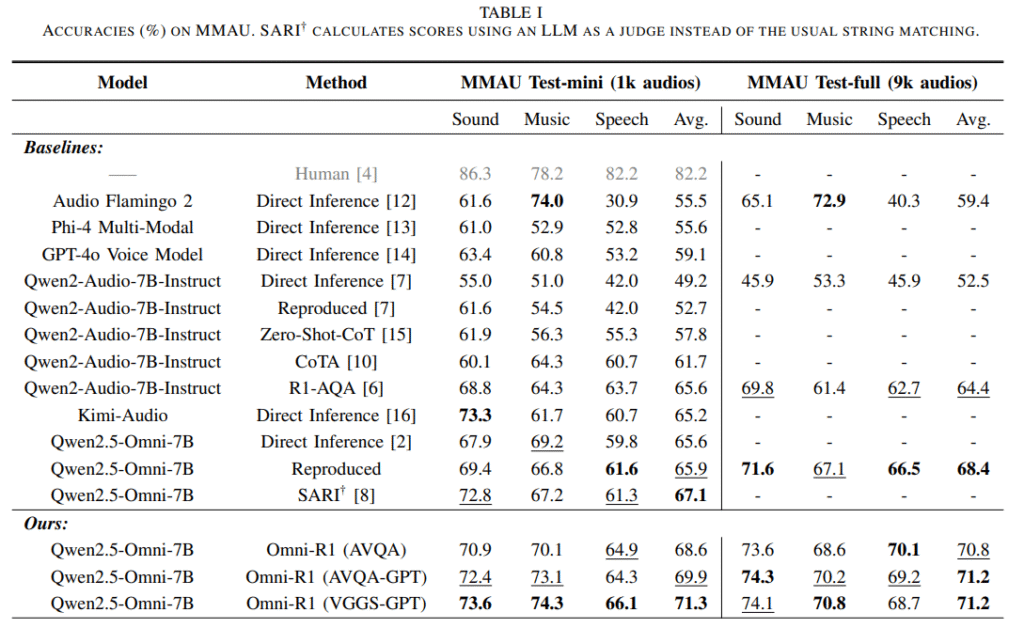

Recent work has shown that reinforcement learning (RL) can significantly enhance the reasoning abilities of LLMs. Building on this progress, the study aims to improve Audio LLMs, models that process audio and text to perform tasks such as question answering. MMAU is a widely used benchmark for evaluating these models, featuring multiple-choice questions on sounds, speech, and music, some of which require external knowledge. A prior approach, R1-AQA, used GRPO (Group Relative Policy Optimization) to fine-tune the Qwen2-Audio model on the AVQA dataset, achieving state-of-the-art (SOTA) results on MMAU. Inspired by this, the authors applied GRPO to fine-tune Qwen2.5-Omni-7B, a newer multimodal model, further improving performance. They also introduced a method to automatically generate audio QA data, which led to even better results.

Compared to methods like SARI, which uses a more complex mix of supervised fine-tuning and RL with structured reasoning, the authors’ approach is simpler, relying solely on RL without explicit reasoning steps. They also conducted experiments with text-only inputs to investigate the role of GRPO in performance gains. Surprisingly, fine-tuning the models using just text data yielded nearly the same improvements as training with audio and text. This finding suggests that GRPO primarily enhances the model’s reasoning ability through text, significantly contributing to its improved performance in audio QA tasks.

Researchers from MIT CSAIL, Goethe University, IBM Research, and others introduce Omni-R1, a version of the multimodal LLM Qwen2.5-Omni fine-tuned with the GRPO reinforcement learning method. Trained on the AVQA dataset, Omni-R1 sets new state-of-the-art results on the MMAU benchmark across all audio categories. Surprisingly, much of the improvement stems from enhanced text-based reasoning rather than from the audio input: fine-tuning with text-only data also led to notable performance gains. Additionally, the team generated large-scale audio QA datasets using ChatGPT, further boosting accuracy. Their work highlights the significant impact of text reasoning on audio LLM performance, and the team plans to release all resources publicly.

The Omni-R1 model fine-tunes Qwen2.5-Omni using the GRPO reinforcement learning method with a simple prompt format that allows direct answer selection, which keeps training memory-efficient enough for 48GB GPUs. GRPO avoids a value function by comparing a group of sampled outputs against each other, using a reward based solely on answer correctness. To expand the training data, the researchers took audio captions produced by Qwen2-Audio and prompted ChatGPT to generate new question-answer pairs from them. This method produced two datasets, AVQA-GPT and VGGS-GPT, covering 40k and 182k audio clips, respectively. Training on these automatically generated datasets improved performance, with VGGS-GPT helping Omni-R1 achieve state-of-the-art accuracy on the MMAU benchmark.
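The group-relative idea behind GRPO can be illustrated with a minimal sketch. This is not the authors' training code: the function names, the string-matching reward, and the use of the population standard deviation are assumptions made for illustration. It shows only how a correctness-only reward can be turned into per-sample advantages by normalizing within a group of answers sampled for the same question, with no learned value function.

```python
from statistics import mean, pstdev


def correctness_reward(predicted: str, gold: str) -> float:
    """Hypothetical reward: 1.0 if the selected answer matches the gold answer, else 0.0."""
    return 1.0 if predicted.strip().lower() == gold.strip().lower() else 0.0


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of sampled answers.

    Each advantage is the reward minus the group mean, divided by the
    group standard deviation (population std is an assumption here).
    eps avoids division by zero when every sample gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group of four answers sampled for one multiple-choice question.
samples = ["dog barking", "car horn", "Dog barking", "rain"]
rewards = [correctness_reward(s, "dog barking") for s in samples]
advantages = group_relative_advantages(rewards)
```

Correct answers receive positive advantages and incorrect ones negative advantages. If every sample in a group is right (or every sample is wrong), all advantages are zero and that group contributes no learning signal.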

The researchers fine-tuned Qwen2.5-Omni using GRPO on the AVQA, AVQA-GPT, and VGGS-GPT datasets. The results show notable performance gains, with VGGS-GPT giving the best average score of 71.3% on MMAU Test-mini. Qwen2.5-Omni outperformed baselines, including SARI, and showed strong reasoning even without audio, suggesting robust text-based understanding. GRPO fine-tuning improved Qwen2-Audio more significantly because its initial text reasoning was weaker. Surprisingly, fine-tuning without audio also boosted performance, and fine-tuning on text-only datasets such as ARC-Easy yielded comparable results. The improvements therefore stem mainly from enhanced text reasoning, although audio-based fine-tuning remains slightly better for the best overall performance.
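To make the text-only comparison concrete, the following sketch builds the same multiple-choice question with and without the audio attached. The message schema, the `build_prompt` helper, the prompt wording, and the file name are all hypothetical and are not taken from the paper or from any specific library. The point is only that the text-only condition keeps the question and options and drops the audio.

```python
def build_prompt(question, choices, audio_path=None):
    """Return a chat-style message list for one multiple-choice question.

    The audio entry is included only when audio_path is given, so the
    same function yields both the audio+text and the text-only variant.
    The dictionary layout is an illustrative assumption.
    """
    options = "\n".join(f"({chr(65 + i)}) {c}" for i, c in enumerate(choices))
    text = f"{question}\n{options}\nAnswer with the letter of the correct option."
    content = []
    if audio_path is not None:
        content.append({"type": "audio", "audio": audio_path})
    content.append({"type": "text", "text": text})
    return [{"role": "user", "content": content}]


question = "Which sound is heard in the clip?"
choices = ["Dog barking", "Car horn", "Rain", "Piano"]

with_audio = build_prompt(question, choices, audio_path="clip_0001.wav")  # hypothetical file
text_only = build_prompt(question, choices)
```

Both variants share an identical text portion, so any difference in fine-tuning outcome between them can be attributed to the presence or absence of the audio input.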

In conclusion, Omni-R1 is an Audio LLM developed by fine-tuning Qwen2.5-Omni using the GRPO reinforcement learning method for enhanced audio question answering. Omni-R1 achieves new state-of-the-art results on the MMAU benchmark across sounds, speech, music, and overall performance. Two new large-scale datasets, AVQA-GPT and VGGS-GPT, were created using automatically generated questions, further boosting model accuracy. Experiments show that GRPO mainly enhances text-based reasoning, significantly contributing to performance. Surprisingly, fine-tuning with only text (without audio) improved audio-based performance, highlighting the value of strong base language understanding. These findings offer cost-effective strategies for developing audio-capable language models.


The post Omni-R1: Advancing Audio Question Answering with Text-Driven Reinforcement Learning and Auto-Generated Data appeared first on MarkTechPost.
