Recent advancements in LM agents have shown promising potential for automating intricate real-world tasks. These agents typically operate by proposing and executing actions through APIs, supporting applications such as software engineering, robotics, and scientific experimentation. As these tasks become more complex, LM agent frameworks have evolved to include multiple agents, multi-step retrieval, and tailored scaffolding to optimize performance. A central challenge lies in effectively exploring and understanding the environment, which has prompted the development of engineered scaffolds using tools, memory mechanisms, and custom pipelines. However, most existing methods assume partial observability, requiring agents to collect observations incrementally. While this assumption holds in dynamic or unfamiliar environments, it is less applicable in fully observable settings like SWE-bench, where all relevant information is accessible from the start.

In software engineering, research on LM agents has focused on two main strategies: agent-based frameworks and structured pipelines. Agent-based systems, such as SWE-Agent and OpenHands CodeAct, allow LMs to interact autonomously with codebases, often through custom interfaces and retrieval tools. Other systems, like Moatless and AutoCodeRover, enhance localization through search techniques, while SpecRover refines scaffolding design. Alternatively, structured pipelines such as Agentless and CodeMonkey decompose tasks into sequential phases like localization, repair, and validation. All of these approaches depend on engineered components for performance. The current study instead proposes leveraging Long-Context LMs (LCLMs) to directly interpret the entire task environment. Advances in LCLM architecture and infrastructure now allow these models to outperform retrieval-augmented systems in many contexts, reducing reliance on complex external scaffolding.

Researchers from Stanford, IBM, and the University of Toronto explored whether complex scaffolding is necessary for LM agents tackling tasks like SWE-bench. They show that simply using LCLMs, such as Gemini-1.5-Pro, with proper prompting and no scaffolding, can achieve competitive performance, reaching 38% on SWE-bench Verified. Gemini-2.5-Pro, using the same simple setup, reaches 50.8%. Their work suggests that many complex agentic designs could be replaced with a single powerful LCLM, simplifying architecture and training. Additionally, a hybrid two-stage approach using Gemini-1.5-Pro and Claude-3.7-Sonnet achieves a 48.6% solve rate, further supporting this simplified direction.

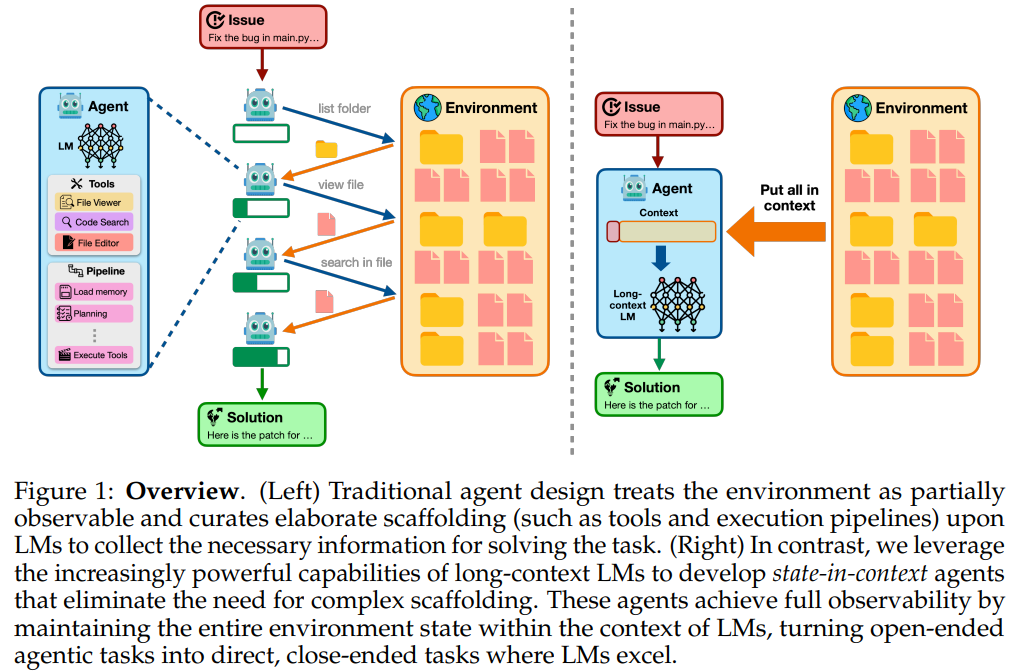

Traditional LM agents rely on interactive exploration due to partial observability, but many tasks, like software debugging, allow full observability. The study proposes state-in-context agents that leverage LCLMs to directly process full or compressed environment states, bypassing the need for complex agentic scaffolding. For codebases too large to fit in the context window, a ranking-based compression step selects the most relevant files. Two methods are introduced: DIRECTSOLVE, where the LCLM solves the task using the full context; and SELECTSOLVE, where the LCLM localizes relevant files and a short-context LM (SCLM) then solves the task using only those files. Both use targeted patch formats and validation to ensure accuracy and reduce hallucination.
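To make the compression idea concrete, here is a minimal Python sketch of packing ranked files into a fixed context budget. It is an illustration under stated assumptions, not the authors' implementation: the `RankedFile` type, the relevance scores, the four-characters-per-token estimate, and the greedy packing rule are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class RankedFile:
    path: str
    text: str
    score: float  # relevance score from some ranker (hypothetical)


def estimate_tokens(text: str) -> int:
    """Crude token estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def compress_state(files: list[RankedFile], budget: int) -> list[RankedFile]:
    """Greedily keep the highest-ranked files that fit in the token budget."""
    kept: list[RankedFile] = []
    used = 0
    for f in sorted(files, key=lambda f: f.score, reverse=True):
        cost = estimate_tokens(f.text)
        if used + cost > budget:
            continue  # too large for the remaining budget; a smaller file may still fit
        kept.append(f)
        used += cost
    return kept


def build_prompt(issue: str, files: list[RankedFile]) -> str:
    """Concatenate the issue and the kept files, highest-ranked file first."""
    parts = [f"# Issue\n{issue}"]
    for f in files:
        parts.append(f"# File: {f.path}\n{f.text}")
    return "\n\n".join(parts)
```

In a DIRECTSOLVE-style setup, the string returned by `build_prompt` would be sent to the long-context model in a single call. A real system would use the model's own tokenizer rather than a character-count estimate.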

The experiments evaluate a simplified agent framework using LLMs on the SWE-bench Verified benchmark, which includes 500 real-world software engineering tasks. The proposed methods, DIRECTSOLVE and SELECTSOLVE, utilize LCLMs like Gemini-1.5-Pro and Gemini-2.5-Pro, and in SELECTSOLVE, an additional SCLM (Claude-3.7-Sonnet) for patch generation. Results show that DIRECTSOLVE outperforms complex agentic approaches like Agentless and CodeAct with minimal engineering. SELECTSOLVE further improves accuracy by leveraging stronger models for patching. Ablation studies highlight the importance of CoT prompting, code restatement, and token-efficient context design. Additionally, positioning relevant files at the start of the prompt improves performance, underscoring limitations in long-context processing.
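The two-stage SELECTSOLVE flow can be sketched in a few lines of Python. This is a rough outline under assumptions, not the paper's code: the prompt wording, the `top_k` parameter, and the `select_solve` function are hypothetical, and the two models are passed in as plain callables so that no particular vendor API is implied.

```python
from typing import Callable

# Any function that maps a prompt string to a completion string.
LM = Callable[[str], str]


def select_solve(
    issue: str,
    repo: dict[str, str],  # maps file path to file contents
    lclm: LM,              # long-context model: sees the whole repository
    sclm: LM,              # short-context model: sees only the selected files
    top_k: int = 3,        # hypothetical cap on the number of selected files
) -> str:
    # Stage 1: the long-context model reads everything and names relevant files.
    listing = "\n\n".join(f"# File: {p}\n{t}" for p, t in repo.items())
    reply = lclm(
        f"{issue}\n\n{listing}\n\n"
        "List the files most relevant to the issue, one path per line."
    )
    # Keep only paths that exist in the repo, guarding against invented names.
    selected = [p.strip() for p in reply.splitlines() if p.strip() in repo][:top_k]

    # Stage 2: the short-context model writes the patch from the selected files.
    context = "\n\n".join(f"# File: {p}\n{repo[p]}" for p in selected)
    return sclm(f"{issue}\n\n{context}\n\nWrite a patch that fixes the issue.")
```

Filtering the first-stage reply against the real file list is one simple way to keep a hallucinated path from reaching the second stage.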

In conclusion, LCLM-based methods are currently more expensive than existing approaches like Agentless and CodeAct, averaging $2.60 per instance compared to $0.25 and $0.87, respectively. However, rapid drops in inference costs and increasing context lengths are making LCLMs more practical. Techniques like KV caching significantly lower the cost after the initial run, reducing it to about $0.725 per instance. Slight codebase changes still limit the benefits of caching, though further improvements could help. The study also suggests that LCLMs can handle long interaction histories, reducing the need for complex memory and retrieval mechanisms. Notably, unscaffolded LCLMs can perform competitively on SWE-bench tasks.
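For a sense of scale, the cost figures quoted above can be compared directly. The short Python snippet below only does arithmetic on the numbers reported in this article; the variable names are ours.

```python
# Average cost per instance in US dollars, as quoted in the article.
COST = {"LCLM": 2.60, "Agentless": 0.25, "CodeAct": 0.87}
CACHED_LCLM = 0.725  # LCLM cost per instance once KV caching applies

vs_agentless = COST["LCLM"] / COST["Agentless"]
vs_codeact = COST["LCLM"] / COST["CodeAct"]
caching_saving = 1 - CACHED_LCLM / COST["LCLM"]

print(f"LCLM vs Agentless: {vs_agentless:.1f}x")
print(f"LCLM vs CodeAct:   {vs_codeact:.1f}x")
print(f"Saving from caching: {caching_saving:.0%}")
```

Uncached, the LCLM setup costs about 10.4 times as much as Agentless and about 3 times as much as CodeAct. With caching, the cost drops by roughly 72%, which puts it below CodeAct but still above Agentless.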


The post SWE-Bench Performance Reaches 50.8% Without Tool Use: A Case for Monolithic State-in-Context Agents appeared first on MarkTechPost.
