Vision-language models (VLMs) have become central to building general-purpose AI systems that can understand and act in both digital and real-world settings. By integrating visual and textual data, VLMs have driven advances in multimodal reasoning, image editing, GUI agents, robotics, and more, with impact in sectors such as education and healthcare. Despite this progress, VLMs still lag behind human capabilities, particularly in 3D reasoning, object counting, creative visual interpretation, and interactive gameplay. A key challenge is the scarcity of rich, diverse multimodal datasets compared with the abundant text available for training LLMs. The complexity of multimodal data also makes training and evaluation significantly harder.

Researchers at ByteDance have developed Seed1.5-VL, a compact yet powerful vision-language foundation model that pairs a 532M-parameter vision encoder with a Mixture-of-Experts (MoE) LLM with 20B active parameters. Despite this efficient architecture, Seed1.5-VL achieves state-of-the-art results on 38 of 60 public VLM benchmarks, excelling at tasks such as GUI control, video understanding, and visual reasoning. It is trained on trillions of multimodal tokens using advanced data-synthesis techniques and post-training methods that include learning from human feedback. Training-infrastructure innovations, such as hybrid parallelism and vision-token redistribution, improve training efficiency. Its efficiency and strong reasoning make the model well suited to real-world interactive applications such as chatbots.
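In a Mixture-of-Experts LLM, a router sends each token to a small subset of expert networks, so only a fraction of the total weights run for any given token. The minimal Python sketch below illustrates generic top-k routing for a single token. The linear router, `top_k=2`, and the toy scaling "experts" are illustrative assumptions, not Seed1.5-VL's actual router or expert configuration.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Vector:
    """Route one token through its top-k experts and mix their outputs."""
    # Hypothetical linear router: one score per expert.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Keep only the k highest-scoring experts for this token.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)  # unselected experts are never evaluated
        out = [o + gate * yj for o, yj in zip(out, y)]
    return out


# Toy usage: four hypothetical "experts" that scale the input by 1, 2, 3, and 4.
experts = [lambda v, s=k + 1: [s * vi for vi in v] for k in range(4)]
router = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
print(moe_forward([1.0, 0.0], router, experts, top_k=2))
```

Here the token is sent only to the two highest-scoring experts, so half of the toy experts never run; real MoE layers apply the same idea at much larger scale, which is how total parameter count and per-token compute can diverge.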

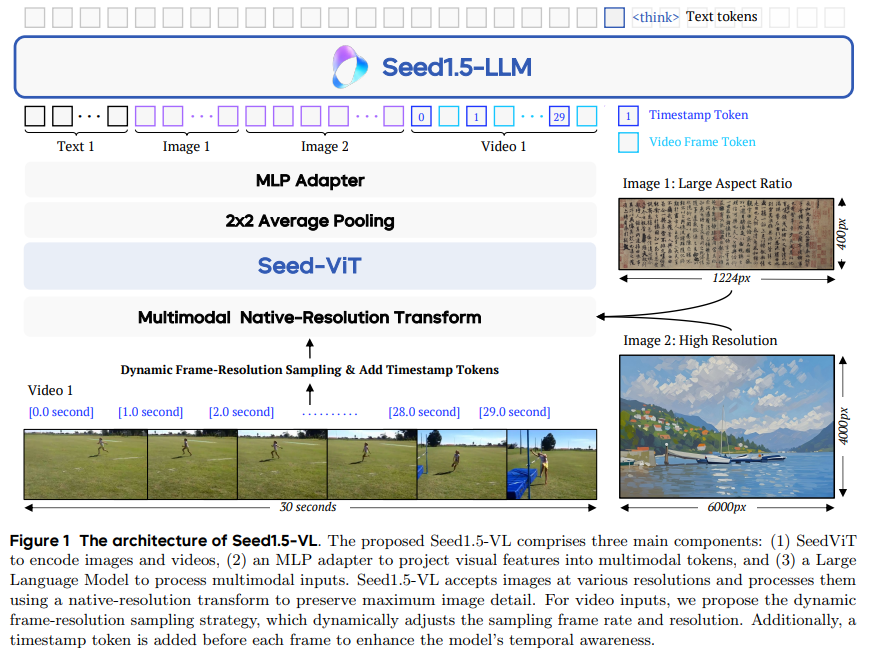

The Seed1.5-VL architecture consists of a vision encoder, an MLP adapter, and an LLM. Its custom vision encoder, Seed-ViT, accepts images at their native resolution by using 2D RoPE for positional encoding. It splits each image into 14×14 patches, and the resulting features pass through average pooling and an MLP. Seed-ViT is pretrained with masked image modeling, contrastive learning, and omni-modal alignment on images, text, and video-audio-caption pairs. For video, the model uses Dynamic Frame-Resolution Sampling, which adapts frame rate and resolution to the content's complexity to balance efficiency against detail. This lets the model capture spatial-temporal information within a fixed token budget, producing comprehensive representations for videos of varied length and complexity.
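To make the token-budget idea concrete, here is a rough Python sketch of how visual token counts could be computed for images and videos. The 14×14 patch size comes from the article; the 2×2 pooling factor, the padding rule, the candidate frame rates and scales, and the `prefer_temporal` switch standing in for "content complexity" are all assumptions for illustration, not Seed1.5-VL's actual Dynamic Frame-Resolution Sampling policy.

```python
import math
from typing import Tuple

PATCH = 14  # patch size stated in the article
POOL = 2    # ASSUMPTION: 2x2 average pooling over patch features


def image_tokens(height: int, width: int) -> int:
    """Visual tokens for one image at native resolution.

    Assumes each side is padded up to a multiple of PATCH * POOL pixels,
    so every pooled token covers a 28 x 28 pixel region.
    """
    cell = PATCH * POOL
    return math.ceil(height / cell) * math.ceil(width / cell)


def plan_video(
    duration_s: float,
    height: int,
    width: int,
    token_budget: int,
    prefer_temporal: bool = True,
    fps_options: Tuple[float, ...] = (2.0, 1.0, 0.5),    # hypothetical
    scales: Tuple[float, ...] = (1.0, 0.75, 0.5, 0.25),  # hypothetical
) -> Tuple[float, float, int]:
    """Pick (fps, scale, total_tokens) that fits within token_budget.

    prefer_temporal=True (e.g. fast-moving content) keeps the frame rate high
    and lowers resolution first; False (e.g. static, detail-heavy content)
    keeps resolution and lowers the frame rate first.
    """
    candidates = [(fps, scale) for fps in fps_options for scale in scales]
    if prefer_temporal:
        candidates.sort(key=lambda c: (-c[0], -c[1]))
    else:
        candidates.sort(key=lambda c: (-c[1], -c[0]))
    for fps, scale in candidates:
        n_frames = max(1, math.floor(duration_s * fps))
        per_frame = image_tokens(max(1, round(height * scale)), max(1, round(width * scale)))
        total = n_frames * per_frame
        if total <= token_budget:
            return fps, scale, total
    raise ValueError("token budget is too small even for the coarsest setting")


print(image_tokens(448, 448))
print(plan_video(60, 448, 448, 8192))
print(plan_video(60, 448, 448, 8192, prefer_temporal=False))
```

With the same budget, the two settings trade frame rate against per-frame detail in opposite directions, which is the kind of balance the article attributes to adapting sampling to content.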

Pre-training Seed1.5-VL involved curating 3 trillion high-quality tokens across diverse domains. Web image-text pairs were filtered using CLIP scores, image size and aspect-ratio checks, and deduplication to reduce noise. To counter class imbalance, rare visual concepts were overrepresented through domain-based sampling and duplication. Specialized OCR datasets were built from annotated and synthetic text-rich images, charts, and tables, while object grounding and counting data used bounding boxes, points, and auto-labeled web data. Additional data covered 3D spatial understanding through depth annotations and video understanding through multi-frame captioning, QA, and temporal grounding, supporting analysis of dynamic content.
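The filtering and rebalancing steps described above can be sketched in plain Python. This is a hypothetical illustration only: the thresholds, the `Sample` fields, the per-sample `concept` label, and the use of an exact URL-plus-caption hash (a simple stand-in for image-level near-duplicate detection) are assumptions, and CLIP scores are assumed to be precomputed rather than produced here.

```python
import hashlib
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Sample:
    url: str
    caption: str
    width: int
    height: int
    clip_score: float  # image-text similarity, assumed precomputed with a CLIP model
    concept: str       # hypothetical domain/concept label used for rebalancing


def passes_filters(s: Sample, min_clip: float = 0.28, min_side: int = 64,
                   max_aspect: float = 3.0) -> bool:
    """CLIP-score, size, and aspect-ratio checks (thresholds are illustrative)."""
    if s.clip_score < min_clip:
        return False
    if min(s.width, s.height) < min_side:
        return False
    return max(s.width, s.height) / min(s.width, s.height) <= max_aspect


def dedup(samples: Iterable[Sample]) -> List[Sample]:
    """Drop exact duplicates by hashing URL + caption, keeping first occurrences."""
    seen = set()
    out = []
    for s in samples:
        key = hashlib.sha256(f"{s.url}\n{s.caption}".encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            out.append(s)
    return out


def rebalance(samples: Iterable[Sample], target_per_concept: int,
              max_repeat: int = 4) -> List[Sample]:
    """Flatten the concept distribution: cap frequent concepts, duplicate rare ones."""
    groups: Dict[str, List[Sample]] = defaultdict(list)
    for s in samples:
        groups[s.concept].append(s)
    out: List[Sample] = []
    for group in groups.values():
        if len(group) >= target_per_concept:
            out.extend(group[:target_per_concept])  # downsample head concepts
        else:
            repeats = min(max_repeat, math.ceil(target_per_concept / len(group)))
            out.extend((group * repeats)[:target_per_concept])  # upsample tail concepts
    return out
```

Capping duplication with `max_repeat` reflects a common design trade-off: repeating rare examples helps coverage of the long tail, but repeating them too often risks memorization.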

The evaluation highlights the competitive performance of both Seed-ViT and Seed1.5-VL across vision-language tasks. Despite having significantly fewer parameters, Seed-ViT matches or outperforms larger models such as InternVL-C and EVA-CLIP on zero-shot image classification, with high accuracy and robustness on datasets such as ImageNet-A and ObjectNet. Seed1.5-VL shows strong capabilities in multimodal reasoning, general VQA, document understanding, and grounding, achieving state-of-the-art results on many benchmarks, particularly in complex reasoning, counting, and chart interpretation. The model’s “thinking” mode, which produces longer reasoning chains, improves performance further, indicating strong fine-grained visual understanding and task generalization.
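Zero-shot classification with a contrastively trained encoder typically works by comparing an image embedding against text embeddings of class prompts and picking the closest one. The sketch below shows that scoring step with toy 3-dimensional vectors standing in for real encoder outputs. The prompt template, the temperature value, and the embeddings are assumptions; the article does not describe Seed-ViT's exact evaluation protocol.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(
    image_emb: Sequence[float],
    class_text_embs: Dict[str, Sequence[float]],
    temperature: float = 0.01,
) -> Tuple[str, Dict[str, float]]:
    """Score an image against one text embedding per class (e.g. encoded from a
    hypothetical prompt "a photo of a {label}") by cosine similarity, then
    softmax over classes."""
    img = l2_normalize(image_emb)
    sims = {
        label: sum(a * b for a, b in zip(img, l2_normalize(t)))
        for label, t in class_text_embs.items()
    }
    # Divide by a temperature (CLIP-style models learn this scale) and softmax.
    logits = {label: s / temperature for label, s in sims.items()}
    m = max(logits.values())
    exps = {label: math.exp(v - m) for label, v in logits.items()}
    z = sum(exps.values())
    probs = {label: e / z for label, e in exps.items()}
    return max(probs, key=probs.get), probs


# Toy embeddings: the image vector lies closest to the "cat" text vector.
label, probs = zero_shot_classify(
    [0.9, 0.1, 0.0],
    {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0], "car": [0.0, 0.0, 1.0]},
)
print(label)
```

No classifier head is trained here: adding a new class only requires embedding one more text prompt, which is what makes the evaluation "zero-shot".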

In conclusion, Seed1.5-VL is a vision-language foundation model that combines a 532M-parameter vision encoder with a Mixture-of-Experts language model with 20B active parameters. Despite its compact size, it achieves state-of-the-art results on 38 of 60 public benchmarks and excels at complex reasoning, OCR, diagram interpretation, 3D spatial understanding, and video analysis. It also performs well on agent-driven tasks such as GUI control and gameplay, surpassing models like OpenAI CUA and Claude 3.7. The model generalizes strongly to tasks beyond its training scope. The study describes its architecture, data pipeline, and training methods and identifies future directions, including stronger tool-use and visual reasoning capabilities.


The post ByteDance Introduces Seed1.5-VL: A Vision-Language Foundation Model Designed to Advance General-Purpose Multimodal Understanding and Reasoning appeared first on MarkTechPost.
