Semantic retrieval focuses on understanding the meaning behind text rather than matching keywords, allowing systems to provide results that align with user intent. This ability is essential across domains that depend on large-scale information retrieval, such as scientific research, legal analysis, and digital assistants. Traditional keyword-based methods fail to capture the nuance of human language, often retrieving irrelevant or imprecise results. Modern approaches rely on converting text into high-dimensional vector representations, enabling more meaningful comparisons between queries and documents. These embeddings aim to preserve semantic relationships and provide more contextually relevant outcomes during retrieval.

A central challenge in semantic retrieval is handling long documents and complex queries efficiently. Many models are restricted by fixed-length token windows, commonly around 512 or 1024 tokens, which limits their use in domains that require processing full-length articles or multi-paragraph documents. As a result, crucial information that appears later in a document may be truncated and never reach the model. Real-time performance also suffers from the computational cost of embedding and comparing large documents, especially when indexing and querying must occur at scale. Scalability, accuracy, and generalization to unseen data remain persistent challenges when deploying these models in dynamic environments.

In earlier research, models like ModernBERT and other sentence-transformer-based tools have dominated the semantic embedding space. They often apply mean pooling or similar simple aggregation over contextual token embeddings to produce a single vector per sentence or document. While such methods work for short and moderate-length documents, they struggle to maintain precision on longer input sequences, because many tokens are compressed into one vector. These models also rely on dense vector comparisons, which become computationally expensive when handling millions of documents. Although they perform well on standard benchmarks like MS MARCO, they generalize less well to diverse datasets and frequently require re-tuning for specific contexts.
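To make the dilution effect of mean pooling concrete, here is a minimal sketch in plain Python. The 2-dimensional token vectors are made-up toy values, not outputs of any real model, and the helper names `mean_pool` and `cosine` are illustrative only. The point is simply that one relevant token contributes less and less to a pooled vector as the document grows.

```python
import math

def mean_pool(token_vectors):
    """Average a list of equal-length token vectors into a single vector."""
    dim = len(token_vectors[0])
    count = len(token_vectors)
    return [sum(vec[i] for vec in token_vectors) / count for i in range(dim)]

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy embeddings (assumed values): [1, 0] stands for the "relevant" direction.
query_tokens = [[1.0, 0.0]]
short_doc = [[1.0, 0.0], [0.0, 1.0]]          # 1 relevant token out of 2
long_doc = [[1.0, 0.0]] + [[0.0, 1.0]] * 9    # 1 relevant token out of 10

sim_short = cosine(mean_pool(query_tokens), mean_pool(short_doc))
sim_long = cosine(mean_pool(query_tokens), mean_pool(long_doc))

# The same relevant token is present in both documents, but the pooled
# similarity drops sharply for the longer one.
print(f"short doc: {sim_short:.3f}, long doc: {sim_long:.3f}")
```

Real encoders produce contextual embeddings, so the effect is less clean than in this toy setup, but the underlying averaging behaves the same way.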

Researchers from LightOn AI introduced GTE-ModernColBERT-v1. This model builds upon the ColBERT architecture and uses the GTE-ModernBERT base model developed by Alibaba-NLP, which is itself built on ModernBERT. By distilling knowledge from a base model and optimizing it on the MS MARCO dataset, the team aimed to overcome limitations related to context length and semantic preservation. The model was trained using 300-token document inputs but demonstrated the ability to handle inputs as large as 8192 tokens. This makes it suitable for indexing and retrieving longer documents with minimal information loss. Their work was released through PyLate, a library that simplifies training, indexing, and querying with late-interaction models such as ColBERT. The model supports token-level semantic matching using the MaxSim operator, which evaluates similarity between individual token embeddings rather than compressing them into a single vector.

GTE-ModernColBERT-v1 encodes each token of a text as a 128-dimensional dense vector and uses the MaxSim function to compute semantic similarity between query and document tokens. This method preserves granular context and allows fine-grained retrieval. It integrates with PyLate’s Voyager indexing system, which manages large-scale embeddings using an efficient HNSW (Hierarchical Navigable Small World) index. Once documents are embedded and stored, users can retrieve the top-k relevant documents using the ColBERT retriever. The model supports both full indexing-and-retrieval pipelines and lightweight reranking of candidates returned by a first-stage retriever. PyLate also lets users change the document length at inference time, enabling them to handle texts much longer than those the model was trained on, an advantage rarely seen in standard embedding models.
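The MaxSim scoring and top-k retrieval described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not PyLate's API: the function names `maxsim` and `retrieve_top_k` are hypothetical, the 2-dimensional vectors are invented stand-ins for the model's 128-dimensional token embeddings, and the exhaustive scan stands in for the approximate HNSW search a real index would perform.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def maxsim(query_tokens, doc_tokens):
    """Late-interaction score: for each query token, take the similarity of
    its best-matching document token, then sum those maxima."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)

def retrieve_top_k(query_tokens, corpus, k):
    """Score every document exhaustively and return the k best (id, score)
    pairs, highest score first. A real system would use an ANN index."""
    scored = [(doc_id, maxsim(query_tokens, tokens))
              for doc_id, tokens in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy corpus: each document is a list of per-token vectors (assumed values).
corpus = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],              # matches both query tokens
    "doc_b": [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]],  # matches only one
    "doc_c": [[0.6, 0.8]],                          # partial match to both
}
query = [[1.0, 0.0], [0.0, 1.0]]

results = retrieve_top_k(query, corpus, k=2)
for doc_id, score in results:
    print(doc_id, round(score, 3))
```

Because every query token looks for its own best match, `doc_a` ranks first here even though its tokens point in different directions; a single pooled vector would blur that distinction. The same `maxsim` function could rerank a candidate list produced by another first-stage retriever.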

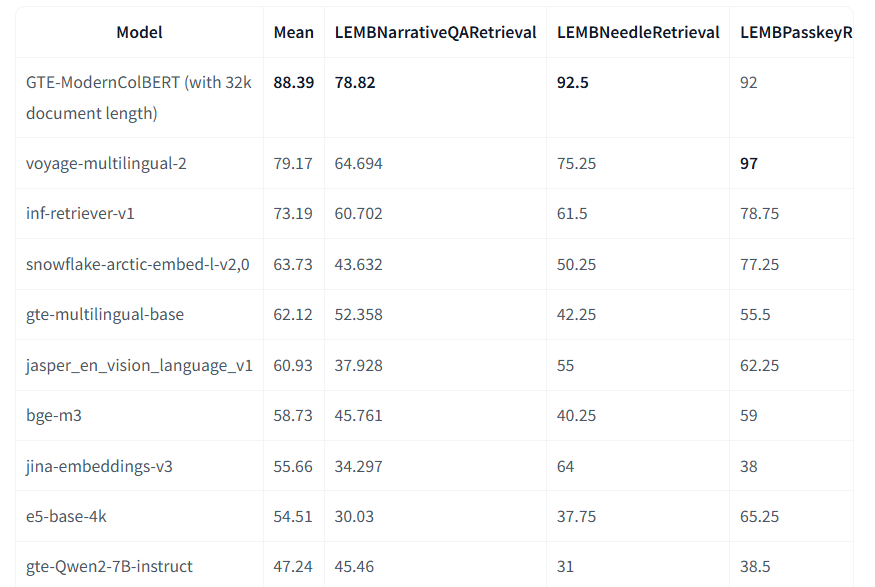

On the NanoClimate dataset, the model achieved a MaxSim Accuracy@1 of 0.360, Accuracy@5 of 0.780, and Accuracy@10 of 0.860. On the same dataset, MaxSim Recall@3 reached 0.289 and Precision@3 was 0.233. When evaluated on the BEIR benchmark, GTE-ModernColBERT outperformed previous models, including ColBERT-small. For example, it scored 54.89 on the FiQA2018 dataset, 48.51 on NFCorpus, and 83.59 on the TREC-COVID task. The average performance across these tasks was higher than that of baseline ColBERT variants. Notably, on the LongEmbed benchmark, the model achieved a mean score of 88.39 and scored 78.82 on LEMB Narrative QA Retrieval, with its mean score surpassing those of other leading models such as voyage-multilingual-2 (79.17) and bge-m3 (58.73).
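For readers less familiar with the @k metrics quoted above, the following sketch shows the definitions commonly used in retrieval evaluation. It assumes these standard definitions match the evaluator behind the reported numbers, which the article does not state. The document IDs and relevance judgments are invented purely for illustration.

```python
def accuracy_at_k(ranked, relevant, k):
    """1.0 if at least one relevant document appears in the top k, else 0.0."""
    return 1.0 if any(doc in relevant for doc in ranked[:k]) else 0.0

def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k results that are relevant."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / k

def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant documents that appear in the top k."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / len(relevant)

def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)

# Hypothetical runs: query id -> (ranked results, set of relevant doc ids).
runs = {
    "q1": (["d1", "d7", "d3"], {"d1", "d3", "d9", "d4"}),
    "q2": (["d5", "d2", "d8"], {"d2"}),
}

acc_at_1 = mean(accuracy_at_k(r, rel, 1) for r, rel in runs.values())
prec_at_3 = mean(precision_at_k(r, rel, 3) for r, rel in runs.values())
rec_at_3 = mean(recall_at_k(r, rel, 3) for r, rel in runs.values())

print(f"Accuracy@1={acc_at_1:.3f} Precision@3={prec_at_3:.3f} Recall@3={rec_at_3:.3f}")
```

Note that under these definitions Precision@3 is capped by how many relevant documents a query has: in the toy data, `q2` has a single relevant document, so its Precision@3 cannot exceed 1/3 even with a perfect ranking.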

These results suggest that the model generalizes well and handles long-context documents effectively, outperforming voyage-multilingual-2 by about 9 points in LongEmbed mean score (88.39 versus 79.17). It is also adaptable to different retrieval pipelines, supporting both indexing and reranking setups. Such versatility makes it an attractive solution for scalable semantic search.

Key highlights from the research on GTE-ModernColBERT-v1 include:

- GTE-ModernColBERT-v1 uses 128-dimensional dense vectors with token-level MaxSim similarity, based on ColBERT and ModernBERT foundations.

- Though trained on 300-token documents, the model generalizes to documents up to 8192 tokens, showing adaptability for long-context retrieval tasks.

- On the NanoClimate dataset, Accuracy@10 reached 0.860, Recall@3 was 0.289, and Precision@3 was 0.233.

- On the BEIR benchmark, the model scored 83.59 on TREC-COVID and 54.89 on FiQA2018, outperforming ColBERT-small and other baselines.

- The model achieved a mean score of 88.39 on the LongEmbed benchmark and 78.82 on LEMB Narrative QA, surpassing the previous SOTA by nearly 10 points.

- Integrates with PyLate’s Voyager index, supports reranking and retrieval pipelines, and is compatible with efficient HNSW indexing.

- The model can be deployed in pipelines requiring fast and scalable document search, including academic, enterprise, and multilingual applications.

In conclusion, this research provides a meaningful contribution to long-document semantic retrieval. By combining the strengths of token-level matching with scalable architecture, GTE-ModernColBERT-v1 addresses several bottlenecks that current models face. It introduces a reliable method for processing and retrieving semantically rich information from extended contexts, significantly improving precision and recall.

Check out the Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post LightOn AI Released GTE-ModernColBERT-v1: A Scalable Token-Level Semantic Search Model for Long-Document Retrieval and Benchmark-Leading Performance appeared first on MarkTechPost.
