Large language models are now central to various applications, from coding to academic tutoring and automated assistants. However, a critical limitation persists in how these models are designed; they are trained on static datasets that become outdated over time. This creates a fundamental challenge because the language models cannot update their knowledge or validate responses against fresh, real-world data. As a result, while these models demonstrate strong performance on reasoning tasks or structured queries, their answers can still include fabricated or obsolete information, reducing their reliability in real-world usage. To maintain credibility, especially for applications requiring updated knowledge such as news, research, or product reviews, models must interact with external data sources in a timely and cost-efficient manner.

The core problem lies in teaching these models to effectively retrieve and incorporate external information. While pretraining and fine-tuning give a model a strong baseline understanding, they do not teach it to conduct meaningful, dynamic searches. Equipping language models with this ability introduces practical constraints. Search engines used for external information retrieval return documents of varying quality, which introduces inconsistency into model training. Moreover, using reinforcement learning to simulate real-world searching requires large-scale interaction with live APIs, often hundreds of thousands of calls, which becomes prohibitively expensive. The result is a bottleneck for both academic research and commercial deployment, where cost and training scalability are critical.

Various methods have been developed to enhance language models’ search and retrieval capabilities. Some early techniques relied on prompt-based instructions that guided the model through processes like generating sub-queries or managing multi-step searches. These methods, however, depended heavily on manual tuning and often required extensive computational resources to ensure consistent outputs. Other approaches used supervised fine-tuning of smaller models to perform more targeted retrieval, with models like Self-RAG and RetroLLM emerging in this space. There have also been experiments with techniques like Monte Carlo Tree Search to dynamically expand possible answer paths during inference. Reinforcement learning-based solutions like Search-R1 and DeepResearcher allowed models to interact directly with real search engines, offering a training experience closer to how users behave. However, these innovations still suffer from complexity, high computational demand, or the financial cost of live interaction.

Researchers from Tongyi Lab at Alibaba Group introduced an innovative solution called ZeroSearch. This reinforcement learning framework removes the need for live API-based search entirely. Instead, it uses another language model to simulate the behavior of a search engine. The simulation model is fine-tuned through supervised training to generate documents that either help or mislead the policy model, depending on whether the content is designed to be relevant or noisy. This allows complete control over the document quality and cost while enabling a realistic retrieval training experience. A key innovation lies in using curriculum-based learning during training, which means gradually introducing harder retrieval tasks by adjusting how much noise is present in the generated documents. This progression helps the policy model develop resilience and better reasoning skills over time without ever making a real search query.
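The curriculum idea can be illustrated with a small schedule that raises the probability of serving a noisy document as training progresses. This is only a sketch: the article does not state the actual scaling function, so the exponential form, the function names `noise_probability` and `choose_document_mode`, and the default values (`p_start`, `p_end`, `base`) are all assumptions made for illustration.

```python
import random


def noise_probability(step: int, total_steps: int,
                      p_start: float = 0.0, p_end: float = 0.5,
                      base: float = 4.0) -> float:
    """Probability of generating a noisy document at a given training step.

    Assumed schedule (not taken from the article): the probability grows
    slowly at first and faster later, moving from p_start to p_end.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if base <= 1.0:
        raise ValueError("base must be greater than 1")
    # Clamp training progress to the range [0, 1].
    progress = min(max(step / total_steps, 0.0), 1.0)
    # Exponential growth factor: 0 at the start, 1 at the end.
    growth = (base ** progress - 1.0) / (base - 1.0)
    return p_start + growth * (p_end - p_start)


def choose_document_mode(step: int, total_steps: int,
                         rng: random.Random) -> str:
    """Pick which kind of document the simulated search engine should write."""
    if rng.random() < noise_probability(step, total_steps):
        return "noisy"
    return "useful"


if __name__ == "__main__":
    rng = random.Random(0)
    for step in (0, 50, 100):
        print(step, round(noise_probability(step, 100), 3),
              choose_document_mode(step, 100, rng))
```

Early in training almost every simulated document is useful; by the end, roughly half are noisy under these assumed defaults, so the policy model faces progressively harder retrieval conditions.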

ZeroSearch structures the reasoning process into distinct phases. The model first thinks internally using designated tags, then generates search queries if it determines that additional information is needed. Finally, it outputs an answer only once it has acquired sufficient context. This structured approach enforces clarity in decision-making and has been shown to improve transparency and answer quality. On the simulation side, a small change in the prompt controls whether the simulated search engine generates a helpful or a misleading document. The simulation LLM is fine-tuned on interaction data in which each retrieval trajectory is labeled according to the correctness of the final answer. By systematically varying document quality, training teaches the policy model to handle both straightforward and difficult search conditions. A scaling function determines how much noise is introduced at each training stage, so the model’s ability to navigate uncertainty grows over time.
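To make the think, search, answer structure concrete, here is a minimal sketch of how a rollout loop might parse one model turn and decide what to do next. The tag names `<think>`, `<search>`, and `<answer>` come from the article; the `Turn` type, the helper names, and the three action labels are hypothetical and not taken from the ZeroSearch code.

```python
import re
from typing import NamedTuple, Optional


class Turn(NamedTuple):
    thought: Optional[str]  # content of <think>...</think>, if present
    query: Optional[str]    # content of <search>...</search>, if present
    answer: Optional[str]   # content of <answer>...</answer>, if present


def _extract(tag: str, text: str) -> Optional[str]:
    """Return the stripped content of the first <tag>...</tag> pair, or None."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", text, flags=re.DOTALL)
    return match.group(1).strip() if match else None


def parse_turn(text: str) -> Turn:
    """Split one model output into its thought, search query, and answer."""
    return Turn(_extract("think", text),
                _extract("search", text),
                _extract("answer", text))


def next_action(turn: Turn) -> str:
    """Decide what the rollout loop should do after this turn."""
    if turn.answer is not None:
        return "stop"      # final answer produced, end the episode
    if turn.query is not None:
        return "search"    # send the query to the simulated search engine
    return "continue"      # no query or answer yet, let the model keep going


if __name__ == "__main__":
    output = "<think>I need the release year.</think><search>ZeroSearch paper</search>"
    turn = parse_turn(output)
    print(turn.query, next_action(turn))
```

In a full training loop, a "search" action would pass `turn.query` to the simulation LLM and append the generated documents to the context before the policy model continues.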

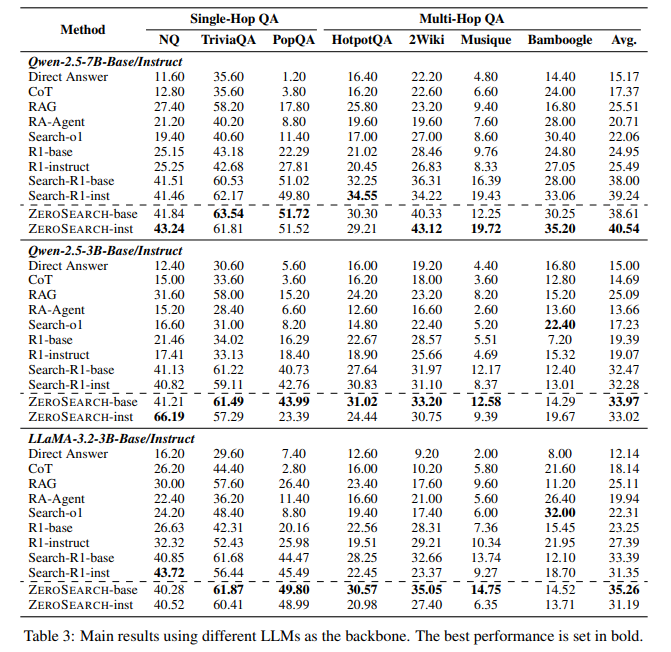

A 3-billion-parameter model was able to simulate the retrieval process effectively for training purposes. The results became particularly notable with larger models. A 7B retrieval module performed at a level comparable to Google Search in terms of response quality, and a 14B model even surpassed Google Search on the benchmarks. ZeroSearch also showed flexibility, working effectively with both base and instruction-tuned LLMs of different sizes. It integrates with a range of reinforcement learning algorithms, including PPO, GRPO, and Reinforce++. Its reward is based on the F1 score rather than exact match, which discourages the model from generating excessively long answers just to increase keyword overlap. Furthermore, ZeroSearch applies a masking mechanism during backpropagation so that gradients are computed only on the policy model’s outputs, stabilizing training without sacrificing performance.
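The effect of an F1-based reward on answer length can be seen in a small token-level example. This is a generic word-overlap F1 written for illustration; the whitespace tokenization and lowercasing are assumptions, and the article does not specify how ZeroSearch normalizes answers.

```python
from collections import Counter


def f1_reward(prediction: str, ground_truth: str) -> float:
    """Token-level F1 between a predicted answer and the reference answer."""
    pred_tokens = prediction.lower().split()
    gold_tokens = ground_truth.lower().split()
    if not pred_tokens or not gold_tokens:
        return 0.0
    # Count tokens shared by both answers, respecting multiplicity.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)  # falls as the answer gets padded
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(f1_reward("Paris", "Paris"))
    print(f1_reward("Paris is the capital of France", "Paris"))
```

A concise correct answer gets the full reward, while a padded answer that merely contains the right word scores much lower because every extra token reduces precision.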

The research demonstrates a clear and efficient alternative to real-time search engine reliance. Using simulation-driven document generation removes the need for high-cost APIs, and the quality of training input is controlled with precision. The method also boosts model reasoning capability by introducing progressive noise and uncertainty, effectively mimicking how real-world data retrieval might fail or mislead. The policy model is trained to extract the most useful information. These traits make ZeroSearch a scalable and practical solution for commercial-grade applications.

This approach successfully identifies and addresses the twin challenges of document quality variability and economic cost that have limited real-time search integration in language model training. It combines document simulation, structured interaction, and reinforcement learning to ensure effectiveness and scalability. By relying solely on simulated data generation, the researchers achieved superior or comparable results to existing methods while removing all dependency on costly APIs.

Key takeaways from the research:

- A 3B model simulated realistic document retrieval effectively with zero API cost.

- A 7B retrieval module matched Google Search performance in benchmark tests.

- The 14B model exceeded real search engine performance.

- Reinforcement learning was performed with a curriculum-based rollout that gradually introduced noise.

- A simulation LLM generated both relevant and noisy documents via lightweight supervised fine-tuning.

- Structured interaction phases (<think>, <search>, <answer>) improved model clarity and accuracy.

- F1-based rewards discouraged reward hacking by penalizing irrelevant answer length.

- ZeroSearch is compatible with major RL algorithms, including PPO, GRPO, and Reinforce++.

- Training was stabilized using a gradient masking mechanism to prevent instability from simulated tokens.
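The gradient masking in the last takeaway can be sketched in plain Python. The idea is that tokens written by the simulated search engine are excluded from the loss, so only tokens produced by the policy model contribute. The segment labels `"policy"` and `"retrieved"` and both function names are hypothetical; a real implementation would apply the same mask to tensors inside the RL training framework.

```python
from typing import List, Tuple


def build_loss_mask(segments: List[Tuple[str, int]]) -> List[int]:
    """Build a 0/1 mask over a rollout.

    Each segment is (source, num_tokens). Tokens from "policy" get 1;
    tokens from any other source (for example "retrieved") get 0.
    """
    mask: List[int] = []
    for source, num_tokens in segments:
        mask.extend([1 if source == "policy" else 0] * num_tokens)
    return mask


def masked_mean_loss(token_losses: List[float], loss_mask: List[int]) -> float:
    """Average the per-token loss over policy-generated tokens only."""
    if len(token_losses) != len(loss_mask):
        raise ValueError("token_losses and loss_mask must have the same length")
    kept = [loss for loss, keep in zip(token_losses, loss_mask) if keep]
    return sum(kept) / len(kept) if kept else 0.0


if __name__ == "__main__":
    mask = build_loss_mask([("policy", 2), ("retrieved", 3), ("policy", 1)])
    losses = [1.0, 3.0, 100.0, 100.0, 100.0, 2.0]
    print(mask, masked_mean_loss(losses, mask))
```

In this toy rollout the three retrieved-document tokens carry large loss values, but they are masked out and do not affect the averaged loss.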


The post ZeroSearch from Alibaba Uses Reinforcement Learning and Simulated Documents to Teach LLMs Retrieval Without Real-Time Search appeared first on MarkTechPost.
