Language processing in enterprise environments faces critical challenges as business workflows increasingly depend on synthesising information from diverse sources, including internal documentation, code repositories, research reports, and real-time data streams. While recent advances in large language models have delivered impressive capabilities, this progress comes with significant downsides: skyrocketing per-request costs, constant hardware upgrade requirements, and increased data privacy risks.

Pursuing ever-larger model architectures has shown diminishing returns, and the accelerating energy demands may constrain future AI development. Enterprises now need balanced solutions that deliver long-context comprehension together with efficient processing, predictable low-cost serving, and robust privacy guarantees. Small language models are well positioned to provide this combination, even under the complex, high-volume inference demands of today’s business applications.

Traditional approaches to extending language model capabilities beyond their inherent context limitations have relied on several workaround methods. Retrieval-augmented generation (RAG) systems pull relevant information from external knowledge bases to supplement model inputs. External tool calls enable models to access specialised functions outside their parameters. Memory mechanisms artificially persist information across conversation turns. While functional, these techniques represent brittle “stitching” solutions that add complexity and potential failure points to processing pipelines.

Context window extensions in larger models attempted to address these limitations but introduced significant computational overhead. Each of these methods points to the same underlying need: genuine long-context processing that lets a model handle entire documents, sustained conversations, code repositories, and research reports in a single forward pass rather than in fragments. This is why native extended context matters: it removes architectural complexity while keeping information coherent throughout processing.

Salesforce AI Research has developed xGen-small, an enterprise-ready compact language model for efficient long-context processing. This solution combines domain-focused data curation, scalable pre-training, length-extension techniques, instruction fine-tuning, and reinforcement learning to deliver high-performance enterprise AI capabilities with predictable low costs, addressing the critical balance businesses require between capability and operational efficiency.

xGen-small’s architecture employs a “small but long” strategy that inverts the traditional scale-up paradigm. Rather than increasing parameter counts, this approach deliberately shrinks model size while tuning both the data distribution and the training protocols toward enterprise-relevant domains. Doing so requires expertise across multiple development stages, with all components working in concert through a vertically integrated pipeline.

The framework begins with meticulous raw data curation, followed by scalable pre-training optimised for efficient processing. Length-extension mechanisms enable the compact model to handle long contexts, while targeted post-training and reinforcement learning improve performance on enterprise-specific tasks. For business applications, the result is cost efficiency, robust privacy safeguards, and long-context understanding without the resource requirements of larger models, offering a sustainable path to deploying enterprise AI at scale with predictable operational characteristics.

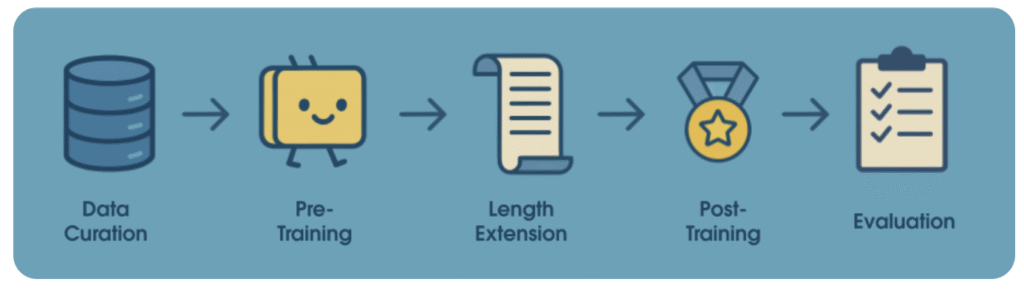

xGen-small’s development pipeline integrates multiple stages into a streamlined workflow. Starting with a multi-trillion-token corpus, the process applies rigorous filtering and quality controls before large-scale TPU pre-training with optimised learning schedules. Targeted length-extension techniques expand context capacity, while task-specific post-training and reward-based reinforcement learning refine model capabilities.

Data curation for xGen-small began with harvesting a corpus substantially larger than the final eight trillion training tokens. The pipeline applied fast heuristic filters to remove spam, followed by a two-stage quality assessment using classifier ensembles. Exact hashing removed exact duplicates and fuzzy fingerprinting removed near-duplicates, while careful balancing of general data with specialised content for code, mathematics, and natural language optimised performance. Extensive ablation studies refined this curation approach to maximise factual accuracy and overall usefulness.
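To make the deduplication step concrete, here is a minimal sketch of combining exact hashing with fuzzy fingerprinting. The article does not say which algorithms xGen-small uses, so everything here is an assumption for illustration: SHA-256 over normalised text stands in for exact hashing, MinHash over word shingles stands in for fuzzy fingerprinting, and the function names, shingle size, signature length, and threshold are hypothetical.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def exact_key(text: str) -> str:
    """Exact-duplicate key: SHA-256 of the normalised text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word windows (assumed shingle size: 3 words)."""
    words = normalize(text).split()
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash(text: str, num_hashes: int = 64) -> list:
    """MinHash signature: for each salted hash, keep the minimum over all shingles."""
    items = [s.encode("utf-8") for s in shingles(text)]
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(item, digest_size=8, salt=salt).digest(), "little"
            )
            for item in items
        ))
    return signature


def similarity(sig_a: list, sig_b: list) -> float:
    """Fraction of matching slots, which estimates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs: list, threshold: float = 0.8) -> list:
    """Keep the first copy of each document; drop exact and near-duplicates."""
    kept, seen_keys, signatures = [], set(), []
    for doc in docs:
        key = exact_key(doc)
        if key in seen_keys:
            continue  # exact duplicate after normalisation
        sig = minhash(doc)
        if any(similarity(sig, other) >= threshold for other in signatures):
            continue  # near-duplicate of a document already kept
        seen_keys.add(key)
        signatures.append(sig)
        kept.append(doc)
    return kept
```

This sketch compares every new signature against all kept ones, which is quadratic in the number of documents. At corpus scale, a pipeline would more plausibly bucket signatures (for example with locality-sensitive hashing) so that only likely matches are compared.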

Pre-training of xGen-small uses TPU v5p pods with the Jaxformer v8 library, implementing FSDP, sequence-parallel attention, and splash kernels for efficiency. A multi-phase learning rate schedule optimises training dynamics, while a carefully balanced data mixture combines code corpora, natural language examples, mathematical texts, and high-quality filtered content to capture both diversity and domain expertise.
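The article does not describe the phases of the learning rate schedule. As an illustration of what a multi-phase schedule can look like, the sketch below assumes three phases: linear warmup, a constant plateau, and a cosine decay to a floor. The function name, the phase boundaries, and every numeric value are hypothetical and not taken from xGen-small.

```python
import math


def multi_phase_lr(
    step: int,
    peak_lr: float = 3e-4,        # assumed peak learning rate
    warmup_steps: int = 2_000,    # assumed length of phase 1
    stable_steps: int = 100_000,  # assumed length of phase 2
    decay_steps: int = 20_000,    # assumed length of phase 3
    final_lr_ratio: float = 0.1,  # assumed floor as a fraction of the peak
) -> float:
    """Return the learning rate for a given optimiser step."""
    # Phase 1: linear warmup from near zero up to the peak.
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    # Phase 2: hold the peak learning rate constant.
    if step < warmup_steps + stable_steps:
        return peak_lr
    # Phase 3: cosine decay from the peak down to the floor, then hold the floor.
    progress = min(1.0, (step - warmup_steps - stable_steps) / decay_steps)
    floor = peak_lr * final_lr_ratio
    return floor + 0.5 * (peak_lr - floor) * (1.0 + math.cos(math.pi * progress))
```

Keeping the schedule a pure function of the step makes it easy to plot and to resume from a checkpoint without stored scheduler state.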

xGen-small demonstrates competitive performance against leading baselines in its size class. The blend of diverse data types, including low-entropy code, high-entropy natural language, mathematical content, and classifier-filtered high-quality subsets, delivers strong results across evaluation metrics while keeping the model compact and efficient. This approach balances processing efficiency with the robust performance that enterprise applications require.

Performance evaluations highlight xGen-small’s long-context capabilities, with the 9B model achieving state-of-the-art results on the RULER benchmark and the 4B model securing second place in its class. Unlike competitors whose performance degrades significantly at extended context lengths, xGen-small maintains consistent performance from 4K to 128K tokens. This stability comes from a length-extension strategy that uses two-stage extension (32K, then 128K), over-length training to 256K, and sequence parallelism to manage memory constraints, delivering reliable performance across the entire context range.
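The sequence lengths above can be turned into a rough arithmetic sketch of why sequence parallelism helps: sharding a sequence across devices divides the number of tokens each device must hold. The code below is illustrative only. It assumes that "K" means 1,024 tokens, that over-length training can be modelled as a separate third step, and that sequences are split evenly across devices; the class and function names are hypothetical.

```python
from dataclasses import dataclass

K = 1024  # assumption: "32K" means 32 * 1024 tokens


@dataclass(frozen=True)
class ExtensionStage:
    name: str
    train_seq_len: int


# Lengths taken from the article; modelling over-length training as its own
# step is an assumption made for this sketch.
STAGES = [
    ExtensionStage("stage-1 extension", 32 * K),
    ExtensionStage("stage-2 extension", 128 * K),
    ExtensionStage("over-length training", 256 * K),
]


def tokens_per_device(seq_len: int, num_devices: int) -> int:
    """Tokens each device holds when one sequence is split evenly across devices."""
    if num_devices <= 0 or seq_len % num_devices != 0:
        raise ValueError("seq_len must divide evenly across a positive device count")
    return seq_len // num_devices


def shard_plan(num_devices: int) -> dict:
    """Per-device sequence shard size for every stage."""
    return {
        stage.name: tokens_per_device(stage.train_seq_len, num_devices)
        for stage in STAGES
    }
```

For example, `shard_plan(8)` shows that with eight devices, a 256K-token training sequence leaves each device with a 32K-token shard, the same number of tokens a single device would hold for an unsharded stage-1 sequence.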

Post-training transforms xGen-small base models into comprehensive instruction models through a two-stage process. First, supervised fine-tuning uses a diverse, high-quality instruction dataset spanning mathematics, coding, safety, and general-purpose domains to establish core behaviours and alignment. Subsequently, large-scale reinforcement learning refines the model’s policy, particularly enhancing reasoning capabilities. This approach delivers exceptional performance in complex reasoning domains like mathematics, coding, and STEM applications while maintaining consistent instruction-following abilities across general tasks.

The development of xGen-small demonstrates that deliberately constraining model size while extending context capacity creates optimal solutions for enterprise AI applications. This “small but long” approach significantly reduces inference costs and hardware requirements while enabling seamless processing of extensive internal knowledge sources without external retrieval dependencies. Through an integrated pipeline of meticulous data curation, scalable pre-training, targeted length-extension, and reinforcement learning, these compact models match or exceed larger counterparts’ performance. This architecture provides businesses with a predictable, sustainable, cost-effective, and privacy-preserving framework for deploying AI at enterprise scale.

Check out the model on Hugging Face and the technical details.

The post Enterprise AI Without GPU Burn: Salesforce’s xGen-small Optimizes for Context, Cost, and Privacy appeared first on MarkTechPost.