LLMs have improved their reasoning capabilities through Reinforcement Learning with Verifiable Rewards (RLVR), which relies on outcome-based feedback rather than imitation of intermediate reasoning steps. Current RLVR approaches face critical scalability challenges because they depend heavily on manually curated collections of questions and answers for training. As reasoning models advance, constructing large-scale, high-quality datasets becomes increasingly unsustainable, similar to the bottlenecks identified in LLM pretraining. Moreover, exclusive dependence on human-designed tasks may constrain AI systems’ capacity for autonomous learning and development, especially as they evolve beyond human intellectual capabilities.

Researchers have explored various approaches to enhance LLM reasoning capabilities. STaR pioneered self-bootstrapping using expert iteration and rejection sampling of outcome-verified responses to improve CoT reasoning. The o1 model deployed this concept at scale, achieving state-of-the-art results, and R1 later became the first open-weight model to match or surpass o1’s performance by introducing the “zero” setting where RL is applied directly to the base LLM. Further, self-play paradigms have evolved from Schmidhuber’s early two-agent setups to more complex implementations like AlphaGo and AlphaZero. Recent methods such as SPIN, Self-Rewarding Language Models, SPC, and SPAG have applied self-play to language models for alignment and reasoning.

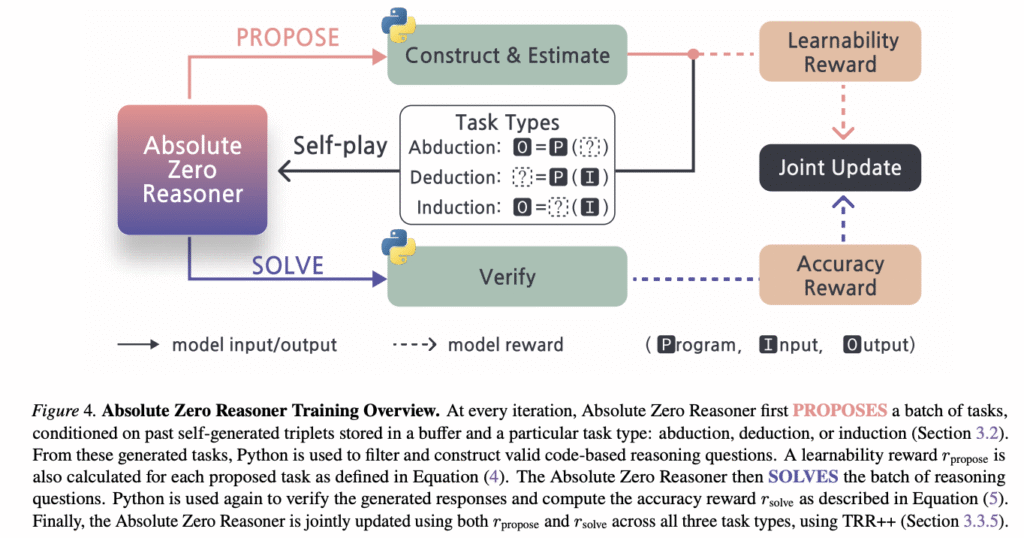

Researchers from Tsinghua University, Beijing Institute for General Artificial Intelligence, and Pennsylvania State University have proposed an RLVR paradigm called Absolute Zero, in which a single model autonomously generates and solves tasks that maximize its own learning progress, without relying on any external data. Within this paradigm, they introduce the Absolute Zero Reasoner (AZR), which self-evolves its training curriculum and reasoning ability. A code executor validates the proposed code reasoning tasks and verifies the answers, providing a unified source of verifiable reward to guide open-ended yet grounded learning. AZR can be implemented across different model scales and remains compatible with various model classes, suggesting broad applicability.
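To make the executor's two roles concrete, here is a minimal sketch of validating a proposed task and verifying a solver's answer. It is not AZR's implementation: the function names (`run_program`, `validate_task`, `verify_answer`), the convention that a task is a program defining `f(x)` plus an input, and the determinism check are all assumptions made for illustration, and the `exec` call is unsandboxed.

```python
from typing import Any, Optional


def run_program(program: str, arg: Any) -> Any:
    """Execute `program` (assumed to define f(x)) and return f(arg)."""
    namespace: dict = {}
    # Illustration only: a real executor would isolate untrusted code.
    exec(program, namespace)
    return namespace["f"](arg)


def validate_task(program: str, arg: Any, trials: int = 2) -> Optional[Any]:
    """Return the reference output if the task is usable, else None.

    A task is treated as usable when the program runs without error and
    returns the same output on every trial (an assumed validity rule).
    """
    try:
        outputs = [run_program(program, arg) for _ in range(trials)]
    except Exception:
        return None  # syntax errors, missing f, runtime errors
    if any(out != outputs[0] for out in outputs[1:]):
        return None  # non-deterministic programs cannot be verified
    return outputs[0]


def verify_answer(reference: Any, predicted: Any) -> float:
    """Binary verifiable reward: 1.0 on an exact match, else 0.0."""
    return 1.0 if predicted == reference else 0.0


# A hypothetical proposed task: program plus input.
proposed_program = "def f(x):\n    return sorted(x)[::-1]"
reference = validate_task(proposed_program, [3, 1, 2])
reward = verify_answer(reference, [3, 2, 1])
```

Because the reference output comes from running the program rather than from a human label, the same executor can both reject broken proposals and score solutions.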

LLMs provide a natural framework for implementing AZR in a multitask learning setting. In each online rollout iteration of the Absolute Zero objective, AZR first proposes new reasoning tasks, conditioned on the task type and on past self-generated examples and explicitly prompted to be diverse. It then attempts to solve them and receives grounded feedback on its responses. AZR uses a code executor as both a flexible interface and a verifiable environment, enabling automatic construction, execution, and validation of code reasoning tasks. The AZR algorithm comprises buffer initialization, task proposal inputs and buffer management, valid task construction, solution validation, and advantage estimation with Task-Relative REINFORCE++.
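The article names Task-Relative REINFORCE++ but gives no formula. The sketch below assumes that "task-relative" means normalizing each reward against the mean and standard deviation of its own (task type, role) group rather than against one global baseline. The function name, the tuple layout, and the group labels are hypothetical placeholders, not taken from the AZR code.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import List, Tuple

# (task_type, role, reward); labels are illustrative placeholders.
Sample = Tuple[str, str, float]


def task_relative_advantages(samples: List[Sample], eps: float = 1e-8) -> List[float]:
    """Normalize each reward within its own (task_type, role) group."""
    groups = defaultdict(list)
    for task_type, role, reward in samples:
        groups[(task_type, role)].append(reward)

    # Per-group baseline (mean) and scale (population standard deviation).
    stats = {key: (mean(vals), pstdev(vals)) for key, vals in groups.items()}

    advantages = []
    for task_type, role, reward in samples:
        mu, sigma = stats[(task_type, role)]
        # eps avoids division by zero when a group has identical rewards.
        advantages.append((reward - mu) / (sigma + eps))
    return advantages


batch = [
    ("type_a", "solve", 1.0),
    ("type_a", "solve", 0.0),
    ("type_b", "propose", 0.5),
    ("type_b", "propose", 0.5),
]
advantages = task_relative_advantages(batch)
```

Under this assumption, a reward is judged only against other samples from the same task type and role, so an easy task type does not inflate the baseline for a harder one.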

The Absolute Zero Reasoner-Coder-7B has achieved state-of-the-art performance in the 7B overall average and coding average categories, surpassing previous best models by 1.8 absolute percentage points despite being entirely out-of-distribution for both math and code reasoning benchmarks. It outperforms models trained with expert-curated human data in coding by 0.3 absolute percentage points while never accessing such data itself. Scaling analysis reveals that AZR delivers greater gains on larger models, with the 7B and 14B models continuing to improve beyond 200 training steps while the 3B model plateaus. Out-of-distribution performance gains increase with model size: +5.7, +10.2, and +13.2 for 3B, 7B, and 14B, respectively.

In conclusion, the researchers introduced the Absolute Zero paradigm to address data limitations in existing RLVR frameworks. Within it, they presented AZR, which trains models to propose and solve code-related reasoning tasks grounded by a code executor. One limitation concerns safety management in self-improving systems: the team observed several instances of safety-concerning CoT reasoning from the Llama-3.1-8B model, termed “uh-oh moments.” The findings indicate that while the Absolute Zero paradigm reduces the need for human intervention in task curation, ongoing oversight remains necessary to address lingering safety concerns, highlighting a critical direction for future research.

The post AI That Teaches Itself: Tsinghua University’s ‘Absolute Zero’ Trains LLMs With Zero External Data appeared first on MarkTechPost.
