Recent advances in large language models (LLMs) have significantly improved natural language understanding, reasoning, and generation. These models now excel at diverse tasks such as mathematical problem-solving and generating contextually appropriate text. However, a persistent challenge remains: LLMs often produce hallucinations, responses that are fluent but factually incorrect. Hallucinations undermine the reliability of LLMs, especially in high-stakes domains, creating an urgent need for effective detection mechanisms. Using LLMs themselves to detect hallucinations seems promising, but empirical evidence suggests that LLM-based detectors fall short of human judgment and typically need external, annotated feedback to perform better. This raises a fundamental question: is automated hallucination detection intrinsically difficult, or could it become more feasible as models improve?

Both theoretical and empirical studies have tried to answer this question. Building on the classic Gold-Angluin framework for language identification and its recent adaptations to language generation, researchers have analyzed whether reliable and representative generation is achievable under various constraints. Some studies highlight the intrinsic complexity of hallucination detection, linking it to limitations of model architectures, such as transformers' struggles with function composition at scale. On the empirical side, methods such as SelfCheckGPT assess the consistency of sampled responses, while others use internal model states and supervised learning to flag hallucinated content. Although supervised approaches trained on labeled data significantly improve detection, current LLM-based detectors still struggle without robust external guidance. These findings suggest that, while progress is being made, fully automated hallucination detection may face inherent theoretical and practical barriers.
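
SelfCheckGPT's premise is that when a model actually knows a fact, independently sampled answers tend to agree, whereas hallucinated details tend to vary across samples. The sketch below illustrates that idea with a deliberately crude stand-in score: the token overlap between each sentence of a response and several resampled answers. The real method uses stronger scorers (for example BERTScore, question answering, n-gram models, or NLI). The function names, the overlap score, and the 0.5 threshold are assumptions for illustration only, not SelfCheckGPT's implementation.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive split on sentence-ending punctuation followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric word tokens (a crude stand-in for real tokenization)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(sentence: str, samples: list[str]) -> float:
    """Average, over samples, of the fraction of the sentence's tokens found in that sample.

    Assumed stand-in score: 1.0 means every sample repeats the sentence's words,
    0.0 means no sample does.
    """
    tokens = tokenize(sentence)
    if not tokens or not samples:
        return 0.0
    overlaps = [len(tokens & tokenize(s)) / len(tokens) for s in samples]
    return sum(overlaps) / len(overlaps)


def flag_inconsistent(response: str, samples: list[str], threshold: float = 0.5) -> list[str]:
    """Return the sentences of `response` whose support falls below a hypothetical threshold."""
    return [s for s in split_sentences(response) if support_score(s, samples) < threshold]


if __name__ == "__main__":
    # Toy data: the second sentence is an invented detail the samples never repeat.
    response = "Paris is the capital of France. It was founded in 1850."
    samples = [
        "Paris is the capital of France.",
        "France's capital city is Paris, an ancient city.",
        "The capital of France is Paris.",
    ]
    print(flag_inconsistent(response, samples))  # ['It was founded in 1850.']
```

Token overlap ignores meaning (paraphrases score low, contradictions that reuse words score high), which is exactly why the published method relies on model-based scorers.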

Researchers at Yale University present a theoretical framework for assessing whether hallucinations in LLM outputs can be detected automatically. Drawing on the Gold-Angluin model of language identification, they show that hallucination detection, deciding whether an LLM's outputs belong to a correct language K, is equivalent to the classical problem of identifying K itself. Their key finding is that, for most collections of candidate languages, detection is impossible when the detector is trained only on correct (positive) examples. However, when negative examples (explicitly labeled hallucinations) are also provided, detection becomes feasible. This underscores the necessity of expert-labeled feedback and supports methods such as reinforcement learning from human feedback (RLHF) for improving LLM reliability.

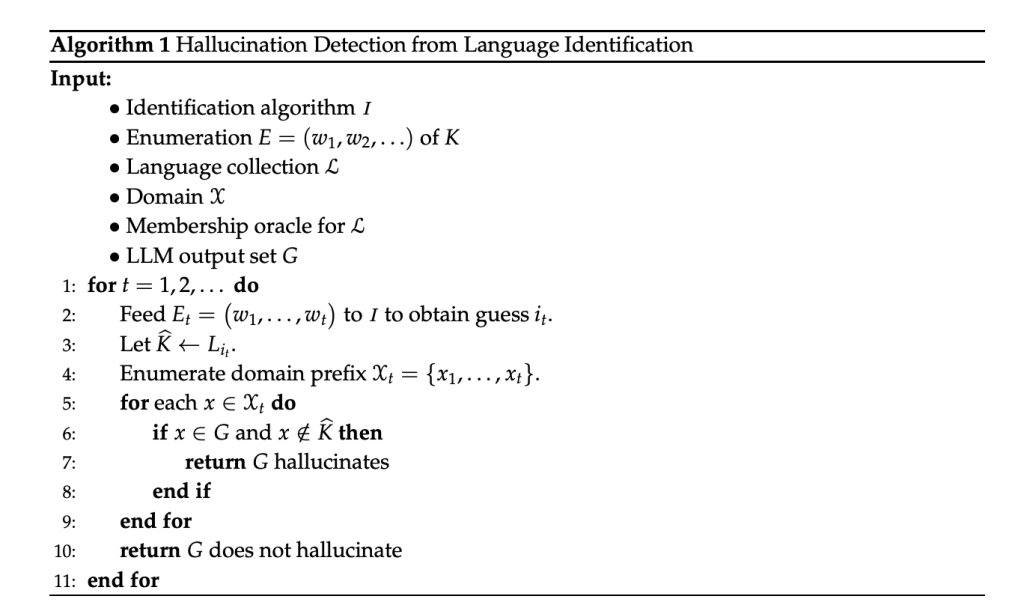

The argument has two parts. First, the authors show that any algorithm that identifies a language in the limit can be turned into one that detects hallucinations in the limit: the identification algorithm supplies a guess for the target language that is refined over time, and the LLM's outputs are compared against that guess. If an output falls outside the guessed language, a hallucination is flagged. Second, they prove the converse, that language identification is no harder than hallucination detection. Combining a consistency check with a hallucination detector, the algorithm identifies the correct language by ruling out candidates that are inconsistent with the observed examples or that the detector flags as hallucinating, ultimately selecting the smallest consistent, non-hallucinating language.
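
Both reductions can be made concrete with a minimal Python sketch under heavily simplifying assumptions that are ours, not the paper's: languages are membership predicates over the natural numbers, the hypothetical candidate collection is finite and listed so that every subset comes before its supersets (which lets "guess the first consistent language" identify any target in the limit from positive examples), and the LLM is represented by a finite list of its outputs. All names (`COLLECTION`, `identify`, `detect_from_identifier`, `identify_from_detector`, `bound`) are illustrative, and "smallest" is read here as "first in the listing".

```python
from typing import Callable

Language = Callable[[int], bool]  # membership oracle over the natural numbers
Detector = Callable[[list[int], list[int]], bool]  # (positives, llm_outputs) -> hallucinating?

# Hypothetical toy collection, ordered so every subset comes before its supersets.
COLLECTION: list[tuple[str, Language]] = [
    ("mult6", lambda n: n % 6 == 0),
    ("mult3", lambda n: n % 3 == 0),
    ("mult2", lambda n: n % 2 == 0),
    ("all", lambda n: True),
]


def identify(positives: list[int]) -> str:
    """Guess the first language consistent with every positive example so far.

    In this subsets-first finite listing, the guess stops changing once the sample
    contains, for every candidate listed before the target, an element it lacks.
    """
    for name, member in COLLECTION:
        if all(member(x) for x in positives):
            return name
    raise ValueError("no language in the collection is consistent")


def detect_from_identifier(positives: list[int], llm_outputs: list[int]) -> bool:
    """Identification -> detection: flag a hallucination if any LLM output lies
    outside the language currently guessed by the identifier."""
    member = dict(COLLECTION)[identify(positives)]
    return any(not member(y) for y in llm_outputs)


def identify_from_detector(positives: list[int], detector: Detector, bound: int = 50) -> str:
    """Detection -> identification: return the first candidate that is consistent
    with the positives and that the detector does not flag when the candidate's
    own elements below the hypothetical cutoff `bound` play the LLM's outputs."""
    for name, member in COLLECTION:
        if not all(member(x) for x in positives):
            continue  # ruled out: inconsistent with the observed examples
        outputs = [n for n in range(bound) if member(n)]
        if not detector(positives, outputs):
            return name  # consistent and not flagged as hallucinating
    raise ValueError("every candidate was ruled out")


if __name__ == "__main__":
    positives = [0, 3, 6, 9]  # a prefix of a presentation of the multiples of 3
    print(identify(positives))  # mult3
    print(detect_from_identifier(positives, [3, 4]))  # True: 4 lies outside the guess
    print(identify_from_detector(positives, detect_from_identifier))  # mult3
```

Passing `detect_from_identifier` back into `identify_from_detector` only exercises the interface; any function with the same `(positives, llm_outputs) -> bool` signature could be plugged in.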

The study defines a formal model in which a learner interacts with an adversary and must detect hallucinations, that is, statements outside a target language, from a sequence of examples. Each target language is a subset of a countable domain, and the learner observes elements over time while querying the candidate languages for membership. The main result shows that detecting hallucinations in the limit is exactly as hard as identifying the correct language, so Angluin's characterization of which collections are identifiable from positive examples also determines when detection is possible. However, if the learner also receives labeled examples indicating whether each item belongs to the language, hallucination detection becomes achievable for every countable collection of languages.
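
The gap between positive-only and labeled data shows up on a standard toy collection: the set of all natural numbers together with every finite initial segment {0, ..., n}. No learner identifies this collection in the limit from positive examples alone (intuitively, any finite sample drawn from the full set is also consistent with some finite segment), so by the equivalence above no positive-only detector works for it either. With labeled examples, the classic identification-by-enumeration strategy (guess the first language in a fixed enumeration that agrees with every label seen so far) converges for any countable collection with membership tests, because each wrong candidate listed before the target eventually contradicts some label. The sketch below contrasts the two regimes; the collection, function names, and `limit` cutoff are hypothetical illustrations, not the paper's construction.

```python
from itertools import count, islice
from typing import Callable, Iterator

Language = Callable[[int], bool]  # membership oracle over the natural numbers


def collection() -> Iterator[tuple[str, Language]]:
    """Hypothetical countable collection: all naturals, then {0, ..., n} for n = 0, 1, 2, ..."""
    yield "all", lambda x: True
    for n in count():
        yield f"upto{n}", (lambda x, n=n: x <= n)  # n=n binds the current n


def positive_only_guess(positives: list[int]) -> str:
    """One natural positive-only learner: guess the smallest consistent finite segment."""
    return f"upto{max(positives, default=0)}"


def labeled_guess(examples: list[tuple[int, bool]], limit: int = 10_000) -> tuple[str, Language]:
    """Identification by enumeration with labeled data: return the first language
    that agrees with every (element, is_member) label seen so far.

    `limit` is a practical cutoff on the search; the idealized learner has none.
    """
    for name, member in islice(collection(), limit):
        if all(member(x) == label for x, label in examples):
            return name, member
    raise ValueError("no consistent language within the search limit")


def flags_hallucination(examples: list[tuple[int, bool]], llm_outputs: list[int]) -> bool:
    """Flag a hallucination if any LLM output lies outside the current labeled-data guess."""
    _, member = labeled_guess(examples)
    return any(not member(y) for y in llm_outputs)


if __name__ == "__main__":
    # Labeled stream for the target {0, 1, 2, 3}.
    stream = [(0, True), (5, False), (2, True), (3, True), (4, False)]
    print([labeled_guess(stream[:k])[0] for k in range(1, 6)])
    # ['all', 'upto0', 'upto2', 'upto3', 'upto3']
    print(flags_hallucination(stream, [1, 7]))  # True: 7 is outside the guess
    # Positive-only learner on the presentation 0, 1, 2, ... of "all": never guesses "all".
    print([positive_only_guess(list(range(k + 1))) for k in range(5)])
    # ['upto0', 'upto1', 'upto2', 'upto3', 'upto4']
```

The positive-only learner shown is just one choice; a learner that always guesses "all" would instead fail on every finite target, and the impossibility result says every positive-only learner fails on some presentation. The negative label on 5 is what lets the labeled learner abandon "all" for the right reason.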

In conclusion, the study presents a theoretical framework for analyzing the feasibility of automated hallucination detection in LLMs. The researchers prove that detecting hallucinations is equivalent to the classic language identification problem, which is infeasible for most language collections when only correct examples are available. However, they show that incorporating labeled incorrect (negative) examples makes hallucination detection possible for every countable collection of languages. This highlights the importance of expert feedback, such as RLHF, for improving LLM reliability. Future directions include quantifying how much negative data is required, handling noisy labels, and exploring relaxed detection goals based on hallucination density thresholds.

The post Is Automated Hallucination Detection in LLMs Feasible? A Theoretical and Empirical Investigation appeared first on MarkTechPost.

Source: Read MoreÂ