With Amazon Bedrock Evaluations, you can evaluate foundation models (FMs) and Retrieval Augmented Generation (RAG) systems, whether they are hosted on Amazon Bedrock (including Amazon Bedrock Knowledge Bases) or elsewhere, such as in multi-cloud and on-premises deployments. We recently announced the general availability of the large language model (LLM)-as-a-judge technique in model evaluation and the new RAG evaluation tool, also powered by an LLM-as-a-judge behind the scenes. These enterprise-grade tools already help organizations evaluate FMs and RAG systems systematically. We also mentioned that these evaluation tools don’t have to be limited to models or RAG systems hosted on Amazon Bedrock; with the bring your own inference (BYOI) responses feature, you can evaluate any model or application as long as you follow the input formatting requirements for either offering.

The LLM-as-a-judge technique powering these evaluations enables automated, human-like evaluation quality at scale, using FMs to assess quality and responsible AI dimensions without manual intervention. With built-in metrics like correctness (factual accuracy), completeness (response thoroughness), faithfulness (hallucination detection), and responsible AI metrics such as harmfulness and answer refusal, you and your team can evaluate models hosted on Amazon Bedrock and knowledge bases natively, or using BYOI responses from your custom-built systems.

Amazon Bedrock Evaluations offers an extensive list of built-in metrics for both evaluation tools, but there are times when you might want to define these evaluation metrics in a different way, or make completely new metrics that are relevant to your use case. For example, you might want to define a metric that evaluates an application response’s adherence to your specific brand voice, or want to classify responses according to a custom categorical rubric. You might want to use numerical scoring or categorical scoring for various purposes. For these reasons, you need a way to use custom metrics in your evaluations.

Now with Amazon Bedrock, you can develop custom evaluation metrics for both model and RAG evaluations. This capability extends the LLM-as-a-judge framework that drives Amazon Bedrock Evaluations.

In this post, we demonstrate how to use custom metrics in Amazon Bedrock Evaluations to measure and improve the performance of your generative AI applications according to your specific business requirements and evaluation criteria.

Overview

Custom metrics in Amazon Bedrock Evaluations offer the following features:

- Simplified getting started experience – Pre-built starter templates are available on the AWS Management Console based on our industry-tested built-in metrics, with options to create from scratch for specific evaluation criteria.

- Flexible scoring systems – Support is available for both quantitative (numerical) and qualitative (categorical) scoring to create ordinal metrics, nominal metrics, or even use evaluation tools for classification tasks.

- Streamlined workflow management – You can save custom metrics for reuse across multiple evaluation jobs or import previously defined metrics from JSON files.

- Dynamic content integration – With built-in template variables (for example, {{prompt}}, {{prediction}}, and {{context}}), you can seamlessly inject dataset content and model outputs into evaluation prompts.

- Customizable output control – You can use our recommended output schema for consistent results, with advanced options to define custom output formats for specialized use cases.

Custom metrics give you unprecedented control over how you measure AI system performance, so you can align evaluations with your specific business requirements and use cases. Whether assessing factuality, coherence, helpfulness, or domain-specific criteria, custom metrics in Amazon Bedrock enable more meaningful and actionable evaluation insights.

In the following sections, we walk through the steps to create a job with model evaluation and custom metrics using both the Amazon Bedrock console and the Python SDK and APIs.

Supported data formats

In this section, we review some important data formats.

Judge prompt uploading

To upload your previously saved custom metrics into an evaluation job, follow the JSON format in the following examples.

A definition can use a numerical scale, a string scale, or no scale at all.
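As a minimal sketch of the three variants, the following Python builds each definition as a dictionary and serializes it to JSON. The customMetricDefinition, ratingScale, floatValue, and stringValue keys are named later in this post. The metricName, instructions, definition, and value keys, along with the metric names and prompt text, are assumptions made for illustration; check them against the current Amazon Bedrock documentation before use.

```python
import json


def make_metric(name, instructions, rating_scale=None):
    """Wrap a judge prompt in a customMetricDefinition envelope.

    The inner key names (metricName, instructions) are assumptions.
    """
    definition = {"metricName": name, "instructions": instructions}
    if rating_scale is not None:
        definition["ratingScale"] = rating_scale
    return {"customMetricDefinition": definition}


# Numerical scale: each rating carries a floatValue.
numerical_metric = make_metric(
    "brand_voice_score",  # hypothetical metric name
    "Rate how well the response follows our brand voice.\n"
    "Prompt: {{prompt}}\nResponse: {{prediction}}",
    [
        {"definition": "Off brand", "value": {"floatValue": 0}},
        {"definition": "Partly on brand", "value": {"floatValue": 1}},
        {"definition": "Fully on brand", "value": {"floatValue": 2}},
    ],
)

# String scale: each rating carries a stringValue (categorical label).
string_metric = make_metric(
    "request_category",  # hypothetical metric name
    "Classify the response.\nResponse: {{prediction}}",
    [
        {"definition": "Talks about billing", "value": {"stringValue": "billing"}},
        {"definition": "Anything else", "value": {"stringValue": "other"}},
    ],
)

# No scale: the rating scale has to be spelled out in the prompt itself.
no_scale_metric = make_metric(
    "free_form_review",  # hypothetical metric name
    "Review the response and answer with 'pass' or 'fail'.\n"
    "Response: {{prediction}}",
)

if __name__ == "__main__":
    print(json.dumps(numerical_metric, indent=2))
```

Saving the output of json.dumps to a file gives one candidate JSON document per metric.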

For more information on defining a judge prompt with no scale, see the best practices section later in this post.

Model evaluation dataset format

When using LLM-as-a-judge, only one model can be evaluated per evaluation job. Consequently, you must provide a single entry in the modelResponses list for each evaluation, though you can run multiple evaluation jobs to compare different models. The modelResponses field is required for BYOI jobs, but not needed for non-BYOI jobs. The following is the input JSONL format for LLM-as-a-judge in model evaluation. Fields marked with ? are optional.
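As a rough sketch of how such a dataset could be produced, the following Python writes one JSON object per line. The prompt, referenceResponse, and modelResponses keys come from this post; the category key and the response and modelIdentifier keys inside modelResponses are assumptions, as are the sample values.

```python
import json


def make_record(prompt, reference_response=None, category=None,
                response=None, model_identifier=None):
    """Build one dataset row; optional fields are left out when not given."""
    record = {"prompt": prompt}
    if reference_response is not None:
        record["referenceResponse"] = reference_response
    if category is not None:
        record["category"] = category  # assumed optional key
    if response is not None:
        # BYOI jobs: exactly one entry, because one model is judged per job.
        record["modelResponses"] = [
            {"response": response, "modelIdentifier": model_identifier}
        ]
    return record


def to_jsonl(records):
    """Serialize records as JSONL: one compact JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


byoi_rows = [
    make_record(
        "What is the capital of France?",
        reference_response="Paris",
        response="The capital of France is Paris.",
        model_identifier="my-external-model",  # hypothetical label
    )
]
non_byoi_rows = [make_record("What is the capital of France?")]

if __name__ == "__main__":
    print(to_jsonl(byoi_rows))
```

For a non-BYOI job, the same helper is called without a response, so the modelResponses list is omitted.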

RAG evaluation dataset format

We updated the evaluation job input dataset format to be even more flexible for RAG evaluation. Now, you can bring referenceContexts, which are expected retrieved passages, so you can compare your actual retrieved contexts to your expected retrieved contexts. You can find the new referenceContexts field in the updated JSONL schema for RAG evaluation:
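The sketch below shows how a RAG record with expected passages might be assembled in Python. Only the prompt, referenceResponses, and referenceContexts names come from this post. The conversationTurns wrapper, the content/text nesting, and the output key are assumptions about the schema, so verify the exact shape in the Amazon Bedrock documentation.

```python
import json


def text_block(text):
    """Assumed wrapper shape for a piece of text in the RAG dataset."""
    return {"content": [{"text": text}]}


def make_rag_record(prompt, reference_responses=(), reference_contexts=(),
                    byoi_output=None):
    """Build one RAG dataset row with optional ground truth fields."""
    turn = {"prompt": text_block(prompt)}
    if reference_responses:
        turn["referenceResponses"] = [text_block(t) for t in reference_responses]
    if reference_contexts:
        # Expected retrieved passages, compared against the actual ones.
        turn["referenceContexts"] = [text_block(t) for t in reference_contexts]
    if byoi_output is not None:
        # BYOI only; the caller supplies this structure, it is not modeled here.
        turn["output"] = byoi_output
    return {"conversationTurns": [turn]}


record = make_rag_record(
    "When was the warranty policy last updated?",
    reference_responses=["The policy was last updated in March."],
    reference_contexts=["Warranty policy, revision history: updated in March."],
)

if __name__ == "__main__":
    print(json.dumps(record))
```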

Variables for data injection into judge prompts

To make sure that your data is injected into the judge prompts in the right place, use the variables from the following tables. We have also included a guide to show you where the evaluation tool will pull data from your input file, if applicable. If you bring your own inference responses to the evaluation job, we use that data from your input file; if you don’t, we call the Amazon Bedrock model or knowledge base and prepare the responses for you.

The following table summarizes the variables for model evaluation.

| Plain name | Variable | Input dataset JSONL key | Mandatory or optional |
|---|---|---|---|
| Prompt | {{prompt}} | prompt | Optional |
| Response | {{prediction}} | For a BYOI job: read from your input file. If you don’t bring your own inference responses, the evaluation job will call the model and prepare this data for you. | Mandatory |
| Ground truth response | {{ground_truth}} | referenceResponse | Optional |

The following table summarizes the variables for RAG evaluation (retrieve only).

| Plain name | Variable | Input dataset JSONL key | Mandatory or optional |
|---|---|---|---|
| Prompt | {{prompt}} | prompt | Optional |
| Ground truth response | {{ground_truth}} | For a BYOI job: read from your input file. If you don’t bring your own inference responses, the evaluation job will call the Amazon Bedrock knowledge base and prepare this data for you. | Optional |
| Retrieved passage | {{context}} | For a BYOI job: read from your input file. If you don’t bring your own inference responses, the evaluation job will call the Amazon Bedrock knowledge base and prepare this data for you. | Mandatory |
| Ground truth retrieved passage | {{reference_contexts}} | referenceContexts | Optional |

The following table summarizes the variables for RAG evaluation (retrieve and generate).

| Plain name | Variable | Input dataset JSONL key | Mandatory or optional |
|---|---|---|---|
| Prompt | {{prompt}} | prompt | Optional |
| Response | {{prediction}} | For a BYOI job: read from your input file. If you don’t bring your own inference responses, the evaluation job will call the Amazon Bedrock knowledge base and prepare this data for you. | Mandatory |
| Ground truth response | {{ground_truth}} | referenceResponses | Optional |
| Retrieved passage | {{context}} | For a BYOI job: read from your input file. If you don’t bring your own inference responses, the evaluation job will call the Amazon Bedrock knowledge base and prepare this data for you. | Optional |
| Ground truth retrieved passage | {{reference_contexts}} | referenceContexts | Optional |
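To check locally that a judge prompt uses the variables listed above as intended, a small preview helper can mimic the substitution. This is only an illustration written for this post: the evaluation service performs the real injection itself, and the function names and the sample prompt here are hypothetical.

```python
import re

# Matches {{name}} placeholders such as {{prompt}} or {{reference_contexts}}.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def variables_used(template):
    """Return the sorted, de-duplicated variable names in a judge prompt."""
    return sorted(set(PLACEHOLDER.findall(template)))


def missing_mandatory(template, mandatory):
    """Return the mandatory variables that the judge prompt never references."""
    used = set(PLACEHOLDER.findall(template))
    return [name for name in mandatory if name not in used]


def render_judge_prompt(template, values):
    """Replace each {{name}} with its value, failing loudly on unknown names."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError("no value supplied for variable " + name)
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


judge_prompt = (
    "Question: {{prompt}}\n"
    "Answer: {{prediction}}\n"
    "Is the answer comprehensive?"
)

if __name__ == "__main__":
    print(variables_used(judge_prompt))
    print(render_judge_prompt(
        judge_prompt,
        {"prompt": "What is RAG?", "prediction": "Retrieval Augmented Generation."},
    ))
```

For model evaluation, calling missing_mandatory(judge_prompt, ["prediction"]) should return an empty list before you submit the metric.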

Prerequisites

To use the LLM-as-a-judge model evaluation and RAG evaluation features with BYOI, you must have the following prerequisites:

- AWS account and model access:

  - An active AWS account

  - Selected evaluator and generator models enabled in Amazon Bedrock (verify on the Model access page of the Amazon Bedrock console)

  - Confirmed AWS Regions where the models are available and their quotas

- AWS Identity and Access Management (IAM) and Amazon Simple Storage Service (Amazon S3) configuration:

  - Completed IAM setup and permissions for both model and RAG evaluation

  - Configured S3 bucket with appropriate permissions for accessing and writing output data

  - Enabled CORS on your S3 bucket
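The CORS prerequisite can be scripted. The following sketch uses the boto3 S3 client's put_bucket_cors call. The rule shown is a permissive example and an assumption on our part, not a requirement stated in this post, so tighten the allowed origins and methods to match your own security policy. The bucket name is a placeholder.

```python
def build_cors_configuration():
    """Return an example CORS configuration (assumed, deliberately permissive)."""
    return {
        "CORSRules": [
            {
                "AllowedHeaders": ["*"],
                "AllowedMethods": ["GET", "PUT", "POST", "DELETE"],
                "AllowedOrigins": ["*"],
                "ExposeHeaders": ["Access-Control-Allow-Origin"],
            }
        ]
    }


def enable_cors(bucket_name, s3_client=None):
    """Apply the example CORS configuration to the given bucket."""
    if s3_client is None:
        import boto3  # imported lazily so the builder works without boto3

        s3_client = boto3.client("s3")
    s3_client.put_bucket_cors(
        Bucket=bucket_name,
        CORSConfiguration=build_cors_configuration(),
    )


if __name__ == "__main__":
    # Placeholder bucket name; requires AWS credentials to run.
    enable_cors("my-evaluation-bucket")
```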

Create a model evaluation job with custom metrics using Amazon Bedrock Evaluations

Complete the following steps to create a job with model evaluation and custom metrics using Amazon Bedrock Evaluations:

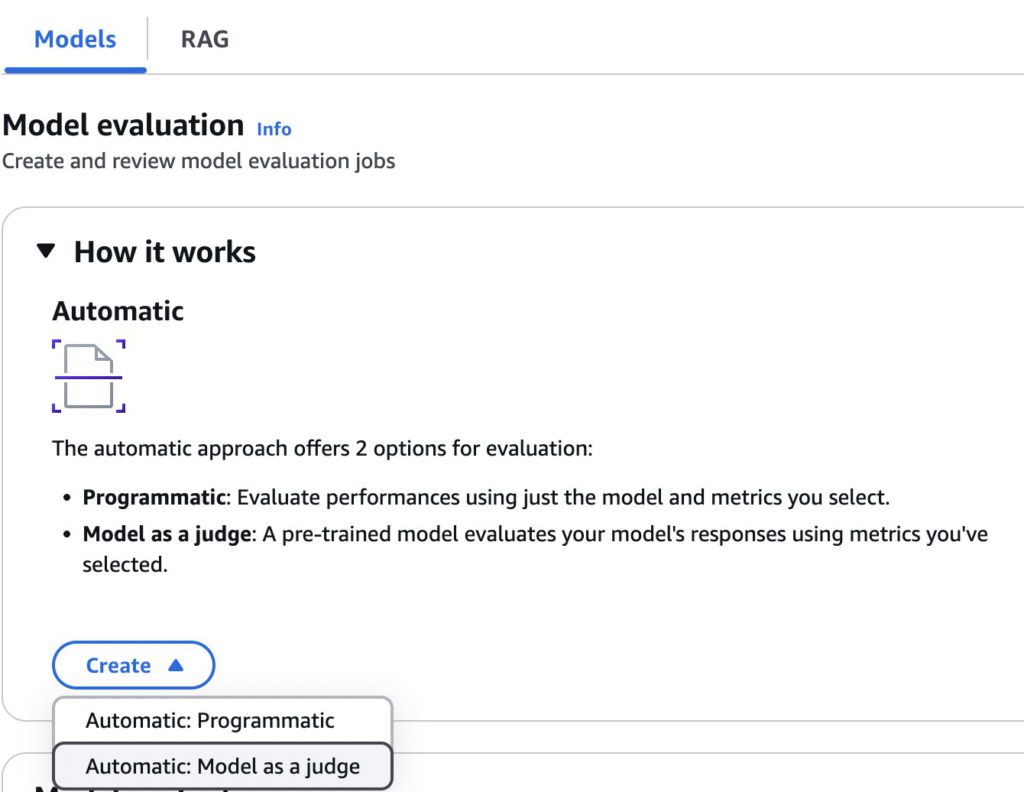

- On the Amazon Bedrock console, choose Evaluations in the navigation pane and choose the Models tab.

- In the Model evaluation section, on the Create dropdown menu, choose Automatic: model as a judge.

- For the Model evaluation details, enter an evaluation name and optional description.

- For Evaluator model, choose the model you want to use for automatic evaluation.

- For Inference source, select the source and choose the model you want to evaluate.

For this example, we chose Claude 3.5 Sonnet as the evaluator model, Bedrock models as our inference source, and Claude 3.5 Haiku as our model to evaluate.

- The console will display the default metrics for the evaluator model you chose. You can select other metrics as needed.

- In the Custom Metrics section, we create a new metric called “Comprehensiveness.” Use the template provided and modify it based on your metrics. You can use the following variables to define the metric, where only {{prediction}} is mandatory: prompt, prediction, and ground_truth.

The following is the metric we defined in full:

- Create the output schema and additional metrics. Here, we define a scale that provides maximum points (10) if the response is very comprehensive, and 1 if the response is not comprehensive at all.

- For Datasets, enter your input and output locations in Amazon S3.

- For Amazon Bedrock IAM role – Permissions, select Use an existing service role and choose a role.

- Choose Create and wait for the job to complete.

Considerations and best practices

When using the output schema of the custom metrics, note the following:

- If you use the built-in output schema (recommended), do not add your grading scale into the main judge prompt. The evaluation service will automatically concatenate your judge prompt instructions with your defined output schema rating scale and some structured output instructions (unique to each judge model) behind the scenes. This is so the evaluation service can parse the judge model’s results and display them on the console in graphs and calculate average values of numerical scores.

- The fully concatenated judge prompts are visible in the Preview window if you are using the Amazon Bedrock console to construct your custom metrics. Because judge LLMs are inherently stochastic, there might be some responses we can’t parse and display on the console and use in your average score calculations. However, the raw judge responses are always loaded into your S3 output file, even if the evaluation service cannot parse the response score from the judge model.

- If you don’t use the built-in output schema feature (we recommend that you do use it), then you are responsible for providing your rating scale in the judge prompt instructions body. In that case, the evaluation service will not add structured output instructions and will not parse the results to show graphs; you will see the full judge output as plaintext on the console without graphs, and the raw data will still be in your S3 bucket.

Create a model evaluation job with custom metrics using the Python SDK and APIs

To use the Python SDK to create a model evaluation job with custom metrics, follow these steps (or refer to our example notebook):

- Set up the required configurations, which should include your model identifier for the default metrics and custom metrics evaluator, IAM role with appropriate permissions, Amazon S3 paths for input data containing your inference responses, and output location for results.

- To define a custom metric for model evaluation, create a JSON structure with a customMetricDefinition object. Include your metric’s name, write detailed evaluation instructions incorporating template variables (such as {{prompt}} and {{prediction}}), and define your ratingScale array with assessment values using either numerical scores (floatValue) or categorical labels (stringValue). This properly formatted JSON schema enables Amazon Bedrock to evaluate model outputs consistently according to your specific criteria.

- To create a model evaluation job with custom metrics, use the create_evaluation_job API and include your custom metric in the customMetricConfig section, specifying both built-in metrics (such as Builtin.Correctness) and your custom metric in the metricNames array. Configure the job with your generator model, evaluator model, and proper Amazon S3 paths for input dataset and output results.

- After submitting the evaluation job, monitor its status with get_evaluation_job and access results at your specified Amazon S3 location when complete, including the standard and custom metric performance data.
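The steps above can be sketched as follows. The request layout is our best understanding of the boto3 create_evaluation_job parameters and should be treated as an assumption to verify against the API reference. The model identifiers, role ARN, S3 URIs, dataset name, and the example metric are placeholders, and the status strings in the polling helper are likewise assumed.

```python
import time

# Hypothetical custom metric; inner key names are assumptions.
example_metric = {
    "customMetricDefinition": {
        "metricName": "Comprehensiveness",
        "instructions": "Judge how comprehensive the response is.\n"
                        "Prompt: {{prompt}}\nResponse: {{prediction}}",
        "ratingScale": [
            {"definition": "Not comprehensive at all", "value": {"floatValue": 1}},
            {"definition": "Very comprehensive", "value": {"floatValue": 10}},
        ],
    }
}


def build_model_eval_request(job_name, role_arn, evaluator_model,
                             generator_model, input_s3_uri, output_s3_uri,
                             custom_metric):
    """Assemble keyword arguments for create_evaluation_job (assumed layout)."""
    metric_name = custom_metric["customMetricDefinition"]["metricName"]
    evaluator = {"bedrockEvaluatorModels": [{"modelIdentifier": evaluator_model}]}
    return {
        "jobName": job_name,
        "roleArn": role_arn,
        "applicationType": "ModelEvaluation",
        "evaluationConfig": {
            "automated": {
                "datasetMetricConfigs": [{
                    "taskType": "General",
                    "dataset": {
                        "name": "ModelEvalDataset",
                        "datasetLocation": {"s3Uri": input_s3_uri},
                    },
                    # Built-in and custom metrics are listed side by side.
                    "metricNames": ["Builtin.Correctness", metric_name],
                }],
                "customMetricConfig": {
                    "customMetrics": [custom_metric],
                    "evaluatorModelConfig": evaluator,
                },
                "evaluatorModelConfig": evaluator,
            }
        },
        "inferenceConfig": {
            "models": [{"bedrockModel": {"modelIdentifier": generator_model}}]
        },
        "outputDataConfig": {"s3Uri": output_s3_uri},
    }


def wait_for_job(client, job_arn, poll_seconds=30, max_polls=240,
                 sleep=time.sleep):
    """Poll get_evaluation_job until a terminal status (names assumed)."""
    for _ in range(max_polls):
        status = client.get_evaluation_job(jobIdentifier=job_arn)["status"]
        if status in ("Completed", "Failed", "Stopped"):
            return status
        sleep(poll_seconds)
    raise TimeoutError("evaluation job did not finish in time")


request = build_model_eval_request(
    "custom-metric-model-eval", "<role-arn>", "<evaluator-model-id>",
    "<generator-model-id>", "s3://<bucket>/input.jsonl",
    "s3://<bucket>/output/", example_metric,
)
```

With credentials configured, the request would be submitted with client = boto3.client("bedrock") and job = client.create_evaluation_job(**request), after which wait_for_job(client, job["jobArn"]) blocks until the job ends.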

Create a RAG system evaluation with custom metrics using Amazon Bedrock Evaluations

In this example, we walk through a RAG system evaluation with a combination of built-in metrics and custom evaluation metrics on the Amazon Bedrock console. Complete the following steps:

- On the Amazon Bedrock console, choose Evaluations in the navigation pane.

- On the RAG tab, choose Create.

- For the RAG evaluation details, enter an evaluation name and optional description.

- For Evaluator model, choose the model you want to use for automatic evaluation. The evaluator model selected here will be used to calculate default metrics if selected. For this example, we chose Claude 3.5 Sonnet as the evaluator model.

- Include any optional tags.

- For Inference source, select the source. Here, you have the option to select between Bedrock Knowledge Bases and Bring your own inference responses. If you’re using Amazon Bedrock Knowledge Bases, you will need to choose a previously created knowledge base or create a new one. For BYOI responses, you can bring the prompt dataset, context, and output from a RAG system. For this example, we chose Bedrock Knowledge Base as our inference source.

- Specify the evaluation type, response generator model, and built-in metrics. You can choose between a combined retrieval and response evaluation or a retrieval only evaluation, with options to use default metrics, custom metrics, or both for your RAG evaluation. The response generator model is only required when using an Amazon Bedrock knowledge base as the inference source. For the BYOI configuration, you can proceed without a response generator. For this example, we selected Retrieval and response generation as our evaluation type and chose Nova Lite 1.0 as our response generator model.

- In the Custom Metrics section, choose your evaluator model. We selected Claude 3.5 Sonnet v1 as our evaluator model for custom metrics.

- Choose Add custom metrics.

- Create your new metric. For this example, we create a new custom metric for our RAG evaluation called information_comprehensiveness. This metric evaluates how thoroughly and completely the response addresses the query by using the retrieved information. It measures the extent to which the response extracts and incorporates relevant information from the retrieved passages to provide a comprehensive answer.

- You can choose between importing a JSON file, using a preconfigured template, or creating a custom metric with full configuration control. For example, you can select the preconfigured templates for the default metrics and change the scoring system or rubric. For our information_comprehensiveness metric, we select the custom option, which allows us to input our evaluator prompt directly.

- For Instructions, enter your prompt. For example:

- Enter your output schema to define how the custom metric results will be structured, visualized, normalized (if applicable), and explained by the model.

If you use the built-in output schema (recommended), do not add your rating scale into the main judge prompt. The evaluation service will automatically concatenate your judge prompt instructions with your defined output schema rating scale and some structured output instructions (unique to each judge model) behind the scenes so that your judge model results can be parsed. The fully concatenated judge prompts are visible in the Preview window if you are using the Amazon Bedrock console to construct your custom metrics.

- For Dataset and evaluation results S3 location, enter your input and output locations in Amazon S3.

- For Amazon Bedrock IAM role – Permissions, select Use an existing service role and choose your role.

- Choose Create and wait for the job to complete.

Start a RAG evaluation job with custom metrics using the Python SDK and APIs

To use the Python SDK to create a RAG evaluation job with custom metrics, follow these steps (or refer to our example notebook):

- Set up the required configurations, which should include your model identifier for the default metrics and custom metrics evaluator, IAM role with appropriate permissions, knowledge base ID, Amazon S3 paths for input data containing your inference responses, and output location for results.

- To define a custom metric for RAG evaluation, create a JSON structure with a customMetricDefinition object. Include your metric’s name, write detailed evaluation instructions incorporating template variables (such as {{prompt}}, {{context}}, and {{prediction}}), and define your ratingScale array with assessment values using either numerical scores (floatValue) or categorical labels (stringValue). This properly formatted JSON schema enables Amazon Bedrock to evaluate responses consistently according to your specific criteria.

- To create a RAG evaluation job with custom metrics, use the create_evaluation_job API and include your custom metric in the customMetricConfig section, specifying both built-in metrics (such as Builtin.Correctness) and your custom metric in the metricNames array. Configure the job with your knowledge base ID, generator model, evaluator model, and proper Amazon S3 paths for input dataset and output results.

- After submitting the evaluation job, you can check its status using the get_evaluation_job method and retrieve the results when the job is complete. The output will be stored at the Amazon S3 location specified in the output_path parameter, containing detailed metrics on how your RAG system performed across the evaluation dimensions including custom metrics.
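A RAG job request might be assembled along the following lines. As with any sketch of this kind, the nesting of ragConfigs and knowledgeBaseConfig reflects our understanding of the boto3 create_evaluation_job parameters and is an assumption to confirm in the API reference. The knowledge base ID, model identifiers, role ARN, S3 URIs, and the metric are placeholders.

```python
# Hypothetical RAG metric; inner key names are assumptions.
rag_metric = {
    "customMetricDefinition": {
        "metricName": "information_comprehensiveness",
        "instructions": "Judge how fully the response uses the passages.\n"
                        "Query: {{prompt}}\nPassages: {{context}}\n"
                        "Response: {{prediction}}",
        "ratingScale": [
            {"definition": "Ignores the passages", "value": {"floatValue": 0}},
            {"definition": "Uses all relevant details", "value": {"floatValue": 1}},
        ],
    }
}


def build_rag_eval_request(job_name, role_arn, knowledge_base_id,
                           evaluator_model, generator_model,
                           input_s3_uri, output_path, custom_metric):
    """Assemble keyword arguments for a retrieve-and-generate evaluation."""
    metric_name = custom_metric["customMetricDefinition"]["metricName"]
    evaluator = {"bedrockEvaluatorModels": [{"modelIdentifier": evaluator_model}]}
    return {
        "jobName": job_name,
        "roleArn": role_arn,
        "applicationType": "RagEvaluation",
        "evaluationConfig": {
            "automated": {
                "datasetMetricConfigs": [{
                    "taskType": "General",
                    "dataset": {
                        "name": "RagEvalDataset",
                        "datasetLocation": {"s3Uri": input_s3_uri},
                    },
                    "metricNames": ["Builtin.Correctness", metric_name],
                }],
                "customMetricConfig": {
                    "customMetrics": [custom_metric],
                    "evaluatorModelConfig": evaluator,
                },
                "evaluatorModelConfig": evaluator,
            }
        },
        # The knowledge base retrieves passages; the generator model answers.
        "inferenceConfig": {
            "ragConfigs": [{
                "knowledgeBaseConfig": {
                    "retrieveAndGenerateConfig": {
                        "type": "KNOWLEDGE_BASE",
                        "knowledgeBaseConfiguration": {
                            "knowledgeBaseId": knowledge_base_id,
                            "modelArn": generator_model,
                        },
                    }
                }
            }]
        },
        "outputDataConfig": {"s3Uri": output_path},
    }


rag_request = build_rag_eval_request(
    "custom-metric-rag-eval", "<role-arn>", "<knowledge-base-id>",
    "<evaluator-model-id>", "<generator-model-id>",
    "s3://<bucket>/rag-input.jsonl", "s3://<bucket>/rag-output/", rag_metric,
)
```

Passing rag_request to create_evaluation_job with ** unpacking on a boto3 "bedrock" client would submit the job; results then land under the output_path location.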

Custom metrics are only available for LLM-as-a-judge. At the time of writing, we don’t accept custom AWS Lambda functions or endpoints for code-based custom metric evaluators. Human-based model evaluation has supported custom metric definition since its launch in November 2023.

Clean up

To avoid incurring future charges, delete the S3 bucket, notebook instances, and other resources that were deployed as part of the post.

Conclusion

The addition of custom metrics to Amazon Bedrock Evaluations empowers organizations to define their own evaluation criteria for generative AI systems. By extending the LLM-as-a-judge framework with custom metrics, businesses can now measure what matters for their specific use cases alongside built-in metrics. With support for both numerical and categorical scoring systems, these custom metrics enable consistent assessment aligned with organizational standards and goals.

As generative AI becomes increasingly integrated into business processes, the ability to evaluate outputs against custom-defined criteria is essential for maintaining quality and driving continuous improvement. We encourage you to explore these new capabilities through the Amazon Bedrock console and API examples provided, and discover how personalized evaluation frameworks can enhance your AI systems’ performance and business impact.

About the Authors

Shreyas Subramanian is a Principal Data Scientist and helps customers by using generative AI and deep learning to solve their business challenges using AWS services. Shreyas has a background in large-scale optimization and ML and in the use of ML and reinforcement learning for accelerating optimization tasks.


Adewale Akinfaderin is a Sr. Data Scientist–Generative AI, Amazon Bedrock, where he contributes to cutting edge innovations in foundational models and generative AI applications at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in physics and a doctorate in engineering.


Jesse Manders is a Senior Product Manager on Amazon Bedrock, the AWS Generative AI developer service. He works at the intersection of AI and human interaction with the goal of creating and improving generative AI products and services to meet our needs. Previously, Jesse held engineering team leadership roles at Apple and Lumileds, and was a senior scientist in a Silicon Valley startup. He has an M.S. and Ph.D. from the University of Florida, and an MBA from the University of California, Berkeley, Haas School of Business.


Ishan Singh is a Sr. Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building Generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

