As large language model (LLM) agents gain traction across enterprise and research ecosystems, a foundational gap has emerged: communication. While agents today can autonomously reason, plan, and act, their ability to coordinate with other agents or interface with external tools remains constrained by the absence of standardized protocols. This communication bottleneck not only fragments the agent landscape but also limits scalability, interoperability, and the emergence of collaborative AI systems.

A recent survey by researchers at Shanghai Jiao Tong University and the ANP Community offers what the authors present as the first comprehensive taxonomy and evaluation of protocols for AI agents. The work introduces a principled classification scheme, reviews existing protocol frameworks, and outlines future directions for scalable, secure, and intelligent agent ecosystems.

The Communication Problem in Modern AI Agents

The deployment of LLM agents has outpaced the development of mechanisms that enable them to interact with each other or with external resources. In practice, most agent interactions rely on ad hoc APIs or brittle function-calling paradigms—approaches that lack generalizability, security guarantees, and cross-vendor compatibility.

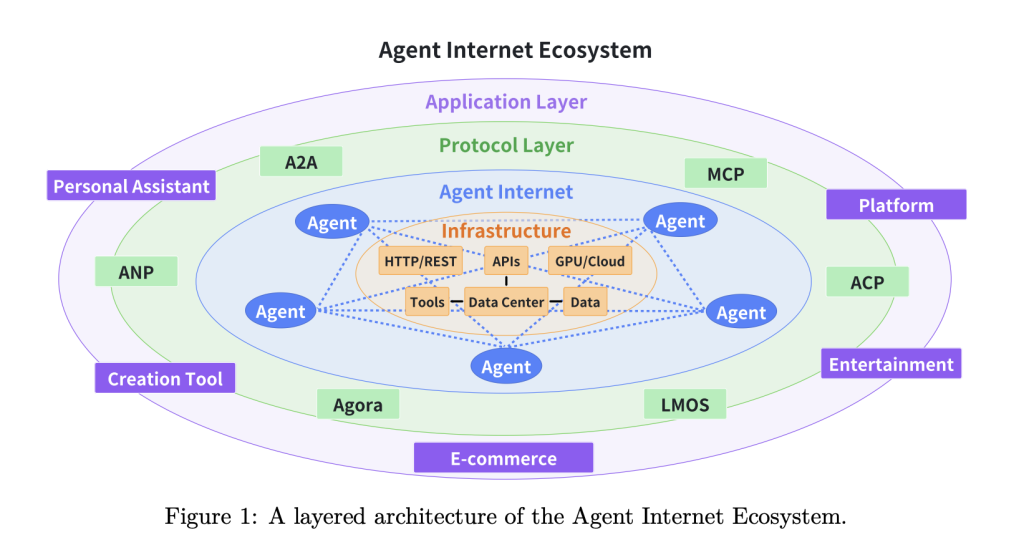

The issue is analogous to the early days of the Internet, where the absence of common transport and application-layer protocols prevented seamless information exchange. Just as TCP/IP and HTTP catalyzed global connectivity, standard protocols for AI agents are poised to serve as the backbone of a future “Internet of Agents.”

A Framework for Agent Protocols: Context vs. Collaboration

The authors propose a two-dimensional classification that places each agent protocol along two axes:

- Context-Oriented vs. Inter-Agent Protocols

  - Context-Oriented Protocols govern how agents interact with external data, tools, or APIs.

  - Inter-Agent Protocols enable peer-to-peer communication, task delegation, and coordination across multiple agents.

- General-Purpose vs. Domain-Specific Protocols

  - General-purpose protocols are designed to operate across diverse environments and agent types.

  - Domain-specific protocols are optimized for particular applications such as human-agent dialogue, robotics, or IoT systems.

This classification helps clarify the design trade-offs across flexibility, performance, and specialization.
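To make the two axes concrete, here is a minimal Python sketch that encodes the taxonomy as a data structure and files three protocols into it. MCP's placement (general-purpose, context-oriented) follows the article's own description; treating A2A and ANP as general-purpose inter-agent protocols is our reading of their descriptions, and the class and function names are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    """First axis: what the agent talks to."""
    CONTEXT = "context-oriented"   # external data, tools, APIs
    INTER_AGENT = "inter-agent"    # other agents


class Scope(Enum):
    """Second axis: how broadly the protocol applies."""
    GENERAL = "general-purpose"
    DOMAIN = "domain-specific"


@dataclass(frozen=True)
class Protocol:
    name: str
    target: Target
    scope: Scope


# MCP's placement is stated in the article; the A2A/ANP scope is our reading.
PROTOCOLS = [
    Protocol("MCP", Target.CONTEXT, Scope.GENERAL),
    Protocol("A2A", Target.INTER_AGENT, Scope.GENERAL),
    Protocol("ANP", Target.INTER_AGENT, Scope.GENERAL),
]


def by_quadrant(protocols):
    """Group protocol names by their (target, scope) cell in the 2x2 grid."""
    grid = {}
    for p in protocols:
        grid.setdefault((p.target, p.scope), []).append(p.name)
    return grid


if __name__ == "__main__":
    for (target, scope), names in by_quadrant(PROTOCOLS).items():
        print(f"{scope.value:16} | {target.value:16} | {', '.join(names)}")
```

Adding a domain-specific protocol is just another `Protocol(...)` entry; the grid makes visible which quadrants a given ecosystem leaves empty.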

Key Protocols and Their Design Principles

1. Model Context Protocol (MCP) – Anthropic

MCP is a general-purpose context-oriented protocol that facilitates structured interaction between LLM agents and external resources. Its architecture decouples reasoning (host agents) from execution (clients and servers), enhancing security and scalability. Notably, MCP mitigates privacy risks by ensuring that sensitive user data is processed locally, rather than embedded directly into LLM-generated function calls.
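MCP messages are framed as JSON-RPC 2.0. The sketch below is a simplified illustration rather than a conforming MCP implementation: a client wraps a `tools/call` request and a server dispatches it to a local function. The tool `lookup_order_status` and its data are hypothetical; the point is that the model only produces a tool name and arguments, while the data the tool reads stays on the server side. Transport, initialization, capability negotiation, and `tools/list` discovery are omitted.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool. The order table lives only on the server side;
# the model never sees it, only the tool name and arguments it emits.
def lookup_order_status(order_id: str) -> str:
    orders = {"A-1001": "shipped", "A-1002": "processing"}
    return orders.get(order_id, "unknown")


TOOLS: Dict[str, Callable[..., Any]] = {"lookup_order_status": lookup_order_status}


def make_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Client side: wrap a tool invocation as a JSON-RPC 2.0 'tools/call' request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def _error(request_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Server side: dispatch a 'tools/call' request to a local function."""
    req = json.loads(raw)
    if req.get("method") != "tools/call":
        return _error(req.get("id"), -32601, "Method not found")  # standard JSON-RPC code
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(req.get("id"), -32602, "Unknown tool")  # JSON-RPC "Invalid params"
    text = tool(**params.get("arguments", {}))
    return json.dumps({
        "jsonrpc": "2.0",
        "id": req["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })


if __name__ == "__main__":
    request = make_tool_call(1, "lookup_order_status", {"order_id": "A-1001"})
    print(handle(request))
```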

2. Agent-to-Agent Protocol (A2A) – Google

Designed for secure and asynchronous collaboration, A2A enables agents to exchange tasks and artifacts in enterprise settings. It emphasizes modularity, multimodal support (e.g., files, streams), and opaque execution: agents collaborate without sharing internal memory, tools, or proprietary logic, which protects intellectual property while still enabling interoperability. The protocol defines standardized entities such as Agent Cards, Tasks, and Artifacts for robust workflow orchestration.
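The sketch below models these three entities as plain Python dataclasses: an Agent Card advertising skills, a Task moving through a lifecycle, and Artifacts returned on completion. The state names mirror those used in A2A's task lifecycle as we understand it, but the field subset and the transition table are our own simplification, not the normative A2A schema. Note that the remote agent's internal execution never appears; the client only observes state changes and artifacts, which mirrors the opaque-execution principle.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Simplified lifecycle (our assumption): terminal states have no outgoing transitions.
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
    TaskState.CANCELED: set(),
}


@dataclass
class AgentCard:
    """Public description a client reads before delegating work (reduced field set)."""
    name: str
    url: str
    skills: List[str]


@dataclass
class Artifact:
    """Output of a task; parts may be text, files, or structured data."""
    name: str
    parts: List[dict]


@dataclass
class Task:
    id: str
    state: TaskState = TaskState.SUBMITTED
    artifacts: List[Artifact] = field(default_factory=list)

    def transition(self, new_state: TaskState) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state

    def complete(self, artifact: Artifact) -> None:
        # Validate the transition first so a rejected call leaves the task unchanged.
        self.transition(TaskState.COMPLETED)
        self.artifacts.append(artifact)


def delegate(card: AgentCard, skill: str, task_id: str) -> Task:
    """Client side: only delegate skills the remote agent advertises."""
    if skill not in card.skills:
        raise LookupError(f"{card.name} does not advertise skill {skill!r}")
    return Task(id=task_id)


if __name__ == "__main__":
    card = AgentCard("report-writer", "https://agent.example.com", ["summarize"])
    task = delegate(card, "summarize", "task-1")
    task.transition(TaskState.WORKING)  # reported by the remote agent
    task.complete(Artifact("summary", [{"type": "text", "text": "(summary text)"}]))
    print(task.state.value, [a.name for a in task.artifacts])
```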

3. Agent Network Protocol (ANP) – Open-Source

ANP envisions a decentralized, web-scale agent network. Built atop decentralized identity (DID) and semantic meta-protocol layers, ANP facilitates trustless, encrypted communication between agents across heterogeneous domains. It introduces layered abstractions for discovery, negotiation, and task execution—positioning itself as a foundation for an open “Internet of Agents.”
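ANP's negotiation step can be illustrated with a toy example: two agents identified by DIDs exchange the application protocols they support and settle on a common one. This is a hypothetical sketch, not ANP's wire format. The DID check only approximates W3C DID syntax, `did:example` is the placeholder method used in W3C examples, and there is no cryptographic authentication or encryption, both of which ANP's identity layer is meant to provide.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Rough approximation of W3C DID syntax: did:<method>:<method-specific-id>
DID_PATTERN = re.compile(r"^did:[a-z0-9]+:[A-Za-z0-9._:%-]+$")


@dataclass(frozen=True)
class AgentDescriptor:
    did: str
    protocols: Tuple[str, ...]  # application protocols, most preferred first


def check_identity(agent: AgentDescriptor) -> None:
    # A real identity layer would verify signatures against the DID document;
    # this toy only checks that the identifier is well-formed.
    if not DID_PATTERN.match(agent.did):
        raise ValueError(f"not a DID: {agent.did!r}")


def negotiate(initiator: AgentDescriptor, responder: AgentDescriptor) -> Optional[str]:
    """Return the initiator's most preferred protocol that the responder also supports."""
    check_identity(initiator)
    check_identity(responder)
    supported = set(responder.protocols)
    for proto in initiator.protocols:
        if proto in supported:
            return proto
    return None  # no overlap: a meta-protocol layer could try to agree on a new one


if __name__ == "__main__":
    alice = AgentDescriptor("did:example:alice", ("hotel-booking/2", "hotel-booking/1"))
    bob = AgentDescriptor("did:example:bob", ("hotel-booking/1",))
    print(negotiate(alice, bob))  # hotel-booking/1
```

Returning `None` on no overlap is where a richer meta-protocol layer would take over, for instance by exchanging protocol descriptions instead of giving up.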

Performance Metrics: A Holistic Evaluation Framework

To assess protocol robustness, the survey introduces a comprehensive framework based on seven evaluation criteria:

- Efficiency – Throughput, latency, and resource utilization (e.g., token cost in LLMs)

- Scalability – Support for increasing agents, dense communication, and dynamic task allocation

- Security – Fine-grained authentication, access control, and context desensitization

- Reliability – Robust message delivery, flow control, and connection persistence

- Extensibility – Ability to evolve without breaking compatibility

- Operability – Ease of deployment, observability, and platform-agnostic implementation

- Interoperability – Cross-system compatibility across languages, platforms, and vendors

This framework reflects both classical network protocol principles and agent-specific challenges such as semantic coordination and multi-turn workflows.
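As one example of turning the Efficiency criterion into numbers, the following sketch computes mean and 95th-percentile latency, throughput, and token cost per message from a log of message exchanges. The `Exchange` record, the nearest-rank percentile, and measuring throughput over the span from first send to last receipt are our own choices; the survey does not prescribe these formulas.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Exchange:
    sent_at: float      # seconds, sender clock
    received_at: float  # seconds, same clock (assumes synchronized timestamps)
    tokens: int         # LLM tokens spent producing/consuming this message


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def efficiency_report(exchanges: List[Exchange]) -> Dict[str, float]:
    if not exchanges:
        raise ValueError("need at least one exchange")
    latencies = [e.received_at - e.sent_at for e in exchanges]
    window = max(e.received_at for e in exchanges) - min(e.sent_at for e in exchanges)
    return {
        "mean_latency_s": mean(latencies),
        "p95_latency_s": percentile(latencies, 95),
        "throughput_msg_per_s": len(exchanges) / window if window > 0 else math.inf,
        "tokens_per_msg": sum(e.tokens for e in exchanges) / len(exchanges),
    }


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of any real protocol.
    log = [Exchange(0.0, 0.5, 100), Exchange(0.5, 1.5, 300), Exchange(1.0, 2.0, 200)]
    print(efficiency_report(log))
```

With the illustrative log above (latencies of 0.5 s, 1.0 s, and 1.0 s over a 2 s window, 600 tokens in total), the report gives a p95 latency of 1.0 s, a throughput of 1.5 messages per second, and 200 tokens per message. The other six criteria would need their own instrumentation.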

Toward Emergent Collective Intelligence

One of the most compelling arguments for protocol standardization lies in the potential for collective intelligence. By aligning communication strategies and capabilities, agents can form dynamic coalitions to solve complex tasks—akin to swarm robotics or modular cognitive systems. Protocols such as Agora take this further by enabling agents to negotiate and adapt new protocols in real time, using LLM-generated routines and structured documents.
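The idea of negotiating protocols through structured documents can be sketched as follows, loosely modeled on Agora's approach of referring to a protocol document by its hash. Everything here is illustrative: the `Agent` class is hypothetical, SHA-256 is our choice of hash, and the "natural-language" path stands in for an LLM that would read the document. A message tagged with a known hash is handled by a fast routine; an unknown one falls back to the slow path, and once a routine is written for that document, later messages take the fast path.

```python
import hashlib
from typing import Callable, Dict, Optional

Routine = Callable[[dict], dict]


def protocol_hash(document: str) -> str:
    """Identify a protocol document by the hash of its text."""
    return hashlib.sha256(document.encode("utf-8")).hexdigest()


class Agent:
    """Hypothetical agent that learns protocols from shared documents."""

    def __init__(self) -> None:
        self.documents: Dict[str, str] = {}
        self.routines: Dict[str, Routine] = {}

    def adopt(self, document: str, routine: Optional[Routine] = None) -> str:
        """Store a protocol document and, optionally, code that implements it."""
        h = protocol_hash(document)
        self.documents[h] = document
        if routine is not None:
            self.routines[h] = routine
        return h

    def receive(self, h: str, payload: dict, document: Optional[str] = None) -> dict:
        # Fast path: a routine already implements this protocol.
        if h in self.routines:
            return {"mode": "routine", "result": self.routines[h](payload)}
        # Learn the document if it was attached and matches the advertised hash.
        if document is not None and protocol_hash(document) == h:
            self.documents[h] = document
        # Slow path: here an LLM would interpret the document and payload.
        return {"mode": "natural-language", "result": None}


if __name__ == "__main__":
    spec = "Request: {city: string}. Response: {temp_c: number}."
    server = Agent()
    h = protocol_hash(spec)
    print(server.receive(h, {"city": "Paris"}, document=spec)["mode"])  # natural-language
    server.adopt(spec, routine=lambda p: {"temp_c": 21.0})  # stub standing in for an LLM-written routine
    print(server.receive(h, {"city": "Paris"})["mode"])  # routine
```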

Similarly, protocols like LOKA embed ethical reasoning and identity management into the communication layer, ensuring that agent ecosystems can evolve responsibly, transparently, and securely.

The Road Ahead: From Static Interfaces to Adaptive Protocols

Looking forward, the authors outline three stages in protocol evolution:

- Short-Term: Transition from rigid function calls to dynamic, evolvable protocols.

- Mid-Term: Shift from rule-based APIs to agent ecosystems capable of self-organization and negotiation.

- Long-Term: Emergence of layered infrastructures that support privacy-preserving, collaborative, and intelligent agent networks.

These trends signal a departure from traditional software design toward a more flexible, agent-native computing paradigm.

Conclusion

The future of AI will not be shaped solely by model architecture or training data—it will be shaped by how agents communicate, coordinate, and learn from one another. Protocols are not merely technical specifications; they are the connective tissue of intelligent systems. By formalizing these communication layers, we unlock the possibility of a decentralized, secure, and interoperable network of agents—an architecture capable of scaling far beyond the capabilities of any single model or framework.

Check out the Paper.

The post Building the Internet of Agents: A Technical Dive into AI Agent Protocols and Their Role in Scalable Intelligence Systems appeared first on MarkTechPost.

[Register Now] miniCON Virtual Conference on AGENTIC AI: FREE REGISTRATION + Certificate of Attendance + 4 Hour Short Event (May 21, 9 am- 1 pm PST) + Hands on Workshop
