Recent progress in large reasoning language models (LRLMs), such as DeepSeek-R1 and OpenAI o1, has greatly improved complex problem-solving by extending the length of chain-of-thought (CoT) generation during inference. These models benefit from test-time scaling, which allows richer and more diverse reasoning paths. However, overly long CoT sequences are computationally inefficient and increase latency, which makes real-world deployment challenging. Excessive reasoning also tends to introduce redundant or irrelevant steps that can pull a model away from a correct answer and reduce accuracy. This overthinking problem stems from traditional supervised fine-tuning and reinforcement learning approaches that do not prioritize dynamic control over reasoning length. The authors of the paper discussed here show that in many cases reasoning could be halted earlier, at what they call “pearl reasoning” points, without sacrificing correctness. Identifying and stopping at these critical points could significantly improve efficiency while maintaining model performance.

Existing approaches to improving inference efficiency generally fall into three categories: post-training, prompt-based, and output-based methods. Post-training techniques retrain models with variable-length CoT examples or length rewards, but they are often computationally intensive and risk overfitting. Prompt-based methods adjust CoT length by modifying the input prompt according to task difficulty, achieving more concise reasoning without sacrificing much accuracy. Output-based methods typically focus on sampling techniques, such as stopping early when multiple outputs converge on the same answer. However, with newer models like R1, reliance on best-of-N sampling has decreased. Recent works have explored early-exit strategies, but they often require separate verification models or are effective only in limited settings. In contrast, the approach discussed here aims to let models recognize optimal stopping points during their own reasoning process, providing a more seamless and generalizable solution.
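To make the output-based idea concrete, here is a minimal sketch of stopping once several sampled outputs agree. It is an illustration only, not taken from the paper: `sample_answer`, `max_samples`, and `min_agree` are hypothetical names, and a real system would call a model where the sketch calls `sample_answer`.

```python
from collections import Counter
from typing import Callable, Tuple


def sample_until_agreement(
    sample_answer: Callable[[], str],  # hypothetical: draws one final answer from a model
    max_samples: int = 8,              # illustrative sampling budget
    min_agree: int = 3,                # illustrative number of matching answers needed
) -> Tuple[str, int]:
    """Draw answers one at a time and stop once one answer has min_agree votes.

    Returns the chosen answer and the number of samples actually drawn.
    """
    if max_samples < 1:
        raise ValueError("max_samples must be at least 1")

    votes: Counter = Counter()
    for drawn in range(1, max_samples + 1):
        votes[sample_answer()] += 1
        answer, count = votes.most_common(1)[0]
        if count >= min_agree:
            # Enough samples converged: skip the remaining budget.
            return answer, drawn

    # Budget exhausted without agreement: fall back to the most frequent answer.
    return votes.most_common(1)[0][0], max_samples
```

Each call to `sample_answer` still pays for a full reasoning trace, which is the cost that exiting early inside a single trace tries to avoid.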

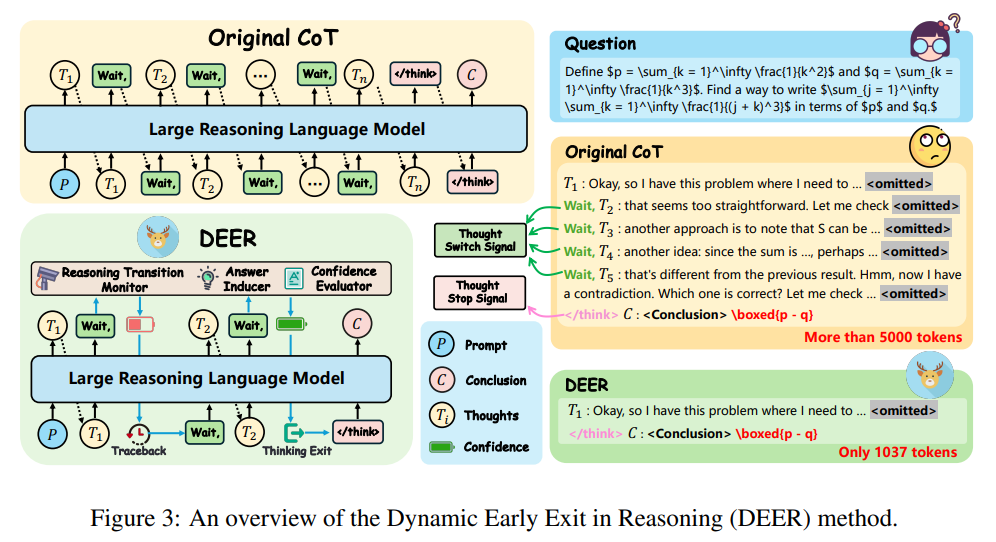

Researchers from the Institute of Information Engineering, the University of Chinese Academy of Sciences, and Huawei Technologies have proposed DEER, a simple, training-free method that enables LRLMs to exit early during reasoning. DEER monitors key transition points, such as the generation of “Wait” tokens, and prompts the model to produce a trial answer at each of them. If the model shows high confidence in that answer, reasoning is halted; otherwise, it continues. The approach requires no changes to existing models such as the DeepSeek-R1-Distill-Qwen series, and it reduces CoT length by 31–43% while improving accuracy by 1.7–5.7% across benchmarks including MATH-500, AIME 2024, and GPQA Diamond.

The DEER (Dynamic Early Exit in Reasoning) method enables large reasoning language models to exit reasoning early by evaluating their confidence in trial answers at key transition points. It uses three modules: a reasoning transition monitor to detect “thought switch” signals, an answer inducer to prompt a trial conclusion, and a confidence evaluator to assess if the reasoning is sufficient. If confidence exceeds a threshold, reasoning stops; otherwise, it continues. To reduce latency from trial answer generation, DEER also employs branch-parallel decoding with dynamic cache management, thereby improving efficiency without sacrificing accuracy, particularly for tasks such as code generation.
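The control flow of the three modules can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: `next_chunk` and `trial_answer` are hypothetical callables standing in for a real model, the confidence score is assumed to be the mean probability of the trial answer's tokens, the `0.95` threshold is illustrative, and branch-parallel decoding with cache management is omitted.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

TRANSITION_MARKER = "Wait"  # example "thought switch" signal named in the article


@dataclass
class ExitResult:
    answer: str
    chunks_used: int
    exited_early: bool


def confidence(token_probs: List[float]) -> float:
    """Assumed scoring rule: mean probability of the trial answer's tokens."""
    if not token_probs:
        return 0.0
    return sum(token_probs) / len(token_probs)


def deer_style_generate(
    next_chunk: Callable[[str], Optional[str]],             # hypothetical: next reasoning chunk, None when done
    trial_answer: Callable[[str], Tuple[str, List[float]]],  # hypothetical: (answer, answer-token probabilities)
    prompt: str,
    threshold: float = 0.95,  # illustrative value
    max_chunks: int = 32,     # illustrative budget
) -> ExitResult:
    context = prompt
    chunks_used = 0
    while chunks_used < max_chunks:
        chunk = next_chunk(context)
        if chunk is None:  # the model finished reasoning on its own
            break
        context += chunk
        chunks_used += 1
        # Reasoning transition monitor: look for a thought-switch signal.
        if TRANSITION_MARKER in chunk:
            # Answer inducer: ask for a trial conclusion from the reasoning so far.
            answer, probs = trial_answer(context)
            # Confidence evaluator: stop if the trial answer is confident enough.
            if confidence(probs) >= threshold:
                return ExitResult(answer, chunks_used, True)
    # No confident early exit: answer from the full reasoning trace.
    answer, _ = trial_answer(context)
    return ExitResult(answer, chunks_used, False)
```

In this sequential sketch every trial answer adds latency at each transition point, which is the overhead the branch-parallel decoding described above is meant to hide.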

The experiments evaluated models on four major reasoning benchmarks (MATH-500, AMC 2023, AIME 2024, and GPQA Diamond) and on two programming benchmarks (HumanEval and BigCodeBench). Tests were conducted using DeepSeek-R1-Distill-Qwen models of varying sizes (1.5B to 32B parameters) in a zero-shot chain-of-thought setup. Compared to standard CoT, DEER reduced reasoning length by 31–43% while increasing accuracy by 1.7–5.7%. A detailed analysis revealed that DEER corrected more responses through early exits, particularly for smaller models and simpler tasks. On the programming benchmarks, DEER reduced reasoning length by over 60% with minimal or no loss in accuracy, demonstrating its robustness across tasks.

In conclusion, the study uses pilot experiments to validate the idea of exiting early during CoT generation. Based on these findings, it introduces a training-free dynamic early-exit method that lets models stop reasoning once enough information has been gathered. Tested across various model sizes and six benchmarks (four reasoning and two programming), the method achieves better accuracy with fewer tokens on the reasoning benchmarks and comparable accuracy with far fewer tokens on the programming ones. Unlike traditional approaches that rely on long CoT for complex tasks, this method monitors model confidence to decide when to stop reasoning, thereby avoiding unnecessary steps.

The post This AI Paper from China Proposes a Novel Training-Free Approach DEER that Allows Large Reasoning Language Models to Achieve Dynamic Early Exit in Reasoning appeared first on MarkTechPost.
