Designing and evaluating web interfaces is one of the most critical tasks in today’s digital-first world. Every change in layout, element positioning, or navigation logic can influence how users interact with websites. This becomes even more crucial for platforms that rely on extensive user engagement, such as e-commerce or content streaming services. One of the most trusted methods for assessing the impact of design changes is A/B testing. In A/B testing, two or more versions of a webpage are shown to different user groups to measure their behavior and determine which variant performs better. It’s not just about aesthetics but also functional usability. This method enables product teams to gather user-centered evidence before fully rolling out a feature, allowing businesses to optimize user interfaces systematically based on observed interactions.

Despite being a widely accepted tool, the traditional A/B testing process brings several inefficiencies that have proven problematic for many teams. The most significant challenge is the volume of real-user traffic needed to yield statistically valid results. In some scenarios, hundreds of thousands of users must interact with webpage variants to identify meaningful patterns. For smaller websites or early-stage features, securing this level of user interaction can be nearly impossible. The feedback cycle is also notably slow. Even after launching an experiment, it might take weeks to months before results can be confidently assessed due to the requirement of long observation periods. Also, these tests are resource-heavy; only a few variants can be evaluated due to the time and manpower required. Consequently, numerous promising ideas go untested because there’s simply no capacity to explore them all.
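To see why traffic becomes the bottleneck, it helps to run a rough sample-size estimate. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the baseline rate, the lift, the significance level, and the power are illustrative assumptions, not figures from the AgentA/B paper, and `users_per_variant` is a hypothetical helper name.

```python
from math import ceil
from statistics import NormalDist


def users_per_variant(p_control, p_treatment, alpha=0.05, power=0.80):
    """Approximate users needed in each arm of a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Illustrative inputs only: 2.0% baseline conversion, 2.1% under the new design.
n = users_per_variant(0.020, 0.021)
print(f"{n:,} users per variant, {2 * n:,} in total")
```

With these assumed inputs the estimate lands in the hundreds of thousands of users per variant, which is the scale the paragraph above describes. Larger expected effects shrink the requirement quickly, because the effect size enters the formula squared.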

Several methods have been explored to overcome these limitations; however, each has its shortcomings. For example, offline A/B testing techniques depend on rich historical interaction logs, which are not always available or reliable. Tools that enable prototyping and experimentation, such as Apparition and Fuse, have accelerated early design exploration but are primarily useful for prototyping physical interfaces. Algorithms that reframe A/B testing as a search problem through evolutionary models help automate some aspects but still depend on historical or real-user deployment data. Other strategies, like cognitive modeling with GOMS or ACT-R frameworks, require high levels of manual configuration and do not easily adapt to the complexities of dynamic web behavior. These tools, although innovative, have not provided the scalability and automation necessary to address the deeper structural limitations in A/B testing workflows.

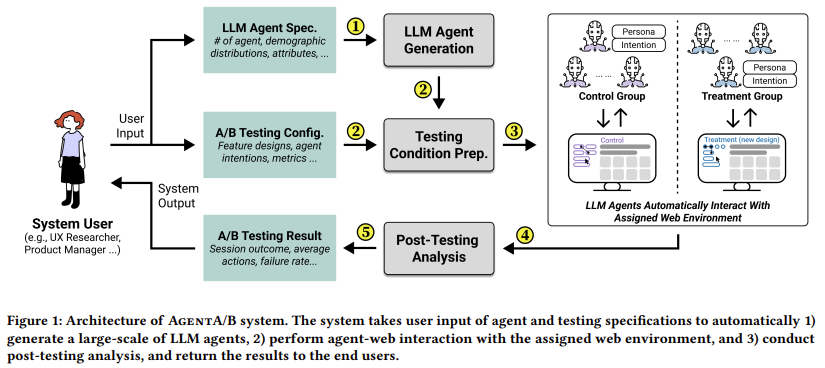

Researchers from Northeastern University, Pennsylvania State University, and Amazon introduced a new automated system named AgentA/B. This system offers an alternative approach to traditional user testing, utilizing Large Language Model (LLM)-based agents. Rather than depending on live user interaction, AgentA/B simulates human behavior using thousands of AI agents. These agents are assigned detailed personas that mimic characteristics such as age, educational background, technical proficiency, and shopping preferences. These personas enable agents to simulate a wide range of user interactions on real websites. The goal is to provide researchers and product managers with an efficient and scalable method for testing multiple design variants without relying on live user feedback or extensive traffic coordination.

The system architecture of AgentA/B is structured into four main components. First, it generates agent personas based on the demographics and behavioral diversity specified by the user. These personas are fed into the second stage, where testing scenarios are defined. This includes assigning agents to control and treatment groups and specifying which two webpage versions should be tested. The third component executes the interactions: agents are deployed into real browser environments, where they process page content as structured web data (converted into JSON observations) and take actions as real users would. They can search, filter, click, and even simulate purchases. The fourth and final component analyzes the results, reporting metrics such as the number of clicks, purchases, or interaction durations to assess design effectiveness.
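The four stages can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the authors' implementation: every name here (`Persona`, `generate_personas`, `assign_groups`, `choose_action`, `run_session`, `analyze`, `run_experiment`) is hypothetical, the persona values are invented, and the LLM call and the browser are replaced by a random stub so the example runs on its own.

```python
import random
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Persona:
    # Attributes mirror the characteristics the article lists; the values are invented.
    age: int
    education: str
    tech_proficiency: str
    shopping_preference: str


def generate_personas(n, rng):
    """Stage 1: create n personas (randomly here; the real system uses an LLM)."""
    return [
        Persona(
            age=rng.randint(18, 80),
            education=rng.choice(["high school", "bachelor's", "graduate"]),
            tech_proficiency=rng.choice(["low", "medium", "high"]),
            shopping_preference=rng.choice(["budget", "brand-loyal", "impulse"]),
        )
        for _ in range(n)
    ]


def assign_groups(personas, rng):
    """Stage 2: split personas at random into control and treatment groups."""
    shuffled = list(personas)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}


def choose_action(persona, observation, rng):
    """Stand-in for the LLM agent: a real agent would reason over the observation."""
    return rng.choice(["search", "filter", "click", "purchase", "stop"])


def run_session(persona, variant, rng, max_steps=20):
    """Stage 3: loop of observe, act, until the agent purchases, stops, or times out."""
    actions = []
    for step in range(max_steps):
        observation = {"variant": variant, "step": step}  # placeholder for the JSON page view
        action = choose_action(persona, observation, rng)
        actions.append(action)
        if action in ("purchase", "stop"):
            break
    return actions


def analyze(sessions):
    """Stage 4: summarize the sessions of one group."""
    return {
        "sessions": len(sessions),
        "avg_actions": mean(len(s) for s in sessions),
        "purchase_rate": mean(1 if s[-1] == "purchase" else 0 for s in sessions),
    }


def run_experiment(n_agents, seed=0):
    rng = random.Random(seed)
    groups = assign_groups(generate_personas(n_agents, rng), rng)
    return {
        variant: analyze([run_session(p, variant, rng) for p in members])
        for variant, members in groups.items()
    }


if __name__ == "__main__":
    print(run_experiment(100))
```

Because the stub acts at random, both groups behave alike here. In a real setup, `choose_action` would send the persona and the observation to an LLM, and `run_session` would execute the returned action in a browser.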

During their testing phase, the researchers used Amazon.com to demonstrate the tool's practical value. A total of 100,000 virtual customer personas were generated, and 1,000 were randomly selected from this pool to act as LLM agents in the simulation. The experiment compared two webpage layouts: one with all product filter options shown in a left-hand panel and another with only a reduced set of filters. The agents interacting with the reduced-filter version made more purchases and performed more filter-based actions than those shown the full list. The virtual agents were also more efficient than humans: compared with one million real user interactions, LLM agents took fewer actions on average to complete tasks, indicating more goal-oriented behavior. These results matched the direction of the effect observed in human A/B tests, strengthening the case for AgentA/B as a valid complement to traditional testing.
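The sampling step and a between-group comparison can be sketched as follows. The article does not say which statistical test the authors used, so the pooled two-proportion z-test here is an assumption, `two_proportion_p_value` is a hypothetical helper, and the purchase counts are placeholders chosen for illustration, not results from the paper.

```python
import random
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


rng = random.Random(42)

# Draw 1,000 persona IDs without replacement from a pool of 100,000,
# then split them evenly between the two page variants.
agent_ids = rng.sample(range(100_000), 1_000)
control_ids, treatment_ids = agent_ids[:500], agent_ids[500:]

# Placeholder purchase counts (NOT from the paper), used only to exercise the test.
control_purchases, treatment_purchases = 40, 60
p_value = two_proportion_p_value(
    control_purchases, len(control_ids), treatment_purchases, len(treatment_ids)
)
print(f"p-value: {p_value:.4f}")
```

A small p-value suggests the difference in purchase rates is unlikely to be due to the random split alone. Whether simulated agents predict real users is a separate question, which is why the authors compare the direction of the effect against human A/B results.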

This research demonstrates a compelling advancement in interface evaluation. It doesn’t aim to replace live user A/B testing but instead proposes a supplementary method that offers rapid feedback, cost efficiency, and broader experimental coverage. By using AI agents instead of live participants, the system enables product teams to test numerous interface variations that would otherwise be infeasible. This model can significantly compress the design cycle, allowing ideas to be validated or rejected at a much earlier stage. It addresses the practical concerns of long wait times, traffic limitations, and testing resource constraints, making the web design process more data-informed and less prone to bottlenecks.

Key takeaways from the research on AgentA/B:

- AgentA/B uses LLM-based agents to simulate realistic user behavior on live webpages.

- The system allows automated A/B testing with no need for live user deployment.

- 100,000 user personas were generated, and 1,000 were selected for live testing simulation.

- The system compared two webpage variants on Amazon.com: full filter panel vs. reduced filters.

- LLM agents in the reduced-filter group made more purchases and performed more filtering actions.

- Compared to 1 million human users, LLM agents showed shorter action sequences and more goal-directed behavior.

- AgentA/B can help evaluate interface changes before real user testing, potentially saving the weeks or months a live experiment would take.

- The system is modular and extensible, allowing it to be adaptable to various web platforms and testing goals.

- It directly addresses three core A/B testing challenges: long cycles, high user traffic needs, and experiment failure rates.

Check out the Paper.

The post AgentA/B: A Scalable AI System Using LLM Agents that Simulate Real User Behavior to Transform Traditional A/B Testing on Live Web Platforms appeared first on MarkTechPost.
