Play against Allie on lichess!

Introduction

In 1948, Alan Turing designed what might be the first chess-playing AI, a paper program for which Turing himself acted as the computer. Since then, chess has been a testbed for nearly every generation of AI advancement. After decades of improvement, today’s top chess engines like Stockfish and AlphaZero have far surpassed the capabilities of even the strongest human grandmasters.

However, most chess players are not grandmasters, and these state-of-the-art chess AIs have been described as playing more like aliens than fellow humans.

The core problem here is that strong AI systems are not human-aligned; they are unable to match the diversity of skill levels of human partners and unable to model human-like behaviors beyond piece movement. Understanding how to make AI systems that can effectively collaborate with and be overseen by humans is a key challenge in AI alignment. Chess provides an ideal testbed for trying out new ideas towards this goal – while modern chess engines far surpass human ability, they are completely incapable of playing in a human-like way or adapting to match their human opponents’ skill levels. In this paper, we introduce Allie, a chess-playing AI designed to bridge the gap between artificial and human intelligence in this classic game.

What is Human-aligned Chess?

When we talk about “human-aligned” chess AI, what exactly do we mean? At its core, we want a system that is both humanlike, meaning that its moves feel natural to human players, and skill-calibrated, meaning that it can play at a similar level to its human opponents across the skill spectrum.

Our goal here is quite different from traditional chess engines like Stockfish or AlphaZero, which are optimized solely to play the strongest moves possible. While these engines achieve superhuman performance, their play can feel alien to humans. They may instantly make moves in complex positions where humans would need time to think, or continue playing in completely lost positions where humans would normally resign.

Building Allie

A Transformer model trained on transcripts of real games

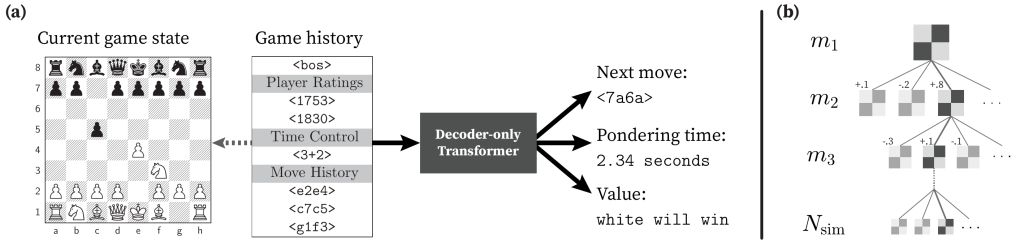

While most prior deep learning approaches build models that take a board state as input and output a distribution over possible moves, we instead approach chess like a language modeling task. We use a Transformer architecture that takes a sequence of moves as input rather than a single board state. Just as large language models learn to generate human-like text by training on vast text corpora, we hypothesized that a similar architecture could learn human-like chess by training on human game records. We train our chess “language” model on transcripts of over 93M games, encompassing a total of 6.6 billion moves, which were played on the chess website Lichess.

Conditioning on Elo score

In chess, Elo ratings normally fall in the range of 500 (beginner players) to 3000 (top chess professionals). To calibrate the playing strength of Allie to different levels of players, we model gameplay under a conditional generation framework, where encodings of the Elo ratings of both players are prepended to the game sequence. Specifically, we prefix each game with soft control tokens, which interpolate between a weak token, representing 500 Elo, and a strong token, representing 3000 Elo.

For a player with Elo rating \(k\), we compute a soft token \(e_k\) by linearly interpolating between the weak and strong tokens:

$$e_k = \gamma e_\text{weak} + (1-\gamma) e_\text{strong}$$

where \(\gamma = \frac{3000-k}{2500}\). During training, we prefix each game with two soft tokens corresponding to the two players’ strengths.
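As a minimal sketch of the interpolation above (not Allie's actual code), the soft token can be computed in a few lines of plain Python. The function name `soft_elo_token`, the toy 4-dimensional embeddings, and the clamping of ratings to the 500 to 3000 range are all assumptions made for illustration.

```python
def soft_elo_token(elo, e_weak, e_strong, lo=500.0, hi=3000.0):
    """Linearly interpolate between the weak and strong control embeddings."""
    # Assumption: ratings outside [lo, hi] are clamped; the text does not
    # say how out-of-range ratings are handled.
    k = min(max(float(elo), lo), hi)
    gamma = (hi - k) / (hi - lo)  # gamma = (3000 - k) / 2500
    return [gamma * w + (1.0 - gamma) * s for w, s in zip(e_weak, e_strong)]


# Toy embeddings standing in for the learned weak and strong tokens.
e_weak = [1.0, 0.0, 0.0, 0.0]
e_strong = [0.0, 1.0, 0.0, 0.0]

# One soft token per player, prepended to the move sequence.
white_token = soft_elo_token(1750, e_weak, e_strong)
black_token = soft_elo_token(3000, e_weak, e_strong)
prefix = [white_token, black_token]
```

A 500-rated player maps exactly onto the weak token, a 3000-rated player onto the strong token, and every rating in between gets a blend of the two, so the model sees a continuous notion of strength rather than discrete rating buckets.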

Learning objectives

On top of the base Transformer model, Allie has three prediction objectives:

- A policy head \(p_\theta\) that outputs a probability distribution over possible next moves

- A pondering-time head \(t_\theta\) that outputs the number of seconds a human player would take to come up with this move

- A value assessment head \(v_\theta\) that outputs a scalar value representing who expects to win the game

All three heads are individually parametrized as linear layers applied to the final hidden state of the decoder. Given a dataset \(\mathcal{D}\) of chess games, each represented as a sequence of moves \(\mathbf{m}\), the human ponder time before each move \(\mathbf{t}\), and the game outcome \(v\), we train Allie to minimize the negative log-likelihood of next moves and the MSE of time and value predictions:

$$\mathcal{L}(\theta) = \sum_{(\mathbf{m}, \mathbf{t}, v) \in \mathcal{D}} \left( \sum_{1 \le i \le N} \left( -\log p_\theta(m_i \,|\, \mathbf{m}_{<i}) + \left(t_\theta(\mathbf{m}_{<i}) - t_i\right)^2 + \left(v_\theta(\mathbf{m}_{<i}) - v\right)^2 \right) \right) \text{.}$$
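To make the three terms of the objective concrete, here is a small illustrative sketch that evaluates the inner sum for a single game from already-computed head outputs. The function name `game_loss`, the argument layout, and the numeric encoding of the outcome are assumptions for this example; a real training loop would compute the same quantities on batched tensors.

```python
import math


def game_loss(move_probs, pred_times, true_times, pred_values, outcome):
    """Sum the per-move loss terms for one game.

    move_probs[i]  : probability the policy head assigned to the move actually played
    pred_times[i]  : pondering-time head output before move i (seconds)
    true_times[i]  : seconds the human actually spent on move i
    pred_values[i] : value head output before move i
    outcome        : final game result v (its numeric encoding is an assumption)
    """
    total = 0.0
    for p, t_hat, t, v_hat in zip(move_probs, pred_times, true_times, pred_values):
        nll = -math.log(p)                  # policy term: negative log-likelihood
        time_err = (t_hat - t) ** 2         # squared error on pondering time
        value_err = (v_hat - outcome) ** 2  # squared error on game outcome
        total += nll + time_err + value_err
    return total


# Two-move toy game with made-up head outputs.
loss = game_loss(
    move_probs=[0.5, 0.25],
    pred_times=[2.0, 5.0],
    true_times=[3.0, 5.0],
    pred_values=[0.0, 0.5],
    outcome=1.0,
)
```

Note that the same outcome \(v\) is the target at every move of the game, while the time target \(t_i\) changes from move to move.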

Adaptive Monte-Carlo Tree Search

At play time, traditional chess engines like AlphaZero use search algorithms such as Monte-Carlo Tree Search (MCTS) to anticipate many moves into the future, evaluating different possibilities for how the game might go. The search budget \(N_\mathrm{sim}\) is almost always fixed: they will spend the same amount of compute on search regardless of whether the best next move is extremely obvious or pivotal to the outcome of the game.

This fixed budget doesn’t match human behavior; humans naturally spend more time analyzing critical or complex positions compared to simple ones. In Allie, we introduce a time-adaptive MCTS procedure that varies the amount of search based on Allie’s prediction of how long a human would think in each position. If Allie predicts a human would spend more time on a position, it performs more search iterations to better match human depth of analysis. To keep things simple, we just set
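One simple way to picture a time-adaptive budget, offered as an assumption rather than the rule used in the paper, is to scale the number of MCTS simulations linearly with the predicted pondering time. In the sketch below, the function name `adaptive_sim_budget`, the scaling constant `sims_per_second`, and the clamping bounds are all hypothetical values chosen for illustration.

```python
def adaptive_sim_budget(predicted_seconds, sims_per_second=50, min_sims=1, max_sims=1000):
    """Map a predicted human pondering time to a number of MCTS simulations.

    All constants are hypothetical; linear scaling is an assumption of this sketch.
    """
    sims = int(round(sims_per_second * predicted_seconds))
    # Clamp so that every move gets at least some search and none gets unbounded search.
    return max(min_sims, min(max_sims, sims))


# An "obvious" recapture versus a critical middlegame decision.
quick_budget = adaptive_sim_budget(0.4)
deep_budget = adaptive_sim_budget(12.0)
```

Under these made-up constants, a position where a human is predicted to move almost instantly gets a few dozen simulations, while a position predicted to take twelve seconds gets several hundred.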

How does Allie Play?

To evaluate whether Allie is human-aligned, we evaluate its performance both on an offline dataset and online against real human players.

In offline games, Allie achieves state-of-the-art move-matching accuracy (defined as the percentage of its moves that match the moves real humans made in the same positions). It also models how humans resign and ponder very well.
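The move-matching metric is simple to state in code. The sketch below is illustrative only: the function name `move_matching_accuracy` and the use of UCI-style move strings are assumptions, and the toy moves are made up rather than taken from the evaluation set.

```python
def move_matching_accuracy(predicted_moves, human_moves):
    """Percentage of positions where the model's move equals the human's move."""
    if len(predicted_moves) != len(human_moves):
        raise ValueError("need exactly one prediction per human move")
    if not human_moves:
        return 0.0
    matches = sum(p == h for p, h in zip(predicted_moves, human_moves))
    return 100.0 * matches / len(human_moves)


# Toy example: the model agrees with the human on three of four moves.
predicted = ["e2e4", "g1f3", "f1c4", "d2d3"]
played = ["e2e4", "g1f3", "f1b5", "d2d3"]
accuracy = move_matching_accuracy(predicted, played)
```

Because the comparison is exact, a prediction that is just as strong as the human's move but different from it still counts as a miss, which is what makes this a measure of humanlikeness rather than of playing strength.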

Another main insight of our paper is that adaptive search enables remarkable skill calibration against players across the skill spectrum. Against players from 1100 to 2500 Elo, the adaptive search variant of Allie has an average skill gap of only 49 Elo points. In other words, Allie (with adaptive search) wins about 50% of games against opponents ranging from beginner to expert level. Notably, none of the other methods (even the non-adaptive MCTS baseline) can match the strength of 2500 Elo players.
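For intuition about what a gap of a few dozen Elo points means in practice, the standard logistic Elo formula relates a rating difference to an expected score. This is the textbook formula, not necessarily how the skill gap was measured in our evaluation, and the function name `elo_expected_score` and the example ratings are chosen for illustration.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score of player A against player B (win = 1, draw = 0.5, loss = 0)."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# Equal ratings give an even game; a 49-point edge shifts it only slightly.
even_match = elo_expected_score(1500, 1500)
gap_49 = elo_expected_score(1549, 1500)
print(f"{even_match:.2f} {gap_49:.2f}")
```

Under this model, a 49-point difference corresponds to an expected score of roughly 0.57 for the higher-rated side, which is close to an even match.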

Limitations and Future Work

Despite strong offline evaluation metrics and generally positive player feedback, Allie still exhibits occasional behaviors that feel non-humanlike. Players specifically noted Allie’s propensity toward late-game blunders and sometimes spending too much time pondering positions where there’s only one reasonable move. These observations suggest there’s still room to improve our understanding of how humans allocate cognitive resources during chess play.

For future work, we identify several promising directions. First, our approach heavily relies on available human data, which is plentiful for fast time controls but more limited for classical chess with longer thinking time. Extending our approach to model human reasoning in slower games, where players make more accurate moves with deeper calculation, represents a significant challenge. With the recent interest in reasoning models that make use of test-time compute, we hope that our adaptive search technique can be applied to improving the efficiency of allocating a limited compute budget.

If you are interested in learning more about this work, please check out our ICLR paper, Human-Aligned Chess With a Bit of Search.
