Despite rapid advances in vision-language modeling, much of the progress in this field has been shaped by models trained on proprietary datasets, often relying on distillation from closed-source systems. This reliance creates barriers to scientific transparency and reproducibility, particularly for tasks involving fine-grained image and video understanding. Benchmark performance may reflect the training data and black-box model capabilities more than architectural or methodological improvements, making it difficult to assess true research progress.

To address these limitations, Meta AI has introduced the Perception Language Model (PLM), a fully open and reproducible framework for vision-language modeling. PLM is designed to support both image and video inputs and is trained without the use of proprietary model outputs. Instead, it draws from large-scale synthetic data and newly collected human-labeled datasets, enabling a detailed evaluation of model behavior and training dynamics under transparent conditions.

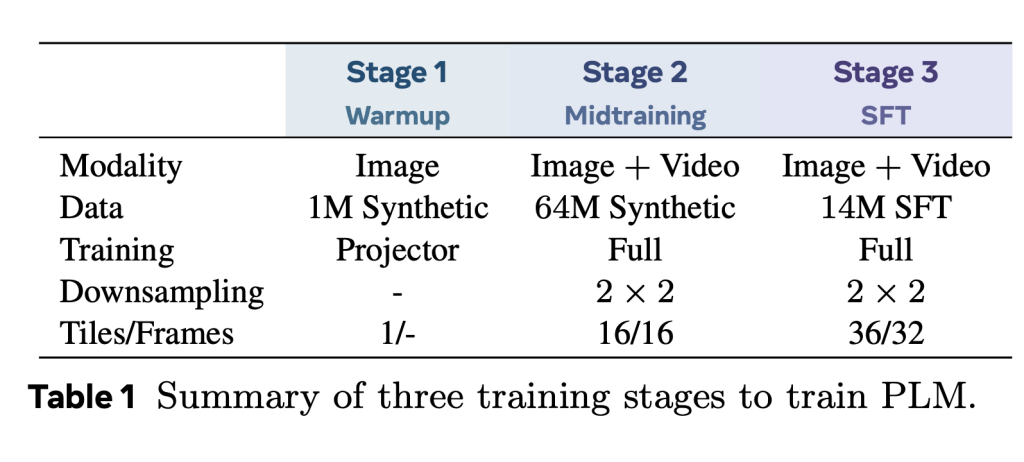

The PLM framework pairs a vision encoder (Perception Encoder) with Llama 3 language decoders at three sizes: 1B, 3B, and 8B parameters. It uses a three-stage training pipeline: an initial warm-up on low-resolution synthetic images, large-scale mid-training on diverse synthetic datasets, and supervised fine-tuning on high-resolution data with precise annotations. This pipeline emphasizes training stability and scalability while maintaining control over data provenance and content.
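The staged schedule described above can be sketched as an ordered list of stages that hand their state to one another. This is only an illustrative outline: the `Stage` class, the `run_pipeline` helper, and the descriptive field values are assumptions made for this example, not PLM's actual configuration or hyperparameters.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Sequence


@dataclass(frozen=True)
class Stage:
    """Hypothetical description of one training stage."""
    name: str
    data: str        # kind of data the article associates with the stage
    resolution: str  # descriptive label only, not a real setting


# Stage order follows the article; the values are placeholders, not real settings.
PIPELINE = [
    Stage("warmup", "synthetic images", "low"),
    Stage("midtraining", "diverse synthetic datasets", "unspecified"),
    Stage("sft", "precisely annotated data", "high"),
]


def run_pipeline(
    stages: Sequence[Stage],
    train_fn: Callable[[Stage, Optional[Any]], Any],
) -> Any:
    """Run the stages in order, passing each stage's result to the next."""
    state = None
    for stage in stages:
        state = train_fn(stage, state)
    return state
```

Here `train_fn` stands in for whatever training routine is used per stage; the point is only that each stage starts from the checkpoint produced by the previous one.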

A key contribution of the work is the release of two large-scale, high-quality video datasets addressing existing gaps in temporal and spatial understanding. The PLM-FGQA dataset comprises 2.4 million question-answer pairs capturing fine-grained details of human actions (such as object manipulation, movement direction, and spatial relations) across diverse video domains. Complementing this is PLM-STC, a dataset of 476,000 spatio-temporal captions linked to segmentation masks that track subjects across time, allowing models to reason about “what,” “where,” and “when” in complex video scenes.
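To make the two dataset descriptions concrete, the following sketch shows one way such records might be represented in memory. The class names, field names, and mask layout are hypothetical and chosen for illustration; they are not the released datasets' actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class FGQASample:
    """Hypothetical fine-grained QA record: one question-answer pair per video clip."""
    video_id: str
    question: str
    answer: str


@dataclass
class STCSample:
    """Hypothetical spatio-temporal caption: text ("what") tied to masks
    ("where") over a frame range ("when")."""
    video_id: str
    caption: str
    start_frame: int
    end_frame: int
    # frame index -> binary mask stored as rows of 0/1 (assumed layout)
    masks: dict[int, list[list[int]]] = field(default_factory=dict)

    def frames_with_subject(self) -> list[int]:
        """Return the masked frame indices that fall inside the caption's time span."""
        return sorted(
            f for f in self.masks if self.start_frame <= f <= self.end_frame
        )
```

A record like `STCSample("v1", "a person picks up a cup", 2, 4, {...})` would then answer "when" through its frame range and "where" through the per-frame masks.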

Technically, PLM employs a modular architecture that supports high-resolution image tiling (up to 36 tiles) and multi-frame video input (up to 32 frames). A 2-layer MLP projector connects the visual encoder to the LLM, and both synthetic and human-labeled data are structured to support a wide range of tasks including captioning, visual question answering, and dense region-based reasoning. The synthetic data engine, built entirely using open-source models, generates ~64.7 million samples across natural images, charts, documents, and videos, which provides diversity while avoiding reliance on proprietary sources.
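The input limits mentioned above (at most 36 tiles per image, at most 32 frames per video) can be illustrated with a small sketch. The article does not say how PLM selects tiles or frames, so the strategies below (closest aspect-ratio grid, evenly spaced frames) and the function names are assumptions for illustration only.

```python
def sample_frame_indices(num_frames: int, max_frames: int = 32) -> list[int]:
    """Pick at most `max_frames` evenly spaced frame indices (assumed strategy)."""
    if num_frames <= 0:
        return []
    if num_frames <= max_frames:
        return list(range(num_frames))
    step = num_frames / max_frames
    # Take the midpoint of each of the `max_frames` equal-length segments.
    return [int(step * i + step / 2) for i in range(max_frames)]


def choose_tile_grid(width: int, height: int, max_tiles: int = 36) -> tuple[int, int]:
    """Choose a (rows, cols) grid with rows * cols <= max_tiles whose aspect
    ratio is closest to the image's; ties go to the grid with more tiles."""
    target = width / height
    best = (1, 1)
    best_key = None
    for rows in range(1, max_tiles + 1):
        for cols in range(1, max_tiles // rows + 1):
            key = (abs(cols / rows - target), -(rows * cols))
            if best_key is None or key < best_key:
                best_key = key
                best = (rows, cols)
    return best
```

A practical implementation would likely also weigh the image's actual resolution rather than always preferring the largest matching grid; this sketch only shows how the tile and frame budgets constrain the input.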

Meta AI also introduces PLM-VideoBench, a new benchmark designed to evaluate aspects of video understanding not captured by existing benchmarks. It includes tasks such as fine-grained activity recognition (FGQA), smart-glasses video QA (SGQA), region-based dense captioning (RDCap), and region temporal localization (RTLoc). These tasks require models to engage in temporally grounded and spatially explicit reasoning.

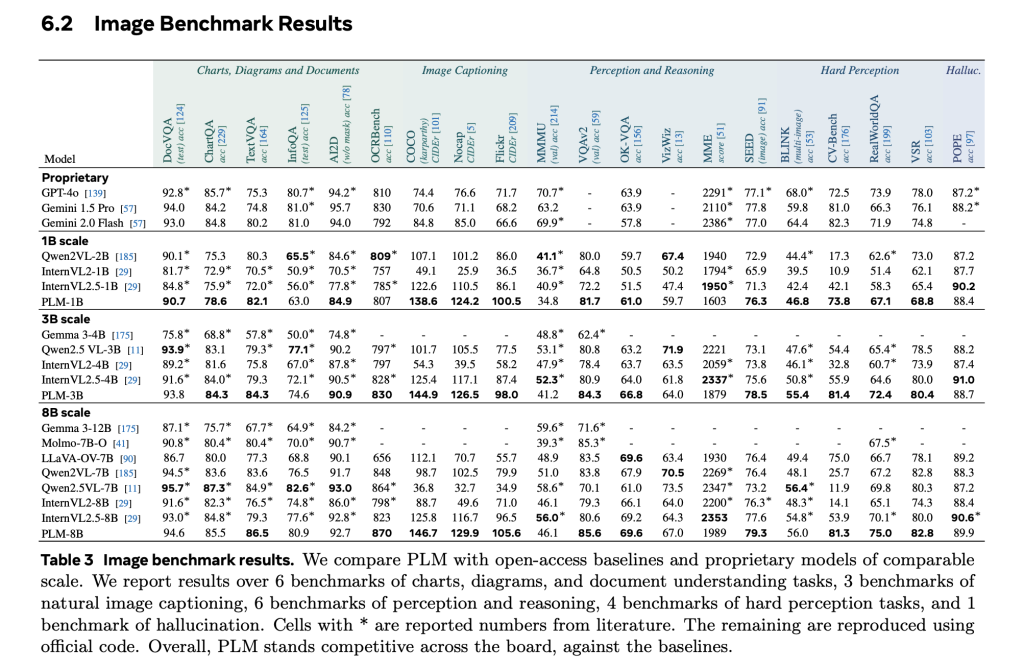

Empirical evaluations show that PLM models, particularly at the 8B parameter scale, perform competitively across 40+ image and video benchmarks. In video captioning, PLM achieves gains of +39.8 CIDEr on average over open baselines. On PLM-VideoBench, the 8B variant narrows the gap with human performance in structured tasks such as FGQA and shows improved results in spatio-temporal localization and dense captioning. Notably, all results are obtained without distillation from closed models, underscoring the feasibility of open, transparent VLM development.

In summary, PLM offers a methodologically rigorous and fully open framework for training and evaluating vision-language models. Its release includes not just models and code, but also the largest curated dataset for fine-grained video understanding and a benchmark suite that targets previously underexplored capabilities. PLM is positioned to serve as a foundation for reproducible research in multimodal AI and a resource for future work on detailed visual reasoning in open settings.


The post Meta AI Released the Perception Language Model (PLM): An Open and Reproducible Vision-Language Model to Tackle Challenging Visual Recognition Tasks appeared first on MarkTechPost.
