Effective reasoning is crucial for solving complex problems in fields such as mathematics and programming, and LLMs have shown significant improvements through long chain-of-thought reasoning. However, transformer-based models are limited by computation that grows quadratically and memory that grows linearly with sequence length, making it challenging to process long sequences efficiently. While techniques such as Chain of Thought (CoT) reasoning and adaptive compute allocation have helped boost model performance, they also increase computational costs. Generating multiple outputs and selecting the best one has also been explored as a way to enhance reasoning accuracy. However, such methods still depend on transformer-based architectures, which struggle to scale in large-batch, long-context tasks.

To address these challenges, researchers have explored alternatives to the transformer architecture, including RNN-based models, state space models (SSMs), and linear attention mechanisms, which offer more efficient memory usage and faster inference. Hybrid models that combine self-attention with subquadratic layers have also been developed to improve inference-time scaling. Knowledge distillation, which transfers capabilities from large models to smaller ones, has shown promise in preserving reasoning performance while reducing model size. Research into cross-architecture distillation, such as transferring knowledge from transformers to RNNs or SSMs, is ongoing, with the goal of achieving strong reasoning in smaller, more efficient models.
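To make the memory argument concrete, here is a minimal sketch of a toy diagonal linear recurrence, the basic building block behind linear RNNs and SSMs. It is an illustration under simplifying assumptions, not Mamba: the parameters `a`, `b`, and `c` are hypothetical and fixed, whereas Mamba's selective SSM makes them input-dependent. The point it shows is that the recurrent state has a fixed size no matter how many tokens are processed, while a transformer's KV cache grows with every token.

```python
from typing import List


def linear_recurrence_scan(
    xs: List[float], a: List[float], b: List[float], c: List[float]
) -> List[float]:
    """Toy diagonal linear recurrence (time-invariant, NOT Mamba's selective SSM).

    For each input x_t and each state channel i:
        h_t[i] = a[i] * h_{t-1}[i] + b[i] * x_t
        y_t    = sum_i c[i] * h_t[i]
    a, b, c are hypothetical fixed parameters chosen for illustration.
    """
    if not (len(a) == len(b) == len(c)):
        raise ValueError("a, b and c must have the same length (the state size)")
    state = [0.0] * len(a)  # fixed-size state, independent of len(xs)
    ys: List[float] = []
    for x in xs:
        # Overwrite the state instead of caching past tokens: O(state size) memory.
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return ys


# By contrast, an attention decoder appends one key and one value per token to
# its KV cache, so the cached entries per layer grow linearly with sequence length.
print(linear_recurrence_scan([1.0, 1.0, 1.0], a=[0.5], b=[1.0], c=[1.0]))  # [1.0, 1.5, 1.75]
```

The trade-off of this design is that the entire history must be compressed into the fixed-size state, which is one reason hybrid models keep some attention layers alongside the recurrent ones.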

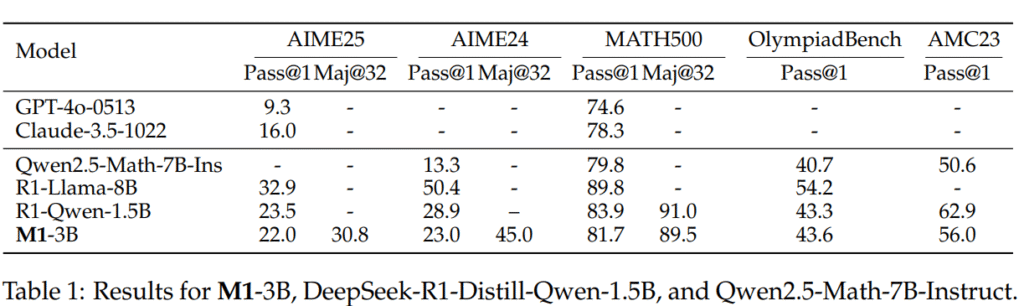

Researchers from TogetherAI, Cornell University, the University of Geneva, and Princeton University present M1, a hybrid linear RNN reasoning model built on the Mamba architecture and designed for memory-efficient inference. M1 is trained through a combination of distillation, supervised fine-tuning (SFT), and reinforcement learning (RL). On the AIME and MATH benchmarks, M1 outperforms previous linear RNN models and matches the performance of DeepSeek R1 distilled transformers. It also achieves a 3x inference speedup over transformers of the same size; this extra throughput lets it improve reasoning accuracy with test-time techniques such as self-consistency and verification, making it well suited to large-scale inference.
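Self-consistency samples several independent solutions to the same problem and keeps the most common final answer, so a model that generates faster can afford more votes in the same time. Below is a minimal sketch of the generic technique, not M1's actual aggregation code; it assumes final answers have already been extracted from each sampled solution as strings, and the function name is hypothetical.

```python
from collections import Counter
from typing import List, Optional


def self_consistency(answers: List[Optional[str]]) -> Optional[str]:
    """Majority vote over final answers from independently sampled solutions.

    Assumes answer extraction already happened; None marks a sample whose
    final answer could not be parsed.
    """
    valid = [a.strip() for a in answers if a is not None and a.strip()]
    if not valid:
        return None
    # most_common keeps first-encountered order among equal counts, so ties
    # go to the answer that appeared first.
    return Counter(valid).most_common(1)[0][0]


samples = ["42", "41", "42", None, "17"]  # hypothetical extracted answers
print(self_consistency(samples))  # 42
```

A verifier-based variant would replace the plain vote with a score from a separate checker; that step is not shown here.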

The M1 model is built through a three-stage process: distillation, SFT, and RL. First, a pretrained Transformer model is distilled into the Mamba architecture, using a modified approach to the linear projections and additional parameters to improve performance. In the SFT stage, the model is fine-tuned first on general math problem datasets and then on reasoning-focused datasets from the R1 model series. Finally, RL is applied using GRPO (Group Relative Policy Optimization), which trains the model with advantage estimates computed relative to a group of sampled responses and encourages diversity in those responses, further improving its reasoning ability.
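The core of GRPO is how it estimates advantages: several responses are sampled for the same prompt, and each response's reward is standardized against the rest of its group, so no learned value function (critic) is needed. The sketch below shows only that step under stated assumptions: the binary correctness rewards are hypothetical, the choice of population standard deviation and `eps` is illustrative (implementations vary), and the article does not specify M1's exact reward or normalization.

```python
import statistics
from typing import List


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Group-relative advantages in the style of GRPO.

    All rewards belong to responses sampled for the same prompt. Each
    response's advantage is its reward standardized against the group.
    """
    if not rewards:
        raise ValueError("need at least one reward in the group")
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations vary
    # eps keeps the division finite when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical binary correctness rewards for 4 sampled solutions to one problem.
print(grpo_advantages([1.0, 0.0, 0.0, 1.0]))  # roughly [1, -1, -1, 1]
```

Correct responses receive positive advantages and incorrect ones negative, and a group in which every response scores the same contributes zero advantage, so it provides no learning signal.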

The experiments use Llama-3.2-3B-Instruct as the Transformer that is distilled into the hybrid Mamba architecture, with the Mamba layers using an SSM state size of 16. The evaluation covers math benchmarks including MATH500, AIME25, and OlympiadBench, and measures both coverage and accuracy. Coverage is reported with the pass@k metric, the probability that at least one of k generated samples is correct. M1 is compared against a range of state-of-the-art models and achieves competitive results, particularly on reasoning tasks. Inference speed and test-time scaling are also evaluated, demonstrating M1's efficiency in large-batch generation and with longer sequence contexts.
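The article does not say how pass@k is computed. A common choice is the unbiased estimator pass@k = 1 - C(n-c, k) / C(n, k), where n samples are generated per problem and c of them are correct; the per-problem values are then averaged over the benchmark. The sketch below implements that estimator; the function names and the sample counts in the example are hypothetical.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that are correct, k: sample budget.
    Returns the probability that a random size-k subset of the n samples
    contains at least one correct solution: 1 - C(n-c, k) / C(n, k).
    """
    if not 0 <= c <= n:
        raise ValueError("need 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must include a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark; results holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical: 10 samples for one problem, 3 of them correct.
print(pass_at_k(10, 3, 5))  # about 0.917
```

Computing pass@k from n > k samples, instead of drawing exactly k, lowers the variance of the estimate.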

In conclusion, M1 is a hybrid reasoning model based on the Mamba architecture, designed to overcome the scalability issues of Transformer models. Through distillation and fine-tuning, M1 achieves performance comparable to state-of-the-art reasoning models. It offers more than 3x faster inference than similar-sized Transformer models, especially at large batch sizes, which makes resource-intensive strategies like self-consistency more practical. M1 outperforms previous linear RNN models and matches the performance of DeepSeek R1 distilled transformers on benchmarks such as AIME and MATH. It also achieves higher accuracy than Transformer models under fixed time budgets, making it a strong, efficient alternative to Transformer-based architectures for mathematical reasoning tasks.

Here is the Paper.

The post Do Reasoning Models Really Need Transformers?: Researchers from TogetherAI, Cornell, Geneva, and Princeton Introduce M1—A Hybrid Mamba-Based AI that Matches SOTA Performance at 3x Inference Speed appeared first on MarkTechPost.

[Register Now] miniCON Virtual Conference on AGENTIC AI: FREE REGISTRATION + Certificate of Attendance + 4 Hour Short Event (May 21, 9 am- 1 pm PST) + Hands on Workshop
