This post is a joint collaboration between Salesforce and AWS and is being cross-published on both the Salesforce Engineering Blog and the AWS Machine Learning Blog.

The Salesforce AI Model Serving team is working to push the boundaries of natural language processing and AI capabilities for enterprise applications. Their key focus areas include optimizing large language models (LLMs) by integrating cutting-edge solutions, collaborating with leading technology providers, and driving performance enhancements that impact Salesforce’s AI-driven features. The AI Model Serving team supports a wide range of models for both traditional machine learning (ML) and generative AI including LLMs, multi-modal foundation models (FMs), speech recognition, and computer vision-based models. Through innovation and partnerships with leading technology providers, this team enhances performance and capabilities, tackling challenges such as throughput and latency optimization and secure model deployment for real-time AI applications. They accomplish this through evaluation of ML models across multiple environments and extensive performance testing to achieve scalability and reliability for inferencing on AWS.

The team is responsible for the end-to-end process of gathering requirements and performance objectives, hosting, optimizing, and scaling AI models, including LLMs, built by Salesforce’s data science and research teams. This includes optimizing the models to achieve high throughput and low latency and deploying them quickly through automated, self-service processes across multiple AWS Regions.

In this post, we share how the AI Model Serving team achieved high-performance model deployment using Amazon SageMaker AI.

Key challenges

The team faces several challenges in deploying models for Salesforce. One is balancing latency and throughput while achieving cost-efficiency as these models scale with demand. Maintaining performance and scalability while minimizing serving costs is vital across the entire inference lifecycle. Inference optimization is a crucial part of this process, because the models and their hosting environment must be fine-tuned to meet price-performance requirements in real-time AI applications. Salesforce’s fast-paced AI innovation requires the team to constantly evaluate new models (proprietary, open source, or third-party) across diverse use cases, and then deploy them quickly to keep pace with their product teams’ go-to-market motions. Finally, the models must be hosted securely, and customer data must be protected, in keeping with Salesforce’s commitment to providing a trusted and secure platform.

Solution overview

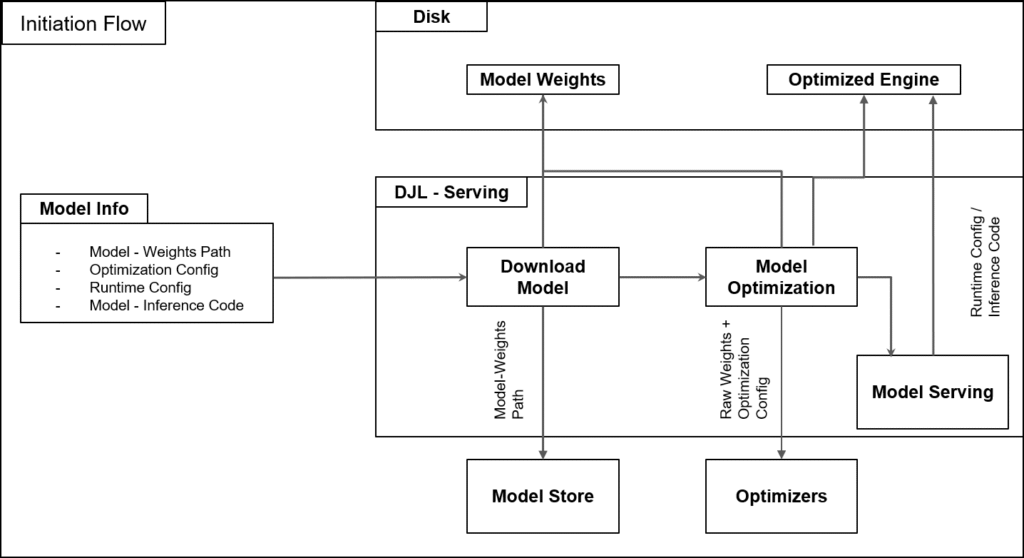

To support such a critical function for Salesforce AI, the team developed a hosting framework on AWS to simplify their model lifecycle, allowing them to quickly and securely deploy models at scale while optimizing for cost. The following diagram illustrates the solution workflow.

Managing performance and scalability

Managing scalability in the project involves balancing performance with efficiency and resource management. With SageMaker AI, the team supports distributed inference and multi-model deployments, which prevents memory bottlenecks and reduces hardware costs. SageMaker AI also provides access to advanced GPUs and enables intelligent batching strategies that balance throughput with latency. This flexibility makes sure performance improvements don’t compromise scalability, even in high-demand scenarios. To learn more, see Revolutionizing AI: How Amazon SageMaker Enhances Einstein’s Large Language Model Latency and Throughput.
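To make the memory-bottleneck point concrete, the following back-of-the-envelope sketch estimates how many GPUs a model's weights alone require at a given precision, which sets the minimum degree for distributed (tensor-parallel) inference. It rests on simplifying assumptions: it counts only weights, approximates KV cache, activations, and runtime overhead with a hypothetical `headroom` fraction, and restricts the degree to powers of two. The model size and GPU memory used below are example inputs, not Salesforce's configurations.

```python
# Approximate storage cost per parameter for common precisions (bytes).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params_billion: float, precision: str) -> float:
    """GB needed to hold the weights (billions of params x bytes per param)."""
    return num_params_billion * BYTES_PER_PARAM[precision]


def min_tensor_parallel_degree(
    num_params_billion: float,
    precision: str,
    gpu_memory_gb: float,
    headroom: float = 0.3,
    max_degree: int = 8,
) -> int:
    """Smallest power-of-two GPU count whose usable memory fits the weights.

    `headroom` is the fraction of each GPU reserved for KV cache, activations,
    and runtime overhead (a hypothetical knob for this sketch).
    """
    usable_per_gpu = gpu_memory_gb * (1.0 - headroom)
    needed = weight_memory_gb(num_params_billion, precision)
    degree = 1
    while degree <= max_degree:
        # Tensor parallelism splits the weights evenly across `degree` GPUs.
        if needed / degree <= usable_per_gpu:
            return degree
        degree *= 2
    raise ValueError("Model does not fit within max_degree GPUs at this precision")
```

For example, a hypothetical 70B-parameter model in FP16 needs about 140 GB for its weights, so on 80 GB GPUs with 30% headroom the sketch returns a degree of 4; the same model stored in INT4 fits on a single GPU.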

Accelerating development with SageMaker Deep Learning Containers

SageMaker AI Deep Learning Containers (DLCs) play a crucial role in accelerating model development and deployment. These pre-built containers come with optimized deep learning frameworks and best-practice configurations, providing a head start for AI teams. DLCs provide optimized library versions, preconfigured CUDA settings, and other performance enhancements that improve inference speeds and efficiency. This significantly reduces the setup and configuration overhead, allowing engineers to focus on model optimization rather than infrastructure concerns.

Best practice configurations for deployment in SageMaker AI

A key advantage of using SageMaker AI is the best practice configurations for deployment. SageMaker AI provides default parameters for setting GPU utilization and memory allocation, which simplifies the process of configuring high-performance inference environments. These features make it straightforward to deploy optimized models with minimal manual intervention, providing high availability and low-latency responses.

The team uses the DLC’s rolling-batch capability, which continuously adds new requests to the in-flight batch to maximize throughput while maintaining low latency. SageMaker AI DLCs expose configurations for rolling batch inference with best-practice defaults, simplifying the implementation process. By adjusting parameters such as max_rolling_batch_size and job_queue_size, the team was able to fine-tune performance without extensive custom engineering. This streamlined approach keeps GPU utilization high while meeting real-time response requirements.
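Rolling batching (also called continuous batching) admits new requests into the running batch at every decoding step instead of waiting for the whole batch to finish. The following simplified, single-threaded simulation illustrates how the two parameters named above interact: `max_rolling_batch_size` caps how many sequences decode concurrently, and `job_queue_size` bounds how many requests may wait before new ones are rejected. It is a conceptual model only, not DJL-Serving's scheduler; the class and method names are hypothetical, and each request is reduced to a number of tokens to generate.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Request:
    request_id: str
    tokens_to_generate: int
    generated: int = 0


@dataclass
class RollingBatchSimulator:
    max_rolling_batch_size: int
    job_queue_size: int
    queue: deque = field(default_factory=deque)
    active: list = field(default_factory=list)
    finished: list = field(default_factory=list)

    def submit(self, request: Request) -> bool:
        """Enqueue a request; reject it (return False) if the queue is full."""
        if len(self.queue) >= self.job_queue_size:
            return False
        self.queue.append(request)
        return True

    def step(self) -> None:
        """Run one decoding step for the whole batch."""
        # Fill free batch slots from the queue before decoding, so new
        # requests join mid-flight instead of waiting for the batch to drain.
        while self.queue and len(self.active) < self.max_rolling_batch_size:
            self.active.append(self.queue.popleft())
        # Every active sequence produces one token per step.
        for req in self.active:
            req.generated += 1
        # Retire completed sequences immediately, freeing their slots.
        still_running = []
        for req in self.active:
            if req.generated >= req.tokens_to_generate:
                self.finished.append(req.request_id)
            else:
                still_running.append(req)
        self.active = still_running
```

With `max_rolling_batch_size=2` and `job_queue_size=2`, submitting a 1-token request `a` and a 3-token request `b` fills the queue, so a third request is rejected. After the first step, `a` has finished and frees its slot; a short request `c` submitted then joins on the next step and finishes before `b`, whereas with a static batch it would have waited for `b` to complete.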

SageMaker AI provides elastic load balancing, instance scaling, and real-time model monitoring, and it gives Salesforce control over scaling and routing strategies to suit their needs. These measures maintain consistent performance across environments while optimizing scalability, performance, and cost-efficiency.
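Instance scaling on SageMaker AI endpoints is commonly configured through Application Auto Scaling target-tracking policies on a metric such as `SageMakerVariantInvocationsPerInstance`. The following sketch approximates the proportional calculation behind target tracking so that the effect of a chosen target value is easy to reason about. It is a simplification: the real service also applies cooldowns, evaluates CloudWatch alarms over several periods, and scales in more conservatively, none of which is modeled here. The post does not describe Salesforce's actual policy values; the numbers below are illustrative.

```python
import math


def desired_instance_count(
    current_instances: int,
    invocations_per_instance: float,
    target_per_instance: float,
    min_instances: int,
    max_instances: int,
) -> int:
    """Approximate target tracking: scale capacity in proportion to load/target."""
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    # Total load stays the same; spread it so each instance sits near the target.
    total_load = current_instances * invocations_per_instance
    desired = math.ceil(total_load / target_per_instance)
    # Respect the endpoint's configured capacity bounds.
    return max(min_instances, min(max_instances, desired))
```

For example, 4 instances each seeing 150 invocations against a target of 100 would scale out to 6, while the same fleet at 20 invocations per instance would scale in only as far as the configured minimum.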

Because the team supports multiple simultaneous deployments across projects, they needed to make sure enhancements in one project didn’t compromise others. To address this, they adopted a modular development approach. The SageMaker AI DLC architecture is designed with modular components such as the engine abstraction layer, model store, and workload manager. This structure allows the team to isolate and optimize individual components in the container, like rolling batch inference for throughput, without degrading latency or disrupting critical functionality such as multi-framework support. As a result, one project team can focus on performance tuning while others enable features such as streaming in parallel.
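The modular design described above can be pictured as a small engine interface: the serving layer talks only to an abstract engine, so one engine (for example, one tuned for rolling batch throughput) can be changed or swapped without touching the code paths other teams depend on. The sketch below is a hypothetical illustration of that pattern; `InferenceEngine`, `register_engine`, `load_engine`, and the two toy engines are invented for this example and are not DJL-Serving's actual classes.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class InferenceEngine(ABC):
    """Contract every engine implements; callers depend only on this."""

    @abstractmethod
    def generate(self, prompts: List[str]) -> List[str]:
        ...


# Registry mapping a configuration name to an engine factory.
_ENGINES: Dict[str, Callable[[], InferenceEngine]] = {}


def register_engine(name: str):
    """Class decorator that adds an engine to the registry under `name`."""
    def decorator(cls):
        _ENGINES[name] = cls
        return cls
    return decorator


def load_engine(name: str) -> InferenceEngine:
    """Instantiate the engine selected by configuration."""
    factory = _ENGINES.get(name)
    if factory is None:
        raise ValueError(f"Unknown engine: {name}")
    return factory()


@register_engine("echo")
class EchoEngine(InferenceEngine):
    """Toy engine that returns prompts unchanged."""

    def generate(self, prompts: List[str]) -> List[str]:
        return list(prompts)


@register_engine("upper")
class UpperEngine(InferenceEngine):
    """Stand-in for an alternative engine with different internals."""

    def generate(self, prompts: List[str]) -> List[str]:
        return [p.upper() for p in prompts]
```

Because the serving code calls only `load_engine(name).generate(...)`, changing engines becomes a configuration change rather than an edit to shared code.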

This cross-functional collaboration is complemented by comprehensive testing. The Salesforce AI Model Serving team implemented continuous integration (CI) pipelines using a mix of internal and external tools such as Jenkins and Spinnaker to detect unintended side effects early. Regression testing made sure that optimizations, such as deploying models with TensorRT or vLLM, didn’t negatively impact scalability or user experience. Regular reviews involving the development, foundation model operations (FMOps), and security teams made sure that optimizations aligned with project-wide objectives.
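A performance regression check of the kind described above can be expressed as a simple gate in a CI pipeline: benchmark the candidate build, compare it with a stored baseline, and fail the stage if latency or throughput moves past a tolerance. The sketch below is illustrative; the metric names, the 5% tolerance, and the nearest-rank percentile are assumptions, and the post does not specify how Salesforce's pipelines compute or threshold these metrics.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples:
        raise ValueError("samples must be non-empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def regression_failures(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.05,
) -> List[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    # Lower is better for latency: fail if the candidate is too much slower.
    if candidate["p90_latency_ms"] > baseline["p90_latency_ms"] * (1 + tolerance):
        failures.append("p90 latency regressed")
    # Higher is better for throughput: fail if the candidate is too much lower.
    if candidate["tokens_per_second"] < baseline["tokens_per_second"] * (1 - tolerance):
        failures.append("throughput regressed")
    return failures
```

A CI stage would compute `p90_latency_ms` with `percentile(latencies, 90)` from a benchmark run, call `regression_failures`, and exit non-zero if the returned list is not empty.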

Configuration management is also part of the CI pipeline: configuration is stored in Git alongside the inference code. Managing configuration through simple YAML files enabled rapid experimentation across optimizers and hyperparameters without altering the underlying code. These practices made sure that performance or security improvements were well-coordinated and didn’t introduce trade-offs in other areas.
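The following sketch shows the idea behind keeping configuration separate from code: a shared base configuration plus a small per-experiment override, merged at deploy time and rendered into the key-value form a serving container reads. To stay self-contained, it starts from Python dictionaries (what PyYAML's `yaml.safe_load` would return for the YAML files). The model identifier and file roles are hypothetical; `option.rolling_batch`, `option.max_rolling_batch_size`, and `option.tensor_parallel_degree` follow the LMI `serving.properties` naming, but key names should be verified against the DJL-Serving documentation for the container version in use.

```python
import copy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict where keys in `override` replace those in `base`, recursively."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def to_properties(config: Dict[str, Any]) -> str:
    """Render a flat mapping as sorted `key=value` lines (serving.properties style)."""
    return "\n".join(f"{key}={config[key]}" for key in sorted(config))


# What yaml.safe_load might return for a base file and one experiment file.
BASE = {
    "model": "example-llm",  # hypothetical model identifier
    "serving": {
        "option.rolling_batch": "vllm",
        "option.max_rolling_batch_size": 32,
        "option.tensor_parallel_degree": 1,
    },
}
EXPERIMENT = {"serving": {"option.max_rolling_batch_size": 64}}
```

Calling `to_properties(deep_merge(BASE, EXPERIMENT)["serving"])` yields the base settings with only the batch size changed to 64, and `BASE` itself is left untouched, so each experiment is a few lines of YAML rather than a code change.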

Maintaining security through rapid deployment

Balancing rapid deployment with high standards of trust and security requires embedding security measures throughout the development and deployment lifecycle. Secure-by-design principles are adopted from the outset, making sure that security requirements are integrated into the architecture. All models are rigorously tested in development environments, alongside performance testing, to verify scalable performance and security before they reach production.

To maintain these high standards throughout the development process, the team employs several strategies:

- Running automated continuous integration and delivery (CI/CD) pipelines with built-in checks for vulnerabilities, compliance validation, and model integrity

- Using DJL-Serving’s encryption mechanisms for data in transit and at rest

- Relying on AWS services like SageMaker AI that provide enterprise-grade security features such as role-based access control (RBAC) and network isolation
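One of the built-in checks listed above, model integrity, is often implemented by recording a cryptographic digest of each model artifact when it is approved and refusing to deploy if a digest no longer matches. The sketch below uses SHA-256 from Python's standard library; the manifest format and function names are hypothetical, and the post does not say which integrity mechanism Salesforce's pipelines use.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so multi-GB weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(model_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """Return files that are missing or whose digest differs from the manifest."""
    problems = []
    for relative_name, expected in sorted(manifest.items()):
        path = model_dir / relative_name
        if not path.is_file():
            problems.append(f"missing: {relative_name}")
        elif sha256_of(path) != expected:
            problems.append(f"digest mismatch: {relative_name}")
    return problems
```

A pipeline stage would load the manifest saved at approval time, call `verify_artifacts`, and block the deployment if the returned list is not empty.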

The team runs frequent automated performance and security tests through small, incremental deployments, which surfaces issues early while minimizing risk. Collaboration with cloud providers and continuous monitoring of deployments keep the team compliant with the latest security standards and make sure rapid deployment aligns with robust security, trust, and reliability.
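Small incremental deployments can be modeled as a traffic-shifting schedule: route a growing share of requests to the new model version in fixed steps and roll back as soon as a health check fails. SageMaker AI deployment guardrails offer canary and linear traffic shifting with automatic rollback on CloudWatch alarms; the sketch below only mimics the linear variant's control flow in plain Python, and `health_check` is a hypothetical callback standing in for those alarms.

```python
from typing import Callable, List, Tuple


def linear_rollout(
    step_percent: int,
    health_check: Callable[[int], bool],
) -> Tuple[bool, List[int]]:
    """Shift traffic to the new version in equal steps; roll back on failure.

    Returns (succeeded, traffic percentages applied to the new version in order).
    """
    if not 0 < step_percent <= 100:
        raise ValueError("step_percent must be in (0, 100]")
    applied: List[int] = []
    percent = 0
    while percent < 100:
        percent = min(100, percent + step_percent)
        applied.append(percent)
        # Bake at this traffic level; any failed check sends all traffic back.
        if not health_check(percent):
            applied.append(0)
            return False, applied
    return True, applied
```

With 25% steps, a healthy rollout moves through 25, 50, 75, and 100 percent; if the check fails at 75 percent, the sketch records a return to 0 percent and reports failure, so only a fraction of traffic ever reached the faulty version.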

Focus on continuous improvement

As Salesforce’s generative AI needs scale, and with the ever-changing model landscape, the team continually works to improve its deployment infrastructure. Ongoing research and development efforts center on enhancing the performance, scalability, and efficiency of LLM deployments. The team is exploring new optimization techniques with SageMaker, including:

- Advanced quantization methods (INT4, AWQ, FP8)

- Tensor parallelism (splitting tensors across multiple GPUs)

- More efficient batching using caching strategies within DJL-Serving to boost throughput and reduce latency
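To show what the quantization item involves, the following sketch performs symmetric, group-wise INT4 weight quantization and dequantization in pure Python. Each group of weights shares one scale, and every weight is rounded to an integer in [-8, 7], so storage drops to 4 bits per weight plus one scale per group. This is plain round-to-nearest quantization, chosen for clarity; AWQ additionally rescales salient channels using activation statistics, and FP8 uses a floating-point format, neither of which is shown. The group size is an arbitrary example value.

```python
from typing import List, Tuple

QMIN, QMAX = -8, 7  # signed 4-bit integer range


def quantize_int4(weights: List[float], group_size: int = 4) -> Tuple[List[int], List[float]]:
    """Symmetric round-to-nearest INT4 quantization with one scale per group."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(value) for value in group)
        # Map the largest magnitude in the group to 7; an all-zero group gets scale 1.0.
        scale = max_abs / QMAX if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(QMIN, min(QMAX, round(value / scale))) for value in group)
    return codes, scales


def dequantize_int4(codes: List[int], scales: List[float], group_size: int = 4) -> List[float]:
    """Reconstruct approximate weights: each code times its group's scale."""
    return [q * scales[i // group_size] for i, q in enumerate(codes)]
```

Because every value is rounded to the nearest multiple of its group's scale, the reconstruction error per weight is at most half that scale. Smaller groups track local weight ranges more closely but store more scales.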

The team is also investigating emerging technologies like AWS AI chips (AWS Trainium and AWS Inferentia) and AWS Graviton processors to further improve cost and energy efficiency. Collaboration with open source communities and public cloud providers like AWS makes sure that the latest advancements are incorporated into deployment pipelines while also pushing the boundaries further. Salesforce is collaborating with AWS to contribute advanced features to DJL, such as additional configuration parameters, environment variables, and more granular logging metrics, which make it more capable and robust. A key focus is refining multi-framework support and distributed inference capabilities to provide seamless model integration across various environments.

Efforts are also underway to enhance FMOps practices, such as automated testing and deployment pipelines, to expedite production readiness. These initiatives aim to keep the team at the forefront of AI innovation, delivering cutting-edge solutions that align with business needs and meet customer expectations. The team is working closely with the SageMaker team to explore potential features and capabilities that support these areas.

Conclusion

Though exact metrics vary by use case, the Salesforce AI Model Serving team saw substantial improvements in terms of deployment speed and cost-efficiency with their strategy on SageMaker AI. They experienced faster iteration cycles, measured in days or even hours instead of weeks. With SageMaker AI, they reduced their model deployment time by as much as 50%.

To learn more about how SageMaker AI enhances Einstein’s LLM latency and throughput, see Revolutionizing AI: How Amazon SageMaker Enhances Einstein’s Large Language Model Latency and Throughput. For more information on how to get started with SageMaker AI, refer to Guide to getting set up with Amazon SageMaker AI.

About the authors

Sai Guruju is a Lead Member of Technical Staff at Salesforce. He has over 7 years of experience in software and ML engineering with a focus on scalable NLP and speech solutions. He completed his Bachelor of Technology in EE at IIT Delhi and has published his work at InterSpeech 2021 and AdNLP 2024.

Nitin Surya is a Lead Member of Technical Staff at Salesforce. He has over 8 years of experience in software and machine learning engineering. He completed his Bachelor of Technology in CS at VIT University and holds an MS in CS (with a major in Artificial Intelligence and Machine Learning) from the University of Illinois Chicago. He has three patents pending, and has published and contributed to papers at the CoRL Conference.

Srikanta Prasad is a Senior Manager in Product Management specializing in generative AI solutions, with over 20 years of experience across semiconductors, aerospace, aviation, print media, and software technology. At Salesforce, he leads model hosting and inference initiatives, focusing on LLM inference serving, LLMOps, and scalable AI deployments. Srikanta holds an MBA from the University of North Carolina and an MS from the National University of Singapore.

Rielah De Jesus is a Principal Solutions Architect at AWS who has successfully helped various enterprise customers in the DC, Maryland, and Virginia area move to the cloud. In her current role, she acts as a customer advocate and technical advisor focused on helping organizations like Salesforce achieve success on the AWS platform. She is also a staunch supporter of women in IT and is very passionate about finding ways to creatively use technology and data to solve everyday challenges.
