As language models continue to grow in size and complexity, so do the resources needed to train and deploy them. While large-scale models can achieve remarkable performance across a variety of benchmarks, they are often inaccessible to many organizations due to infrastructure limitations and high operational costs. This gap between capability and deployability presents a practical challenge, particularly for enterprises seeking to embed language models into real-time systems or cost-sensitive environments.

In recent years, small language models (SLMs) have emerged as a potential solution, offering reduced memory and compute requirements without entirely compromising on performance. Still, many SLMs struggle to provide consistent results across diverse tasks, and their design often involves trade-offs that limit generalization or usability.

ServiceNow AI Releases Apriel-5B: A Step Toward Practical AI at Scale

To address these concerns, ServiceNow AI has released Apriel-5B, a new family of small language models designed with a focus on inference throughput, training efficiency, and cross-domain versatility. With 4.8 billion parameters, Apriel-5B is small enough to be deployed on modest hardware but still performs competitively on a range of instruction-following and reasoning tasks.
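To put "modest hardware" in perspective, the short calculation below estimates the memory needed for the weights alone at the stated 4.8 billion parameters. It is a back-of-the-envelope sketch: the bytes-per-parameter table is an illustrative assumption about storage formats, and the figures exclude activations, the KV cache, and framework overhead.

```python
PARAM_COUNT = 4.8e9  # parameter count reported for Apriel-5B

# Bytes per parameter for two common storage formats (illustrative assumption).
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}


def weight_memory_gb(param_count, dtype):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return param_count * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(PARAM_COUNT, dtype):.1f} GB")
```

Under these assumptions, 16-bit weights come to about 9.6 GB, half of what 32-bit storage would need.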

The Apriel family includes two versions:

- Apriel-5B-Base, a pretrained model intended for further tuning or embedding in pipelines.

- Apriel-5B-Instruct, an instruction-tuned version aligned for chat, reasoning, and task completion.

Both models are released under the MIT license, supporting open experimentation and broader adoption across research and commercial use cases.
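For readers who want to try the instruction-tuned variant, the following is a minimal sketch using the Hugging Face `transformers` library. It assumes the checkpoint is published as `ServiceNow-AI/Apriel-5B-Instruct`, that the repository ships a chat template, and that `torch` and `transformers` are installed. The helper names `build_messages` and `generate_reply` are hypothetical and exist only for this illustration.

```python
MODEL_ID = "ServiceNow-AI/Apriel-5B-Instruct"  # repository name as cited in this article


def build_messages(user_prompt, system_prompt=None):
    """Return a chat history in the role/content format used by chat templates."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def generate_reply(user_prompt, max_new_tokens=128):
    """Generate one reply; downloads the checkpoint on first use."""
    # Heavy imports stay inside the function so build_messages can be
    # used without torch or transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)
    model.eval()

    # Assumption: the tokenizer provides a chat template for this checkpoint.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(
            input_ids=inputs["input_ids"],
            attention_mask=inputs["attention_mask"],
            max_new_tokens=max_new_tokens,
        )
    # Decode only the tokens generated after the prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize grouped-query attention in two sentences.")` would run on CPU as written; moving the model and inputs to a GPU is left out to keep the sketch short.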

Architectural Design and Technical Highlights

Apriel-5B was trained on over 4.5 trillion tokens, a dataset carefully constructed to cover multiple task categories, including natural language understanding, reasoning, and multilingual capabilities. The model uses a dense architecture optimized for inference efficiency, with key technical features such as:

- Rotary positional embeddings (RoPE) with a context window of 8,192 tokens, supporting long-sequence tasks.

- FlashAttention-2, enabling faster attention computation and improved memory utilization.

- Grouped-query attention (GQA), reducing memory overhead during autoregressive decoding.

- Training in BFloat16, which ensures compatibility with modern accelerators while maintaining numerical stability.
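As an illustration of the first item in the list above, here is a small pure-Python sketch of rotary positional embeddings. It uses the adjacent-pair formulation and the commonly used base of 10000; both are assumptions for illustration, not confirmed details of Apriel-5B's implementation. The point it demonstrates is that the dot product between a rotated query and a rotated key depends only on their relative offset.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even embedding dimension")
    out = []
    for i in range(0, d, 2):
        # Pair i // 2 rotates with frequency base ** (-i / d).
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]

# Both pairs of positions are 2 apart, so the attention scores match.
score_near = dot(rope(q, 5), rope(k, 3))
score_far = dot(rope(q, 12), rope(k, 10))
print(abs(score_near - score_far) < 1e-9)
```

Because each pair is rotated rather than shifted, vector norms are preserved and position information enters attention scores only through position differences.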

These architectural decisions allow Apriel-5B to maintain responsiveness and speed without relying on specialized hardware or extensive parallelization. The instruction-tuned version was fine-tuned using curated datasets and supervised techniques, enabling it to perform well on a range of instruction-following tasks with minimal prompting.
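To give a sense of why grouped-query attention reduces memory pressure during decoding, the sketch below compares key/value cache sizes at the 8,192-token context window. The layer count, head counts, and head dimension are hypothetical values chosen for round numbers; they are not Apriel-5B's published configuration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, batch_size=1):
    """Size of the key/value cache for one forward context, in bytes."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return (2 * batch_size * num_layers * num_kv_heads
            * head_dim * seq_len * bytes_per_value)


# Hypothetical configuration, for illustration only.
LAYERS = 32
QUERY_HEADS = 32
KV_HEADS = 8          # GQA: each group of 4 query heads shares one KV head
HEAD_DIM = 128
CONTEXT = 8192        # context window stated in the article

# Standard multi-head attention keeps one KV head per query head.
mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, CONTEXT)
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)

print(f"MHA cache: {mha / 2**30:.1f} GiB")
print(f"GQA cache: {gqa / 2**30:.1f} GiB")
print(f"Reduction: {mha // gqa}x")
```

With these assumed values and 2-byte (BFloat16) cache entries, sharing KV heads shrinks the cache from 4 GiB to 1 GiB per sequence, and the saving scales linearly with batch size.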

Evaluation Insights and Benchmark Comparisons

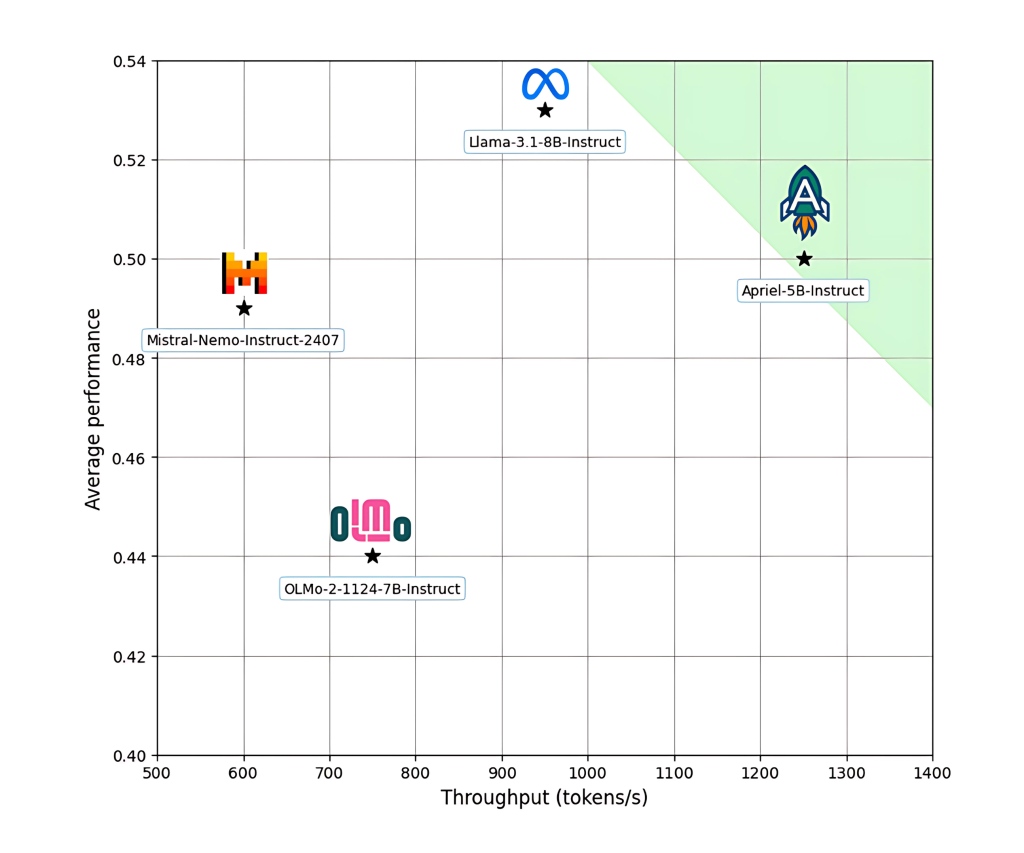

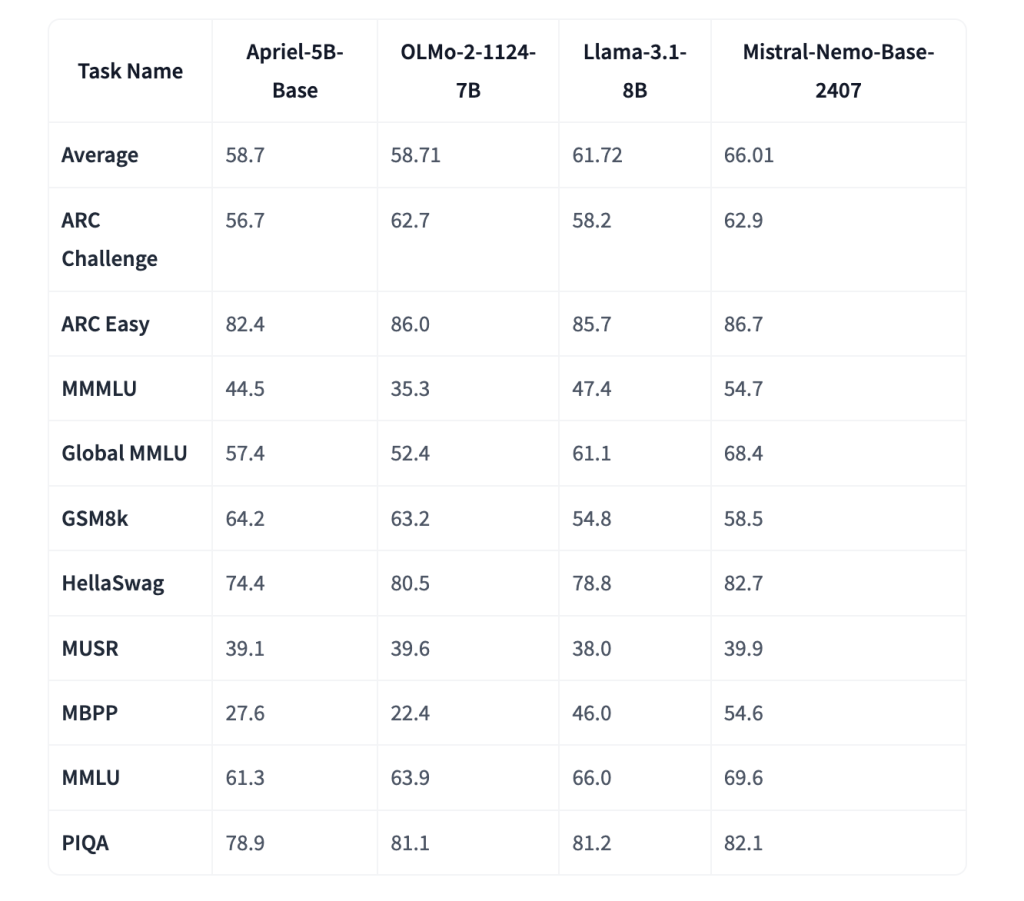

Apriel-5B-Instruct has been evaluated against several widely used open models, including Meta’s Llama-3.1-8B, Allen AI’s OLMo-2-7B, and Mistral-Nemo-12B. Despite its smaller size, Apriel shows competitive results across multiple benchmarks:

- Outperforms both OLMo-2-7B-Instruct and Mistral-Nemo-12B-Instruct on average across general-purpose tasks.

- Shows stronger results than Llama-3.1-8B-Instruct on math-focused tasks and on IFEval, which evaluates instruction-following consistency.

- Required 2.3x fewer GPU hours to train than OLMo-2-7B, underscoring its training efficiency.

These outcomes suggest that Apriel-5B hits a productive midpoint between lightweight deployment and task versatility, particularly in domains where real-time performance and limited resources are key considerations.

Conclusion: A Practical Addition to the Model Ecosystem

Apriel-5B represents a thoughtful approach to small model design, one that emphasizes balance rather than scale. By focusing on inference throughput, training efficiency, and core instruction-following performance, ServiceNow AI has created a model family that is easy to deploy, adaptable to varied use cases, and openly available for integration.

Its strong performance on math and reasoning benchmarks, combined with a permissive license and efficient compute profile, makes Apriel-5B a compelling choice for teams building AI capabilities into products, agents, or workflows. In a field increasingly defined by accessibility and real-world applicability, Apriel-5B is a practical step forward.

Check out ServiceNow-AI/Apriel-5B-Base and ServiceNow-AI/Apriel-5B-Instruct. All credit for this research goes to the researchers of this project.

The post Small Models, Big Impact: ServiceNow AI Releases Apriel-5B to Outperform Larger LLMs with Fewer Resources appeared first on MarkTechPost.
