Optimizing deep learning models for deployment in resource-constrained environments is more important than ever. Weight quantization addresses this need by reducing the precision of model parameters, typically from 32-bit floating point to lower bit-width representations such as 8-bit integers, yielding smaller models that can run faster on hardware with limited resources. This tutorial introduces weight quantization using PyTorch’s dynamic quantization on a pre-trained ResNet18 model. It shows how to inspect weight distributions, apply dynamic quantization to fully connected layers, compare model sizes, and visualize the resulting changes, covering both the background and the practical steps needed to start deploying quantized models.
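To make "reducing the precision" concrete, here is a minimal sketch of one common scheme, affine 8-bit quantization with a single scale and zero point. The function names and sample weights are hypothetical, and this is a generic illustration rather than the exact procedure PyTorch uses internally.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit codes using one shared scale and zero point."""
    qmin, qmax = -128, 127
    # The representable range must contain 0 so that 0.0 maps to an exact code.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    codes = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Recover approximate floats from the integer codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-0.42, -0.07, 0.0, 0.13, 0.58]  # hypothetical sample weights
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("scale:", scale, "zero point:", zero_point)
print("max round-trip error:", max_error)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of roughly half a quantization step.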

import torch
import torch.nn as nn
import torch.quantization
import torchvision.models as models
import matplotlib.pyplot as plt
import numpy as np
import os

print("Torch version:", torch.__version__)

We import the required libraries (PyTorch, torchvision, and matplotlib, among others) and print the PyTorch version to confirm that everything needed for model manipulation and visualization is available.

# torchvision >= 0.13 selects pretrained weights via weights=; older releases use pretrained=True.
model_fp32 = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)
model_fp32.eval()
print("Pretrained ResNet18 (FP32) model loaded.")

A pretrained ResNet18 model is loaded in FP32 (32-bit floating-point) precision and set to evaluation mode, ready for inspection and quantization.

# Flatten the final fully connected layer's weights into a 1-D NumPy array.
fc_weights_fp32 = model_fp32.fc.weight.detach().cpu().numpy().flatten()

plt.figure(figsize=(8, 4))
plt.hist(fc_weights_fp32, bins=50, color='skyblue', edgecolor='black')
plt.title("FP32 - FC Layer Weight Distribution")
plt.xlabel("Weight values")
plt.ylabel("Frequency")
plt.grid(True)
plt.show()

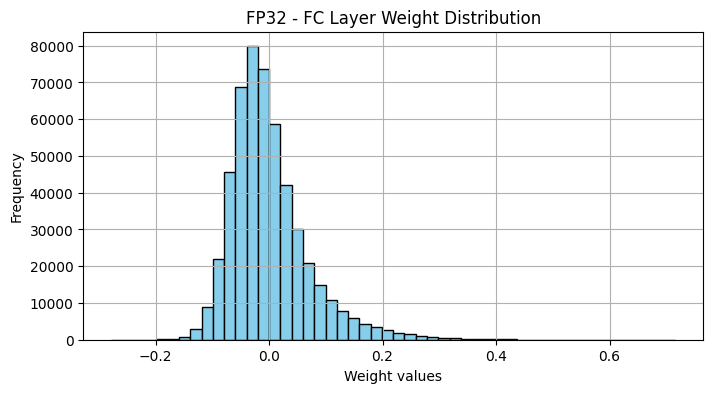

In this block, the weights from the final fully connected layer of the FP32 model are extracted and flattened, then a histogram is plotted to visualize their distribution before any quantization is applied.

quantized_model = torch.quantization.quantize_dynamic(model_fp32, {nn.Linear}, dtype=torch.qint8)
quantized_model.eval()
print("Dynamic quantization applied to the model.")

We apply dynamic quantization to the model, targeting only the nn.Linear layers: their weights are converted to 8-bit integers (qint8) ahead of time, and activations are quantized on the fly at inference. In ResNet18 the only nn.Linear module is the final fc layer, so the convolutional layers remain in FP32 and the reduction in size and latency is correspondingly modest.
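The selective nature of this call is easier to see on a small network. The sketch below uses a hypothetical toy model (not part of the tutorial) and checks which child modules change class after conversion; it assumes a PyTorch build with a supported quantized CPU backend.

```python
import torch
import torch.nn as nn

# Hypothetical toy network: one convolution followed by one linear layer.
toy = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(4, 2),
).eval()

# Same call as in the tutorial; by default it returns a converted copy.
toy_q = torch.quantization.quantize_dynamic(toy, {nn.Linear}, dtype=torch.qint8)

# Record, position by position, whether the module class was swapped.
changed = []
for (name, before), (_, after) in zip(toy.named_children(), toy_q.named_children()):
    swapped = type(before) is not type(after)
    changed.append(swapped)
    print(name, type(before).__name__, "->", type(after).__module__, "| swapped:", swapped)

# The converted model still accepts the same input shape.
out = toy_q(torch.randn(1, 3, 8, 8))
print(out.shape)
```

Only the entry for the linear layer should report a swap, which mirrors what happens to ResNet18's fc layer.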

def get_model_size(model, filename="temp.p"):
    """Save the model's state_dict to disk and return the file size in MB."""
    torch.save(model.state_dict(), filename)
    size = os.path.getsize(filename) / 1e6  # bytes -> megabytes
    os.remove(filename)
    return size

fp32_size = get_model_size(model_fp32, "fp32_model.p")
quant_size = get_model_size(quantized_model, "quant_model.p")

print(f"FP32 Model Size: {fp32_size:.2f} MB")
print(f"Quantized Model Size: {quant_size:.2f} MB")

A helper function saves the model's state_dict to a temporary file, reads its size on disk, and deletes the file. It is then used to compare the original FP32 model with the quantized model, showing how much compression quantization achieves.
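As a sanity check on the measured sizes, a back-of-envelope estimate can be made from parameter counts alone. The figures below are assumptions about torchvision's ResNet18 (about 11.69 million parameters, with a 512-to-1000 fc layer) rather than values printed by the tutorial, and the estimate ignores BatchNorm buffers, quantization metadata, and serialization overhead.

```python
# Assumed architecture facts for torchvision's ResNet18 (not tutorial output).
TOTAL_PARAMS = 11_689_512       # all learnable parameters
FC_WEIGHT_PARAMS = 512 * 1000   # fc.weight: in_features=512, out_features=1000

BYTES_FP32, BYTES_INT8 = 4, 1

fp32_mb = TOTAL_PARAMS * BYTES_FP32 / 1e6
# Only fc.weight shrinks from 4 bytes to 1 byte per value.
saved_mb = FC_WEIGHT_PARAMS * (BYTES_FP32 - BYTES_INT8) / 1e6
estimated_quant_mb = fp32_mb - saved_mb

print(f"Estimated FP32 size:      {fp32_mb:.2f} MB")
print(f"Estimated quantized size: {estimated_quant_mb:.2f} MB")
print(f"Estimated reduction:      {100 * saved_mb / fp32_mb:.1f}%")
```

The estimate suggests a saving of only a few percent, because almost all of ResNet18's parameters sit in convolutional layers that dynamic quantization leaves untouched.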

dummy_input = torch.randn(1, 3, 224, 224)

with torch.no_grad():
    output_fp32 = model_fp32(dummy_input)
    output_quant = quantized_model(dummy_input)

print("Output from FP32 model (first 5 elements):", output_fp32[0][:5])
print("Output from Quantized model (first 5 elements):", output_quant[0][:5])

A random tensor with the shape of a single 224×224 RGB image stands in for real input, and both the FP32 and quantized models are run on it so that you can compare their outputs and check that quantization does not drastically alter the predictions.
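Eyeballing five logits is a weak check, so it can help to summarize the comparison in numbers. The helper below is a hypothetical sketch that reports the largest absolute logit gap and the fraction of inputs whose top-1 class agrees; it is demonstrated on synthetic tensors rather than on the models themselves.

```python
import torch


def compare_outputs(reference, candidate):
    """Return (largest absolute logit gap, fraction of matching top-1 classes)."""
    max_abs_diff = (reference - candidate).abs().max().item()
    same_top1 = reference.argmax(dim=1) == candidate.argmax(dim=1)
    return max_abs_diff, same_top1.float().mean().item()


# Synthetic stand-ins for two models' logits: a batch of 4 inputs, 10 classes.
torch.manual_seed(0)
ref = torch.randn(4, 10)
noisy = ref + 1e-3 * torch.randn(4, 10)

max_diff, agreement = compare_outputs(ref, noisy)
print(f"max |diff| = {max_diff:.4f}, top-1 agreement = {agreement:.2f}")
```

With the tutorial's tensors, the call would be compare_outputs(output_fp32, output_quant); a meaningful accuracy check would use a batch of real validation images rather than random noise.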

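if hasattr(quantized_model.fc, 'weight'):
    # Dynamically quantized Linear modules expose their quantized weight via weight().
    fc_weights_quant = quantized_model.fc.weight().dequantize().cpu().numpy().flatten()
else:
    # Fallback through a private helper (assumed available; may change between PyTorch releases).
    fc_weights_quant = quantized_model.fc._packed_params._weight_bias()[0].dequantize().cpu().numpy().flatten()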

plt.figure(figsize=(14, 5))

# Left panel: original FP32 weights.
plt.subplot(1, 2, 1)
plt.hist(fc_weights_fp32, bins=50, color='skyblue', edgecolor='black')
plt.title("FP32 - FC Layer Weight Distribution")
plt.xlabel("Weight values")
plt.ylabel("Frequency")
plt.grid(True)

# Right panel: quantized weights after dequantization.
plt.subplot(1, 2, 2)
plt.hist(fc_weights_quant, bins=50, color='salmon', edgecolor='black')
plt.title("Quantized - FC Layer Weight Distribution")
plt.xlabel("Weight values")
plt.ylabel("Frequency")
plt.grid(True)

plt.tight_layout()
plt.show()

In this block, the quantized weights (after dequantization) are extracted from the fully connected layer and compared via histograms against the original FP32 weights to illustrate the changes in weight distribution due to quantization.
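With 50 bins the two histograms can look nearly identical, because the main change is that the weights are snapped onto a grid of at most 256 levels. The sketch below illustrates this on synthetic weights using symmetric per-tensor int8 quantization; the tensor, its scaling, and the choice of scale are assumptions for illustration, and the scheme PyTorch picks for the real fc layer may differ.

```python
import torch

torch.manual_seed(0)
w = 0.05 * torch.randn(1000, 512)  # synthetic weights shaped like ResNet18's fc layer

# Symmetric per-tensor int8: zero point 0, scale set by the largest magnitude.
scale = w.abs().max().item() / 127
w_q = torch.quantize_per_tensor(w, scale=scale, zero_point=0, dtype=torch.qint8)
w_dq = w_q.dequantize()

n_fp32 = torch.unique(w).numel()
n_int8 = torch.unique(w_dq).numel()
max_err = (w - w_dq).abs().max().item()

print(f"distinct values: FP32={n_fp32}, dequantized={n_int8}")
print(f"max round-trip error={max_err:.6f}, scale/2={scale / 2:.6f}")
```

Plotting fc_weights_quant with a much larger bin count than 50 should reveal the same comb-like structure in the tutorial's own weights.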

In conclusion, this tutorial has walked step by step through weight quantization and its effect on model size. By dynamically quantizing a pre-trained ResNet18 model, we compared the weight distribution of the fully connected layer before and after quantization and measured the reduction in size on disk. Quantization can also improve inference speed, although latency was not measured here. This exploration sets the stage for further experimentation, such as Quantization Aware Training (QAT), which can further improve the accuracy of quantized models.
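For readers who want to check the speed claim themselves, a simple timing helper is one place to start. This is a minimal sketch with a hypothetical function name and a stand-in workload; results depend heavily on hardware, thread settings, and batch size.

```python
import statistics
import time


def measure_latency_ms(fn, warmup=3, runs=20):
    """Return the median wall-clock time of fn() in milliseconds."""
    for _ in range(warmup):  # warm caches and any lazy initialization
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1e3)
    return statistics.median(samples)


# Stand-in workload so the sketch runs without a model.
latency = measure_latency_ms(lambda: sum(i * i for i in range(10_000)))
print(f"median latency: {latency:.3f} ms")
```

With the tutorial's objects, the same helper could be called inside torch.no_grad() as measure_latency_ms(lambda: model_fp32(dummy_input)) and measure_latency_ms(lambda: quantized_model(dummy_input)).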


The post A Coding Implementation on Introduction to Weight Quantization: Key Aspect in Enhancing Efficiency in Deep Learning and LLMs appeared first on MarkTechPost.
