In this tutorial, we demonstrate an end-to-end solution for converting text into audio using an open-source text-to-speech (TTS) model available on Hugging Face. Using the Coqui TTS library, the tutorial walks you through initializing a TTS model (in our case, "tts_models/en/ljspeech/tacotron2-DDC"), processing your input text, and saving the synthesized speech as a WAV audio file. We also use Python's built-in wave module together with a context manager to inspect key attributes of the audio file: duration, sample rate, sample width, and channel count. This step-by-step guide is aimed at both beginners and advanced developers who want to understand how to generate speech from text and run basic diagnostics on the output.

    !pip install TTS

The command above installs the Coqui TTS library, which provides open-source text-to-speech models for converting text into audio. It also pulls in the library's dependencies, so you can start experimenting with TTS features right away. The leading ! runs the command as a shell command from a notebook cell such as Colab; in a regular terminal, omit it.
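Before loading a model, it can help to confirm that the install landed in the active environment. The sketch below is one way to check this with the standard library. The helper name installed_version is hypothetical, and the check assumes the distribution is registered under the name TTS, as the pip command suggests.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "TTS" is assumed to be the distribution name used by `pip install TTS`.
    version = installed_version("TTS")
    if version is None:
        print("TTS is not installed in this environment; run: pip install TTS")
    else:
        print(f"Coqui TTS {version} is installed")
```

If the helper reports that the package is missing inside a notebook, the install cell probably ran against a different kernel or needs a runtime restart.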

    from TTS.api import TTS
    import contextlib
    import wave

We import the essential modules: TTS from the TTS API for text-to-speech synthesis using Hugging Face models, and the built-in contextlib and wave modules for safely opening and analyzing WAV audio files.
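To make the role of contextlib concrete, here is a minimal sketch of what contextlib.closing does. The Resource class is a made-up stand-in for any object that has a close() method; it is not part of the tutorial or of any library.

```python
import contextlib


class Resource:
    """Hypothetical stand-in for an object that exposes close()."""

    def __init__(self) -> None:
        self.closed = False

    def close(self) -> None:
        self.closed = True


def use_resource() -> Resource:
    resource = Resource()
    # closing() wraps the object so that close() runs when the block exits,
    # even if the body raises an exception.
    with contextlib.closing(resource) as r:
        assert r is resource  # closing() yields the wrapped object itself
        assert not r.closed   # still open inside the block
    return resource


if __name__ == "__main__":
    print(use_resource().closed)
```

Objects returned by wave.open have supported the with statement directly since Python 3.4, so wrapping them in contextlib.closing is optional; both forms close the file when the block ends.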

    def text_to_speech(text: str, output_path: str = "output.wav", use_gpu: bool = False):
        """
        Converts input text to speech and saves the result to an audio file.

        Parameters:
            text (str): The text to convert.
            output_path (str): Output WAV file path.
            use_gpu (bool): Use GPU for inference if available.
        """
        model_name = "tts_models/en/ljspeech/tacotron2-DDC"
        tts = TTS(model_name=model_name, progress_bar=True, gpu=use_gpu)
        tts.tts_to_file(text=text, file_path=output_path)
        print(f"Audio file generated successfully: {output_path}")

The text_to_speech function accepts a string of text, along with an optional output file path and a GPU usage flag, and uses the Coqui TTS model (specified as "tts_models/en/ljspeech/tacotron2-DDC") to synthesize the provided text into a WAV audio file. After the conversion succeeds, it prints a confirmation message indicating where the audio file has been saved.
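Because text_to_speech passes its arguments straight to the model, a small validation step in front of it can catch empty input or an unexpected file extension early. The following is a minimal sketch under that assumption; prepare_request is a hypothetical helper and is not part of Coqui TTS.

```python
from pathlib import Path
from typing import Tuple


def prepare_request(text: str, output_path: str = "output.wav") -> Tuple[str, Path]:
    """Validate and normalize arguments before handing them to a synthesizer.

    Hypothetical helper for illustration; not part of Coqui TTS.
    """
    cleaned = " ".join(text.split())  # collapse runs of whitespace and newlines
    if not cleaned:
        raise ValueError("text must contain at least one non-whitespace character")

    path = Path(output_path)
    if path.suffix.lower() != ".wav":
        path = path.with_suffix(".wav")  # the tutorial writes WAV output
    path.parent.mkdir(parents=True, exist_ok=True)  # create missing folders
    return cleaned, path
```

The returned values could then be passed on as text_to_speech(cleaned, output_path=str(path)).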

    def analyze_audio(file_path: str):
        """
        Analyzes the WAV audio file and prints details about it.

        Parameters:
            file_path (str): The path to the WAV audio file.
        """
        with contextlib.closing(wave.open(file_path, 'rb')) as wf:
            frames = wf.getnframes()
            rate = wf.getframerate()
            duration = frames / float(rate)
            sample_width = wf.getsampwidth()
            channels = wf.getnchannels()

        print("\nAudio Analysis:")
        print(f" - Duration : {duration:.2f} seconds")
        print(f" - Frame Rate : {rate} frames per second")
        print(f" - Sample Width : {sample_width} bytes")
        print(f" - Channels : {channels}")

The analyze_audio function opens the specified WAV file and reads its key parameters with Python's wave module: frame count, frame rate, sample width, and number of channels. It derives the duration by dividing the frame count by the frame rate, then prints these details as a formatted summary so you can verify the technical characteristics of the synthesized audio.
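If you want to use these values in code rather than only print them, a variant that returns a structured result may be more convenient. The sketch below is one possible shape, not the tutorial's implementation: WavSummary, summarize_wav, and make_silence are hypothetical names, and the 22050 Hz default is only an illustrative value.

```python
import io
import wave
from dataclasses import dataclass


@dataclass(frozen=True)
class WavSummary:
    frames: int
    frame_rate: int
    sample_width: int  # bytes per sample
    channels: int

    @property
    def duration(self) -> float:
        # Same formula as analyze_audio: total frames / frames per second.
        return self.frames / self.frame_rate

    @property
    def bit_depth(self) -> int:
        return self.sample_width * 8


def summarize_wav(source) -> WavSummary:
    """Read header fields from a WAV file path or binary file object."""
    with wave.open(source, "rb") as wf:
        return WavSummary(
            frames=wf.getnframes(),
            frame_rate=wf.getframerate(),
            sample_width=wf.getsampwidth(),
            channels=wf.getnchannels(),
        )


def make_silence(seconds: float, frame_rate: int = 22050) -> io.BytesIO:
    """Build an in-memory mono 16-bit WAV of silence, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(frame_rate)
        wf.writeframes(b"\x00\x00" * int(seconds * frame_rate))
    buffer.seek(0)  # rewind so the buffer can be read back
    return buffer


if __name__ == "__main__":
    summary = summarize_wav(make_silence(2.0))
    print(summary, summary.duration, summary.bit_depth)
```

For a real run, summarize_wav("output.wav") would read the file produced by text_to_speech.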

    if __name__ == "__main__":
        sample_text = (
            "Marktechpost is an AI News Platform providing easy-to-consume, byte size updates in machine learning, deep learning, and data science research. Our vision is to showcase the hottest research trends in AI from around the world using our innovative method of search and discovery"
        )
        output_file = "output.wav"
        text_to_speech(sample_text, output_path=output_file)
        analyze_audio(output_file)

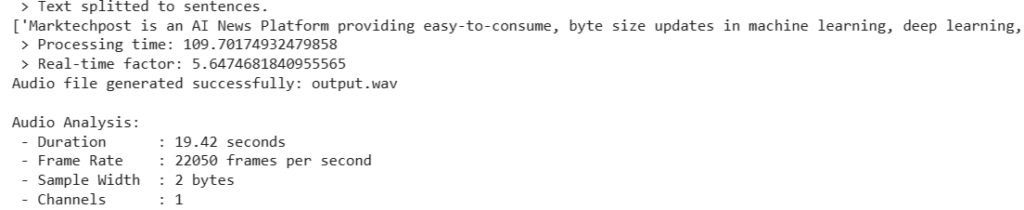

The if __name__ == “__main__”: block serves as the script’s entry point when executed directly. This segment defines a sample text describing an AI news platform. The text_to_speech function is called to synthesize this text into an audio file named “output.wav”, and finally, the analyze_audio function is invoked to print the audio’s detailed parameters.

Main Function Output
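The entry point in the tutorial hard-codes the text and the output path. If you would rather pass them on the command line, an argparse wrapper is one option. This is a sketch only: the argument names (text, --output, --gpu) and the build_parser and main functions are assumptions made for illustration, and the calls to the tutorial's functions are left as comments so the block runs on its own.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(
        description="Synthesize speech and report WAV details."
    )
    parser.add_argument("text", help="text to convert to speech")
    parser.add_argument("--output", default="output.wav", help="output WAV file path")
    parser.add_argument("--gpu", action="store_true", help="request GPU inference")
    return parser


def main(argv: Optional[List[str]] = None) -> argparse.Namespace:
    # argv=None makes argparse read the real command line (sys.argv).
    args = build_parser().parse_args(argv)
    # In the tutorial script, these calls would replace the hard-coded values:
    #   text_to_speech(args.text, output_path=args.output, use_gpu=args.gpu)
    #   analyze_audio(args.output)
    return args
```

In a script, the entry-point guard would then simply call main(), and a run might look like: python tts_demo.py "Hello there" --output hello.wav, where tts_demo.py is a placeholder file name.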

In conclusion, this implementation shows how to use open-source TTS tools and libraries to convert text to audio and then run a basic diagnostic analysis on the resulting file. By combining the Hugging Face models available through the Coqui TTS library with Python's built-in wave module, you get a compact workflow that synthesizes speech and confirms the output's duration, sample rate, sample width, and channel count. Whether you aim to build conversational agents, automate voice responses, or simply explore speech synthesis, this tutorial provides a foundation that you can customize and extend as needed.

Here is the Colab Notebook.

The post Step by Step Guide on Converting Text to High-Quality Audio Using an Open Source TTS Model on Hugging Face: Including Detailed Audio File Analysis and Diagnostic Tools in Python appeared first on MarkTechPost.
