Large language models, built on transformer architectures, power applications such as chat, code generation, and search, but at billions of parameters they are increasingly expensive to run. Keeping latency low and throughput high at that scale puts pressure on both algorithm design and system-level optimization. Serving these models efficiently now requires careful orchestration of memory, communication, and compute resources.

A critical challenge in this space is how the sparsity introduced by Mixture-of-Experts (MoE) models affects inference performance. These models activate only a subset of feed-forward networks (experts) for each token, which reduces the computation per token. However, this selective activation also leaves hardware underutilized. During inference, attention modules are bottlenecked by frequent memory access to key-value caches, while the FFN modules do too little work per step because each expert receives only a small fraction of the tokens. As a result, GPU utilization drops significantly, especially during decoding, which inflates operational costs.

Systems such as vLLM and TensorRT-LLM address inference scaling through parallelism and optimized kernels, but they remain constrained. They serve the model as a single unit, so they cannot scale attention and FFN components independently. As MoE models grow in size and sparsity, this leads to smaller active batches per expert, weakening the benefits of batching for FFNs. Moreover, tensor and pipeline parallelism add communication overhead, especially across nodes, which becomes a limiting factor in multi-GPU environments.
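
The shrinking per-expert batch can be illustrated with simple arithmetic. The sketch below is not taken from the paper: it assumes tokens are routed uniformly across experts, and the function name and the two configurations are hypothetical, chosen only to show the trend.

```python
def avg_tokens_per_expert(batch_tokens: int, num_experts: int, top_k: int) -> float:
    """Average tokens each expert receives per step, assuming uniform routing.

    Every token is sent to top_k experts, so the batch produces
    batch_tokens * top_k expert assignments spread over num_experts experts.
    """
    if not 0 < top_k <= num_experts:
        raise ValueError("top_k must be between 1 and num_experts")
    return batch_tokens * top_k / num_experts


# Hypothetical configurations: the same decoding batch, increasing sparsity.
for num_experts in (8, 64):
    share = avg_tokens_per_expert(batch_tokens=256, num_experts=num_experts, top_k=2)
    print(f"{num_experts} experts, top-2: about {share:.0f} tokens per expert")
```

With the decoding batch held fixed, moving from 8 to 64 experts cuts the average batch seen by each expert by a factor of eight, which is the effect that makes FFN batching less effective.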

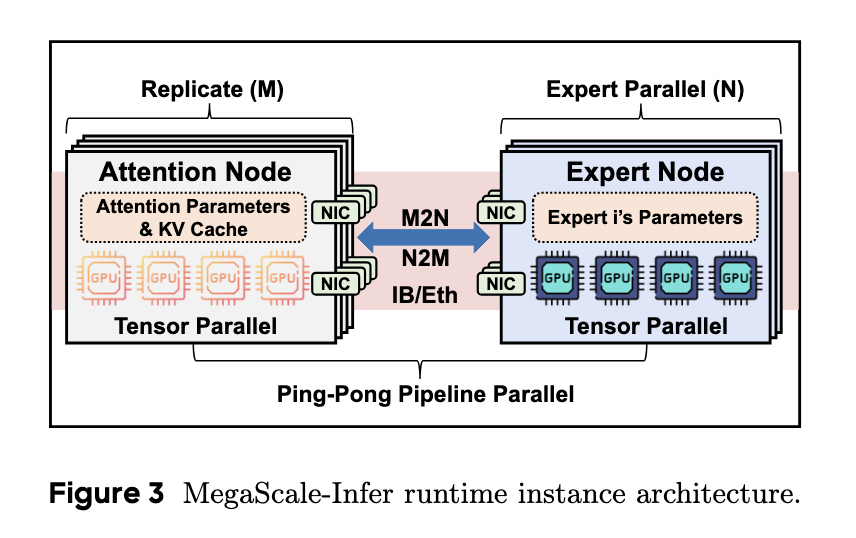

ByteDance and Peking University researchers have introduced MegaScale-Infer, a system that rethinks the architecture of MoE serving. Instead of serving the model as a monolithic block, the researchers disaggregate the attention and FFN modules and deploy them on separate GPUs. This separation allows scaling and parallelism strategies to be tailored to each module. Attention modules, which are memory-intensive, are replicated so that requests from many replicas are aggregated into larger batches for the experts, while FFN modules are scaled using expert parallelism. The system also supports heterogeneous GPU deployment, assigning cost-effective, memory-heavy GPUs to attention and compute-optimized GPUs to FFNs. Together, these choices improve resource utilization and make deployment more flexible.
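
To make the aggregation idea concrete, here is a rough sizing sketch. It assumes uniform routing and treats every attention replica as contributing the same number of tokens per step. The function name, parameters, and numbers are illustrative assumptions, not the system's actual planning logic.

```python
def attention_replicas_needed(
    target_tokens_per_expert: int,
    tokens_per_replica: int,
    num_experts: int,
    top_k: int,
) -> int:
    """Smallest number of attention replicas whose combined tokens give each
    expert at least target_tokens_per_expert tokens, assuming uniform routing.
    """
    if min(target_tokens_per_expert, tokens_per_replica, num_experts, top_k) <= 0:
        raise ValueError("all arguments must be positive")
    # One replica contributes tokens_per_replica * top_k / num_experts tokens
    # to each expert on average; integer ceiling division avoids float rounding.
    numerator = target_tokens_per_expert * num_experts
    denominator = tokens_per_replica * top_k
    return -(-numerator // denominator)


# Hypothetical numbers: 8 experts with top-2 routing, 64 tokens per replica.
print(attention_replicas_needed(128, 64, num_experts=8, top_k=2))
```

In this toy setting a single replica gives each expert only about 16 tokens per step, so several replicas must feed the same experts before the FFN batch becomes large enough to use the GPU well.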

To further optimize performance, MegaScale-Infer employs a ping-pong pipeline parallelism strategy. The idea is to break down batches of requests into smaller micro-batches that alternate between attention and FFN modules, ensuring that neither component sits idle. The system determines the optimal number of micro-batches required to maintain high utilization, considering compute time, communication latency, and hardware setup. For example, if the communication time is less than half the compute time, at least three micro-batches are used. Further, the system integrates a high-performance M2N communication library that avoids unnecessary GPU-to-CPU data copies, reducing latency and instability. This library replaces the traditional All-to-All routing with a more efficient sender-receiver model designed specifically for MoE’s token dispatch pattern.
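
The three-micro-batch threshold can be reproduced with a small calculation. The sketch below assumes that attention and FFN take the same time per micro-batch (`compute_time`), and that a module stays busy only if it can work through all `m` micro-batches in no less time than one micro-batch needs for a full round trip, that is `m * compute_time >= 2 * (compute_time + comm_time)`. Both the condition and the function names are assumptions made for illustration.

```python
import math


def min_micro_batches(compute_time: float, comm_time: float) -> int:
    """Smallest m with m * compute_time >= 2 * (compute_time + comm_time)."""
    if compute_time <= 0 or comm_time < 0:
        raise ValueError("compute_time must be positive and comm_time non-negative")
    return math.ceil(2 * (compute_time + comm_time) / compute_time)


def module_utilization(m: int, compute_time: float, comm_time: float) -> float:
    """Fraction of time a module is busy with m micro-batches in flight."""
    round_trip = 2 * (compute_time + comm_time)
    return min(1.0, m * compute_time / round_trip)


# Any positive communication time below half the compute time yields 3.
print(min_micro_batches(compute_time=1.0, comm_time=0.25))
# Communication as slow as compute needs one more micro-batch.
print(min_micro_batches(compute_time=1.0, comm_time=1.0))
# With only two micro-batches the modules would idle part of the time.
print(module_utilization(2, compute_time=1.0, comm_time=0.25))
```

Under this model, adding micro-batches beyond the minimum does not raise utilization further, so the smallest value that satisfies the condition is the natural choice.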

MegaScale-Infer was tested on multiple large-scale MoE models, including Mixtral 8×22B, DBRX, and a scaled custom model with 317 billion parameters. In experiments on homogeneous setups using NVIDIA Ampere GPUs, MegaScale-Infer improved per-GPU decoding throughput by up to 2.56× over vLLM and 1.28× over TensorRT-LLM. On the scaled model, the gains reached 7.11× over vLLM and 1.90× over TensorRT-LLM. On heterogeneous clusters with H20 GPUs for attention and L40S GPUs for FFNs, the system achieved up to 3.24× and 1.86× higher throughput per dollar than the two baselines, respectively. Its M2N communication library delivered up to 4.2× higher throughput and 68.2% lower latency than NCCL.

The paper identifies a clear problem, underutilized GPUs during MoE inference, and offers a practical solution by disaggregating the attention and FFN modules. Combined with micro-batch pipelining and a custom communication library, this disaggregation improves both serving throughput and cost efficiency.

All credit for this research goes to the researchers of this project.

The post This AI Paper from ByteDance Introduces MegaScale-Infer: A Disaggregated Expert Parallelism System for Efficient and Scalable MoE-Based LLM Serving appeared first on MarkTechPost.
