AI agents are quickly becoming core components for handling complex human interactions, particularly in business environments where conversations span multiple turns and involve task execution, information extraction, and adherence to specific procedural rules. Unlike traditional chatbots that answer single-turn questions, these agents must maintain context across several dialogue exchanges while integrating external data and tools. These challenges demand systems that can pursue user goals incrementally, engage in feedback loops, and invoke structured functions, such as API calls, based on the conversation state. These capabilities depend heavily on the availability of training datasets that reflect the natural complexity and sequencing of such tasks. As AI agents are expected to operate under domain-specific constraints and execute task-relevant functions in finance, retail, and customer support, the demand for nuanced, verified training data is growing significantly.

The central bottleneck in scaling agent capability has been the lack of high-quality, multi-turn datasets that reflect realistic user interactions. Collecting such data manually is slow and costly, and it requires domain knowledge to construct tasks that represent actual use cases. Moreover, even leading language models tend to underperform in conversations that require tracking prior context, using tools precisely, or dynamically adjusting their strategy. Without structured training datasets that reflect these challenges, models are prone to execution errors and struggle to maintain goal alignment across turns. These limitations become more pronounced in scenarios that involve tool use, such as executing function calls, retrieving external data, or fulfilling service requests that require multiple stages of information exchange.

Various frameworks have attempted to bridge this gap through synthetic data generation or task-specific tuning. Some efforts, like APIGen and knowledge distillation methods, have helped generate single-turn task data or simplified templates. Tool-use models have been enhanced with frameworks that provide fixed sets of functions, but these often lack the flexibility to adapt to dynamic tool environments. Other attempts, such as MAG-V, MATRIX, and BUTTON, use multi-agent systems to simulate training interactions but suffer from inadequate quality controls or rely on fixed instruction structures. Many of these tools either fail to capture long-term dependencies or rely on brittle rule-based systems that lack generalizability. Even popular evaluation benchmarks like MultiChallenge and ToolDial struggle to emulate the intricacies of realistic conversations, often because of overly simplified interaction formats.

A research team from Salesforce AI Research introduced APIGen-MT, a novel two-phase data generation pipeline designed to create high-quality, multi-turn interaction data between agents and simulated human users. The approach emphasizes realism, structure, and verification: it first constructs validated task blueprints and then simulates detailed agent-human conversations in executable environments. Unlike earlier approaches, this method employs a layered validation mechanism that uses both automated checkers and committees of large language models to assess task coherence, accuracy, and feasibility. Using this synthetic data, the researchers trained the xLAM-2-fc-r model series, ranging from 1 billion to 70 billion parameters, which significantly outperforms strong baselines on major multi-turn agent benchmarks.
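To make the committee idea concrete, here is a minimal sketch of majority-vote acceptance, assuming each reviewer returns a simple pass/fail verdict. The reviewer functions below are hypothetical rule-based stand-ins for LLM judges; the paper's actual prompts, scoring scheme, and aggregation rule may differ.

```python
from collections import Counter
from typing import Callable, Sequence, Tuple

# A reviewer inspects a task blueprint and returns "pass" or "fail".
# In APIGen-MT these would be LLM judges; here they are hypothetical stand-ins.
Reviewer = Callable[[dict], str]


def committee_verdict(task: dict, reviewers: Sequence[Reviewer]) -> Tuple[bool, Counter]:
    """Accept the task only if a strict majority of reviewers vote "pass"."""
    votes = Counter(review(task) for review in reviewers)
    accepted = votes["pass"] > len(reviewers) / 2
    return accepted, votes


# Hypothetical rule-of-thumb reviewers standing in for LLM judges.
def has_instruction(task: dict) -> str:
    return "pass" if task.get("instruction") else "fail"


def has_actions(task: dict) -> str:
    return "pass" if task.get("actions") else "fail"


def outputs_match_actions(task: dict) -> str:
    # One expected output per ground-truth action.
    return "pass" if len(task.get("outputs", [])) == len(task.get("actions", [])) else "fail"


if __name__ == "__main__":
    task = {
        "instruction": "Cancel order W1 and refund it to the original card.",
        "actions": [{"name": "cancel_order", "arguments": {"order_id": "W1"}}],
        "outputs": ["cancelled"],
    }
    ok, votes = committee_verdict(task, [has_instruction, has_actions, outputs_match_actions])
    print(ok, dict(votes))  # True {'pass': 3}
```

A strict majority keeps a single lenient reviewer from admitting a flawed task on its own; a real pipeline might instead require unanimity or average numeric scores.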

The architecture behind APIGen-MT is split into two main phases. In Phase 1, an LLM-driven generator creates a task configuration by proposing a user intent instruction, a sequence of ground-truth actions, and the expected outputs. These proposals are then validated for format correctness, executability, and semantic coherence using a combination of rule-based checkers and a multi-agent LLM review committee. If a proposal fails at any stage, a feedback mechanism reflects on the errors and proposes improvements. Successful tasks move to Phase 2, where a simulation engine generates realistic dialogues between a simulated human user and a test agent. The agent responds to user inputs by calling APIs, interpreting their outputs, and advancing the conversation across turns. Only dialogue trajectories that match the expected ground truth are included in the final training dataset, ensuring both functional accuracy and natural dialogue flow.
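The two phases can be sketched in Python under heavily simplified assumptions. Everything here is hypothetical: a toy order database (`INITIAL_DB`), a single tool (`cancel_order`), a `Blueprint` record holding the instruction, ground-truth actions, and expected outputs, and a plain output comparison standing in for the LLM review committee. Phase 2 is reduced to its final filter: a simulated trajectory is kept only if replaying the agent's actions leaves the environment in the same state as the ground-truth actions. That is one plausible reading of "match the expected ground truth," not necessarily the paper's exact criterion.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical toy environment: a tiny order database and a tool that mutates it.
INITIAL_DB = {"W1": {"status": "pending"}, "W2": {"status": "delivered"}}


def cancel_order(db: dict, order_id: str) -> str:
    if db[order_id]["status"] != "pending":
        raise ValueError(f"order {order_id} cannot be cancelled")
    db[order_id]["status"] = "cancelled"
    return "cancelled"


TOOLS = {"cancel_order": cancel_order}


@dataclass
class Blueprint:
    instruction: str
    actions: list  # ground-truth (tool_name, kwargs) pairs
    outputs: list = field(default_factory=list)


def execute(actions: list, db: dict) -> list:
    """Run actions against db in place and return the tool outputs."""
    return [TOOLS[name](db, **kwargs) for name, kwargs in actions]


def validate(bp: Blueprint) -> Optional[str]:
    """Phase 1 checks: return an error message, or None if the blueprint passes."""
    # 1) Format check: known tool names, keyword arguments given as dicts.
    for name, kwargs in bp.actions:
        if name not in TOOLS or not isinstance(kwargs, dict):
            return f"format error in action {name!r}"
    # 2) Execution check on a scratch copy of the environment.
    try:
        results = execute(bp.actions, copy.deepcopy(INITIAL_DB))
    except (KeyError, TypeError, ValueError) as exc:
        return f"execution error: {exc}"
    # 3) Semantic check (simple stand-in for the LLM review committee).
    if results != bp.outputs:
        return f"outputs {bp.outputs} do not match execution results {results}"
    return None


def phase1(propose: Callable[[Optional[str]], Blueprint], max_rounds: int = 3) -> Optional[Blueprint]:
    """Propose, validate, and feed errors back to the proposer until a blueprint passes."""
    feedback = None
    for _ in range(max_rounds):
        bp = propose(feedback)
        feedback = validate(bp)
        if feedback is None:
            return bp
    return None


def phase2_accept(bp: Blueprint, agent_actions: list) -> bool:
    """Keep a simulated trajectory only if it reaches the ground-truth final state."""
    expected_db, actual_db = copy.deepcopy(INITIAL_DB), copy.deepcopy(INITIAL_DB)
    execute(bp.actions, expected_db)
    try:
        execute(agent_actions, actual_db)
    except (KeyError, TypeError, ValueError):
        return False
    return actual_db == expected_db


if __name__ == "__main__":
    def propose(feedback: Optional[str]) -> Blueprint:
        # Hypothetical generator: targets the delivered order first, then fixes it after feedback.
        order = "W2" if feedback is None else "W1"
        return Blueprint(f"Please cancel order {order}.", [("cancel_order", {"order_id": order})], ["cancelled"])

    bp = phase1(propose)
    print(bp.instruction)  # Please cancel order W1.
    print(phase2_accept(bp, [("cancel_order", {"order_id": "W1"})]))  # True
    print(phase2_accept(bp, []))  # False
```

Here the first proposal targets a delivered order and fails the execution check; the error message is passed back to `propose`, which corrects the task on the next round. In a real pipeline, `propose` would be an LLM prompted with that feedback, and Phase 2 would also involve an LLM-simulated user driving the conversation.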

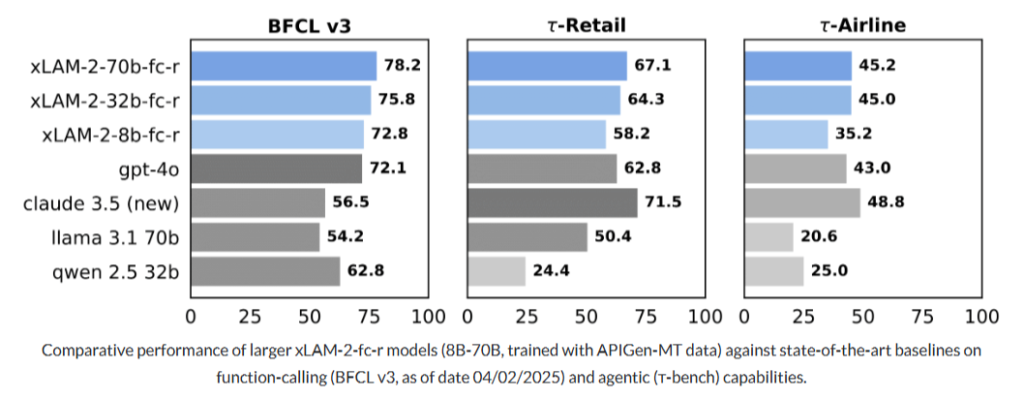

Models trained on APIGen-MT data, specifically the xLAM-2-fc-r models, demonstrate superior performance across two industry-standard evaluation benchmarks: τ-bench and BFCL v3. For example, on the BFCL v3 benchmark in the Retail domain, the xLAM-2-70b-fc-r model achieved a score of 78.2, surpassing Claude 3.5 (56.5) and GPT-4o (72.1). Similarly, in the Airline domain, it scored 67.1 compared to GPT-4o's 62.8. In more complex environments involving iterative interactions, the smaller xLAM-2-8b-fc-r model outperformed larger traditional models, illustrating the impact of higher-quality training data. These results suggest that detailed, verified training interactions, refined through feedback loops and task validation, can matter more than sheer model size. In addition, the models' consistency across multiple trials indicates improved robustness, a critical factor for deployment in enterprise environments.
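The robustness claim refers to consistency across repeated trials. τ-bench quantifies this with its pass^k metric: for each task run n times with c successes, it estimates the probability that k independent trials all succeed as C(c, k) / C(n, k), then averages over tasks. A minimal sketch follows; the success counts are made-up illustrative values, not results from the paper, and it is an assumption that this is the exact measure behind the article's consistency claim.

```python
from math import comb
from typing import List


def pass_hat_k(successes: List[int], n: int, k: int) -> float:
    """Average over tasks of C(c, k) / C(n, k): the chance that k sampled trials all succeed."""
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # math.comb(c, k) is 0 when k > c, so tasks with fewer than k successes contribute 0.
    return sum(comb(c, k) / comb(n, k) for c in successes) / len(successes)


if __name__ == "__main__":
    # Illustrative only: three tasks, each run n=4 times, with 4, 3, and 2 successes.
    counts = [4, 3, 2]
    for k in (1, 2, 4):
        print(k, round(pass_hat_k(counts, n=4, k=k), 4))
    # 1 0.75
    # 2 0.5556
    # 4 0.3333
```

With k = 1 this reduces to ordinary average accuracy; as k grows, the score drops for any model whose successes are inconsistent, which is why a flatter pass^k curve signals a more dependable agent.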

The APIGen-MT framework is impactful not only because of its performance but also because of its scalability and open-source contribution. By releasing both the synthetic datasets and the xLAM-2-fc-r models to the public, the researchers aim to democratize access to high-quality agent training data. This modular, verifiable, and interaction-grounded approach opens avenues for future advancements in AI agents. It enables researchers to extend the framework across different domains, functions, and tools, making it adaptable to specific industrial requirements without sacrificing dialogue realism or execution integrity.

Some Key Takeaways from the Research:

- APIGen-MT creates multi-turn interaction datasets through a two-phase process: task blueprint generation followed by simulated conversation.

- The system integrates validation via format checks, execution tests, and LLM review committees.

- Feedback loops let the pipeline revise failed task proposals, creating a self-correcting mechanism within the pipeline.

- Models trained with this data outperform GPT-4o and Claude 3.5 across τ-bench and BFCL v3 benchmarks.

- The xLAM-2-70b-fc-r scored 78.2 on Retail and 67.1 on Airline under BFCL v3, higher than all baselines.

- Smaller models like xLAM-2-8b-fc-r also beat larger alternatives in multi-turn interactions, indicating better parameter efficiency.

- The open-source release of both data and models ensures wider accessibility for research and industrial use.

- The framework enhances realism and technical reliability in agent training, setting a new standard for synthetic interaction data.

Check out the Paper and Model. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 85k+ ML SubReddit.

The post Salesforce AI Released APIGen-MT and xLAM-2-fc-r Model Series: Advancing Multi-Turn Agent Training with Verified Data Pipelines and Scalable LLM Architectures appeared first on MarkTechPost.


[Register Now] miniCON Virtual Conference on OPEN SOURCE AI: FREE REGISTRATION + Certificate of Attendance + 3 Hour Short Event (April 12, 9 am- 12 pm PST) + Hands on Workshop [Sponsored]