Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Although an individual LLM can be highly capable, it might not optimally address a wide range of use cases or meet diverse performance requirements. The multi-LLM approach enables organizations to effectively choose the right model for each task, adapt to different domains, and optimize for specific cost, latency, or quality needs. This strategy results in more robust, versatile, and efficient applications that better serve diverse user needs and business objectives.

Deploying a multi-LLM application comes with the challenge of routing each user prompt to an appropriate LLM for the intended task. The routing logic must accurately interpret and map the prompt into one of the pre-defined tasks, and then direct it to the assigned LLM for that task. In this post, we provide an overview of common multi-LLM applications. We then explore strategies for implementing effective multi-LLM routing in these applications, discussing the key factors that influence the selection and implementation of such strategies. Finally, we provide sample implementations that you can use as a starting point for your own multi-LLM routing deployments.

Overview of common multi-LLM applications

The following are some of the common scenarios where you might choose to use a multi-LLM approach in your applications:

- Multiple task types – Many use cases need to handle different task types within the same application. For example, a marketing content creation application might need to perform task types such as text generation, text summarization, sentiment analysis, and information extraction as part of producing high-quality, personalized content. Each distinct task type will likely require a separate LLM, which might also be fine-tuned with custom data.

- Multiple task complexity levels – Some applications are designed to handle a single task type, such as text summarization or question answering. However, they must be able to respond to user queries with varying levels of complexity within the same task type. For example, consider a text summarization AI assistant intended for academic research and literature review. Some user queries might be relatively straightforward, simply asking the application to summarize the core ideas and conclusions from a short article. Such queries could be effectively handled by a simple, lower-cost model. In contrast, more complex questions might require the application to summarize a lengthy dissertation by performing deeper analysis, comparison, and evaluation of the research results. These types of queries would be better addressed by more advanced models with greater reasoning capabilities.

- Multiple task domains – Certain applications need to serve users across multiple domains of expertise. An example is a virtual assistant for enterprise business operations. Such a virtual assistant should support users across various business functions, such as finance, legal, human resources, and operations. To handle this breadth of expertise, the virtual assistant needs to use different LLMs that have been fine-tuned on datasets specific to each respective domain.

- Software-as-a-service (SaaS) applications with tenant tiering – SaaS applications are often architected to provide different pricing and experiences to a spectrum of customer profiles, referred to as tiers. Through the use of different LLMs tailored to each tier, SaaS applications can offer capabilities that align with the varying needs and budgets of their diverse customer base. For instance, consider an AI-driven legal document analysis system designed for businesses of varying sizes, offering two primary subscription tiers: Basic and Pro. The Basic tier would use a smaller, more lightweight LLM well-suited for straightforward tasks, such as performing simple document searches or generating summaries of uncomplicated legal documents. The Pro tier, however, would require a highly customized LLM that has been trained on specific data and terminology, enabling it to assist with intricate tasks like drafting complex legal documents.

Multi-LLM routing strategies

In this section, we explore two main approaches to routing requests to different LLMs: static routing and dynamic routing.

Static routing

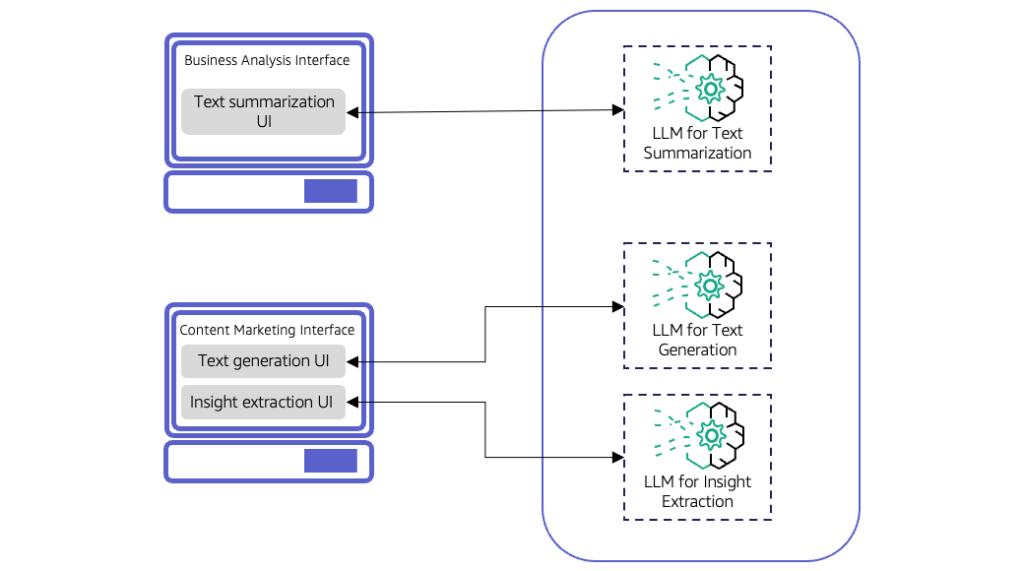

One effective strategy for directing user prompts to appropriate LLMs is to implement distinct UI components within the same interface or separate interfaces tailored to specific tasks. For example, an AI-powered productivity tool for an ecommerce company might feature dedicated interfaces for different roles, such as content marketers and business analysts. The content marketing interface incorporates two main UI components: a text generation module for creating social media posts, emails, and blogs, and an insight extraction module that identifies the most relevant keywords and phrases from customer reviews to improve content strategy. Meanwhile, the business analysis interface would focus on text summarization for analyzing various business documents. This is illustrated in the following figure.

This approach works well for applications where the user experience supports having a distinct UI component for each task. It also allows for a flexible and modular design, where new LLMs can be quickly plugged into or swapped out from a UI component without disrupting the overall system. However, the static nature of this approach implies that the application might not be easily adaptable to evolving user requirements. Adding a new task would necessitate the development of a new UI component in addition to the selection and integration of a new model.
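A minimal sketch of static routing follows. The UI component names and model identifiers are hypothetical placeholders, not taken from any real deployment. The point is that the UI component that received the prompt fully determines the model, so no prompt classification is needed.

```python
# Static routing: each UI component is bound to exactly one model.
# Component names and model identifiers are hypothetical placeholders.
STATIC_ROUTES = {
    "text_generation": "model-for-text-generation",
    "insight_extraction": "model-for-insight-extraction",
    "text_summarization": "model-for-summarization",
}


def resolve_model(ui_component: str) -> str:
    """Return the model bound to a UI component, failing loudly if none is configured."""
    try:
        return STATIC_ROUTES[ui_component]
    except KeyError:
        raise ValueError(f"No model configured for UI component: {ui_component!r}") from None


print(resolve_model("text_summarization"))
```

Swapping a model means changing one entry in the table, which reflects the modularity described above. Supporting a new task still requires a new UI component in addition to a new entry.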

Dynamic routing

In some use cases, such as virtual assistants and multi-purpose chatbots, user prompts usually enter the application through a single UI component. For instance, consider a customer service AI assistant that handles three types of tasks: technical support, billing support, and pre-sale support. Each of these tasks requires its own custom LLM to provide appropriate responses. In this scenario, you need to implement a dynamic routing layer that intercepts each incoming request and directs it to the downstream LLM that is best suited to handle the intended task within that prompt. This is illustrated in the following figure.

In this section, we discuss common approaches for implementing this dynamic routing layer: LLM-assisted routing, semantic routing, and a hybrid approach.

LLM-assisted routing

This approach employs a classifier LLM at the application’s entry point to make routing decisions. The LLM’s ability to comprehend complex patterns and contextual subtleties makes this approach well-suited for applications requiring fine-grained classifications across task types, complexity levels, or domains. However, this method presents trade-offs. Although it offers sophisticated routing capabilities, it introduces additional costs and latency. Furthermore, maintaining the classifier LLM’s relevance as the application evolves can be demanding. Careful model selection, fine-tuning, configuration, and testing might be necessary to balance the impact of latency and cost with the desired classification accuracy.
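The classification step might look like the following sketch. It assumes a hypothetical `call_llm` callable that sends a prompt to whichever classifier model you choose and returns its text output. The prompt wording and the fallback behavior are illustrative choices, not a prescribed design.

```python
from typing import Callable, Sequence


def build_classifier_prompt(question: str, labels: Sequence[str]) -> str:
    """Ask the classifier LLM to answer with exactly one category name."""
    options = ", ".join(labels)
    return (
        f"Classify the user question into one of these categories: {options}.\n"
        "Respond with the category name only.\n\n"
        f"Question: {question}\nCategory:"
    )


def classify(
    question: str,
    labels: Sequence[str],
    call_llm: Callable[[str], str],  # hypothetical wrapper around your classifier model
    default: str,
) -> str:
    """Map the raw classifier output to a known label, or fall back to a default."""
    raw = call_llm(build_classifier_prompt(question, labels))
    answer = raw.strip().strip(".").lower()
    for label in labels:
        if label.lower() == answer:
            return label
    return default  # unparseable or unexpected output


# Stubbed classifier for demonstration only; a real one would invoke an LLM.
print(classify("Who built the pyramids?", ["history", "math"], lambda p: " History.\n", "math"))
```

Validating the model's output against the known label set keeps an unexpected completion from selecting a model that doesn't exist.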

Semantic routing

This approach uses semantic search as an alternative to using a classifier LLM for prompt classification and routing in multi-LLM systems. Semantic search uses embeddings to represent prompts as numerical vectors. The system then makes routing decisions by measuring the similarity between the user’s prompt embedding and the embeddings for a set of reference prompts, each representing a different task category. The user prompt is then routed to the LLM associated with the task category of the reference prompt that has the closest match.

Although semantic search doesn’t provide explicit classifications like a classifier LLM, it succeeds at identifying broad similarities and can effectively handle variations in a prompt’s wording. This makes it particularly well-suited for applications where routing can be based on coarse-grained classification of prompts, such as task domain classification. It also excels in scenarios with a large number of task categories or when new domains are frequently introduced, because it can quickly accommodate updates by simply adding new prompts to the reference prompt set.

Semantic routing offers several advantages, such as efficiency gained through fast similarity search in vector databases, and scalability to accommodate a large number of task categories and downstream LLMs. However, it also presents some trade-offs. Having adequate coverage for all possible task categories in your reference prompt set is crucial for accurate routing. Additionally, the increased system complexity due to the additional components, such as the vector database and embedding LLM, might impact overall performance and maintainability. Careful design and ongoing maintenance are necessary to address these challenges and fully realize the benefits of the semantic routing approach.
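The nearest-reference-prompt idea can be sketched in a few lines. To stay self-contained, this example substitutes a toy bag-of-words vector for a real embedding model and a linear scan for a vector database. Both are stand-ins, and the reference prompts are invented for illustration.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().replace("?", " ").replace(".", " ").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Illustrative reference prompts, each labeled with a task category.
REFERENCE_PROMPTS = [
    ("Who was the first emperor of Rome?", "history"),
    ("What caused the French Revolution?", "history"),
    ("Solve the equation 3x + 4 = 10.", "math"),
    ("What is the derivative of x squared?", "math"),
]
REFERENCE_INDEX = [(toy_embed(prompt), category) for prompt, category in REFERENCE_PROMPTS]


def route(prompt: str) -> str:
    """Return the category of the reference prompt most similar to the user prompt."""
    query = toy_embed(prompt)
    _, category = max(
        ((cosine(query, emb), cat) for emb, cat in REFERENCE_INDEX),
        key=lambda pair: pair[0],
    )
    return category


print(route("What caused the fall of Rome?"))
print(route("Solve the equation 2x + 6 = 0"))
```

Adding a new category only requires appending labeled prompts to the reference set, which is why this approach accommodates new domains quickly.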

Hybrid approach

In certain scenarios, a hybrid approach combining both techniques might also prove highly effective. For instance, in applications with a large number of task categories or domains, you can use semantic search for initial broad categorization or domain matching, followed by classifier LLMs for more fine-grained classification within those broad categories. This initial filtering allows you to use a simpler, more focused classifier LLM for the final routing decision.

Consider, for instance, a customer service AI assistant handling a diverse range of inquiries. In this context, semantic routing could initially route the user’s prompt to the appropriate department—be it billing, technical support, or sales. After the broad category is established, a dedicated classifier LLM for that specific department takes over. This specialized LLM, which can be trained on nuanced distinctions within its domain, can then determine crucial factors such as task complexity or urgency. Based on this fine-grained analysis, the prompt is then routed to the most appropriate LLM or, when necessary, escalated to a human agent.

This hybrid approach combines the scalability and flexibility of semantic search with the precision and context-awareness of classifier LLMs. The result is a robust, efficient, and highly accurate routing mechanism capable of adapting to the complexities and diverse needs of modern multi-LLM applications.
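The two-stage flow can be sketched as follows. The coarse router and the per-department classifiers are passed in as callables, so either stage can be backed by semantic search or an LLM. The department names, complexity labels, and model identifiers are hypothetical.

```python
from typing import Callable, Dict, Tuple

HUMAN_AGENT = "human-agent"

# (department, complexity) -> downstream model; all names are hypothetical.
ROUTES: Dict[Tuple[str, str], str] = {
    ("billing", "simple"): "small-billing-model",
    ("billing", "complex"): "large-billing-model",
    ("technical_support", "simple"): "small-support-model",
    ("technical_support", "complex"): "large-support-model",
}


def hybrid_route(
    prompt: str,
    coarse_router: Callable[[str], str],                # stage 1, e.g. semantic routing
    fine_classifiers: Dict[str, Callable[[str], str]],  # stage 2, one classifier per department
) -> str:
    """Pick a department first, then let that department's classifier refine the choice."""
    department = coarse_router(prompt)
    classifier = fine_classifiers.get(department)
    if classifier is None:
        return HUMAN_AGENT  # no specialist classifier for this department
    complexity = classifier(prompt)
    # Any combination without a configured model is escalated to a human agent.
    return ROUTES.get((department, complexity), HUMAN_AGENT)


# Keyword-based stubs for demonstration only.
coarse = lambda p: "billing" if "invoice" in p.lower() else "technical_support"
fine = {
    "billing": lambda p: "complex" if "dispute" in p.lower() else "simple",
    "technical_support": lambda p: "urgent",
}
print(hybrid_route("I want to dispute an invoice", coarse, fine))
print(hybrid_route("My device is on fire", coarse, fine))
```

Because the first stage narrows the candidates, each second-stage classifier only needs to distinguish a handful of labels within its own department.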

Implementation of dynamic routing

In this section, we explore different approaches to implementing dynamic routing on AWS, covering both built-in routing features and custom solutions that you can use as a starting point to build your own.

Intelligent prompt routing with Amazon Bedrock

Amazon Bedrock is a fully managed service that makes high-performing LLMs and other foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage infrastructure.

If you’re building applications with Amazon Bedrock LLMs and need a fully managed solution with straightforward routing capabilities, Amazon Bedrock Intelligent Prompt Routing offers an efficient way to implement dynamic routing. This feature of Amazon Bedrock provides a single serverless endpoint for efficiently routing requests between different LLMs within the same model family. It uses advanced prompt matching and model understanding techniques to predict the performance of each model for every request. Amazon Bedrock then dynamically routes each request to the model that it predicts is most likely to give the desired response at the lowest cost. Intelligent Prompt Routing can reduce costs by up to 30% without compromising on accuracy. As of this writing, Amazon Bedrock supports routing within Anthropic’s Claude and Meta’s Llama model families. For example, Amazon Bedrock can intelligently route requests between Anthropic’s Claude 3.5 Sonnet and Claude 3 Haiku depending on the complexity of the prompt, as illustrated in the following figure. Similarly, Amazon Bedrock can route requests between Meta’s Llama 3.1 70B and 8B.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application.

- Anthropic’s prompt router predicts the performance of each downstream LLM, selecting the model that it predicts will offer the best combination of response quality and cost.

- Amazon Bedrock routes the request to the selected LLM, and returns the response along with information about the model.

For detailed implementation guidelines and examples of Intelligent Prompt Routing on Amazon Bedrock, see Reduce costs and latency with Amazon Bedrock Intelligent Prompt Routing and prompt caching.

Custom prompt routing

If your LLMs are hosted outside Amazon Bedrock, such as on Amazon SageMaker or Amazon Elastic Kubernetes Service (Amazon EKS), or you require routing customization, you will need to develop a custom routing solution.

This section provides sample implementations for both LLM-assisted and semantic routing. We discuss the solution’s mechanics, key design decisions, and how to use it as a foundation for developing your own custom routing solutions. For detailed deployment instructions for each routing solution, refer to the GitHub repo. The provided code in this repo is meant to be used in a development environment. Before migrating any of the provided solutions to production, we recommend following the AWS Well-Architected Framework.

LLM-assisted routing

In this solution, we demonstrate an educational tutor assistant that helps students in two domains: history and math. To implement the routing layer, the application uses the Amazon Titan Text G1 – Express model on Amazon Bedrock to classify each question by topic as either history or math. History questions are routed to a more cost-effective and faster LLM such as Anthropic’s Claude 3 Haiku on Amazon Bedrock. Math questions are handled by a more powerful LLM, such as Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock, which is better suited for complex problem-solving, in-depth explanations, and multi-step reasoning. If the classifier LLM is unsure whether a question belongs to the history or math category, it defaults to classifying it as math.

The architecture of this system is illustrated in the following figure. The use of Amazon Titan and Anthropic models on Amazon Bedrock in this demonstration is optional. You can substitute them with other models deployed on or outside of Amazon Bedrock.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application, which forwards the query to Amazon API Gateway.

- When API Gateway receives the request, it triggers an AWS Lambda function.

- The Lambda function sends the question to the classifier LLM to determine whether it is a history or math question.

- Based on the classifier LLM’s decision, the Lambda function routes the question to the appropriate downstream LLM, which will generate an answer and return it to the user.
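The workflow above can be sketched as a Lambda handler. This is not the code from the GitHub repo. It assumes an API Gateway proxy-style event whose JSON body carries a hypothetical `question` field, and it takes the classifier and the downstream LLM calls as injected callables so the routing logic can run without AWS access.

```python
import json
import time
from typing import Callable, Dict


def make_handler(
    classify: Callable[[str], str],
    answerers: Dict[str, Callable[[str], str]],
    default_category: str,
):
    """Build a Lambda-style handler that classifies a question and routes it."""

    def handler(event, context):
        # "question" is an assumed field name in the request body.
        question = json.loads(event["body"])["question"]

        start = time.perf_counter()
        category = classify(question)
        if category not in answerers:
            category = default_category  # e.g. default to math when unsure
        classified = time.perf_counter()

        answer = answerers[category](question)
        done = time.perf_counter()

        return {
            "statusCode": 200,
            "body": json.dumps({
                "category": category,
                "answer": answer,
                "classification_seconds": round(classified - start, 3),
                "answer_seconds": round(done - classified, 3),
            }),
        }

    return handler


# Stubs for demonstration only; real callables would invoke the classifier and answerer LLMs.
handler = make_handler(
    classify=lambda q: "math" if any(ch.isdigit() for ch in q) else "history",
    answerers={"history": lambda q: "history answer", "math": lambda q: "math answer"},
    default_category="math",
)
event = {"body": json.dumps({"question": "What year did World War II end?"})}
print(handler(event, None)["body"])
```

Reporting classification time and answer time separately makes the routing overhead visible for each request.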

Follow the deployment steps in the GitHub repo to create the necessary infrastructure for LLM-assisted routing and run tests to generate responses. The following output shows the response to the question “What year did World War II end?”

The question was correctly classified as a history question, with the classification process taking approximately 0.53 seconds. The question was then routed to and answered by Anthropic’s Claude 3 Haiku, which took around 0.25 seconds. In total, it took about 0.78 seconds to receive the response.

Next, we will ask a math question. The following output shows the response to the question “Solve the quadratic equation: 2x^2 – 5x + 3 = 0.”

The question was correctly classified as a math question, with the classification process taking approximately 0.59 seconds. The question was then correctly routed to and answered by Anthropic’s Claude 3.5 Sonnet, which took around 2.3 seconds. In total, it took about 2.9 seconds to receive the response.

Semantic routing

In this solution, we focus on the same educational tutor assistant use case as in LLM-assisted routing. To implement the routing layer, you first need to create a set of reference prompts that represents the full spectrum of history and math topics you intend to cover. This reference set serves as the foundation for the semantic matching process, enabling the application to correctly categorize incoming queries. As an illustrative example, we’ve provided a sample reference set with five questions for each of the history and math topics. In a real-world implementation, you would likely need a much larger and more diverse set of reference questions to have robust routing performance.

You can use the Amazon Titan Text Embeddings V2 model on Amazon Bedrock to convert the questions in the reference set into embeddings. You can find the code for this conversion in the GitHub repo. These embeddings are then saved as a reference index inside an in-memory FAISS vector store, which is deployed as a Lambda layer.

The architecture of this system is illustrated in the following figure. The use of Amazon Titan and Anthropic models on Amazon Bedrock in this demonstration is optional. You can substitute them with other models deployed on or outside of Amazon Bedrock.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application, which forwards the query to API Gateway.

- When API Gateway receives the request, it triggers a Lambda function.

- The Lambda function sends the question to the Amazon Titan Text Embeddings V2 model to convert it to an embedding. It then performs a similarity search on the FAISS index to find the closest matching question in the reference index, and returns the corresponding category label.

- Based on the retrieved category, the Lambda function routes the question to the appropriate downstream LLM, which will generate an answer and return it to the user.

Follow the deployment steps in the GitHub repo to create the necessary infrastructure for semantic routing and run tests to generate responses. The following output shows the response to the question “What year did World War II end?”

The question was correctly classified as a history question and the classification took about 0.1 seconds. The question was then routed and answered by Anthropic’s Claude 3 Haiku, which took about 0.25 seconds, resulting in a total of about 0.36 seconds to get the response back.

Next, we ask a math question. The following output shows the response to the question “Solve the quadratic equation: 2x^2 – 5x + 3 = 0.”

The question was correctly classified as a math question and the classification took about 0.1 seconds. Moreover, the question was correctly routed to and answered by Anthropic’s Claude 3.5 Sonnet, which took about 2.7 seconds, resulting in a total of about 2.8 seconds to get the response back.

Additional considerations for custom prompt routing

The provided solutions use example LLMs for classification in LLM-assisted routing and for text embedding in semantic routing. However, you will likely need to evaluate multiple LLMs to select the one that is best suited for your specific use case. Using these LLMs incurs additional cost and latency. Therefore, it’s critical that the benefits of dynamically routing queries to the appropriate LLM justify the overhead introduced by the custom prompt routing system.

For some use cases, especially those that require specialized domain knowledge, consider fine-tuning the classifier LLM in LLM-assisted routing and the embedding LLM in semantic routing with your own proprietary data. This can increase the quality and accuracy of the classification, leading to better routing decisions.

Additionally, the semantic routing solution used FAISS as an in-memory vector database for similarity search. However, you might need to evaluate alternative vector databases on AWS that better fit your use case in terms of scale, latency, and cost requirements. It will also be important to continuously gather prompts from your users and iterate on the reference prompt set. This will help make sure that it reflects the actual types of questions your users are asking, thereby increasing the accuracy of your similarity search classification over time.

Clean up

To avoid incurring additional costs, clean up the resources you created for LLM-assisted routing and semantic routing by running the following command for each of the respective created stacks:

Cost analysis for custom prompt routing

This section analyzes the implementation cost and potential savings for the two custom prompt routing solutions, using an exemplary traffic scenario for our educational tutor assistant application.

Our calculations are based on the following assumptions:

- The application is deployed in the US East (N. Virginia) AWS Region and receives 50,000 history questions and 50,000 math questions per day.

- For LLM-assisted routing, the classifier LLM processes 150 input tokens and generates 1 output token per question.

- For semantic routing, the embedding LLM processes 150 input tokens per question.

- The answerer LLM processes 150 input tokens and generates 300 output tokens per question.

- Amazon Titan Text G1 – Express model performs question classification in LLM-assisted routing at $0.0002 per 1,000 input tokens, with negligible output costs (1 token per question).

- Amazon Titan Text Embeddings V2 model generates question embedding in semantic routing at $0.00002 per 1,000 input tokens.

- Anthropic’s Claude 3 Haiku handles history questions at $0.00025 per 1,000 input tokens and $0.00125 per 1,000 output tokens.

- Anthropic’s Claude 3.5 Sonnet answers math questions at $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens.

- The Lambda runtime is 3 seconds per math question and 1 second per history question.

- Lambda uses 1024 MB of memory and 512 MB of ephemeral storage, with API Gateway configured as a REST API.

The following table summarizes the cost of answer generation by LLM for both routing strategies.

| Question Type | Total Input Tokens/Month | Total Output Tokens/Month | Answer Generation Cost/Month |
| --- | --- | --- | --- |
| History | 225,000,000 | 450,000,000 | $618.75 |
| Math | 225,000,000 | 450,000,000 | $7,425 |

The following table summarizes the cost of dynamic routing implementation for both routing strategies.

| Routing Strategy | Question Type | Total Input Tokens/Month | Classifier or Embedding LLM Cost/Month | Lambda + API Gateway Cost/Month |
| --- | --- | --- | --- | --- |
| LLM-Assisted Routing | History + Math | 450,000,000 | $90 (classifier LLM) | $98.9 |
| Semantic Routing | History + Math | 450,000,000 | $9 (embedding LLM) | $98.9 |

The first table shows that using Anthropic’s Claude 3 Haiku for history questions costs $618.75 per month, whereas using Anthropic’s Claude 3.5 Sonnet for math questions costs $7,425 per month. This demonstrates that routing questions to the appropriate LLM can achieve significant cost savings compared to using the more expensive model for all of the questions. The second table shows that these savings come with an implementation cost of $188.9/month for LLM-assisted routing and $107.9/month for semantic routing, which are relatively small compared to the potential savings in answer generation costs.
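The arithmetic behind these figures can be reproduced with a short script. It uses the token counts and per-token prices from the assumptions list, takes the $98.9 Lambda and API Gateway cost as given, and assumes a 30-day month, which is inferred from the table totals rather than stated. The comparison against an all-Sonnet baseline is an illustration of the savings argument.

```python
# Monthly cost sketch for the tutor-assistant scenario (USD).
DAYS_PER_MONTH = 30          # assumption inferred from the table totals
QUESTIONS_PER_DAY = 50_000   # per question type (history and math each)
INPUT_TOKENS = 150
OUTPUT_TOKENS = 300

# (input price, output price) per 1,000 tokens, from the assumptions list.
PRICE_PER_1K = {
    "claude-3-haiku": (0.00025, 0.00125),
    "claude-3.5-sonnet": (0.003, 0.015),
}
CLASSIFIER_PRICE_PER_1K_INPUT = 0.0002
EMBEDDING_PRICE_PER_1K_INPUT = 0.00002
LAMBDA_API_GATEWAY_COST = 98.9  # taken as given from the table


def answer_cost(model: str, questions_per_day: int) -> float:
    """Monthly answer-generation cost for one model."""
    questions = questions_per_day * DAYS_PER_MONTH
    input_price, output_price = PRICE_PER_1K[model]
    per_question = (INPUT_TOKENS * input_price + OUTPUT_TOKENS * output_price) / 1000
    return questions * per_question


def routing_cost(price_per_1k_input: float) -> float:
    """Monthly routing overhead: classifier or embedding tokens plus Lambda and API Gateway."""
    questions = 2 * QUESTIONS_PER_DAY * DAYS_PER_MONTH
    return questions * INPUT_TOKENS * price_per_1k_input / 1000 + LAMBDA_API_GATEWAY_COST


history_cost = answer_cost("claude-3-haiku", QUESTIONS_PER_DAY)
math_cost = answer_cost("claude-3.5-sonnet", QUESTIONS_PER_DAY)
all_sonnet_cost = answer_cost("claude-3.5-sonnet", 2 * QUESTIONS_PER_DAY)
gross_savings = all_sonnet_cost - (history_cost + math_cost)

print(f"History (Haiku): ${history_cost:,.2f}  Math (Sonnet): ${math_cost:,.2f}")
for name, price in [
    ("LLM-assisted", CLASSIFIER_PRICE_PER_1K_INPUT),
    ("Semantic", EMBEDDING_PRICE_PER_1K_INPUT),
]:
    overhead = routing_cost(price)
    print(f"{name} routing: overhead ${overhead:,.2f}, net savings ${gross_savings - overhead:,.2f}")
```

Under these assumptions, sending every question to Claude 3.5 Sonnet would cost $14,850 per month, so routing history questions to Claude 3 Haiku saves $6,806.25 per month before the routing overhead is subtracted.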

Selecting the right dynamic routing implementation

The decision on which dynamic routing implementation is best suited for your use case largely depends on three key factors: model hosting requirements, cost and operational overhead, and desired level of control over routing logic. The following table outlines these dimensions for Amazon Bedrock Intelligent Prompt Routing and custom prompt routing.

| Design Criteria | Amazon Bedrock Intelligent Prompt Routing | Custom Prompt Routing |
| --- | --- | --- |
| Model Hosting | Limited to Amazon Bedrock hosted models within the same model family | Flexible: can work with models hosted outside of Amazon Bedrock |
| Operational Management | Fully managed service with built-in optimization | Requires custom implementation and optimization |
| Routing Logic Control | Limited customization, predefined optimization for cost and performance | Full control over routing logic and optimization criteria |

These approaches aren’t mutually exclusive. You can implement hybrid solutions, using Amazon Bedrock Intelligent Prompt Routing for certain workloads while maintaining custom prompt routing for others with LLMs hosted outside Amazon Bedrock or where more control on routing logic is needed.

Conclusion

This post explored multi-LLM strategies in modern AI applications, demonstrating how using multiple LLMs can enhance organizational capabilities across diverse tasks and domains. We examined two primary routing strategies: static routing using dedicated interfaces, and dynamic routing using prompt classification at the application’s point of entry.

For dynamic routing, we covered two custom prompt routing strategies, LLM-assisted and semantic routing, and discussed example implementations for each. These techniques enable customized routing logic for LLMs, regardless of their hosting platform. We also discussed Amazon Bedrock Intelligent Prompt Routing as an alternative implementation for dynamic routing, which optimizes response quality and cost by routing prompts across different LLMs within Amazon Bedrock.

Although these dynamic routing approaches offer powerful capabilities, they require careful consideration of engineering trade-offs, including latency, cost optimization, and system maintenance complexity. By understanding these trade-offs, along with implementation best practices like model evaluation, cost analysis, and domain fine-tuning, you can architect a multi-LLM routing solution optimized for your application’s needs.

About the Authors

Nima Seifi is a Senior Solutions Architect at AWS, based in Southern California, where he specializes in SaaS and GenAIOps. He serves as a technical advisor to startups building on AWS. Prior to AWS, he worked as a DevOps architect in the ecommerce industry for over 5 years, following a decade of R&D work in mobile internet technologies. Nima has authored over 20 technical publications and holds 7 US patents. Outside of work, he enjoys reading, watching documentaries, and taking beach walks.

Manish Chugh is a Principal Solutions Architect at AWS based in San Francisco, CA. He specializes in machine learning and is a generative AI lead for NAMER startups team. His role involves helping AWS customers build scalable, secure, and cost-effective machine learning and generative AI workloads on AWS. He regularly presents at AWS conferences and partner events. Outside of work, he enjoys hiking on East SF Bay trails, road biking, and watching (and playing) cricket.
